Reshaping

```python
import numpy as np
import pandas as pd
```

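I. Long tables to wide tables

Long table: if a table stores gender as the values of a single column, it is a long table with respect to gender.

Wide table: if gender appears as the column names, and those columns hold the values of some other related feature, the table is a wide table with respect to gender.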

```python
pd.DataFrame({
    'Gender': ['F', 'F', 'M', 'M'],
    'Height': [163, 160, 175, 180]})
```

|   | Gender | Height |
|---|---|---|
| 0 | F | 163 |
| 1 | F | 160 |
| 2 | M | 175 |
| 3 | M | 180 |

```python
pd.DataFrame({
    'Height:F': [163, 160],
    'Height:M': [175, 180]})
```

|   | Height:F | Height:M |
|---|---|---|
| 0 | 163 | 175 |
| 1 | 160 | 180 |
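The two tables above hold the same heights in different layouts. The sketch below turns the long table into the wide one using pivot, which is introduced in the next subsection. It adds a hypothetical helper column `Idx` that numbers the rows within each gender via `groupby(...).cumcount()`, so that every (row, gender) pair is unique:

```python
import pandas as pd

# Long table: gender is stored as the values of one column
long_df = pd.DataFrame({'Gender': ['F', 'F', 'M', 'M'],
                        'Height': [163, 160, 175, 180]})

# Hypothetical helper column: position of each row within its gender (0, 1, ...)
long_df['Idx'] = long_df.groupby('Gender').cumcount()

# Wide table: one column per gender
wide_df = long_df.pivot(index='Idx', columns='Gender', values='Height')
wide_df.columns = ['Height:' + g for g in wide_df.columns]
wide_df = wide_df.rename_axis(None)  # drop the 'Idx' index name
print(wide_df)
```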

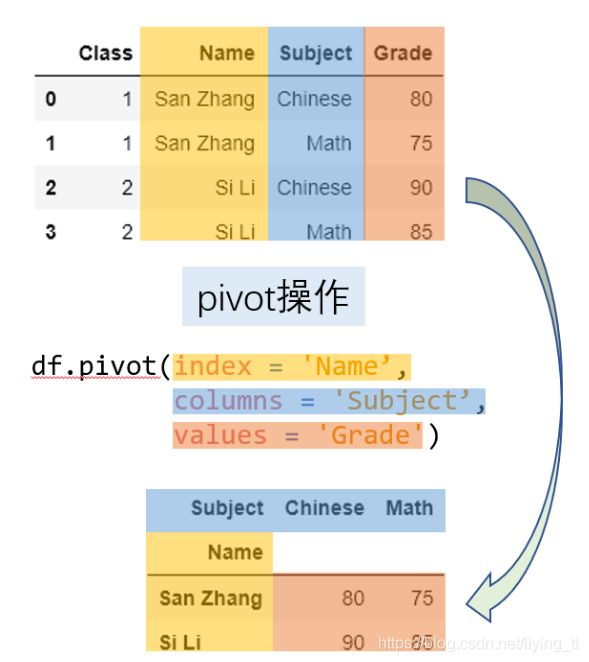

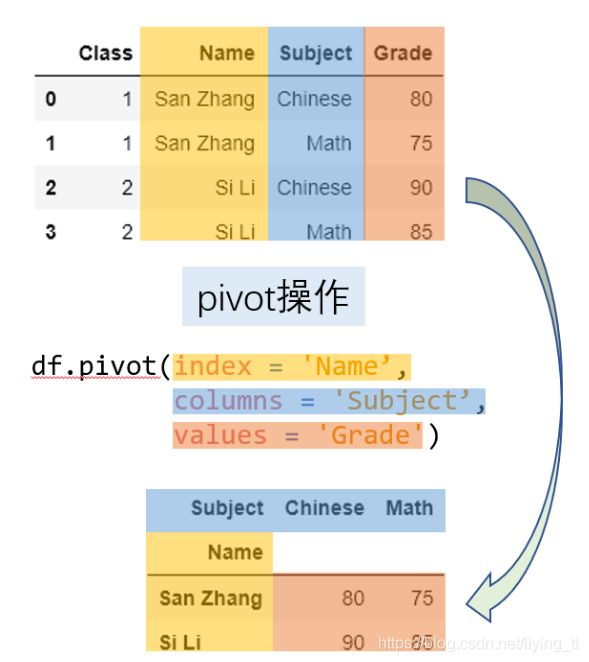

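1. pivot

1. pivot is the function that turns a long table into a wide table.

A basic long-to-wide reshape has three key elements:

(1) the row index of the result: index ← the unique values of the corresponding column

(2) the column to move into the column index: columns ← the unique values of the corresponding column

(3) the values that correspond to each combination of row and column index: values

Example: show the Chinese and Math scores as columns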

```python
df = pd.DataFrame({
    'Class': [1, 1, 2, 2, 2],
    'Name': ['San Zhang', 'San Zhang', 'Si Li', 'Si Li', 'Si Li'],
    'Subject': ['Chinese', 'Math', 'Chinese', 'Math', 'Science'],
    'Grade': [80, 75, 90, 85, 90]})
df
```

|   | Class | Name | Subject | Grade |
|---|---|---|---|---|
| 0 | 1 | San Zhang | Chinese | 80 |
| 1 | 1 | San Zhang | Math | 75 |
| 2 | 2 | Si Li | Chinese | 90 |
| 3 | 2 | Si Li | Math | 85 |
| 4 | 2 | Si Li | Science | 90 |

```python
df.pivot(index='Name', columns='Subject', values='Grade')
```

| Name \ Subject | Chinese | Math | Science |
|---|---|---|---|
| San Zhang | 80.0 | 75.0 | NaN |
| Si Li | 90.0 | 85.0 | 90.0 |
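Internally, pivot behaves like moving the index and columns columns into a MultiIndex and then unstacking the columns level (unstack is covered in Section III). The sketch below compares the two on the same data. The names `wide` and `manual` are only for illustration:

```python
import pandas as pd

df = pd.DataFrame({
    'Class': [1, 1, 2, 2, 2],
    'Name': ['San Zhang', 'San Zhang', 'Si Li', 'Si Li', 'Si Li'],
    'Subject': ['Chinese', 'Math', 'Chinese', 'Math', 'Science'],
    'Grade': [80, 75, 90, 85, 90]})

wide = df.pivot(index='Name', columns='Subject', values='Grade')

# The same reshape spelled out: (Name, Subject) becomes a MultiIndex,
# then the 'Subject' level is moved into the columns
manual = df.set_index(['Name', 'Subject'])['Grade'].unstack('Subject')

print(wide.equals(manual))
```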

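The pivot reshaping process:

Reshaping with pivot requires uniqueness. Each (row, column) position in the new table holds exactly one value, so in the original table every combination of the index and columns columns must occur only once.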


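For example, change San Zhang's Math entry (the second row of the original table) to Chinese, and pivot raises an error. The combination ("San Zhang", "Chinese") now appears twice in Name and Subject, so pivot cannot decide whether the reshaped cell should hold 80 or 75.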

```python
df.loc[1, 'Subject'] = 'Chinese'
try:
    df.pivot(index='Name', columns='Subject', values='Grade')
except Exception as e:
    Err_Msg = e
Err_Msg
```

```
ValueError('Index contains duplicate entries, cannot reshape')
```

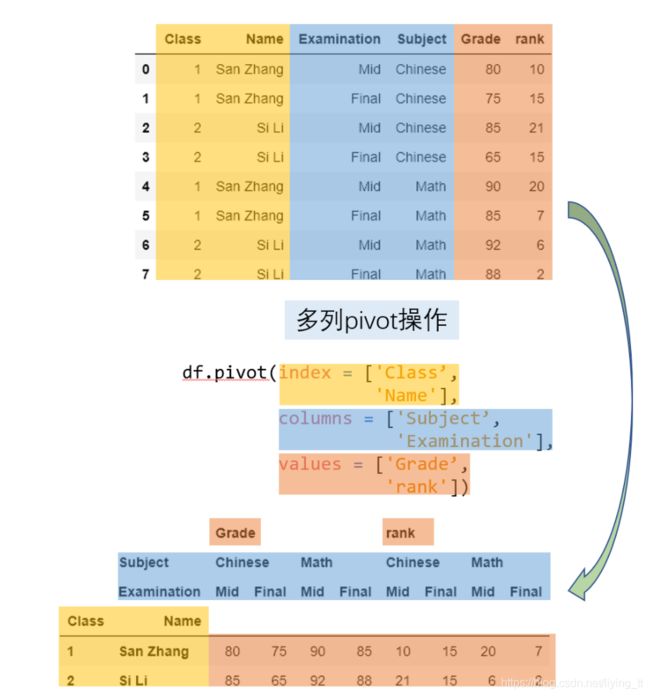

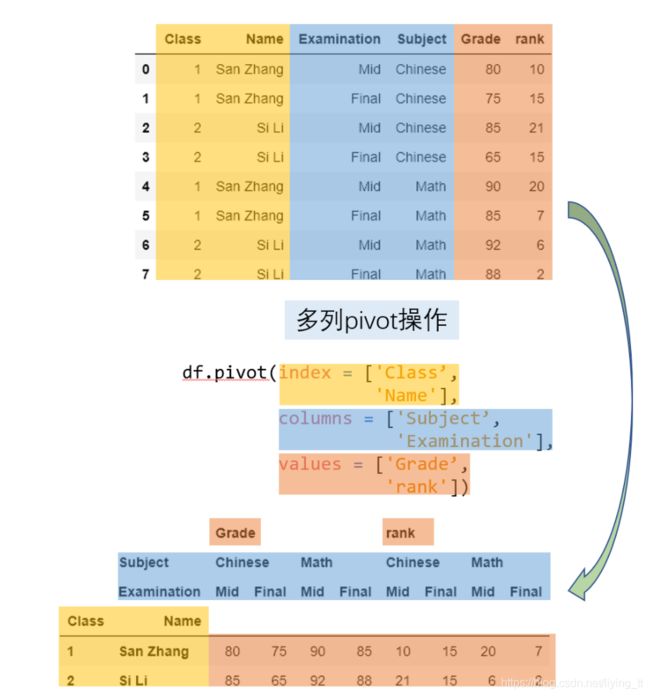
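You can check the uniqueness requirement yourself before calling pivot. The sketch below uses `DataFrame.duplicated` on the (index, columns) pair. The small frame is made up to mirror the error case above:

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['San Zhang', 'San Zhang', 'Si Li'],
    'Subject': ['Chinese', 'Chinese', 'Math'],  # ('San Zhang', 'Chinese') occurs twice
    'Grade': [80, 75, 85]})

# keep=False marks every row that belongs to a repeated (Name, Subject) pair
dup_mask = df.duplicated(subset=['Name', 'Subject'], keep=False)
print(df[dup_mask])

pivot_failed = False
try:
    df.pivot(index='Name', columns='Subject', values='Grade')
except ValueError as err:
    pivot_failed = True
    print('pivot failed:', err)
```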

2. All three pivot parameters (index, columns and values) can also be lists

```python
df = pd.DataFrame({
    'Class': [1, 1, 2, 2, 1, 1, 2, 2],
    'Name': ['San Zhang', 'San Zhang', 'Si Li', 'Si Li',
             'San Zhang', 'San Zhang', 'Si Li', 'Si Li'],
    'Examination': ['Mid', 'Final', 'Mid', 'Final',
                    'Mid', 'Final', 'Mid', 'Final'],
    'Subject': ['Chinese', 'Chinese', 'Chinese', 'Chinese',
                'Math', 'Math', 'Math', 'Math'],
    'Grade': [80, 75, 85, 65, 90, 85, 92, 88],
    'rank': [10, 15, 21, 15, 20, 7, 6, 21]})
df
```

|   | Class | Name | Examination | Subject | Grade | rank |
|---|---|---|---|---|---|---|
| 0 | 1 | San Zhang | Mid | Chinese | 80 | 10 |
| 1 | 1 | San Zhang | Final | Chinese | 75 | 15 |
| 2 | 2 | Si Li | Mid | Chinese | 85 | 21 |
| 3 | 2 | Si Li | Final | Chinese | 65 | 15 |
| 4 | 1 | San Zhang | Mid | Math | 90 | 20 |
| 5 | 1 | San Zhang | Final | Math | 85 | 7 |
| 6 | 2 | Si Li | Mid | Math | 92 | 6 |
| 7 | 2 | Si Li | Final | Math | 88 | 21 |

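Example: move the four categories formed by combining exam type and subject (Mid Chinese, Final Chinese, Mid Math, Final Math) into the column index, and report both the grade and the rank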

```python
pivot_multi = df.pivot(index=['Class', 'Name'],
                       columns=['Subject', 'Examination'],
                       values=['Grade', 'rank'])
pivot_multi
```

| Class | Name | (Grade, Chinese, Mid) | (Grade, Chinese, Final) | (Grade, Math, Mid) | (Grade, Math, Final) | (rank, Chinese, Mid) | (rank, Chinese, Final) | (rank, Math, Mid) | (rank, Math, Final) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | San Zhang | 80 | 75 | 90 | 85 | 10 | 15 | 20 | 7 |
| 2 | Si Li | 85 | 65 | 92 | 88 | 21 | 15 | 6 | 21 |

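Because of the uniqueness rule, the row index of the new table is what you get by applying drop_duplicates to the index columns. The number of columns equals the number of elements in values times the number of unique combinations of the columns columns (found the same way as for index).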
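The size rule above can be checked directly. The sketch below rebuilds the exam table from this subsection. It then compares `pivot_multi.shape` with the row count from `drop_duplicates` and with the column count (number of values × unique combinations of columns). It assumes pandas ≥ 1.1, where pivot accepts lists:

```python
import pandas as pd

df = pd.DataFrame({
    'Class': [1, 1, 2, 2, 1, 1, 2, 2],
    'Name': ['San Zhang', 'San Zhang', 'Si Li', 'Si Li',
             'San Zhang', 'San Zhang', 'Si Li', 'Si Li'],
    'Examination': ['Mid', 'Final', 'Mid', 'Final',
                    'Mid', 'Final', 'Mid', 'Final'],
    'Subject': ['Chinese', 'Chinese', 'Chinese', 'Chinese',
                'Math', 'Math', 'Math', 'Math'],
    'Grade': [80, 75, 85, 65, 90, 85, 92, 88],
    'rank': [10, 15, 21, 15, 20, 7, 6, 21]})

index_cols = ['Class', 'Name']
column_cols = ['Subject', 'Examination']
value_cols = ['Grade', 'rank']
pivot_multi = df.pivot(index=index_cols, columns=column_cols, values=value_cols)

n_rows = len(df[index_cols].drop_duplicates())                     # unique index combinations
n_cols = len(value_cols) * len(df[column_cols].drop_duplicates())  # values x unique column combinations
print(pivot_multi.shape, (n_rows, n_cols))
```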


2. pivot_table

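pivot relies on the uniqueness condition. When that condition is not met, the multiple values that share the same row/column combination must be aggregated into a single value, which is what pivot_table does.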

```python
df = pd.DataFrame({
    'Name': ['San Zhang', 'San Zhang', 'San Zhang', 'San Zhang',
             'Si Li', 'Si Li', 'Si Li', 'Si Li'],
    'Subject': ['Chinese', 'Chinese', 'Math', 'Math',
                'Chinese', 'Chinese', 'Math', 'Math'],
    'Grade': [80, 90, 100, 90, 70, 80, 985, 95]})
df
```

|   | Name | Subject | Grade |
|---|---|---|---|
| 0 | San Zhang | Chinese | 80 |
| 1 | San Zhang | Chinese | 90 |
| 2 | San Zhang | Math | 100 |
| 3 | San Zhang | Math | 90 |
| 4 | Si Li | Chinese | 70 |
| 5 | Si Li | Chinese | 80 |
| 6 | Si Li | Math | 985 |
| 7 | Si Li | Math | 95 |

(1) Parameters

aggfunc: the aggregation function to use

margins=True: adds marginal totals (row, column and overall summaries, labelled All)

```python
df.pivot_table(index='Name',
               columns='Subject',
               values='Grade',
               aggfunc=lambda x: x.mean())
```

| Name \ Subject | Chinese | Math |
|---|---|---|
| San Zhang | 85 | 95 |
| Si Li | 75 | 540 |
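aggfunc also accepts a list of functions. pandas then computes one block of columns per function, keyed by the function name. A small sketch on the same grade table:

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['San Zhang'] * 4 + ['Si Li'] * 4,
    'Subject': ['Chinese', 'Chinese', 'Math', 'Math'] * 2,
    'Grade': [80, 90, 100, 90, 70, 80, 985, 95]})

# Columns become (function name, Subject) pairs, one block per aggregation
res = df.pivot_table(index='Name', columns='Subject', values='Grade',
                     aggfunc=['mean', 'max'])
print(res)
```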

```python
df.pivot_table(index='Name',
               columns='Subject',
               values='Grade',
               aggfunc='mean',
               margins=True)
```

| Name \ Subject | Chinese | Math | All |
|---|---|---|---|
| San Zhang | 85 | 95.0 | 90.00 |
| Si Li | 75 | 540.0 | 307.50 |
| All | 80 | 317.5 | 198.75 |

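3. Try it yourself 1

In the margins example above, each row or column total equals the mean of the elements in that row or column of the new table, and the grand total equals the mean of its four elements. Does this relationship always hold? If not, give a counterexample.

Approach: the margins are computed by applying aggfunc to all the raw values that fall into that row, that column or the whole table, not to the cells of the new table. With the mean, and with every cell summarizing the same number of rows (two here), the two approaches coincide. That is why the example above satisfies the relationship. With a more complex function it breaks. The sum case is shown first, where the margins are simply sums of the cells. The second example uses aggfunc = mean + sum. There San Zhang's row total is 450 (mean 90 + sum 360), whereas the mean of his two cells, 255 and 285, is only 270. Likewise, the grand total of 1788.75 is not the mean of the four cells.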

```python
df.pivot_table(index='Name',
               columns='Subject',
               values='Grade',
               aggfunc='sum',
               margins=True)
```

| Name \ Subject | Chinese | Math | All |
|---|---|---|---|
| San Zhang | 170 | 190 | 360 |
| Si Li | 150 | 1080 | 1230 |
| All | 320 | 1270 | 1590 |

```python
df.pivot_table(index='Name',
               columns='Subject',
               values='Grade',
               aggfunc=lambda x: x.mean() + x.sum(),
               margins=True)
```

| Name \ Subject | Chinese | Math | All |
|---|---|---|---|
| San Zhang | 255 | 285.0 | 450.00 |
| Si Li | 225 | 1620.0 | 1537.50 |
| All | 400 | 1587.5 | 1788.75 |
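The same conclusion shows up with plain mean. The margins aggregate the raw rows, so they equal the average of the table cells only when every cell summarizes the same number of rows. The sketch below uses hypothetical data in which San Zhang has two Chinese grades but only one Math grade:

```python
import pandas as pd

# Hypothetical data: San Zhang has two Chinese grades but only one Math grade
df = pd.DataFrame({
    'Name': ['San Zhang', 'San Zhang', 'San Zhang', 'Si Li', 'Si Li'],
    'Subject': ['Chinese', 'Chinese', 'Math', 'Chinese', 'Math'],
    'Grade': [80, 90, 100, 70, 90]})

table = df.pivot_table(index='Name', columns='Subject', values='Grade',
                       aggfunc='mean', margins=True)

row_margin = table.loc['San Zhang', 'All']                          # mean of 80, 90, 100
mean_of_cells = table.loc['San Zhang', ['Chinese', 'Math']].mean()  # mean of 85 and 100
print(row_margin, mean_of_cells)  # 90.0 92.5
```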

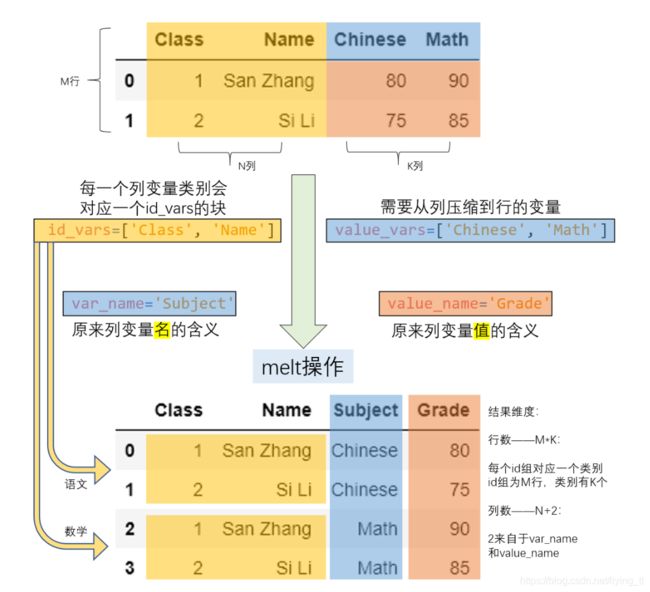

II. Wide tables to long tables

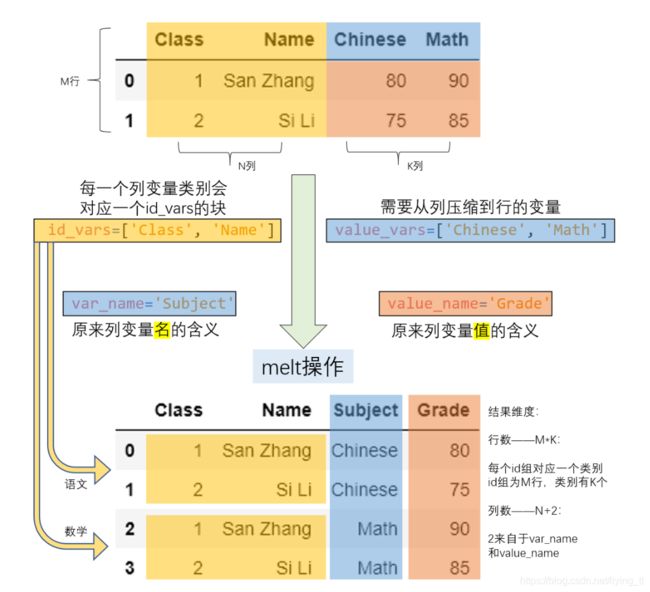

1. melt

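(1) Parameters:

id_vars: the columns that are not transformed (identifier columns; these are ordinary columns, not the index)

value_vars: the columns to be transformed

var_name: the name of the new column that holds the names of the transformed columns

value_name: the name of the new column that holds the values found under the transformed columns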

```python
df = pd.DataFrame({
    'Class': [1, 2],
    'Name': ['San Zhang', 'Si Li'],
    'Chinese': [80, 90],
    'Math': [80, 75]})
df
```

|   | Class | Name | Chinese | Math |
|---|---|---|---|---|
| 0 | 1 | San Zhang | 80 | 80 |
| 1 | 2 | Si Li | 90 | 75 |

```python
df_melted = df.melt(id_vars=['Class', 'Name'],
                    value_vars=['Chinese', 'Math'],
                    var_name='Subject',
                    value_name='Grade')
df_melted
```

|   | Class | Name | Subject | Grade |
|---|---|---|---|---|
| 0 | 1 | San Zhang | Chinese | 80 |
| 1 | 2 | Si Li | Chinese | 90 |
| 2 | 1 | San Zhang | Math | 80 |
| 3 | 2 | Si Li | Math | 75 |
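If value_vars is omitted, melt transforms every column that is not listed in id_vars. Here that gives the same result, as this quick check shows:

```python
import pandas as pd

df = pd.DataFrame({'Class': [1, 2], 'Name': ['San Zhang', 'Si Li'],
                   'Chinese': [80, 90], 'Math': [80, 75]})
df_melted = df.melt(id_vars=['Class', 'Name'], value_vars=['Chinese', 'Math'],
                    var_name='Subject', value_name='Grade')

# value_vars omitted: every column not in id_vars (Chinese, Math) is melted
df_melted_all = df.melt(id_vars=['Class', 'Name'],
                        var_name='Subject', value_name='Grade')
print(df_melted_all.equals(df_melted))  # True
```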

Example: use pivot to turn df_melted back into df

```python
df_unmeltes = df_melted.pivot(index=['Class', 'Name'],
                              columns='Subject',
                              values='Grade')
df_unmeltes
```

| Class | Name \ Subject | Chinese | Math |
|---|---|---|---|
| 1 | San Zhang | 80 | 80 |
| 2 | Si Li | 90 | 75 |

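```python
# Move Class/Name back into columns and clear the 'Subject' columns-axis name
df_unmeltes = df_unmeltes.reset_index().rename_axis(columns={'Subject': ''})
df_unmeltes
```

|   | Class | Name | Chinese | Math |
|---|---|---|---|---|
| 0 | 1 | San Zhang | 80 | 80 |
| 1 | 2 | Si Li | 90 | 75 |

```python
df_unmeltes.equals(df)
```

```
True
```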

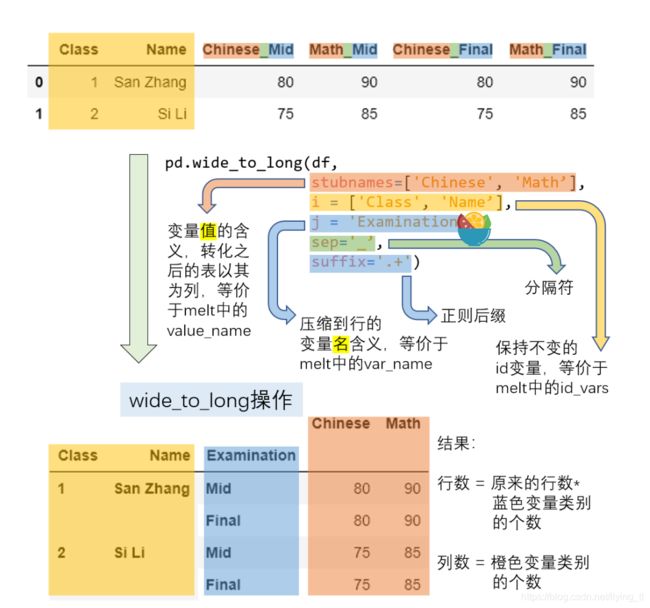

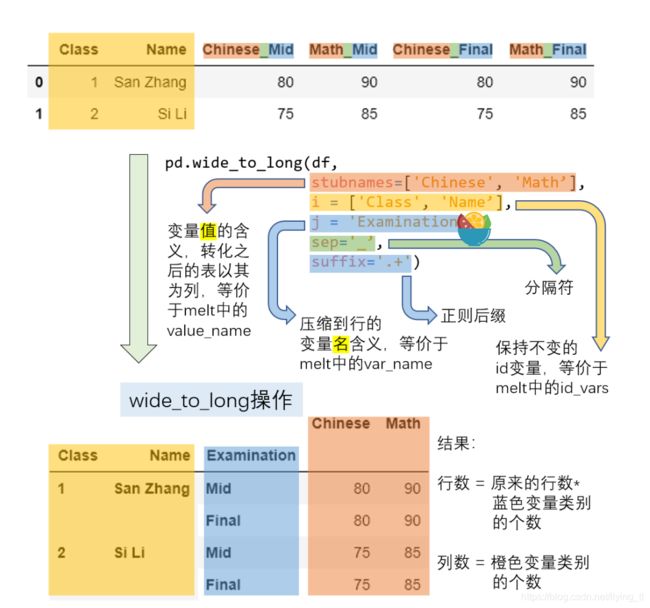

2. wide_to_long

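In melt, the group of values compressed out of the column index can only carry one level of meaning, namely a single value_name column. Sometimes the column names contain crossed categories, for example Mid/Final crossed with Chinese/Math. If you want to expand the Grade column named by value_name into separate Chinese and Math columns, and compress only the Mid/Final information, use the wide_to_long function.

(1) Parameters:

stubnames: the column-name prefixes; each stub becomes a value column in the result (it plays the role of value_name)

i: the identifier columns that stay unchanged (like id_vars)

j: the name of the new variable that holds the suffixes moved into the rows (like var_name)

sep: the separator between stub and suffix in the wide column names

suffix: a regular expression that the suffix must match; the default '\d+' only matches digits, so text suffixes such as Mid/Final need something like '.+'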

```python
df = pd.DataFrame({
    'Class': [1, 2],
    'Name': ['San Zhang', 'Si Li'],
    'Chinese_Mid': [80, 75],
    'Math_Mid': [90, 85],
    'Chinese_Final': [80, 75],
    'Math_Final': [90, 85]})
df
```

|   | Class | Name | Chinese_Mid | Math_Mid | Chinese_Final | Math_Final |
|---|---|---|---|---|---|---|
| 0 | 1 | San Zhang | 80 | 90 | 80 | 90 |
| 1 | 2 | Si Li | 75 | 85 | 75 | 85 |

```python
pd.wide_to_long(df,
                stubnames=['Chinese', 'Math'],
                i=['Class', 'Name'],
                j='Examination',
                sep='_',
                suffix='.+')
```

| Class | Name | Examination | Chinese | Math |
|---|---|---|---|---|
| 1 | San Zhang | Mid | 80 | 90 |
| 1 | San Zhang | Final | 80 | 90 |
| 2 | Si Li | Mid | 75 | 85 |
| 2 | Si Li | Final | 75 | 85 |
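When the suffixes are numbers, the default `suffix=r'\d+'` is enough, and pandas tries to convert the new j column to a numeric type. The sketch below uses hypothetical yearly columns `Grade_2020` / `Grade_2021`:

```python
import pandas as pd

# Hypothetical wide table with numeric (year) suffixes
df = pd.DataFrame({'Name': ['San Zhang', 'Si Li'],
                   'Grade_2020': [80, 75],
                   'Grade_2021': [85, 90]})

# No suffix argument: the default regex r'\d+' matches '2020' and '2021'
long_df = pd.wide_to_long(df, stubnames='Grade', i='Name', j='Year', sep='_')
print(long_df)
```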

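Now take the result of the multi-column pivot from the pivot subsection, which produced a MultiIndex, and use wide_to_long to turn it back into its original shape.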

```python
res = pivot_multi.copy()
res
```

| Class | Name | (Grade, Chinese, Mid) | (Grade, Chinese, Final) | (Grade, Math, Mid) | (Grade, Math, Final) | (rank, Chinese, Mid) | (rank, Chinese, Final) | (rank, Math, Mid) | (rank, Math, Final) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | San Zhang | 80 | 75 | 90 | 85 | 10 | 15 | 20 | 7 |
| 2 | Si Li | 85 | 65 | 92 | 88 | 21 | 15 | 6 | 21 |

```python
# Flatten the three column levels into names like 'Grade_Chinese_Mid'
res.columns = res.columns.map(lambda x: '_'.join(x))
res.head(2)
```

| Class | Name | Grade_Chinese_Mid | Grade_Chinese_Final | Grade_Math_Mid | Grade_Math_Final | rank_Chinese_Mid | rank_Chinese_Final | rank_Math_Mid | rank_Math_Final |
|---|---|---|---|---|---|---|---|---|---|
| 1 | San Zhang | 80 | 75 | 90 | 85 | 10 | 15 | 20 | 7 |
| 2 | Si Li | 85 | 65 | 92 | 88 | 21 | 15 | 6 | 21 |

```python
res = res.reset_index()
res.head(2)
```

|   | Class | Name | Grade_Chinese_Mid | Grade_Chinese_Final | Grade_Math_Mid | Grade_Math_Final | rank_Chinese_Mid | rank_Chinese_Final | rank_Math_Mid | rank_Math_Final |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | San Zhang | 80 | 75 | 90 | 85 | 10 | 15 | 20 | 7 |
| 1 | 2 | Si Li | 85 | 65 | 92 | 88 | 21 | 15 | 6 | 21 |

```python
res = pd.wide_to_long(res,
                      stubnames=['Grade', 'rank'],
                      i=['Class', 'Name'],
                      j='Subject_Examination',
                      sep='_',
                      suffix='.+')
res = res.reset_index()
# Split e.g. 'Chinese_Mid' back into its Subject and Examination parts
res[['Subject', 'Examination']] = res['Subject_Examination'].str.split('_', expand=True)
res = res[['Class', 'Name', 'Examination', 'Subject', 'Grade', 'rank']].sort_values('Subject')
res = res.reset_index(drop=True)
res
```

|   | Class | Name | Examination | Subject | Grade | rank |
|---|---|---|---|---|---|---|
| 0 | 1 | San Zhang | Mid | Chinese | 80 | 10 |
| 1 | 1 | San Zhang | Final | Chinese | 75 | 15 |
| 2 | 2 | Si Li | Mid | Chinese | 85 | 21 |
| 3 | 2 | Si Li | Final | Chinese | 65 | 15 |
| 4 | 1 | San Zhang | Mid | Math | 90 | 20 |
| 5 | 1 | San Zhang | Final | Math | 85 | 7 |
| 6 | 2 | Si Li | Mid | Math | 92 | 6 |
| 7 | 2 | Si Li | Final | Math | 88 | 21 |

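III. Reshaping the index

1. unstack

unstack moves row-index levels into the column index.

For unstack to work, the row-index levels being moved to the columns, combined with the row-index levels that stay in the rows, must form unique combinations.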

```python
df = pd.DataFrame(np.ones((4, 2)),
                  index=pd.Index([('A', 'cat', 'big'),
                                  ('A', 'dog', 'small'),
                                  ('B', 'cat', 'big'),
                                  ('B', 'dog', 'small')]),
                  columns=['col_1', 'col_2'])
df
```

|   |   |   | col_1 | col_2 |
|---|---|---|---|---|
| A | cat | big | 1.0 | 1.0 |
| A | dog | small | 1.0 | 1.0 |
| B | cat | big | 1.0 | 1.0 |
| B | dog | small | 1.0 | 1.0 |

```python
df.unstack()
```

|   |   | (col_1, big) | (col_1, small) | (col_2, big) | (col_2, small) |
|---|---|---|---|---|---|
| A | cat | 1.0 | NaN | 1.0 | NaN |
| A | dog | NaN | 1.0 | NaN | 1.0 |
| B | cat | 1.0 | NaN | 1.0 | NaN |
| B | dog | NaN | 1.0 | NaN | 1.0 |

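The main argument of unstack is the number of the level to move. By default the innermost level is moved, and it becomes the innermost level of the column index. Several levels can be moved at once: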

```python
df.unstack(2)
```

|   |   | (col_1, big) | (col_1, small) | (col_2, big) | (col_2, small) |
|---|---|---|---|---|---|
| A | cat | 1.0 | NaN | 1.0 | NaN |
| A | dog | NaN | 1.0 | NaN | 1.0 |
| B | cat | 1.0 | NaN | 1.0 | NaN |
| B | dog | NaN | 1.0 | NaN | 1.0 |

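```python
df.unstack([0, 2])
```

|   | (col_1, A, big) | (col_1, A, small) | (col_1, B, big) | (col_1, B, small) | (col_2, A, big) | (col_2, A, small) | (col_2, B, big) | (col_2, B, small) |
|---|---|---|---|---|---|---|---|---|
| cat | 1.0 | NaN | 1.0 | NaN | 1.0 | NaN | 1.0 | NaN |
| dog | NaN | 1.0 | NaN | 1.0 | NaN | 1.0 | NaN | 1.0 |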
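The uniqueness requirement behaves the same way as in pivot. In the sketch below, a made-up Series repeats the index entry ('A', 'x'), so unstack cannot place both values. Aggregating first makes the pairs unique again:

```python
import pandas as pd

# ('A', 'x') appears twice, so the (kept level, moved level) pairs are not unique
s = pd.Series([1, 2, 3],
              index=pd.MultiIndex.from_tuples([('A', 'x'), ('A', 'x'), ('B', 'y')]))

unstack_failed = False
try:
    s.unstack()
except ValueError as err:
    unstack_failed = True
    print('unstack failed:', err)

# Summing duplicates first leaves one value per pair, so unstack succeeds
fixed = s.groupby(level=[0, 1]).sum().unstack()
print(fixed)
```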

2. stack

stack pushes levels of the column index into the row index.

```python
df = pd.DataFrame(np.ones((4, 2)),
                  index=pd.Index([('A', 'cat', 'big'),
                                  ('A', 'dog', 'small'),
                                  ('B', 'cat', 'big'),
                                  ('B', 'dog', 'small')]),
                  columns=['index_1', 'index_2']).T
df
```

|   | (A, cat, big) | (A, dog, small) | (B, cat, big) | (B, dog, small) |
|---|---|---|---|---|
| index_1 | 1.0 | 1.0 | 1.0 | 1.0 |
| index_2 | 1.0 | 1.0 | 1.0 | 1.0 |

```python
df.stack()
```

|   |   | (A, cat) | (A, dog) | (B, cat) | (B, dog) |
|---|---|---|---|---|---|
| index_1 | big | 1.0 | NaN | 1.0 | NaN |
| index_1 | small | NaN | 1.0 | NaN | 1.0 |
| index_2 | big | 1.0 | NaN | 1.0 | NaN |
| index_2 | small | NaN | 1.0 | NaN | 1.0 |

```python
df.stack([1, 2])
```

|   |   |   | A | B |
|---|---|---|---|---|
| index_1 | cat | big | 1.0 | 1.0 |
| index_1 | dog | small | 1.0 | 1.0 |
| index_2 | cat | big | 1.0 | 1.0 |
| index_2 | dog | small | 1.0 | 1.0 |
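stack and unstack undo each other. The sketch below round-trips the frame from the unstack example. The comments flag one version-dependent detail: whether stack drops the all-NaN rows that unstack created. Newer pandas releases may also emit a FutureWarning about the stack implementation.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.ones((4, 2)),
                  index=pd.Index([('A', 'cat', 'big'), ('A', 'dog', 'small'),
                                  ('B', 'cat', 'big'), ('B', 'dog', 'small')]),
                  columns=['col_1', 'col_2'])

wide = df.unstack()   # innermost row level -> innermost column level (NaN where missing)
back = wide.stack()   # innermost column level -> innermost row level
# Depending on the pandas version, stack may keep or drop the all-NaN rows,
# so drop them explicitly before comparing with the original
back = back.dropna(how='all')
print(back)
```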

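3. The relationship between aggregation and reshaping

(1) Apart from pivot_table, which aggregates, none of these functions changes the number of values during reshaping; they only change how the values are laid out. (unstack may add NaN placeholders, and stack may drop all-NaN rows, but the data values themselves are unchanged.)

(2) Grouped aggregation produces new row and column indexes, so it can be seen as a special kind of reshaping. Because aggregation turns several values into one, however, the number of values changes. This is the main difference between grouped aggregation and the reshaping functions.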
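The difference can be made concrete by counting values. In the sketch below, reshaping the five grades from the pivot example keeps all five (NaN only fills gaps), while a grouped mean leaves one value per student:

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['San Zhang', 'San Zhang', 'Si Li', 'Si Li', 'Si Li'],
    'Subject': ['Chinese', 'Math', 'Chinese', 'Math', 'Science'],
    'Grade': [80, 75, 90, 85, 90]})

# Reshaping: the same 5 grades laid out as Name x Subject
reshaped = df.set_index(['Name', 'Subject'])['Grade'].unstack()
# Aggregation: one mean per student, so the number of values shrinks
aggregated = df.groupby('Name')['Grade'].mean()

print(int(reshaped.notna().sum().sum()), aggregated.size)  # 5 2
```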

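IV. Other reshaping functions

1. crosstab

crosstab is not a function worth recommending: everything it can do, pivot_table can also do, and faster. By default, crosstab counts how many times each combination of elements occurs, i.e. a count operation.

Example: count how often each combination of school and transfer status occurs in the learn_pandas dataset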

```python
df = pd.read_csv('data/learn_pandas.csv')
pd.crosstab(index=df.School, columns=df.Transfer)
```

| School \ Transfer | N | Y |
|---|---|---|
| Fudan University | 38 | 1 |
| Peking University | 28 | 2 |
| Shanghai Jiao Tong University | 53 | 0 |
| Tsinghua University | 62 | 4 |

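This is equivalent to the following crosstab call, where aggfunc is the aggregation parameter. The only visible difference is that a combination that never occurs shows NaN instead of 0: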

```python
pd.crosstab(index=df.School, columns=df.Transfer,
            values=[0] * df.shape[0], aggfunc='count')
```

| School \ Transfer | N | Y |
|---|---|---|
| Fudan University | 38.0 | 1.0 |
| Peking University | 28.0 | 2.0 |
| Shanghai Jiao Tong University | 53.0 | NaN |
| Tsinghua University | 62.0 | 4.0 |

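pivot_table can do the same. Because what is being counted is the combinations, it does not matter which column is passed to values, as long as that column has no missing values (count skips NaN):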

```python
df.pivot_table(index='School',
               columns='Transfer',
               values='Name',
               aggfunc='count')
```

| School \ Transfer | N | Y |
|---|---|---|
| Fudan University | 38.0 | 1.0 |
| Peking University | 28.0 | 2.0 |
| Shanghai Jiao Tong University | 53.0 | NaN |
| Tsinghua University | 62.0 | 4.0 |

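The examples above show the difference between the two functions. crosstab takes the actual sequences (Series) in the corresponding positions, whereas pivot_table takes the names of columns in the DataFrame it is called on. Its values argument in particular must be a column name: passing the Series itself raises an error.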
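One small difference in the outputs above is that crosstab reports 0 for a combination that never occurs, while pivot_table (and crosstab with values/aggfunc) reports NaN. pivot_table's `fill_value` parameter closes that gap. The sketch below uses a tiny made-up frame, since learn_pandas.csv is not reproduced here:

```python
import pandas as pd

# Hypothetical mini data standing in for learn_pandas.csv
df = pd.DataFrame({
    'School': ['Fudan', 'Fudan', 'Peking', 'Peking', 'Peking'],
    'Transfer': ['N', 'Y', 'N', 'N', 'N'],
    'Name': ['a', 'b', 'c', 'd', 'e']})

ct = pd.crosstab(index=df.School, columns=df.Transfer)
# fill_value=0 replaces the NaN of the unseen (Peking, Y) combination with 0
pt = df.pivot_table(index='School', columns='Transfer', values='Name',
                    aggfunc='count', fill_value=0)
print(ct)
print(pt)
```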

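Besides the default count, any aggregation string and any custom function that returns a scalar can be used. For example, the mean height for each combination: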

```python
pd.crosstab(index=df.School, columns=df.Transfer,
            values=df.Height, aggfunc='mean')
```

| School \ Transfer | N | Y |
|---|---|---|
| Fudan University | 162.043750 | 177.20 |
| Peking University | 163.429630 | 162.40 |
| Shanghai Jiao Tong University | 163.953846 | NaN |
| Tsinghua University | 163.253571 | 164.55 |

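2. Try it yourself 2

The text above says crosstab performs worse than pivot_table. Verify this with several aggregation methods.

Approach: I tested mean, sum and cumsum, and pivot_table ran faster than crosstab in each case. Only the cumsum run is shown below. Note that cumsum is not a true aggregation (it does not return a scalar), and a single time.time() measurement is fairly noisy.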

```python
import time

start = time.time()
pd.crosstab(index=df.School, columns=df.Transfer,
            values=df.Height, aggfunc='cumsum')
end = time.time()
print(end - start)
```

```
0.04938101768493652
```

```python
start = time.time()
df.pivot_table(index='School',
               columns='Transfer',
               values='Height',
               aggfunc='cumsum')
end = time.time()
print(end - start)
```

```
0.008005380630493164
```
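A somewhat fairer comparison repeats each call with `timeit` for several scalar aggregations. The sketch below uses randomly generated, hypothetical data in place of learn_pandas.csv. The timings depend on the machine and the pandas version, so no numbers are claimed here:

```python
import timeit

import numpy as np
import pandas as pd

# Hypothetical synthetic data standing in for learn_pandas.csv
rng = np.random.default_rng(0)
n = 10_000
df = pd.DataFrame({
    'School': rng.choice(['A', 'B', 'C', 'D'], size=n),
    'Transfer': rng.choice(['N', 'Y'], size=n),
    'Height': rng.normal(163, 8, size=n)})

timings = {}
for func in ['mean', 'sum', 'max']:
    # Each lambda runs inside the loop iteration, so it sees the current func
    t_ct = timeit.timeit(lambda: pd.crosstab(index=df.School, columns=df.Transfer,
                                             values=df.Height, aggfunc=func), number=20)
    t_pt = timeit.timeit(lambda: df.pivot_table(index='School', columns='Transfer',
                                                values='Height', aggfunc=func), number=20)
    timings[func] = (t_ct, t_pt)

for func, (t_ct, t_pt) in timings.items():
    print(f'{func}: crosstab {t_ct:.4f}s, pivot_table {t_pt:.4f}s (20 runs each)')
```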

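3. explode

The explode method expands the elements of one column vertically, one row per element. A cell to be expanded must hold a list-like value such as a list, tuple, Series or np.ndarray. Other values, like the string 'my_str' below, are left as they are. In the pandas version used here, the set {1, 2} was not expanded either. The current pandas documentation, however, also lists sets among the list-likes that explode expands.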

```python
df_ex = pd.DataFrame({
    'A': [[1, 2],
          'my_str',
          {1, 2},
          pd.Series([3, 4])],
    'B': 1})
df_ex
```

|   | A | B |
|---|---|---|
| 0 | [1, 2] | 1 |
| 1 | my_str | 1 |
| 2 | {1, 2} | 1 |
| 3 | 0 3 1 4 dtype: int64 | 1 |

```python
df_ex.explode('A')
```

|   | A | B |
|---|---|---|
| 0 | 1 | 1 |
| 0 | 2 | 1 |
| 1 | my_str | 1 |
| 2 | {1, 2} | 1 |
| 3 | 3 | 1 |
| 3 | 4 | 1 |
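Because a plain string is not expanded, comma-separated text has to be split into lists first. The sketch below uses a hypothetical `Subjects` column. The original row index is repeated for each new row; explode's `ignore_index=True` would renumber the rows instead:

```python
import pandas as pd

df = pd.DataFrame({'Name': ['San Zhang', 'Si Li'],
                   'Subjects': ['Chinese,Math', 'Math']})

# Split each string into a list, then give every list element its own row
out = df.assign(Subjects=df['Subjects'].str.split(',')).explode('Subjects')
print(out)
```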

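4. get_dummies

get_dummies is one of the important functions for feature construction. It turns a categorical feature into indicator (dummy) variables.

Example: convert the Grade column (the student's school year) into indicator variables, with 1 in the column for the student's year and 0 elsewhere. pandas 2.0 and later return True/False by default; pass dtype=int to get 0/1.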

```python
pd.get_dummies(df.Grade).head()
```

|   | Freshman | Junior | Senior | Sophomore |
|---|---|---|---|---|
| 0 | 1 | 0 | 0 | 0 |
| 1 | 1 | 0 | 0 | 0 |
| 2 | 0 | 0 | 1 | 0 |
| 3 | 0 | 0 | 0 | 1 |
| 4 | 0 | 0 | 0 | 1 |
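Two commonly used options are `prefix`, which labels the new columns, and `dtype`, which fixes the 0/1 type. The sketch below uses a hypothetical three-student Series:

```python
import pandas as pd

grades = pd.Series(['Freshman', 'Junior', 'Freshman'])

# Columns are named '<prefix>_<category>'; dtype=int gives 0/1 instead of booleans
dummies = pd.get_dummies(grades, prefix='Grade', dtype=int)
print(dummies)
```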

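V. Exercises

1. U.S. illicit drug dataset

This dataset covers illicit drugs in the U.S. The columns SubstanceName and DrugReports give the drug name and the number of reports, respectively.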

```python
df = pd.read_csv('data/Drugs.csv').sort_values([
    'State', 'COUNTY', 'SubstanceName'], ignore_index=True)
df.head(2)
```

|   | YYYY | State | COUNTY | SubstanceName | DrugReports |
|---|---|---|---|---|---|
| 0 | 2011 | KY | ADAIR | Buprenorphine | 3 |
| 1 | 2012 | KY | ADAIR | Buprenorphine | 5 |

1. Convert the data into the following form.

Note: I needed to remove the columns-axis title (YYYY) left behind by pivot, and solved it with rename_axis.

```python
df_1 = df.pivot(index=['State', 'COUNTY', 'SubstanceName'],
                columns='YYYY',
                values='DrugReports')
df_1 = df_1.reset_index()
df_1 = df_1.rename_axis(None, axis=1)  # drop the 'YYYY' columns-axis name
df_1.head()
```

|   | State | COUNTY | SubstanceName | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KY | ADAIR | Buprenorphine | NaN | 3.0 | 5.0 | 4.0 | 27.0 | 5.0 | 7.0 | 10.0 |
| 1 | KY | ADAIR | Codeine | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | 1.0 |
| 2 | KY | ADAIR | Fentanyl | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | NaN |
| 3 | KY | ADAIR | Heroin | NaN | NaN | 1.0 | 2.0 | NaN | 1.0 | NaN | 2.0 |
| 4 | KY | ADAIR | Hydrocodone | 6.0 | 9.0 | 10.0 | 10.0 | 9.0 | 7.0 | 11.0 | 3.0 |

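2. Restore the result of part 1 to the original table.

Approach: after melting, the column order differs from the original, so at the end I reassign the columns to put them back in place. The melted result also still contains the NaN rows introduced by pivot and stores DrugReports as float, so it is not yet identical to df.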

```python
date = list(set(df.YYYY))  # a set has no guaranteed order
date
```

```
[2016, 2017, 2010, 2011, 2012, 2013, 2014, 2015]
```

```python
df_2 = df_1.melt(id_vars=['State', 'COUNTY', 'SubstanceName'],
                 value_vars=date,
                 var_name='YYYY',
                 value_name='DrugReports')
df_2.head()
```

|   | State | COUNTY | SubstanceName | YYYY | DrugReports |
|---|---|---|---|---|---|
| 0 | KY | ADAIR | Buprenorphine | 2016 | 7.0 |
| 1 | KY | ADAIR | Codeine | 2016 | NaN |
| 2 | KY | ADAIR | Fentanyl | 2016 | NaN |
| 3 | KY | ADAIR | Heroin | 2016 | NaN |
| 4 | KY | ADAIR | Hydrocodone | 2016 | 11.0 |

```python
cols = list(df.columns)
df_2 = df_2.loc[:, cols]  # restore the original column order
df_2.head(2)
```

|   | YYYY | State | COUNTY | SubstanceName | DrugReports |
|---|---|---|---|---|---|
| 0 | 2016 | KY | ADAIR | Buprenorphine | 7.0 |
| 1 | 2016 | KY | ADAIR | Codeine | NaN |
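The melt step above still differs from df in three ways. It contains the NaN rows that pivot had to create, DrugReports became float because of those NaN values, and the rows are in a different order. The sketch below does a complete round trip on a made-up three-row frame with the same columns, since data/Drugs.csv is not reproduced here. It assumes pandas ≥ 1.1:

```python
import pandas as pd

# Hypothetical three-row stand-in for data/Drugs.csv (same columns)
df = pd.DataFrame({
    'YYYY': [2011, 2012, 2012],
    'State': ['KY', 'KY', 'KY'],
    'COUNTY': ['ADAIR', 'ADAIR', 'ADAIR'],
    'SubstanceName': ['Buprenorphine', 'Buprenorphine', 'Codeine'],
    'DrugReports': [3, 5, 1]})
id_cols = ['State', 'COUNTY', 'SubstanceName']

# Part 1: long -> wide (Codeine has no 2011 entry, so that cell becomes NaN)
df_1 = (df.pivot(index=id_cols, columns='YYYY', values='DrugReports')
          .reset_index()
          .rename_axis(None, axis=1))

# Part 2: wide -> long, then undo the side effects of the round trip
year_cols = [c for c in df_1.columns if c not in id_cols]
restored = (df_1.melt(id_vars=id_cols, value_vars=year_cols,
                      var_name='YYYY', value_name='DrugReports')
                .dropna(subset=['DrugReports'])                     # rows pivot had to invent
                .astype({'YYYY': 'int64', 'DrugReports': 'int64'})  # NaN had forced float
                .sort_values(id_cols + ['YYYY'], ignore_index=True)
                [list(df.columns)])                                 # original column order
print(restored.equals(df))
```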

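3. For each State, compute the total number of reports in each year, with State as the column index and YYYY as the row index. Implement this with pivot_table and with groupby + unstack, and observe how the two strategies are related.

Note: pivot_table aggregates directly along the given row and column keys. This is equivalent to first using groupby to get a Series with a two-level index, then using unstack to turn that Series into a DataFrame, which is exactly the pivot_table result.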

```python
df_3 = df.pivot_table(index='YYYY',
                      columns='State',
                      values='DrugReports',
                      aggfunc='sum')
df_3.head(2)
```

| YYYY \ State | KY | OH | PA | VA | WV |
|---|---|---|---|---|---|
| 2010 | 10453 | 19707 | 19814 | 8685 | 2890 |
| 2011 | 10289 | 20330 | 19987 | 6749 | 3271 |

```python
gb_3 = df.groupby(['YYYY', 'State'])['DrugReports'].sum()
type(gb_3)
```

```
pandas.core.series.Series
```

```python
gb_3.unstack().head(2)
```

| YYYY \ State | KY | OH | PA | VA | WV |
|---|---|---|---|---|---|
| 2010 | 10453 | 19707 | 19814 | 8685 | 2890 |
| 2011 | 10289 | 20330 | 19987 | 6749 | 3271 |
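The two strategies can be checked against each other. The sketch below uses a small hypothetical frame with the same YYYY / State / DrugReports columns:

```python
import pandas as pd

# Hypothetical miniature stand-in for data/Drugs.csv
df = pd.DataFrame({
    'YYYY': [2010, 2010, 2010, 2011, 2011, 2011],
    'State': ['KY', 'OH', 'KY', 'KY', 'OH', 'OH'],
    'DrugReports': [1, 2, 3, 4, 5, 6]})

pt = df.pivot_table(index='YYYY', columns='State', values='DrugReports', aggfunc='sum')
gb = df.groupby(['YYYY', 'State'])['DrugReports'].sum().unstack()

# Raises an AssertionError if the two tables differ
pd.testing.assert_frame_equal(pt, gb, check_dtype=False)
print(pt)
```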

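2. A special use of wide_to_long

Functionally, melt is a special case of wide_to_long: the case with only one kind of stubname. Use wide_to_long to generate df_melted from the melt section. (Hint: add a suitable prefix to the column names.)

Note: add a Grade prefix to the Chinese and Math columns. Grade then becomes its own column, the subject names are stored in another column, and finally the index is reset.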

```python
df_4 = pd.DataFrame({
    'Class': [1, 2],
    'Name': ['San Zhang', 'Si Li'],
    'Chinese': [80, 90],
    'Math': [80, 75]})
df_4
```

|   | Class | Name | Chinese | Math |
|---|---|---|---|---|
| 0 | 1 | San Zhang | 80 | 80 |
| 1 | 2 | Si Li | 90 | 75 |

```python
df_4_melted = df_4.melt(id_vars=['Class', 'Name'],
                        value_vars=['Chinese', 'Math'],
                        var_name='Subject',
                        value_name='Grade')
df_4_melted
```

|   | Class | Name | Subject | Grade |
|---|---|---|---|---|
| 0 | 1 | San Zhang | Chinese | 80 |
| 1 | 2 | Si Li | Chinese | 90 |
| 2 | 1 | San Zhang | Math | 80 |
| 3 | 2 | Si Li | Math | 75 |

```python
df_new = df_4.rename(columns={
    'Chinese': 'Grade_Chinese', 'Math': 'Grade_Math'})
df_new.head(2)
```

|   | Class | Name | Grade_Chinese | Grade_Math |
|---|---|---|---|---|
| 0 | 1 | San Zhang | 80 | 80 |
| 1 | 2 | Si Li | 90 | 75 |

```python
df_new = pd.wide_to_long(df_new,
                         stubnames=['Grade'],
                         i=['Class', 'Name'],
                         j='Subject',
                         sep='_',
                         suffix='.+')
df_new.reset_index()
```

|   | Class | Name | Subject | Grade |
|---|---|---|---|---|
| 0 | 1 | San Zhang | Chinese | 80 |
| 1 | 1 | San Zhang | Math | 80 |
| 2 | 2 | Si Li | Chinese | 90 |
| 3 | 2 | Si Li | Math | 75 |
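The wide_to_long result holds the same rows as df_4_melted but in a different order. wide_to_long groups the rows by the i columns, whereas melt lists all Chinese rows before all Math rows. The sketch below re-sorts the result and compares the two:

```python
import pandas as pd

df_4 = pd.DataFrame({'Class': [1, 2], 'Name': ['San Zhang', 'Si Li'],
                     'Chinese': [80, 90], 'Math': [80, 75]})
df_4_melted = df_4.melt(id_vars=['Class', 'Name'], value_vars=['Chinese', 'Math'],
                        var_name='Subject', value_name='Grade')

df_new = df_4.rename(columns={'Chinese': 'Grade_Chinese', 'Math': 'Grade_Math'})
df_new = pd.wide_to_long(df_new, stubnames=['Grade'], i=['Class', 'Name'],
                         j='Subject', sep='_', suffix='.+').reset_index()

# Re-sort to melt's "all Chinese rows, then all Math rows" order before comparing
df_new = df_new.sort_values(['Subject', 'Class'], ignore_index=True)
print(df_new.values.tolist() == df_4_melted.values.tolist())
```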
