Deploying an EFK (Elasticsearch, Fluentd, Kibana) log collection stack on k8s on Kylin Advanced Server OS V10 (银河麒麟高级服务器操作系统V10)

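Preface

This article describes how to deploy the EFK stack on a single-node k8s cluster that is already installed on Kylin Advanced Server OS V10.

The deployment scripts used here come mainly from https://github.com/hknarutofk/fluentd-elasticsearch-arm64, an arm64 port of the official Kubernetes addon at https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/fluentd-elasticsearch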

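Prerequisites

Installing a single-node k8s cluster on Kylin Advanced Server OS V10: https://blog.csdn.net/m0_46573967/article/details/112935319

Deploying and integrating GlusterFS and Heketi with k8s on Kylin Advanced Server OS V10: https://blog.csdn.net/m0_46573967/article/details/112983717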

1. Download the fluentd-elasticsearch-arm64 project

[yeqiang@192-168-110-185 桌面]$ sudo su

[sudo] password for yeqiang:

[root@192-168-110-185 桌面]# cd ~

[root@192-168-110-185 ~]# git clone --depth=1 https://github.com/hknarutofk/fluentd-elasticsearch-arm64.git

Cloning into 'fluentd-elasticsearch-arm64'...

remote: Enumerating objects: 36, done.

remote: Counting objects: 100% (36/36), done.

remote: Compressing objects: 100% (32/32), done.

remote: Total 36 (delta 7), reused 15 (delta 0), pack-reused 0

Unpacking objects: 100% (36/36), done.

2. Run the deployment

Change into the cloned fluentd-elasticsearch-arm64 directory, then run deploy.sh:

[root@192-168-110-185 fluentd-elasticsearch-arm64]# sh deploy.sh

serviceaccount/elasticsearch-logging created

clusterrole.rbac.authorization.k8s.io/elasticsearch-logging created

clusterrolebinding.rbac.authorization.k8s.io/elasticsearch-logging created

statefulset.apps/elasticsearch-logging created

service/elasticsearch-logging created

configmap/fluentd-es-config-v0.2.0 created

serviceaccount/fluentd-es created

clusterrole.rbac.authorization.k8s.io/fluentd-es created

clusterrolebinding.rbac.authorization.k8s.io/fluentd-es created

daemonset.apps/fluentd-es-v2.7.0 created

deployment.apps/kibana-logging created

service/kibana-logging created

Note: the script waits for Elasticsearch (es) to start, so be patient.

Check the deployment result
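The wait that deploy.sh performs can also be repeated by hand, for example after a node reboot. The following is a minimal sketch and is not taken from deploy.sh itself: it assumes the resource names printed above (elasticsearch-logging, fluentd-es-v2.7.0, kibana-logging) in the kube-system namespace. The function name wait_for_efk and the 600s default timeout are made up for illustration.

```shell
# Hypothetical helper (not part of deploy.sh): block until every EFK workload
# reports a finished rollout, or fail as soon as one of them times out.
wait_for_efk() {
  timeout=${1:-600s}   # assumed default; raise it if storage is slow
  for workload in \
    statefulset/elasticsearch-logging \
    daemonset/fluentd-es-v2.7.0 \
    deployment/kibana-logging
  do
    kubectl rollout status "$workload" -n kube-system --timeout="$timeout" || return 1
  done
}
```

Calling `wait_for_efk 900s` returns 0 only once all three rollouts have completed.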

[root@192-168-110-185 fluentd-elasticsearch-arm64]# kubectl get statefulset -n kube-system

NAME                    READY   AGE
elasticsearch-logging   2/2     5m2s

[root@192-168-110-185 fluentd-elasticsearch-arm64]# kubectl get ds -n kube-system

NAME                    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
fluentd-es-v2.7.0       1         1         1       1            1           <none>          5m16s
kube-flannel-ds-arm64   1         1         1       1            1           <none>          4h10m

[root@192-168-110-185 fluentd-elasticsearch-arm64]# kubectl get deployment -n kube-system

NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
coredns                     1/1     1            1           4h10m
dashboard-metrics-scraper   1/1     1            1           4h10m
kibana-logging              1/1     1            1           4m11s
kubernetes-dashboard        1/1     1            1           4h10m
metrics-server              1/1     1            1           4h10m

As shown, elasticsearch-logging runs two instances, fluentd runs one instance because the cluster has only one node, and kibana runs one instance.
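Reading the READY column by eye works for a handful of workloads; a small filter makes the same check scriptable. This is only a sketch: the helper name not_ready is hypothetical, and it assumes the default table layout shown above, where READY is the second column and has the form ready/desired (true for statefulset and deployment listings, not for the daemonset listing).

```shell
# Hypothetical helper: read `kubectl get statefulset` or `kubectl get deployment`
# output on stdin and print every NAME whose READY column (e.g. "1/2") is not full.
# The first line is skipped because it is the header row.
not_ready() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}
```

For example, `kubectl get deployment -n kube-system | not_ready` prints nothing when every deployment is fully ready.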

3. Log in to Kibana and create an index pattern

Get the login address

[root@192-168-110-185 fluentd-elasticsearch-arm64]# kubectl get svc -n kube-system | grep kibana

kibana-logging   NodePort   10.68.206.162   <none>   5601:25742/TCP   5m52s

The NodePort is 25742, so the login address is http://localhost:25742/
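The NodePort can also be read directly instead of picking it out of the grep output. A minimal sketch, assuming the service is named kibana-logging in kube-system and exposes a single port, as in the listing above; the function name kibana_url is hypothetical, and localhost is used as the default host because the browser in this article runs on the node itself.

```shell
# Hypothetical helper: build the Kibana URL from the service's NodePort.
kibana_url() {
  host=${1:-localhost}
  # jsonpath extracts the nodePort of the first (assumed only) service port.
  port=$(kubectl get svc kibana-logging -n kube-system \
    -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  [ -n "$port" ] || return 1
  printf 'http://%s:%s/\n' "$host" "$port"
}
```

Passing a host name or IP as the first argument, as in `kibana_url 192.168.110.185`, yields a URL that can be opened from another machine.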

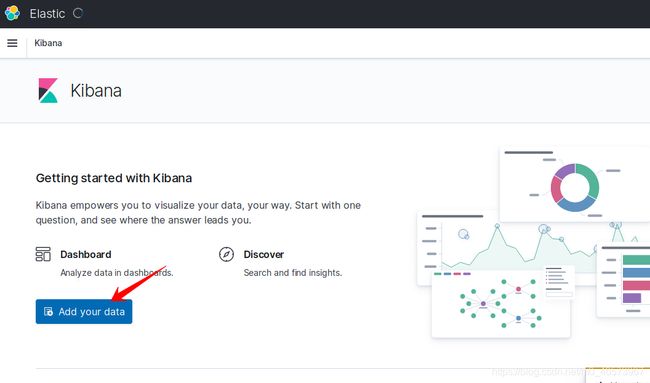

Open Firefox and enter the address

Choose Explore on my own (ignore the security warning on the right)

Click Kibana Visualize & analyze

Choose Add your data

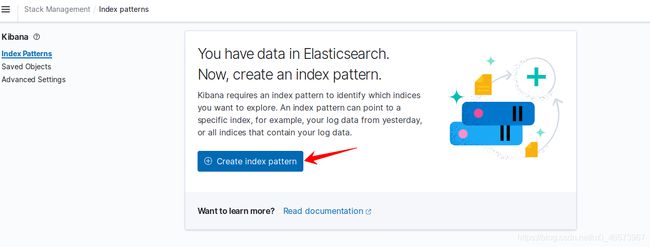

Click the Create index pattern button

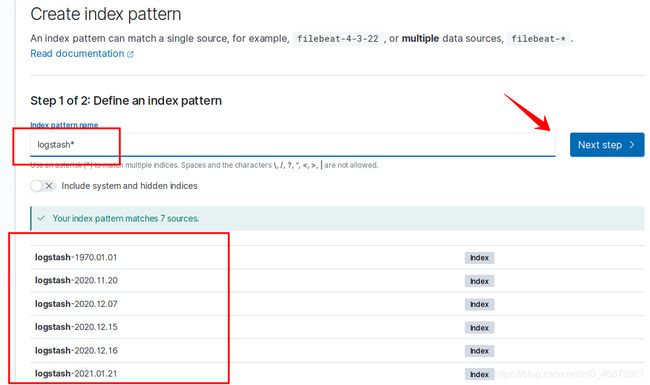

Enter logstash* as the index pattern name and click Next step

For Time field, select @timestamp, then click the Create index pattern button

The index pattern is now created

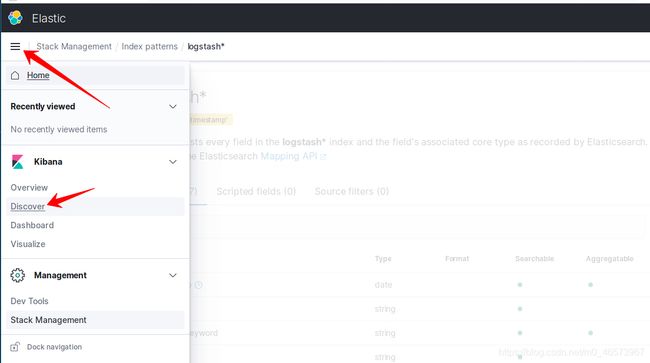
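The logstash* pattern only matches once Fluentd has written at least one index into Elasticsearch, so if Kibana reports no matching indices it helps to ask Elasticsearch directly. The sketch below rests on assumptions not shown in this article: that the first StatefulSet pod, elasticsearch-logging-0, serves HTTP on Elasticsearch's default port 9200, and that pod IPs are reachable from the node. The function name list_logstash_indices is hypothetical.

```shell
# Hypothetical helper: list the logstash-* indices Fluentd has created so far.
list_logstash_indices() {
  # StatefulSet pods are named <statefulset>-<ordinal>, hence "-0".
  es_ip=$(kubectl get pod elasticsearch-logging-0 -n kube-system \
    -o jsonpath='{.status.podIP}') || return 1
  [ -n "$es_ip" ] || return 1
  # _cat/indices prints one line per index; ?v adds a header row.
  curl -sS "http://$es_ip:9200/_cat/indices/logstash-*?v"
}
```

An empty listing usually means Fluentd has not shipped any logs yet.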

4. Search logs in Kibana

Click the menu icon on the left and select Discover under Kibana

You can now search for the log content you need using KQL or Lucene syntax.

Summary
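The same Lucene expression that goes into the Discover search bar can also be sent to Elasticsearch from the command line through the URI search API. This is a hedged sketch: the function name search_logs is hypothetical, the Elasticsearch base URL has to be supplied by the caller, and the field kubernetes.namespace_name in the usage example is an assumption about how Fluentd's Kubernetes metadata is indexed, so check the field list in Discover first.

```shell
# Hypothetical helper: run a Lucene query string against the logstash-* indices.
#   $1  Elasticsearch base URL, e.g. http://<es-pod-ip>:9200 (supplied by caller)
#   $2  Lucene query string
#   $3  number of hits to return (assumed default: 5)
search_logs() {
  es_url=$1
  query=$2
  # -G turns the url-encoded data into query parameters of a GET request.
  curl -sS -G "$es_url/logstash-*/_search" \
    --data-urlencode "q=$query" \
    --data-urlencode "size=${3:-5}" \
    --data-urlencode "sort=@timestamp:desc"
}
```

For example, `search_logs "$ES_URL" 'kubernetes.namespace_name:"kube-system"'` asks for the five most recent matching entries, where ES_URL is whatever address reaches Elasticsearch in your cluster.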

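PVs are created automatically, which depends on GlusterFS and Heketi. The configuration is in es-statefulset.yaml, where you can adjust the requested storage size to suit your environment. This article is only a demonstration, and 10Gi is very small.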

# Created dynamically via Heketi and GlusterFS using the glusterfs-storage storage class
volumeClaimTemplates:
- metadata:
    name: elasticsearch-logging
    creationTimestamp: null
  spec:
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: 10Gi
    storageClassName: glusterfs-storage
    volumeMode: Filesystem
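The size has to be changed before running deploy.sh, because Kubernetes does not allow the volumeClaimTemplates of an existing StatefulSet to be edited. One way to script the change is sketched below; the helper name set_es_storage is hypothetical, and it assumes the manifest contains a storage: request written like the one above (digits followed by a unit).

```shell
# Hypothetical helper: rewrite every "storage: <N><unit>" request in a manifest.
#   $1  path to the manifest, e.g. es-statefulset.yaml
#   $2  new size, e.g. 100Gi
set_es_storage() {
  file=$1
  size=$2
  tmp=$(mktemp) || return 1
  # "storageClassName:" is untouched: the pattern requires "storage:" itself.
  sed "s/\(storage:[[:space:]]*\)[0-9][0-9]*[A-Za-z]*/\1$size/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return $status
}
```

Running `set_es_storage es-statefulset.yaml 100Gi` in the project directory and then `sh deploy.sh` requests 100Gi per Elasticsearch instance.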

Images used in this article

es-image: https://github.com/hknarutofk/fluentd-elasticsearch-arm64/tree/master/es-image

fluentd-es-image: https://github.com/hknarutofk/fluentd-elasticsearch-arm64/tree/master/fluentd-es-image

kibana: https://github.com/hknarutofk/hknarutofk-kibana-oss-docker-arm64/tree/master/7.10.2