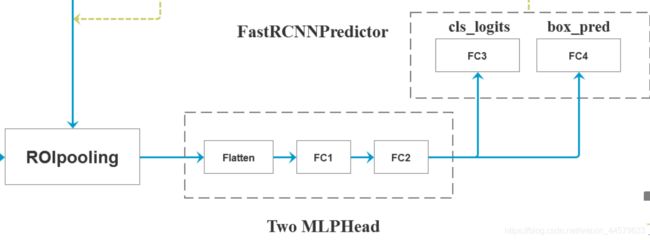

Figure: Faster R-CNN framework (blocks: ROIAlign, TwoMLPHead, FastRCNNPredictor)


    import warnings
    from collections import OrderedDict
    from typing import Dict, List, Optional, Tuple

    import torch
    from torch import Tensor, nn


    class FasterRCNNBase(nn.Module):
        """
        Main class for Generalized R-CNN.

        Arguments:
            backbone (nn.Module):
            rpn (nn.Module):
            roi_heads (nn.Module): takes the features + the proposals from the RPN and computes
                detections / masks from it.
            transform (nn.Module): performs the data transformation from the inputs to feed into
                the model
        """

        def forward(self, images, targets=None):
            # type: (List[Tensor], Optional[List[Dict[str, Tensor]]]) -> Tuple[Dict[str, Tensor], List[Dict[str, Tensor]]]
            '''...'''
            original_image_sizes = torch.jit.annotate(List[Tuple[int, int]], [])
            for img in images:
                val = img.shape[-2:]
                assert len(val) == 2  # guard against a one-dimensional input
                original_image_sizes.append((val[0], val[1]))
            # original_image_sizes = [img.shape[-2:] for img in images]

            images, targets = self.transform(images, targets)  # preprocess the images

            # print(images.tensors.shape)
            features = self.backbone(images.tensors)  # run the backbone to get the feature maps
            if isinstance(features, torch.Tensor):
                # Predicting on a single feature level: wrap it in an ordered dict under key '0'.
                # When predicting on several levels, the backbone already returns an ordered dict.
                features = OrderedDict([('0', features)])

            # Pass the feature maps and the annotation targets to the RPN.
            # proposals: List[Tensor], Tensor_shape: [num_proposals, 4],
            # each proposal is in absolute coordinates, in (x1, y1, x2, y2) format
            proposals, proposal_losses = self.rpn(images, features, targets)

            # Pass the RPN output and the annotation targets to the second half of Fast R-CNN
            detections, detector_losses = self.roi_heads(features, proposals, images.image_sizes, targets)

            # Post-process the predictions (mainly map the bboxes back to the original image scale)
            detections = self.transform.postprocess(detections, images.image_sizes, original_image_sizes)

            losses = {}
            losses.update(detector_losses)
            losses.update(proposal_losses)

            if torch.jit.is_scripting():
                if not self._has_warned:
                    warnings.warn("RCNN always returns a (Losses, Detections) tuple in scripting")
                    self._has_warned = True
                return losses, detections
            else:
                return self.eager_outputs(losses, detections)

            # if self.training:
            #     return losses
            #
            # return detections
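The post-processing step noted in the comments mainly maps predicted boxes from the resized image back to the original image. A minimal sketch of that rescaling in plain Python, assuming sizes are (height, width) tuples and boxes are in (x1, y1, x2, y2) format. The helper name rescale_boxes is hypothetical and is not the function called inside self.transform.postprocess:

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def rescale_boxes(boxes: List[Box],
                  resized_size: Tuple[int, int],
                  original_size: Tuple[int, int]) -> List[Box]:
    """Map boxes from the resized image back to the original image.

    Hypothetical helper for illustration; both sizes are (height, width).
    """
    ratio_h = original_size[0] / resized_size[0]
    ratio_w = original_size[1] / resized_size[1]
    # x coordinates scale with the width ratio, y coordinates with the height ratio.
    return [(x1 * ratio_w, y1 * ratio_h, x2 * ratio_w, y2 * ratio_h)
            for x1, y1, x2, y2 in boxes]


# A 400x600 image that was resized to 800x1200 before entering the network.
print(rescale_boxes([(100.0, 50.0, 200.0, 150.0)], (800, 1200), (400, 600)))
# [(50.0, 25.0, 100.0, 75.0)]
```

This is why forward records original_image_sizes before calling self.transform: the ratios cannot be recovered afterwards without them.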

In faster_rcnn_framework.py, look at the part of the FasterRCNN constructor that involves the TwoMLPHead class:

    class FasterRCNN(FasterRCNNBase):

        def __init__(self, backbone, ... ):
            # The Fast R-CNN part after RoI pooling: flatten, then two fully connected layers
            if box_head is None:
                resolution = box_roi_pool.output_size[0]  # 7 by default
                representation_size = 1024
                box_head = TwoMLPHead(
                    out_channels * resolution ** 2,
                    representation_size
                )

            # Combine roi pooling, box_head and box_predictor
            roi_heads = RoIHeads(
                # box
                box_roi_pool, box_head, box_predictor,
                box_fg_iou_thresh, box_bg_iou_thresh,  # 0.5 0.5
                box_batch_size_per_image, box_positive_fraction,  # 512 0.25
                bbox_reg_weights,
                box_score_thresh, box_nms_thresh, box_detections_per_img  # 0.05 0.5 100
            )
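The first argument passed to TwoMLPHead is the length of one RoI feature after flattening. A small sketch of that arithmetic, assuming out_channels is 256 (a common width for FPN backbones, not stated in the excerpt above) together with the default resolution of 7. The helper flattened_size is hypothetical:

```python
def flattened_size(out_channels: int, resolution: int) -> int:
    """Length of one RoI feature of shape [out_channels, resolution, resolution] once flattened."""
    return out_channels * resolution ** 2


out_channels = 256  # assumption: typical FPN backbone width, not given in the excerpt
resolution = 7      # default RoI pooling output size noted in the code above

print(flattened_size(out_channels, resolution))  # 12544
```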

- 第一个参数

box_roi_pool对应框图中的:

使用的是torchvision中封装的ROIAlign - 第二个参数

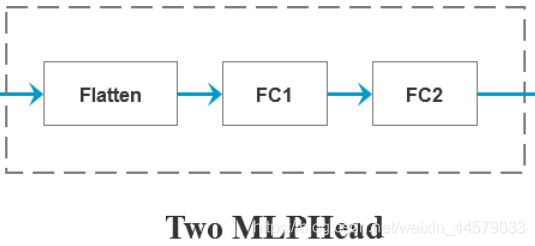

box_head对应框图中的TwoMLPHead,进入faster_rcnn_framework.py中的TwoMLPHead类

    import torch.nn.functional as F
    from torch import nn


    class TwoMLPHead(nn.Module):
        """
        Standard heads for FPN-based models

        Arguments:
            in_channels (int): number of input channels
            representation_size (int): size of the intermediate representation
        """

        def __init__(self, in_channels, representation_size):
            super(TwoMLPHead, self).__init__()

            self.fc6 = nn.Linear(in_channels, representation_size)
            self.fc7 = nn.Linear(representation_size, representation_size)

        def forward(self, x):
            # Flatten every dimension except the first (one row per RoI)
            x = x.flatten(start_dim=1)

            x = F.relu(self.fc6(x))
            x = F.relu(self.fc7(x))

            return x
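To get a feel for the size of this head, the sketch below traces the tensor shapes through TwoMLPHead and counts the parameters of fc6 and fc7. It is plain Python arithmetic, assuming in_channels is 256 * 7 * 7 (256 channels is an assumption; 7 and 1024 are the defaults shown earlier) and 512 RoIs per image. The helper linear_params is hypothetical:

```python
def linear_params(in_features: int, out_features: int) -> int:
    """Parameter count of a fully connected layer: weight matrix plus bias vector."""
    return in_features * out_features + out_features


in_channels = 256 * 7 * 7   # assumption: 256 channels, 7x7 RoI features
representation_size = 1024  # default used when building the box head
num_rois = 512              # assumption: RoIs sampled per image

# Shapes seen by forward(): pooled input, after flatten, after fc6, after fc7.
shapes = [
    (num_rois, 256, 7, 7),
    (num_rois, in_channels),
    (num_rois, representation_size),
    (num_rois, representation_size),
]
for shape in shapes:
    print(shape)

fc6_params = linear_params(in_channels, representation_size)
fc7_params = linear_params(representation_size, representation_size)
print(fc6_params)               # 12846080
print(fc7_params)               # 1049600
print(fc6_params + fc7_params)  # 13895680
```

Under these assumptions fc6 holds most of the head's parameters, because its input is the full flattened RoI feature.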

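    class FastRCNNPredictor(nn.Module):
        """
        Classification and bounding box regression layers that follow the box head.

        Arguments:
            in_channels (int): number of input channels
            num_classes (int): number of output classes
        """

        def __init__(self, in_channels, num_classes):
            super(FastRCNNPredictor, self).__init__()
            self.cls_score = nn.Linear(in_channels, num_classes)
            self.bbox_pred = nn.Linear(in_channels, num_classes * 4)

        def forward(self, x):
            # A 4-D input is only accepted when its spatial size is 1x1
            if x.dim() == 4:
                assert list(x.shape[2:]) == [1, 1]
            x = x.flatten(start_dim=1)
            scores = self.cls_score(x)        # [num_rois, num_classes]
            bbox_deltas = self.bbox_pred(x)   # [num_rois, num_classes * 4]

            return scores, bbox_deltas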
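FastRCNNPredictor outputs num_classes scores and num_classes * 4 box regression values per RoI. A sketch of how one row of bbox_deltas could be split per class, assuming the 4 values belonging to a class are stored contiguously. That layout is an assumption about how later decoding reads the output and is not shown in the excerpt. The helper deltas_for_class is hypothetical:

```python
from typing import List, Sequence


def deltas_for_class(row: Sequence[float], class_idx: int) -> List[float]:
    """Return the 4 regression values of one class from a row of length num_classes * 4.

    Hypothetical helper; assumes a class-major layout with 4 consecutive values per class.
    """
    start = 4 * class_idx
    if class_idx < 0 or start + 4 > len(row):
        raise IndexError("class index out of range for this row")
    return list(row[start:start + 4])


num_classes = 3
row = [float(i) for i in range(num_classes * 4)]  # stand-in for one row of bbox_deltas
print(deltas_for_class(row, 1))  # [4.0, 5.0, 6.0, 7.0]
```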