A problem installing the GPU build of TensorFlow: "Not creating XLA devices, tf_xla_enable_xla_devices not set"

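After installing, I tested with the two lines of code below. The result was False, along with the messages shown in the screenshot, so something in the installation had to be wrong.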

    import tensorflow as tf
    tf.test.is_gpu_available()

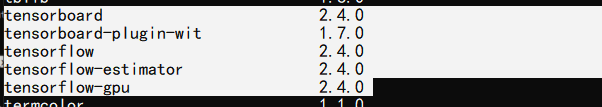
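As a side note, `tf.test.is_gpu_available()` is marked deprecated in TensorFlow 2.x in favor of `tf.config.list_physical_devices('GPU')`. A minimal sketch of the same check using that call follows. The helper name `visible_gpus` is made up for illustration, and it returns `None` when TensorFlow cannot be imported at all.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see, or None if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    # An empty list means TensorFlow loaded but registered no GPU device.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    print(visible_gpus())
```

An empty list here corresponds to the False result described above.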

My TensorFlow version:

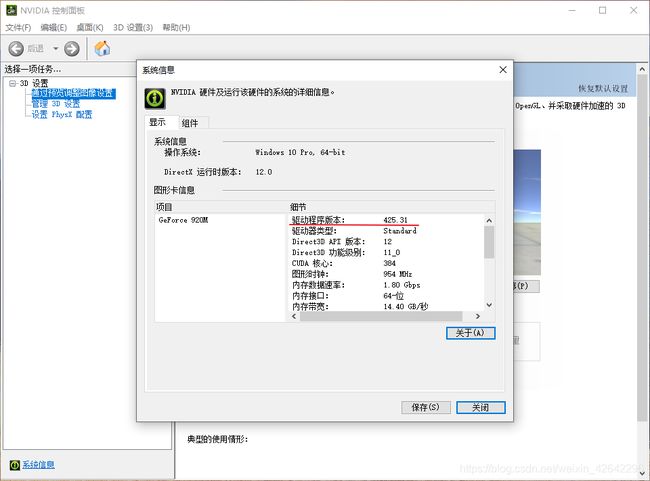

My CUDA version: cuda_11.1.1_456.81_win10

My cuDNN version: cuDNN v8.0.4 (September 28th, 2020), for CUDA 11.1

CUDA download: https://developer.nvidia.com/cuda-toolkit-archive

cuDNN download (registration and login required): https://developer.nvidia.com/rdp/cudnn-archive

As far as I could tell, these versions matched each other.

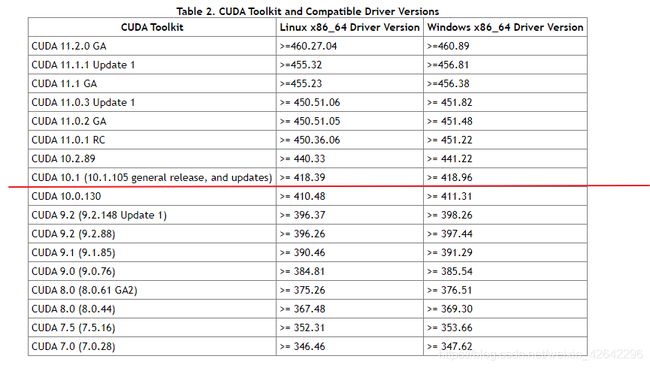

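After searching online for a while, though, I began to suspect the versions after all. I found a version compatibility table and guessed that my setup might not be suited to CUDA 11.

So I uninstalled CUDA (through Control Panel, then deleted the old installation folder), installed CUDA 10.1 and cuDNN 8 instead, and updated the environment variables. Importing TensorFlow then produced the following message: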

In [1]: import tensorflow as tf

2021-01-13 16:02:54.399456: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found

2021-01-13 16:02:54.399792: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

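Judging by the message, I should have been using CUDA 11 after all, but the CUDA 11 I had just installed never worked, and I did not know which CUDA version TensorFlow 2.4 requires. So I reinstalled TensorFlow, this time version 2.3.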

TensorFlow and CUDA version compatibility table: https://tensorflow.google.cn/install/source_windows#tensorflow_2x
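For quick reference, the two rows of that table that matter in this post can be written as a small lookup. The values are transcribed from the tested build configurations for the GPU packages (2.4 with CUDA 11.0 and cuDNN 8.0, 2.3 with CUDA 10.1 and cuDNN 7.6); treat them as an assumption and verify them against the page itself. The names `TESTED_CONFIGS` and `required_cuda` are hypothetical.

```python
# Rows transcribed from the tested-configuration table (assumed; verify against the page).
TESTED_CONFIGS = {
    "2.4": {"cuda": "11.0", "cudnn": "8.0"},
    "2.3": {"cuda": "10.1", "cudnn": "7.6"},
}


def required_cuda(tf_version):
    """Look up the CUDA/cuDNN pair for a TensorFlow version string such as '2.3.1'."""
    major_minor = ".".join(tf_version.split(".")[:2])
    # Returns None for versions that are not in this two-row excerpt.
    return TESTED_CONFIGS.get(major_minor)


print(required_cuda("2.3.0"))
print(required_cuda("2.4.0"))
```

Read this way, the CUDA 10.1 plus cuDNN 8 combination tried above matches neither row.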

Importing tf again now gave:

In [1]: import tensorflow as tf

2021-01-13 16:17:24.786482: W tensorflow/stream_executor/platform/default/dso_loader.cc:59] Could not load dynamic library 'cudart64_101.dll'; dlerror: cudart64_101.dll not found

2021-01-13 16:17:24.786734: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.
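The DLL name in these messages is the most useful clue, since it tells you which library file the installed TensorFlow build is looking for. A minimal sketch that pulls those names out of a captured log, assuming the message format shown above; `missing_libraries` is a hypothetical helper, not part of TensorFlow.

```python
import re

# Matches the loader message format seen in the logs above.
MISSING_DLL = re.compile(r"Could not load dynamic library '([^']+)'")


def missing_libraries(log_text):
    """Return the library names reported as not loadable, in order, without duplicates."""
    seen = []
    for name in MISSING_DLL.findall(log_text):
        if name not in seen:
            seen.append(name)
    return seen


log = """\
2021-01-13 16:17:24.786482: W tensorflow/stream_executor/platform/default/dso_loader.cc:59] Could not load dynamic library 'cudart64_101.dll'; dlerror: cudart64_101.dll not found
2021-01-13 16:17:24.786734: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.
"""
print(missing_libraries(log))  # ['cudart64_101.dll']
```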

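The request for cudart64_101.dll shows that CUDA 10.1 is the right version for this build. Oddly, though, cudart64_101.dll was present in the expected directory and the environment variables were correct. After a reboot, tf imported without complaint, but the test then produced a new error: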

Could not load dynamic library 'cudnn64_7.dll'; dlerror: cudnn64_7.dll not found

2021-01-13 16:24:42.757869: W tensorflow/core/common_runtime/gpu/gpu_device.cc:1753] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform.

Skipping registering GPU devices...

2021-01-13 16:24:42.858065: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1257] Device interconnect StreamExecutor with strength 1 edge matrix:

2021-01-13 16:24:42.858269: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1263] 0

2021-01-13 16:24:42.859765: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1276] 0: N

2021-01-13 16:24:42.868797: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x1954aacca00 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:

2021-01-13 16:24:42.868991: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): GeForce 920M, Compute Capability 3.5

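I had installed cuDNN v8.0.4 (September 28th, 2020), for CUDA 10.1, so this error makes sense: TensorFlow 2.3 looks for cudnn64_7.dll, which belongs to cuDNN 7. I downloaded cuDNN v7.6.5 (November 5th, 2019), for CUDA 10.1, copied its files over, and ran tf.test.is_gpu_available() again. This time it finally returned True.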
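The earlier puzzle (the DLL was on disk and the environment variable was set, yet it was only found after a reboot) is consistent with a process inheriting its environment at launch: a terminal opened before PATH was edited keeps the old value. The sketch below checks which PATH directories of the current process contain a given file. It assumes the loader consults PATH, which is how the environment-variable setup in this post works; `find_on_path` is a hypothetical helper.

```python
import os


def find_on_path(filename, path=None):
    """Return every directory on PATH (or on the given path string) that contains `filename`."""
    if path is None:
        path = os.environ.get("PATH", "")
    hits = []
    for directory in path.split(os.pathsep):
        # Skip empty entries produced by stray separators.
        if directory and os.path.isfile(os.path.join(directory, filename)):
            hits.append(directory)
    return hits


for dll in ("cudart64_101.dll", "cudnn64_7.dll"):
    print(dll, find_on_path(dll))
```

If this prints an empty list from the same session that fails to import, opening a fresh terminal (or rebooting, as was done here) is worth trying before reinstalling anything.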

Occasionally, though, it fails with the following error, and I don't know why:

In [3]: tf.test.is_gpu_available()

2021-01-13 16:37:43.104996: F tensorflow/stream_executor/lib/statusor.cc:34] Attempting to fetch value instead of handling error Internal: failed to get device attribute 13 for device 0: CUDA_ERROR_UNKNOWN: unknown error

Problem solved!

This ended up costing me another whole day. Getting these versions to match is a real hassle.

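I drew on these two blog posts:

"A detailed tutorial on installing TensorFlow-gpu 2.2: every painful pitfall (guaranteed to work)" (安装TensorFlow-gpu 2.2的详细教程:血泪巨坑(包教包会))

"Fixing tf.test.is_gpu_avaiable() returning false when testing TensorFlow GPU" (tensorflow GPU测试tf.test.is_gpu_avaiable()返回false解决方法)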

Finally, the following code gives a rough measure of the GPU speedup (even my 920M shows some benefit):

    import tensorflow as tf
    import timeit

    # Create the operands on the CPU.
    with tf.device('/cpu:0'):
        cpu_a = tf.random.normal([10000, 1000])
        cpu_b = tf.random.normal([1000, 2000])
        print(cpu_a.device, cpu_b.device)

    # Create the operands on the GPU.
    with tf.device('/gpu:0'):
        gpu_a = tf.random.normal([10000, 1000])
        gpu_b = tf.random.normal([1000, 2000])
        print(gpu_a.device, gpu_b.device)

    def cpu_run():
        with tf.device('/cpu:0'):
            c = tf.matmul(cpu_a, cpu_b)
        return c

    def gpu_run():
        with tf.device('/gpu:0'):
            c = tf.matmul(gpu_a, gpu_b)
        return c

    # Warm up: think of this first pass as letting the GPU stretch first.
    cpu_time = timeit.timeit(cpu_run, number=10)
    gpu_time = timeit.timeit(gpu_run, number=10)
    print('warmup:', cpu_time, gpu_time)

    # Timed run.
    cpu_time = timeit.timeit(cpu_run, number=10)
    gpu_time = timeit.timeit(gpu_run, number=10)
    print('run time:', cpu_time, gpu_time)

Output:

...

warmup: 3.0217729 0.33188580000000023

run time: 2.979343599999999 0.000807700000001077
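The GPU figure in the run time line deserves a sanity check. The arithmetic below converts both timings into implied throughput, using the usual estimate of about 2·m·k·n floating-point operations for an (m × k) by (k × n) matrix product. It works out to roughly 0.13 TFLOP/s for the CPU and close to 500 TFLOP/s for the GPU. The second number is far beyond what a GeForce 920M can deliver, which suggests the timed loop mostly measures how long it takes to queue the work rather than to finish it. That is an interpretation, not something the output proves. Forcing the result back to the host, for example by calling `.numpy()` on the returned tensor inside `gpu_run`, would likely give a more realistic number.

```python
# Shapes and timings copied from the benchmark and its output above.
m, k, n = 10000, 1000, 2000
runs = 10
cpu_seconds = 2.979343599999999
gpu_seconds = 0.000807700000001077

# A dense (m x k) @ (k x n) product needs about 2*m*k*n floating-point operations.
flops_total = 2 * m * k * n * runs


def tflops(seconds):
    """Implied throughput in TFLOP/s for the whole timed loop."""
    return flops_total / seconds / 1e12


print(f"CPU: {tflops(cpu_seconds):.3f} TFLOP/s")
print(f"GPU: {tflops(gpu_seconds):.1f} TFLOP/s")
```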