Installing and Setting Up a Kubernetes 1.18.3 Cluster

Kubernetes Cluster Installation

Follow the steps in order and you should avoid the usual pitfalls.

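- Kubernetes Cluster Installation
  - I. Docker Preparation
    - 1. Set the hostname: hostnamectl set-hostname k8s-master / k8s-nodes1 / k8s-nodes2
    - 2. Edit the hosts file: vim /etc/hosts
    - 3. Install Docker
    - 4. Configure a registry mirror
  - II. Kubernetes Preparation
    - 1. Configure the Aliyun Kubernetes repository
    - 2. Install the Kubernetes components
    - 3. Upgrade the kernel
    - 4. Kubernetes prerequisites
    - 5. Disable the firewall
      - If the firewall stays enabled, open the following ports
        - Master node
        - Worker node
    - 6. Start Docker and set the kernel parameters it requires
    - 6-2. Kernel tuning parameters (source: 硅谷教育)
    - 7. Disable SELinux
    - 8. Enable Tab completion for kubectl and kubeadm
  - III. Building the Cluster
    - 1. Configure the Aliyun Kubernetes repository
    - 2. Install the Kubernetes components
    - 3. Start kubelet
    - 4. Pull the required images
    - 5. Install the flannel network plugin
    - 6. Initialize the master node
    - 7. Join the worker nodes to the cluster
    - 8. Change the Docker cgroup driver to the recommended systemd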

I. Docker Preparation

Four CentOS 7.7 machines are used:

| Hostname | IP address | Hardware / role |
|---|---|---|
| k8s-master | 192.168.168.11 | 2 CPU cores, 4 GB RAM |
| k8s-node1 | 192.168.168.12 | 2 CPU cores, 4 GB RAM |
| k8s-node2 | 192.168.168.13 | 2 CPU cores, 4 GB RAM |
| Harbor | 192.168.168.14 | Private registry |

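1. Set the hostname on each machine, using that machine's own name: hostnamectl set-hostname k8s-master (k8s-nodes1 / k8s-nodes2 on the worker nodes)

2. Edit the hosts file (vim /etc/hosts), add the entries below, then copy the file to the other nodes: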

192.168.168.11 k8s-master

192.168.168.12 k8s-nodes1

192.168.168.13 k8s-nodes2

scp /etc/hosts [email protected]:/etc/hosts

scp /etc/hosts [email protected]:/etc/hosts
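
The hosts entries can also be added without opening an editor. The following is a minimal sketch, not part of the original steps: `add_host_entry` is a hypothetical helper name, and its optional third argument lets you try it on a scratch file before touching /etc/hosts. It skips a name that is already present, so re-running it does not duplicate lines.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already listed.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # -w: whole-word match, -F: treat the name as a fixed string, not a regex
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example (as root), using the addresses from this guide:
#   add_host_entry 192.168.168.11 k8s-master
#   add_host_entry 192.168.168.12 k8s-nodes1
#   add_host_entry 192.168.168.13 k8s-nodes2
```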

3. Install Docker

cd /etc/yum.repos.d
vi ali-docker.repo

[ali.docker]
name=mirrors.aliyun.com.docker
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

yum -y install docker-ce

4. Configure a registry mirror (image accelerator)

mkdir -p /etc/docker
tee /etc/docker/daemon.json <<-'EOF'
{
"registry-mirrors": ["https://m41udh8b.mirror.aliyuncs.com"]
}
EOF

II. Kubernetes Preparation

1. Configure the Aliyun Kubernetes repository

vim /etc/yum.repos.d/ali-k8s.repo

[aliyun]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

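scp /etc/yum.repos.d/ali-k8s.repo root@k8s-nodes1:/etc/yum.repos.d/
scp /etc/yum.repos.d/ali-k8s.repo root@k8s-nodes2:/etc/yum.repos.d/

2. Install the Kubernetes components

yum -y install kubelet kubeadm kubectl

This installs the newest packages in the repository. To match the v1.18.3 images used later in this guide, the version can be pinned instead, for example: yum -y install kubelet-1.18.3 kubeadm-1.18.3 kubectl-1.18.3

- kubeadm: the command that bootstraps the cluster.
- kubelet: the component that runs on every machine in the cluster and does things such as starting Pods and containers.
- kubectl: the command-line tool for talking to your cluster.

Once the packages are installed, do not start or configure anything yet.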

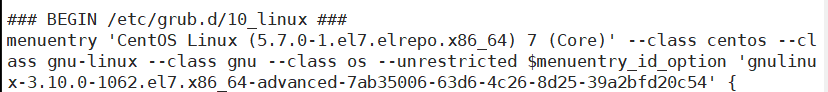

3. Upgrade the kernel

ELRepo website: http://elrepo.org/tiki/tiki-index.php

On all nodes, install the elrepo.org repository:

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
yum install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm

On all nodes, list the kernels available from the elrepo-kernel repository:

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available --showduplicates

The output includes entries such as:

kernel-ml.x86_64 5.6.15-1.el7.elrepo elrepo-kernel
kernel-ml.x86_64 5.7.0-1.el7.elrepo elrepo-kernel

On all nodes, install the latest kernel-ml kernel:

yum --enablerepo="elrepo-kernel" -y install kernel-ml.x86_64

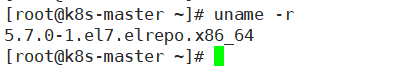

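On all nodes, check that the latest Linux kernel was installed (for example with rpm -qa | grep kernel).

Make GRUB menu entry 0 the default boot entry and regenerate grub.cfg. The new kernel takes effect after a reboot.

grub2-set-default 0
grub2-mkconfig -o /boot/grub2/grub.cfg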

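4. Kubernetes prerequisites

- MAC addresses must be unique across the cluster: cat /sys/class/net/ens33/address
- The product UUID must be unique across the cluster: cat /sys/class/dmi/id/product_uuid
- Swap must be turned off: swapoff -a ; cp /etc/fstab /etc/fstab_bak
- Comment out the /dev/mapper/centos-swap swap entry so that swap stays off after a reboot (it is on line 11 of the author's fstab; adjust the line number to match yours): sed -ri '11 s/^#*/#/' /etc/fstab
- Set the swap-related kernel parameter: echo "vm.swappiness = 0" >> /etc/sysctl.conf ; sysctl -p
- Verify that swap is off: free -m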
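
The sed command in the list above addresses a fixed line number, which only works if your fstab has the same layout as the author's. As a rough alternative, the sketch below matches the swap entry by its type field instead. `disable_swap_entries` is a hypothetical helper name, and the code assumes GNU sed (the default on CentOS).

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Usage: disable_swap_entries [FILE]   (FILE defaults to /etc/fstab)
disable_swap_entries() {
    local fstab="${1:-/etc/fstab}"
    # Keep a backup next to the file, as the manual step does
    cp "$fstab" "${fstab}_bak"
    # Prefix '#' on lines that are not already comments and contain a "swap" field
    sed -ri '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Example (as root): swapoff -a && disable_swap_entries
```

Because commented lines no longer match the pattern, running the function twice leaves the file unchanged the second time.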

5. Disable the firewall

systemctl disable firewalld ; systemctl stop firewalld

If you keep the firewall enabled, open the following ports.

Master node

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 6443 | Kubernetes API server | All |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

Worker node

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services (default range) | All |

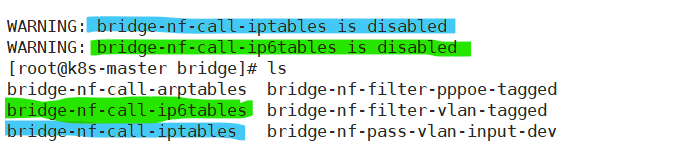
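
If firewalld stays enabled, the ports can be opened with firewall-cmd. The sketch below is an illustration rather than part of the original guide: `print_firewall_cmds` is a hypothetical helper that only prints the commands for a given role, so they can be reviewed before being run as root. It uses 6443 for the API server, the port that appears in the kubeadm join command later in this guide.

```shell
# Hypothetical helper: print the firewall-cmd calls for one role ("master" or "node").
# Nothing is executed; pipe the output to `sh` as root to apply it.
print_firewall_cmds() {
    local role="$1" ports port
    case "$role" in
        master) ports="6443 2379-2380 10250 10251 10252" ;;
        node)   ports="10250 30000-32767" ;;
        *) echo "usage: print_firewall_cmds master|node" >&2; return 1 ;;
    esac
    for port in $ports; do
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
    done
    # Permanent rules only become active after a reload
    echo "firewall-cmd --reload"
}

# Example: print_firewall_cmds master
```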

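6. Start Docker and set the kernel parameters it requires

systemctl start docker; systemctl enable docker

Enable the bridge settings that live under /proc/sys/net/bridge/; docker info prints warnings while they are disabled.

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

Run sysctl -p to apply the settings. If they do not take effect, load the following two kernel modules and run sysctl -p again: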

modprobe ip_vs_rr

modprobe br_netfilter
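
Appending with echo adds a duplicate line every time the step is repeated. A small sketch of an idempotent variant follows; `set_sysctl_line` is a hypothetical helper name, and the optional third argument is there only so the function can be tried on a scratch file. Note that the net.bridge.* keys exist only once the br_netfilter module is loaded, which is why the modprobe step can be needed before sysctl -p succeeds.

```shell
# Hypothetical helper: add "KEY = VALUE" to a sysctl-style file only if that exact line is missing.
# Usage: set_sysctl_line KEY VALUE [FILE]   (FILE defaults to /etc/sysctl.conf)
set_sysctl_line() {
    local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
    local line="$key = $value"
    # -x: match the whole line, -F: fixed string (dots are not regex wildcards)
    grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Example (as root):
#   modprobe br_netfilter
#   set_sysctl_line net.bridge.bridge-nf-call-iptables 1
#   set_sysctl_line net.bridge.bridge-nf-call-ip6tables 1
#   sysctl -p
```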

6-2. Kernel tuning parameters (source: 硅谷教育)

vi /etc/sysctl.conf

# Required parameters

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

# Optional tuning parameters

vm.panic_on_oom = 0

fs.inotify.max_user_instances = 8192

fs.inotify.max_user_watches = 1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6 = 1

7. Disable SELinux

sed -ri 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

8. Enable Tab completion for kubectl and kubeadm

yum -y install bash-completion

source <(kubectl completion bash) && source <(kubeadm completion bash)

vim ~/.bashrc

Append at the end:

source <(kubeadm completion bash)
source <(kubectl completion bash)

III. Building the Cluster

1. Configure the Aliyun Kubernetes repository

vim /etc/yum.repos.d/ali-k8s.repo

[aliyun]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

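scp /etc/yum.repos.d/ali-k8s.repo root@k8s-nodes1:/etc/yum.repos.d/
scp /etc/yum.repos.d/ali-k8s.repo root@k8s-nodes2:/etc/yum.repos.d/

2. Install the Kubernetes components

yum -y install kubelet kubeadm kubectl

- kubeadm: the command that bootstraps the cluster.
- kubelet: the component that runs on every machine in the cluster and does things such as starting Pods and containers.
- kubectl: the command-line tool for talking to your cluster.

3. Start kubelet

systemctl start kubelet ; systemctl enable kubelet

kubelet will not start successfully at this point: it keeps restarting until kubeadm init has generated its configuration. That is expected, so carry on with the next step.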

4. Pull the required images

List the images that kubeadm needs:

[root@k8s-master ~]# kubeadm config images list

W0602 20:06:30.250926 3917 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

k8s.gcr.io/kube-apiserver:v1.18.3

k8s.gcr.io/kube-controller-manager:v1.18.3

k8s.gcr.io/kube-scheduler:v1.18.3

k8s.gcr.io/kube-proxy:v1.18.3

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.7

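Because the k8s.gcr.io registry cannot be reached from mainland China, pull the images from the daocloud.io registry first and then retag them.

On all nodes (k8s-master, k8s-node1, k8s-node2), pull the images the cluster needs:

# Pull the images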

docker pull daocloud.io/daocloud/kube-apiserver:v1.18.3

docker pull daocloud.io/daocloud/kube-controller-manager:v1.18.3

docker pull daocloud.io/daocloud/kube-scheduler:v1.18.3

docker pull daocloud.io/daocloud/kube-proxy:v1.18.3

docker pull daocloud.io/daocloud/pause:3.2

docker pull daocloud.io/daocloud/etcd:3.4.3-0

docker pull daocloud.io/daocloud/coredns:1.6.7

# Retag the images

docker tag daocloud.io/daocloud/kube-apiserver:v1.18.3 k8s.gcr.io/kube-apiserver:v1.18.3

docker tag daocloud.io/daocloud/kube-controller-manager:v1.18.3 k8s.gcr.io/kube-controller-manager:v1.18.3

docker tag daocloud.io/daocloud/kube-scheduler:v1.18.3 k8s.gcr.io/kube-scheduler:v1.18.3

docker tag daocloud.io/daocloud/kube-proxy:v1.18.3 k8s.gcr.io/kube-proxy:v1.18.3

docker tag daocloud.io/daocloud/pause:3.2 k8s.gcr.io/pause:3.2

docker tag daocloud.io/daocloud/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag daocloud.io/daocloud/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7

# Remove the original mirror tags

docker rmi daocloud.io/daocloud/kube-apiserver:v1.18.3

docker rmi daocloud.io/daocloud/kube-controller-manager:v1.18.3

docker rmi daocloud.io/daocloud/kube-scheduler:v1.18.3

docker rmi daocloud.io/daocloud/kube-proxy:v1.18.3

docker rmi daocloud.io/daocloud/pause:3.2

docker rmi daocloud.io/daocloud/etcd:3.4.3-0

docker rmi daocloud.io/daocloud/coredns:1.6.7

docker images
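
The pull, tag, and cleanup commands above repeat the same three steps for seven images, so they can be expressed as a loop. This is a minimal sketch under the assumption that the mirror hosts all seven tags listed by kubeadm config images list; `mirror_k8s_images`, `K8S_IMAGES`, and `DRY_RUN` are hypothetical names introduced here for illustration.

```shell
# Image names and tags as listed by `kubeadm config images list` for v1.18.3
K8S_IMAGES="kube-apiserver:v1.18.3 kube-controller-manager:v1.18.3 kube-scheduler:v1.18.3
kube-proxy:v1.18.3 pause:3.2 etcd:3.4.3-0 coredns:1.6.7"

# Hypothetical helper: pull each image from the mirror, retag it under k8s.gcr.io,
# then remove the mirror tag. Set DRY_RUN=1 to print the docker commands instead of running them.
mirror_k8s_images() {
    local mirror="${1:-daocloud.io/daocloud}" run="" image
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    for image in $K8S_IMAGES; do
        $run docker pull "$mirror/$image" &&
        $run docker tag "$mirror/$image" "k8s.gcr.io/$image" &&
        $run docker rmi "$mirror/$image" || return 1
    done
}

# Example: DRY_RUN=1 mirror_k8s_images    (review the commands first)
#          mirror_k8s_images && docker images
```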

5. Install the flannel network plugin

flannel image repository: https://quay.io/repository/coreos/flannel?tab=tags

On all nodes, pull the flannel image:

docker pull quay.io/coreos/flannel:v0.12.0-amd64

On all nodes, create the flannel CNI configuration file:

[root@k8s-master ~]# mkdir -p /etc/cni/net.d
[root@k8s-master ~]# vi /etc/cni/net.d/10-flannel.conf
{
  "name": "cbr0",
  "type": "flannel",
  "delegate": {
    "isDefaultGateway": true
  }
}
[root@k8s-master ~]# scp /etc/cni/net.d/10-flannel.conf root@k8s-nodes2:/etc/cni/net.d/10-flannel.conf

On all nodes, create the flannel subnet file:

mkdir -p /usr/share/oci-umount/oci-umount.d
mkdir -p /run/flannel
vim /run/flannel/subnet.env

Enter the following content:

# Network range
FLANNEL_NETWORK=172.20.0.0/16
# Subnet
FLANNEL_SUBNET=172.20.1.0/24
# Maximum transmission unit
FLANNEL_MTU=1450
# Allow outbound access (IP masquerading)
FLANNEL_IPMASQ=true

scp /run/flannel/subnet.env root@k8s-nodes1:/run/flannel/subnet.env
scp /run/flannel/subnet.env root@k8s-nodes2:/run/flannel/subnet.env

On all nodes, restart kubelet and docker:

[root@k8s-master ~]# systemctl daemon-reload && systemctl restart kubelet && systemctl restart docker

6. Initialize the master node

[root@k8s-master ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 \
--apiserver-advertise-address 192.168.168.11 \
--kubernetes-version v1.18.3

--pod-network-cidr=10.244.0.0/16: the fixed network range used by flannel

--apiserver-advertise-address 192.168.168.11: the address the API server advertises

--kubernetes-version v1.18.3: the Kubernetes version to install

The output looks like this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

#===== Important: run the following three commands =====

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

#==================================

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.168.11:6443 --token g6ycg6.4fde7i4qvdy7rjp8 \

--discovery-token-ca-cert-hash sha256:d73b2aa8037d91de782eca82ef926c3ec2c58812a53efdce1a56d21bcd6ba063

[root@k8s-master ~]# mkdir -p $HOME/.kube
[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

1. Download the flannel manifest

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The flannel network plugin is installed from the master node only.

2. Apply the YAML manifest to install the plugin

kubectl apply -f kube-flannel.yml


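3. Check the pod status

kubectl get pod --all-namespaces

If a pod reports Init:ImagePullBackOff, an image for its init container could not be pulled on that node; check that the flannel image from step 5 is present there.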

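7. Join the worker nodes to the cluster

On each worker node, run the join command printed by kubeadm init:

kubeadm join 192.168.168.11:6443 --token g6ycg6.4fde7i4qvdy7rjp8 \
--discovery-token-ca-cert-hash sha256:d73b2aa8037d91de782eca82ef926c3ec2c58812a53efdce1a56d21bcd6ba063

On the master, check the state of the cluster nodes with kubectl get nodes: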

[root@k8s-master kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 29m v1.18.3

k8s-node1 Ready <none> 24m v1.18.3

k8s-node2 Ready <none> 24m v1.18.3

A node has joined successfully only when its STATUS is Ready.
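
The Ready check can be scripted rather than read by eye. The sketch below is only an illustration: `all_nodes_ready` is a hypothetical helper that reads kubectl get nodes output on standard input and inspects the second column (STATUS) of every row after the header.

```shell
# Hypothetical helper: succeed only if every node listed on stdin has STATUS "Ready".
# Fails on empty input or when only the header line is present.
all_nodes_ready() {
    awk 'NR > 1 && $2 != "Ready" { print "not ready: " $1; bad = 1 }
         END { exit (bad || NR < 2) }'
}

# Example (on the master): kubectl get nodes | all_nodes_ready && echo "all nodes are Ready"
```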

8. Change the Docker cgroup driver to the recommended systemd

Official guide: https://kubernetes.io/docs/setup/cri/

[root@k8s-master ~]# vim /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}
[root@k8s-master ~]# mkdir -p /etc/systemd/system/docker.service.d

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl restart docker
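
Writing the daemon.json shown in this step as-is would replace the file created in section I, step 4, and with it the registry mirror entry. One way to keep both is to write a combined file. The sketch below assumes the mirror URL and the options from this guide; `write_docker_daemon_json` is a hypothetical helper, and its optional argument lets you write to a scratch path first.

```shell
# Hypothetical helper: write a daemon.json that keeps the registry mirror from section I
# and adds the systemd cgroup driver settings from this step.
# Usage: write_docker_daemon_json [FILE]   (FILE defaults to /etc/docker/daemon.json)
write_docker_daemon_json() {
    local file="${1:-/etc/docker/daemon.json}"
    mkdir -p "$(dirname "$file")"
    cat > "$file" <<'EOF'
{
  "registry-mirrors": ["https://m41udh8b.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
}

# Example (as root):
#   write_docker_daemon_json
#   systemctl daemon-reload && systemctl restart docker
#   docker info | grep -i cgroup     # should report the systemd driver
```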