Regression Prediction | PSO-BP Multi-Input Multi-Output in MATLAB


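This program implements multi-input multi-output (MIMO) regression prediction in MATLAB with a PSO-BP model, using 3 inputs and 2 outputs. Particle swarm optimization (PSO) searches for the initial weights and biases (thresholds) of a BP neural network. The best particle is then loaded into the network, which is fine-tuned with Levenberg-Marquardt training (trainlm) and evaluated on a held-out test set.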
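The quantities the main program works with are summarized below for reference. Each particle encodes every weight and bias of the 3-6-2 network, so its length $D$ equals `numsum` in the code. The velocity update mirrors the code: there is no inertia weight, and each term uses one uniform random factor per particle. The position update is written in the standard PSO form, which is an assumption here. $\mathbf{p}_j$ corresponds to the code's `gbest(j,:)` (personal best) and $\mathbf{g}$ to `zbest` (global best), with $c_1 = c_2 = 1.49445$.

$$
D = n_{\mathrm{in}}\,n_{\mathrm{h}} + n_{\mathrm{h}} + n_{\mathrm{h}}\,n_{\mathrm{out}} + n_{\mathrm{out}} = 3\cdot 6 + 6 + 6\cdot 2 + 2 = 38
$$

$$
\mathbf{v}_j \leftarrow \mathbf{v}_j + c_1 r_1 (\mathbf{p}_j - \mathbf{x}_j) + c_2 r_2 (\mathbf{g} - \mathbf{x}_j),
\qquad
\mathbf{x}_j \leftarrow \mathbf{x}_j + \mathbf{v}_j,
\qquad r_1, r_2 \sim U(0,1)
$$

$$
F(\mathbf{x}) = \sum_{k} \left| \hat{y}_k(\mathbf{x}) - y_k \right|
$$

The velocities are clipped to $[-1, 1]$ and the positions to $[-5, 5]$. $F$ is the fitness computed in `fun`: the sum of absolute errors over all normalized training outputs, taken after the network initialized from $\mathbf{x}$ has been trained.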

The main program is as follows:

%% Clear the command window and workspace variables

clc

clear

%% Load the sample set

load dataOTH input output

%% Number of nodes in each layer

inputnum=3;

hiddennum=6;

outputnum=2;

numsum=inputnum*hiddennum+hiddennum+hiddennum*outputnum+outputnum;

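%Split into training (samples 1-72) and test (samples 73-108) sets; after transposing, each column is one sample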

input_train=input(1:72,:)';

input_test=input(73:108,:)';

output_train=output(1:72,1:2)';

output_test=output(73:108,1:2)';

%Normalize the data (mapminmax scales each row to [-1,1] by default)

[inputn,inputps]=mapminmax(input_train);

[outputn,outputps]=mapminmax(output_train);

%% Build the network

net=newff(inputn,outputn,hiddennum);

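%Parameter initialization

%PSO acceleration coefficients (c1: cognitive, c2: social)

c1 = 1.49445;

c2 = 1.49445;

%Number of generations

maxgen=20;

%Swarm size

sizepop=20;

%Bounds on particle velocities and positions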

Vmax=1;

Vmin=-1;

popmax=5;

popmin=-5;

%Initialize each particle: position in [-5,5], velocity in [-1,1], then evaluate its fitness

for i=1:sizepop

pop(i,:)=5*rands(1,numsum);

V(i,:)=rands(1,numsum);

fitness(i)=fun(pop(i,:),inputnum,hiddennum,outputnum,net,inputn,outputn);

end

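%Find the personal bests and the global best

[bestfitness,bestindex]=min(fitness);

%Global best position (stored in zbest)

zbest=pop(bestindex,:);

%Personal best positions (stored in gbest, one row per particle)

gbest=pop;

%Personal best fitness values

fitnessgbest=fitness;

%Global best fitness value

fitnesszbest=bestfitness;

%% Iterative optimization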

for i=1:maxgen


for j=1:sizepop

%Velocity update

V(j,:) = V(j,:) + c1*rand*(gbest(j,:) - pop(j,:)) + c2*rand*(zbest - pop(j,:));

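V(j,find(V(j,:)>Vmax))=Vmax;

V(j,find(V(j,:)<Vmin))=Vmin;

%Position update, then clamp to [popmin,popmax]

pop(j,:)=pop(j,:)+V(j,:);

pop(j,find(pop(j,:)>popmax))=popmax;

pop(j,find(pop(j,:)<popmin))=popmin;

%Mutation: with probability 0.05, re-initialize one randomly chosen dimension in [-5,5]

pos=randi(numsum);

if rand>0.95

pop(j,pos)=5*rands(1,1);

end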

%Evaluate the particle's fitness

fitness(j)=fun(pop(j,:),inputnum,hiddennum,outputnum,net,inputn,outputn);

end

for j=1:sizepop

%Personal best update

if fitness(j) < fitnessgbest(j)

gbest(j,:) = pop(j,:);

fitnessgbest(j) = fitness(j);

end

%Global best update

if fitness(j) < fitnesszbest

zbest = pop(j,:);

fitnesszbest = fitness(j);

end

end

yy(i)=fitnesszbest;

end

x=zbest;

%% Decode the best particle into the network's weights and biases

w1=x(1:inputnum*hiddennum);

B1=x(inputnum*hiddennum+1:inputnum*hiddennum+hiddennum);

w2=x(inputnum*hiddennum+hiddennum+1:inputnum*hiddennum+hiddennum+hiddennum*outputnum);

B2=x(inputnum*hiddennum+hiddennum+hiddennum*outputnum+1:inputnum*hiddennum+hiddennum+hiddennum*outputnum+outputnum);

net.iw{1,1}=reshape(w1,hiddennum,inputnum);

net.lw{2,1}=reshape(w2,outputnum,hiddennum);

net.b{1}=reshape(B1,hiddennum,1);

net.b{2}=reshape(B2,outputnum,1);

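%% Fine-tune the PSO-initialized network with BP training

%Set trainFcn first: assigning net.trainFcn resets net.trainParam to that function's defaults

net.trainFcn='trainlm';

%trainlm (Levenberg-Marquardt) has no learning-rate parameter, so no lr is set

net.trainParam.epochs=1000;

net.trainParam.goal=0.00001;

net.trainParam.max_fail=6;

net.trainParam.min_grad=1e-15;

net.trainParam.showWindow=1;

[net,per2]=train(net,inputn,outputn);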

inputn_test=mapminmax('apply',input_test,inputps);

an=sim(net,inputn_test);

test_simu=mapminmax('reverse',an,outputps);

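%Prediction error (named err to avoid shadowing MATLAB's built-in error function)

err=test_simu-output_test;

%Relative error of each test prediction (plotted as a percentage in the error analysis)

variance=err./output_test;

%MSE and mean signed error for output 1 and output 2

mseindepth=mse(err(1,:));

avgerrorindepth=mean(err(1,:));

mseinra1=mse(err(2,:));

avgerrorinra1=mean(err(2,:));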

%% Data visualization

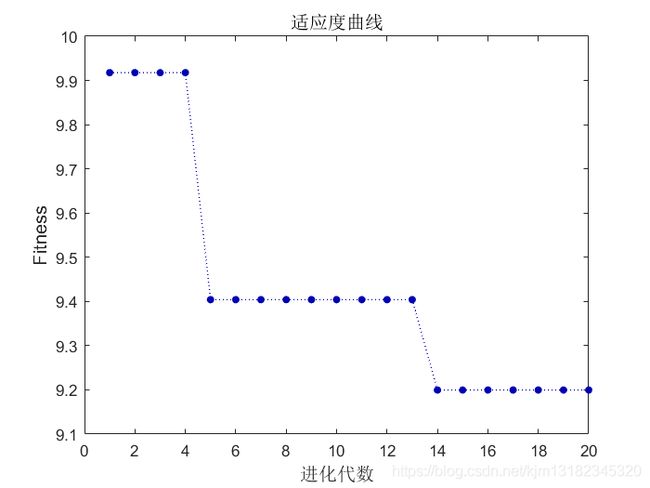

figure(1)

plot(yy,':o','Color',[0 0 180]./255,'linewidth',0.8,'Markersize',4,'MarkerFaceColor',[0 0 180]./255)

title('Fitness curve','fontsize',12)

xlabel('Generation','fontsize',12);ylabel('Fitness','fontsize',12);

figure(2)

plot(test_simu(1,:),'r:o','Color',[255 0 0]./255,'linewidth',0.8,'Markersize',4,'MarkerFaceColor',[255 0 0]./255)

hold on

plot(output_test(1,:),'k-s','Color',[0 0 0]./255,'linewidth',0.8,'Markersize',5,'MarkerFaceColor',[0 0 0]./255);

legend('Predicted','Actual')

title('BP network prediction, output 1','fontsize',12)

ylabel('Output value','fontsize',12)

xlabel('Test sample','fontsize',12);

figure(3)

plot(test_simu(2,:),'b:o','Color',[0 0 255]./255,'linewidth',0.8,'Markersize',4,'MarkerFaceColor',[0 0 255]./255)

hold on

plot(output_test(2,:),'k-s','Color',[0 0 0]./255,'linewidth',0.8,'Markersize',5,'MarkerFaceColor',[0 0 0]./255);

legend('Predicted','Actual')

title('BP network prediction, output 2','fontsize',12)

ylabel('Output value','fontsize',12)

xlabel('Test sample','fontsize',12);

%% Error analysis

figure(4)

plot(variance(1,:)*100,'b-o','Color',[255 0 255]./255,'linewidth',0.8,'Markersize',4,'MarkerFaceColor',[255 0 255]./255);

title('Relative prediction error, output 1','fontsize',12)

ylabel('Percentage error (%)','fontsize',12)

xlabel('Test sample','fontsize',12);

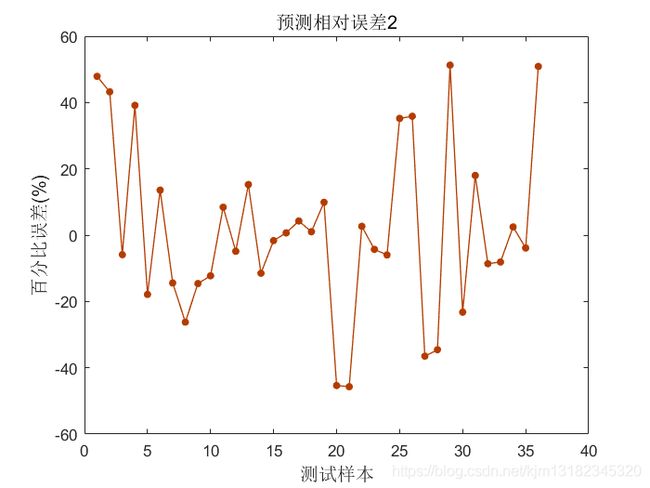

figure(5)

plot(variance(2,:)*100,'b-o','Color',[180 60 0]./255,'linewidth',0.8,'Markersize',4,'MarkerFaceColor',[180 60 0]./255);

title('Relative prediction error, output 2','fontsize',12)

ylabel('Percentage error (%)','fontsize',12)

xlabel('Test sample','fontsize',12);
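Running the main program requires the Neural Network Toolbox (newff, train, rands) and the dataOTH data file. To see the optimizer in isolation, here is a minimal base-MATLAB sketch of the same PSO loop with the network fitness swapped for a toy sphere function. The objective, the 200 generations, the random seed and the plain position update x = x + v are assumptions for illustration. The coefficients, the bounds and the 0.95 mutation threshold come from the listing. The names pbest and gbest follow the usual PSO convention; they correspond to the listing's gbest and zbest.

```matlab
% Toy setup (assumptions): sphere objective, 38 dimensions like numsum, fixed seed
rng(1);
f = @(x) sum(x.^2, 2);               % minimum 0 at the origin; one row per particle
D = 38;
sizepop = 20; maxgen = 200;
c1 = 1.49445; c2 = 1.49445;          % same acceleration coefficients as the article
Vmax = 1; Vmin = -1; popmax = 5; popmin = -5;

% Initialization: positions in [-5,5], velocities in [-1,1] (base-MATLAB stand-in for rands)
pop = popmin + (popmax - popmin)*rand(sizepop, D);
V = Vmin + (Vmax - Vmin)*rand(sizepop, D);
fitness = f(pop);

pbest = pop; pbestfit = fitness;     % personal bests (gbest/fitnessgbest in the article)
[gbestfit, k] = min(fitness);
gbest = pop(k, :);                   % global best (zbest/fitnesszbest in the article)
initialbest = gbestfit;
bestcurve = zeros(1, maxgen);        % plays the role of yy

for gen = 1:maxgen
    for j = 1:sizepop
        % Velocity update without inertia weight, one random scalar per term
        V(j,:) = V(j,:) + c1*rand*(pbest(j,:) - pop(j,:)) + c2*rand*(gbest - pop(j,:));
        V(j,:) = min(max(V(j,:), Vmin), Vmax);
        % Standard position update, clamped to the search box
        pop(j,:) = min(max(pop(j,:) + V(j,:), popmin), popmax);
        % Mutation: with probability 0.05, re-draw one random dimension
        if rand > 0.95
            pop(j, randi(D)) = popmin + (popmax - popmin)*rand;
        end
        fitness(j) = f(pop(j,:));
    end
    % Update personal bests, then the global best
    improved = fitness < pbestfit;
    pbest(improved, :) = pop(improved, :);
    pbestfit(improved) = fitness(improved);
    [m, k] = min(pbestfit);
    if m < gbestfit
        gbestfit = m;
        gbest = pbest(k, :);
    end
    bestcurve(gen) = gbestfit;
end
fprintf('best fitness: %.4g -> %.4g\n', initialbest, gbestfit);
```

The global best is replaced only when a strictly better value appears, so bestcurve never increases. For the same reason, the article's fitness curve (yy) is monotone. The velocity clamp matters here: with no inertia weight, unclamped velocities can grow large.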

The fitness function file (fun.m) is as follows:

function error = fun(x,inputnum,hiddennum,outputnum,net,inputn,outputn)

w1=x(1:inputnum*hiddennum);

B1=x(inputnum*hiddennum+1:inputnum*hiddennum+hiddennum);

w2=x(inputnum*hiddennum+hiddennum+1:inputnum*hiddennum+hiddennum+hiddennum*outputnum);

B2=x(inputnum*hiddennum+hiddennum+hiddennum*outputnum+1:inputnum*hiddennum+hiddennum+hiddennum*outputnum+outputnum);

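net=newff(inputn,outputn,hiddennum);

%Construct the BP network. Set trainFcn first, because assigning net.trainFcn resets net.trainParam to that function's defaults

net.trainFcn='trainlm';

%trainlm (Levenberg-Marquardt) has no learning-rate or momentum parameter, so lr and mc are not set

net.trainParam.epochs=3000;

net.trainParam.goal=0.00001;

net.trainParam.show=50;

net.trainParam.showWindow=0;

%Load the particle's weights and biases into the network, then train it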

net.iw{1,1}=reshape(w1,hiddennum,inputnum);

net.lw{2,1}=reshape(w2,outputnum,hiddennum);

net.b{1}=reshape(B1,hiddennum,1);

net.b{2}=reshape(B2,outputnum,1);

net=train(net,inputn,outputn);

an=sim(net,inputn);

error=sum(abs(an(:)-outputn(:)));
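Both fun.m and the main program slice a particle vector x into w1, B1, w2 and B2 and reshape them column by column into net.iw{1,1}, net.b{1}, net.lw{2,1} and net.b{2}. The sketch below replays that layout in base MATLAB on a dummy particle (x = 1:numsum, so each entry shows where it lands). It then runs a plain forward pass on hypothetical random data, using the tansig hidden layer and purelin output layer that newff uses by default.

This is an illustration, not a replacement for fun. It skips the BP training that fun performs before scoring. It also ignores the input and output processing functions, mapminmax among them, that a newff network applies internally. Its numbers therefore will not match sim(net,...).

```matlab
% Layer sizes of the article's 3-6-2 network
inputnum = 3; hiddennum = 6; outputnum = 2;
numsum = inputnum*hiddennum + hiddennum + hiddennum*outputnum + outputnum;  % 38

% Dummy particle: entry k holds the value k, which makes the layout easy to inspect
x = 1:numsum;

% Same slicing as fun.m: [w1 | B1 | w2 | B2]
k1 = inputnum*hiddennum;          % end of w1
k2 = k1 + hiddennum;              % end of B1
k3 = k2 + hiddennum*outputnum;    % end of w2
W1 = reshape(x(1:k1), hiddennum, inputnum);       % like net.iw{1,1}: hidden x input, filled column-wise
b1 = reshape(x(k1+1:k2), hiddennum, 1);           % like net.b{1}
W2 = reshape(x(k2+1:k3), outputnum, hiddennum);   % like net.lw{2,1}: output x hidden
b2 = reshape(x(k3+1:numsum), outputnum, 1);       % like net.b{2}

% Flattening back with (:) recovers the particle, so the encoding is lossless
xback = [W1(:); b1; W2(:); b2]';

% Forward pass with hypothetical normalized data (5 samples in [-1,1])
rng(0);
nsamp = 5;
Xn = 2*rand(inputnum, nsamp) - 1;
Yn = 2*rand(outputnum, nsamp) - 1;
H = tanh(W1*Xn + repmat(b1, 1, nsamp));   % tansig(n) equals tanh(n)
Yhat = W2*H + repmat(b2, 1, nsamp);       % purelin output layer
sae = sum(abs(Yhat(:) - Yn(:)));          % fun.m's criterion, here without prior training
fprintf('sum of absolute errors: %.4f\n', sae);
```

Changing hiddennum moves only the slice boundaries. The decoding stays valid as long as numsum, fun.m and the main program all use the same layer sizes.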

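- Building models takes real effort. Paid consultation is available for custom models; interested readers can add QQ 【1153460737】 to get in touch (please include a note when adding).

- You are also welcome to follow the WeChat official account 【交通专业小明同学】 to learn and make progress together.

- For the program and data download links, see the resources on my homepage.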