Linear Regression

Reference:

Bishop C M. Pattern recognition and machine learning[M]. springer, 2006.

- Chapter 1 up to and including Subsection 1.2.5

- Chapter 3 up to and including 3.1.2

Content

-

- Linear Regression: Intro

-

- Example: Polynomial Curve Fitting

- Probabilistic Perspective

- Linear Basis Function Models

-

- Maximum likelihood and least squares

- Geometry of least squares

Linear Regression: Intro

Example: Polynomial Curve Fitting

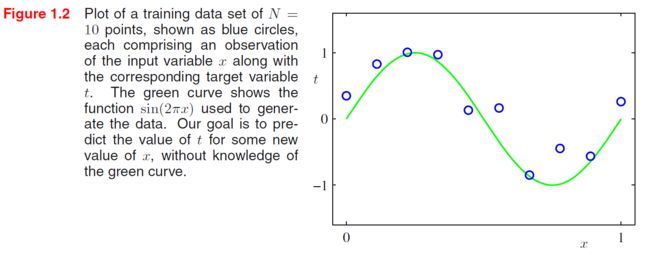

Suppose that we are given a training set comprising N N N observations of x x x, written X = ( x ( 1 ) , ⋯ , x ( N ) ) T \mathbf X=(x^{(1)},\cdots,x^{(N)})^T X=(x(1),⋯,x(N))T, together with corresponding observations of the values of t t t, denoted t = ( t ( 1 ) , ⋯ , t ( N ) ) T \mathbf t=(t^{(1)},\cdots,t^{(N)})^T t=(t(1),⋯,t(N))T. Our goal is to exploit this training set in order to make predictions of the value of t ^ \hat t t^ of the target variable for some new value x ^ \hat x x^ of the input variable.

For this moment, we shall fit the data using a polynomial function of the form

y ( x , w ) = w 0 + w 1 x + ⋯ + w M x M = ∑ j = 0 M w j x j (CF.1) y(x,\mathbf w)=w_0+w_1x+\cdots+w_Mx^M=\sum_{j=0}^Mw_jx^j\tag{CF.1} y(x,w)=w0+w1x+⋯+wMxM=j=0∑Mwjxj(CF.1)

where M M M is the order of the polynomial. Note that, although the polynomial function y ( x , w ) y(x,\mathbf w) y(x,w) is a nonlinear function of x x x, it is a linear function of the coefficients w \mathbf w w. Functions, such as the polynomial, which are linear in the unknown parameters have important properties and are called linear models.

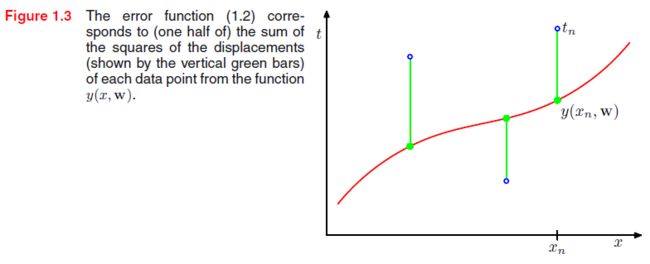

The values of the coefficients will be determined by fitting the polynomial to the training data. This can be done by minimizing an error function that measures the misfit between the function y ( x , w ) y(x,\mathbf w) y(x,w). One simple choice of error function, is given by the sum of the squares of the errors between the predictions y ( x ( n ) , w ) y(x^{(n)},\mathbf w) y(x(n),w) for each data point x ( n ) x^{(n)} x(n) and the corresponding target values t ( n ) t^{(n)} t(n), so that we minimize

E ( w ) = 1 2 ∑ n = 1 N { y ( x ( n ) , w ) − t ( n ) } 2 (CF.2) E(\mathbf w)=\frac{1}{2}\sum_{n=1}^N \{ y(x^{(n)},\mathbf w)-t^{(n)} \}^2\tag{CF.2} E(w)=21n=1∑N{ y(x(n),w)−t(n)}2(CF.2)

where the factor of 1 / 2 1/2 1/2 is included for later convenience.

We can solve the curve fitting problem by setting the derivative of E ( w ) E(\mathbf w) E(w) w.r.t. w \mathbf w w equal to zero. Because the error function is a quadratic function of the coefficients w \mathbf w w, its derivatives with respect to the coefficients will be linear in the elements of $ \mathbf w$, and so the minimization of the error function has a unique solution, denoted by w ∗ \mathbf w^* w∗, which can be found in closed form. The resulting polynomial is given by the function y ( x , w ∗ ) y(x,\mathbf w^*) y(x,w∗).

There remains the problem of choosing the order M M M of the polynomial. In Figure 1.4, we show four examples of the results of fitting polynomials having orders M = 0 , 1 , 3 , 9 M = 0, 1, 3, 9 M=0,1,3,9 to the data set shown in Figure 1.2.

We notice that the constant ( M = 0 ) (M = 0) (M=0) and first order ( M = 1 ) (M = 1) (M=1) polynomials give rather poor fits to the data and consequently rather poor representations of the function s i n ( 2 π x ) sin(2πx) sin(2πx). The third order ( M = 3 ) (M = 3) (M=3) polynomial seems to give the best fit to the function s i n ( 2 π x ) sin(2πx) sin(2πx) of the examples shown in Figure 1.4. When we go to a much higher order polynomial ( M = 9 ) (M = 9) (M=9), we obtain an excellent fit to the training data since this polynomial contains 10 10 10 degrees of freedom corresponding to the 10 10 10 coefficients w 0 , ⋯ , w 9 w_0,\cdots,w_9 w0,⋯,w9. In fact, the polynomial passes exactly through each data point and E ( w ∗ ) = 0 E(\mathbf w^*) = 0 E(w∗)=0. However, the fitted curve oscillates wildly and gives a very poor representation of the function s i n ( 2 π x ) sin(2πx) sin(2πx). This latter behavior is known as overfitting.

We shall see that the least squares approach to finding the model parameters represents a specific case of maximum likelihood, and that the over-fitting problem can be understood as a general property of maximum likelihood.

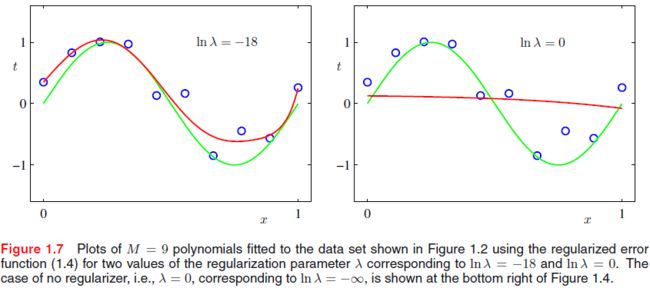

One technique that is often used to control the over-fitting phenomenon in such cases is that of regularization, which involves adding a penalty term to the error function in order to discourage the coefficients from reaching large values. The simplest such penalty term takes the form of a sum of squares of all of the coefficients, leading to a modified error function of the form

E ~ ( w ) = 1 2 ∑ n = 1 N { y ( x ( n ) , w ) − t ( n ) } 2 + λ 2 ∥ w ∥ 2 (CF.3) \tilde E(\mathbf w)=\frac{1}{2}\sum_{n=1}^N \{ y(x^{(n)},\mathbf w)-t^{(n)}\}^2+\frac{\lambda}{2}\|\mathbf w\|^2\tag{CF.3} E~(w)=21n=1∑N{ y(x(n),w)−t(n)}2+2λ∥w∥2(CF.3)

where the λ \lambda λ governs the relative importance of the regularization term compared with the sum-of-squares error term.

Probabilistic Perspective

We have seen how the problem of polynomial curve fitting can be expressed in terms of error minimization. Here we return to the curve fitting example and view it from a probabilistic perspective, thereby gaining some insights into error functions and regularization, as well as taking us towards a full Bayesian treatment.

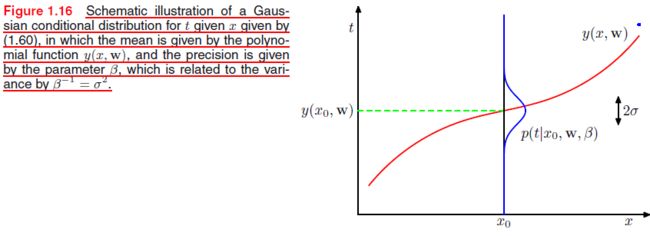

We assume that, given the value of x x x, the corresponding value of t t t has a Gaussian distribution with a mean equal to the value y ( x , w ) y(x,\mathbf w) y(x,w) of the polynomial curve given by ( C F . 1 ) (CF.1) (CF.1). Thus we have

p ( t ∣ x , w , β ) = N ( t ∣ y ( x , w ) , β − 1 ) (PP.1) p(t|x,\mathbf w,\beta)=\mathcal N(t|y(x,\mathbf w),\beta^{-1})\tag{PP.1} p(t∣x,w,β)=N(t∣y(x,w),β−1)(PP.1)

where we have defined a precision parameter β \beta β corresponding to the inverse variance of the distribution.

We now use the training data ( X , t ) (\mathbf X,\mathbf t) (X,t) to determine the value of the unknown parameters w \mathbf w w and β \beta β by maximum likelihood. If the data are assumed to be drawn independently from the distribution ( P P . 1 ) (PP.1) (PP.1), then the likelihood function is given by

p ( t ∣ X , w , β ) = ∏ n = 1 N N ( t ( n ) ∣ y ( x ( n ) , w ) , β − 1 ) (PP.2) p(\mathbf t|\mathbf X,\mathbf w,\beta)=\prod_{n=1}^N\mathcal N(t^{(n)}|y(x^{(n)},\mathbf w),\beta^{-1})\tag{PP.2} p(t∣X,w,β)=n=1∏NN(t(n)∣y(x(n),w),β−1)(PP.2)

It is convenient to maximize the logarithm of the likelihood function.

ln p ( t ∣ X , w , β ) = − β 2 ∑ n = 1 N { y ( x ( n ) , w ) − t ( n ) } 2 + N 2 ln β − N 2 ln ( 2 π ) (PP.3) \ln p(\mathbf t|\mathbf X,\mathbf w,\beta)=-\frac{\beta}{2}\sum_{n=1}^N\{ y(x^{(n)},\mathbf w)-t^{(n)} \}^2+\frac{N}{2}\ln \beta-\frac{N}{2}\ln (2\pi)\tag{PP.3} lnp(t∣X,w,β)=−2βn=1∑N{ y(x(n),w)−t(n)}2+2Nlnβ−2Nln(2π)(PP.3)

We therefore see that maximizing likelihood is equivalent, so far as determining w \mathbf w w is concerned, to minimizing the sum-of-squares error function defined by ( C F . 2 ) (CF.2) (CF.2).

E ( w ) = 1 2 ∑ n = 1 N { y ( x ( n ) , w ) − t ( n ) } 2 (CF.2) E(\mathbf w)=\frac{1}{2}\sum_{n=1}^N \{ y(x^{(n)},\mathbf w)-t^{(n)} \}^2\tag{CF.2} E(w)=21n=1∑N{ y(x(n),w)−t(n)}2(CF.2)

The optimized value is denoted as w M L \mathbf w_{ML} wML.

We can also use maximum likelihood to determine the precision parameter β \beta β of the Gaussian conditional distribution. Maximizing ( P P . 3 ) (PP.3) (PP.3) w.r.t. β \beta β gives

1 β M L = 1 N ∑ n = 1 N { y ( x ( n ) , w ) − t ( n ) } 2 (PP.4) \frac{1}{\beta_{ML}}=\frac{1}{N}\sum_{n=1}^N\{ y(x^{(n)},\mathbf w)-t^{(n)} \}^2\tag{PP.4} βML1=N1n=1∑N{ y(x(n),w)−t(n)}2(PP.4)

Note that the solution for w M L \mathbf w_{ML} wML decouples from that for β \beta β, so we can first evaluate ( C F . 2 ) (CF.2) (CF.2) and subsequently use this result to evaluate ( P P . 4 ) (PP.4) (PP.4).

Having determined the parameters w \mathbf w w and β \beta β, we can now make predictions for new values of x x x. Because we now have a probabilistic model, these are expressed in terms of the predictive distribution that gives the probability distribution over t, rather than simply a point estimate, and is obtained by substituting the maximum likelihood parameters into ( P P . 1 ) (PP.1) (PP.1) to give

p ( t ∣ x , w M L , β M L ) = N ( t ∣ y ( x , w M L ) , β M L − 1 ) (PP.5) p(t| x,\mathbf w_{ML},\beta_{ML})=\mathcal N(t|y(x,\mathbf w_{ML}),\beta_{ML}^{-1})\tag{PP.5} p(t∣x,wML,βML)=N(t∣y(x,wML),βML−1)(PP.5)

Now let us take a step towards a more Bayesian approach and introduce a prior distribution over the polynomial coefficients w \mathbf w w. For simplicity, let us consider a Gaussian distribution of the form

p ( w ∣ α ) = N ( w ∣ 0 , α − 1 I ) = ( α 2 π ) ( M + 1 ) / 2 exp { − α 2 w T w } (PP.6) p(\mathbf w|\alpha)=\mathcal{N}(\mathbf w|\mathbf 0,\alpha^{-1}\mathbf I)=\left(\frac{\alpha}{2\pi}\right)^{(M+1)/2}\exp\left\{ -\frac{\alpha}{2}\mathbf w^T \mathbf w \right\}\tag{PP.6} p(w∣α)=N(w∣0,α−1I)=(2πα)(M+1)/2exp{ −2αwTw}(PP.6)

where α \alpha α is the precision of the distribution, and ( M + 1 ) (M+1) (M+1) is the total number of elements in the vector w \mathbf w w for an M t h M^{th} Mth order polynomial.

Using Bayes’ theorem, the posterior distribution for w \mathbf w w is proportional to the product of the prior distribution and the likelihood function

p ( w ∣ X , t , α , β ) ∝ p ( t ∣ X , w , β ) p ( w ∣ α ) (PP.7) p(\mathbf w|\mathbf X,\mathbf t,\alpha,\beta) \varpropto p(\mathbf t|\mathbf X,\mathbf w,\beta)p(\mathbf w|\alpha)\tag{PP.7} p(w∣X,t,α,β)∝p(t∣X,w,β)p(w∣α)(PP.7)

We can now determine w by finding the most probable value of w \mathbf w w given the data, in other words by maximizing the posterior distribution. This technique is called maximum posterior, or simply MAP. Taking the negative logarithm of ( P P . 7 ) (PP.7) (PP.7) and combining with ( P P . 3 ) (PP.3) (PP.3) and ( P P . 6 ) (PP.6) (PP.6), we find that the maximum of the posterior is given by the minimum of

β 2 ∑ n = 1 N { y ( x ( n ) , w ) − t ( n ) } 2 + α 2 w T w (PP.8) \frac{\beta}{2}\sum_{n=1}^N\{ y(x^{(n)},\mathbf w)-t^{(n)} \}^2+\frac{\alpha}{2}\mathbf w^T \mathbf w \tag{PP.8} 2βn=1∑N{ y(x(n),w)−t(n)}2+2αwTw(PP.8)

Thus we see that maximizing the posterior distribution is equivalent to minimizing the regularized sum-of-squares error function encountered earlier in the form ( C F . 3 ) (CF.3) (CF.3), with a regularization parameter given by λ = α / β \lambda=\alpha/\beta λ=α/β.

Linear Basis Function Models

The simplest linear model for regression involves a linear combination of the input variables

y ( x , w ) = w 0 + w 1 x 1 + ⋯ w D x D (LM.1) y(\mathbf x,\mathbf w)=w_0+w_1x_1+\cdots w_Dx_D\tag{LM.1} y(x,w)=w0+w1x1+⋯wDxD(LM.1)

where x = [ x 1 , x 2 , ⋯ , x D ] T \mathbf x=[x_1,x_2,\cdots, x_D]^T x=[x1,x2,⋯,xD]T are the features. This is often simply known as linear regression. However, a linear function of the input variables x i x_i xi imposes significant limitations on the model. We therefore extend the class of models by considering linear combinations of fixed nonlinear functions of the input variables of the form

y ( x , w ) = w 0 + ∑ j = 1 M − 1 w j ϕ j ( x ) (LM.2) y(\mathbf x,\mathbf w)=w_0+\sum_{j=1}^{M-1}w_j\phi_j(\mathbf x)\tag{LM.2} y(x,w)=w0+j=1∑M−1wjϕj(x)(LM.2)

where ϕ j ( x ) \phi_j(\mathbf x) ϕj(x) are known as basis functions.

It is often convenient to define an additional dummy ‘basis function’ ϕ 0 ( x ) = 1 \phi_0(\mathbf x)=1 ϕ0(x)=1 so that

y ( x , w ) = ∑ j = 0 M − 1 w j ϕ j ( x ) = w T ϕ ( x ) (LM.3) y(\mathbf x,\mathbf w)=\sum_{j=0}^{M-1}w_j\phi_j(\mathbf x)=\mathbf w^T\phi(\mathbf x)\tag{LM.3} y(x,w)=j=0∑M−1wjϕj(x)=wTϕ(x)(LM.3)

By using nonlinear basis functions, we allow the function y ( x , w ) y(\mathbf x,\mathbf w) y(x,w) to be a nonlinear function of the input vector x \mathbf x x. Functions of the form ( L M . 3 ) (LM.3) (LM.3) are called linear models, however, because this function is linear in w \mathbf w w.

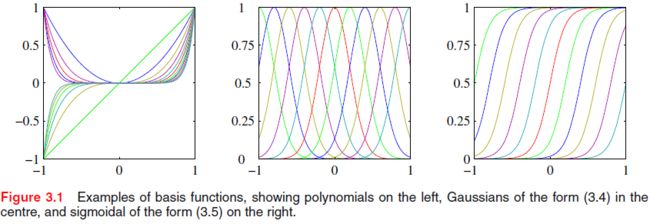

In the [curve fitting example](# Example: Polynomial Curve Fitting), the basis functions take the form of powers of x x x so that ϕ j ( x ) = x j \phi_j(x)=x^j ϕj(x)=xj. There are many other possible choices for the basis functions, for example

Gaussian basis functions: ϕ j ( x ) = exp { − ( x − μ j ) 2 2 s 2 } \text{Gaussian basis functions: }\phi_j(x)=\exp \left\{ -\frac{(x-\mu_j)^2}{2s^2} \right\} Gaussian basis functions: ϕj(x)=exp{ −2s2(x−μj)2}

Sigmoidal basis functions: ϕ j ( x ) = σ ( x − μ j s ) where σ ( a ) is the sigmoid function defined by σ ( a ) = 1 1 + exp ( − a ) \text{Sigmoidal basis functions: } \phi_j(x)=\sigma\left(\frac{x-\mu_j}{s}\right)\\\text{ where }\sigma(a) \text{ is the sigmoid function defined by }\sigma(a)=\frac{1}{1+\exp(-a)} Sigmoidal basis functions: ϕj(x)=σ(sx−μj) where σ(a) is the sigmoid function defined by σ(a)=1+exp(−a)1

Most of the discussion, however, is independent of the particular choice of basis function set, and so for most of our discussion we shall not specify the particular form of the basis functions.

Maximum likelihood and least squares

In [Probabilistic Perspective](# Probabilistic Perspective), we showed that the error function could be motivated as the maximum likelihood solution under the assumed Gaussian noise model. Let us return to this discussion and consider the least squares approach, and its relation to maximum likelihood, in more detail.

As before, we assume that the target variable t t t is given by a deterministic function y ( x , w ) y(\mathbf x,\mathbf w) y(x,w) with additive Gaussian noise so that

t = y ( x , w ) + ϵ (LM.4) t=y(\mathbf x,\mathbf w)+\epsilon \tag{LM.4} t=y(x,w)+ϵ(LM.4)

where ϵ \epsilon ϵ is a zero mean Gaussian random variable with precision β \beta β. Thus we can write

p ( t ∣ x , w , β ) = N ( t ∣ y ( x , w ) , β − 1 ) (LM.5) p(t|\mathbf x,\mathbf w,\beta)=\mathcal{N}(t|y(\mathbf x,\mathbf w),\beta^{-1})\tag{LM.5} p(t∣x,w,β)=N(t∣y(x,w),β−1)(LM.5)

Now consider a data set of inputs X = { x ( 1 ) , ⋯ , x ( N ) } \mathbf X=\{\mathbf x^{(1)} ,\cdots, \mathbf x^{(N)} \} X={ x(1),⋯,x(N)} with corresponding target values t = [ t ( 1 ) , ⋯ , t ( N ) ] \mathbf t=[t^{(1)},\cdots,t^{(N)}] t=[t(1),⋯,t(N)]. Making the assumption that these data points are drawn independently from the distribution ( L M . 5 ) (LM.5) (LM.5), we obtain the following expression for the likelihood function, which is a function of the adjustable parameters w \mathbf w w and β \beta β, in the form

p ( t ∣ X , w , β ) = ∏ n = 1 N N ( t ( n ) ∣ w T ϕ ( x ( n ) ) , β − 1 ) (LM.6) p(\mathbf t|\mathbf X,\mathbf w,\beta)=\prod_{n=1}^N\mathcal{N}(t^{(n)}|\mathbf w^T\phi(\mathbf x^{(n)}),\beta^{-1})\tag{LM.6} p(t∣X,w,β)=n=1∏NN(t(n)∣wTϕ(x(n)),β−1)(LM.6)

Since X \mathbf X X always appear in the set of conditioning variables, we will drop the explicit X \mathbf X X from expressions for conciseness. Taking the logarithm of the likelihood function, we have

ln p ( t ∣ w , β ) = ∑ n = 1 N ln N ( t ( n ) ∣ w T ϕ ( x ( n ) ) , β − 1 ) = N 2 ln β − N 2 ln ( 2 π ) − β E D ( w ) (LM.7) \begin{aligned} \ln p(\mathbf{t}|\mathbf{w}, \beta) &=\sum_{n=1}^{N} \ln \mathcal{N}\left(t^{(n)}|\mathbf{w}^{\mathrm{T}} \phi\left(\mathbf{x}^{(n)}\right), \beta^{-1}\right) \\ &=\frac{N}{2} \ln \beta-\frac{N}{2} \ln (2 \pi)-\beta E_{D}(\mathbf{w}) \end{aligned}\tag{LM.7} lnp(t∣w,β)=n=1∑NlnN(t(n)∣wTϕ(x(n)),β−1)=2Nlnβ−2Nln(2π)−βED(w)(LM.7)

where the sum-of-squares error function is defined by

E D ( w ) = 1 2 ∑ n = 1 N { t ( n ) − w T ϕ ( x ( n ) ) } 2 (LM.8) E_{D}(\mathbf{w})=\frac{1}{2} \sum_{n=1}^{N}\left\{t^{(n)}-\mathbf{w}^{\mathrm{T}} {\phi}\left(\mathbf{x}^{(n)}\right)\right\}^{2}\tag{LM.8} ED(w)=21n=1∑N{ t(n)−wTϕ(x(n))}2(LM.8)

We see that maximization of the likelihood function under a conditional Gaussian noise distribution for a linear model is equivalent to minimizing a sum-of-squares error function given by E D ( w ) E_D(\mathbf w) ED(w).

Consider first the maximization with respect to w \mathbf w w. The gradient of the log likelihood function ( L M . 7 ) (LM.7) (LM.7) takes the form

∇ ln p ( t ∣ w , β ) = ∑ n = 1 N { t ( n ) − w T ϕ ( x ( n ) ) } ϕ ( x ( n ) ) T (LM.9) \nabla \ln p(\mathbf t|\mathbf w,\beta)=\sum_{n=1}^N\left\{t^{(n)}-\mathbf{w}^{\mathrm{T}} {\phi}\left(\mathbf{x}^{(n)}\right)\right\}\phi(\mathbf x^{(n)})^{\mathrm{T}}\tag{LM.9} ∇lnp(t∣w,β)=n=1∑N{ t(n)−wTϕ(x(n))}ϕ(x(n))T(LM.9)

Setting this gradient to zero gives

∑ n = 1 N t ( n ) ϕ ( x ( n ) ) T − w T ( ∑ n = 1 N ϕ ( x ( n ) ) ϕ ( x ( n ) ) T ) = 0 (LM.10) \sum_{n=1}^Nt^{(n)}\phi(\mathbf x^{(n)})^{\mathrm{T}}-\mathbf{w}^{\mathrm{T}} \left(\sum_{n=1}^N {\phi}\left(\mathbf{x}^{(n)}\right)\phi(\mathbf x^{(n)})^{\mathrm{T}}\right)=0\tag{LM.10} n=1∑Nt(n)ϕ(x(n))T−wT(n=1∑Nϕ(x(n))ϕ(x(n))T)=0(LM.10)

Solving for w \mathbf w w we obtain

w M L = ( Φ T Φ ) − 1 Φ T t ≜ Φ † t (LM.11) \mathbf w_{ML}=(\boldsymbol\Phi^T\boldsymbol\Phi)^{-1}\boldsymbol\Phi^T \mathbf t\triangleq\boldsymbol\Phi^{\dagger}\mathbf t\tag{LM.11} wML=(ΦTΦ)−1ΦTt≜Φ†t(LM.11)

where

Φ = [ ϕ ( x ( 1 ) ) T ⋮ ϕ ( x ( N ) ) T ] = [ ϕ ( x 1 ( 1 ) ) ⋯ ϕ ( x D ( 1 ) ) ⋮ ⋱ ⋮ ϕ ( x 1 ( N ) ) ⋯ ϕ ( x D ( N ) ) ] (LM.12) \boldsymbol\Phi=\left[\begin{matrix}\phi(\mathbf x^{(1)})^T\\\vdots\\\phi(\mathbf x^{(N)})^T \end{matrix}\right]=\left[\begin{matrix}\phi(x_1^{(1)})& \cdots & \phi(x_D^{(1)}) \\\vdots& \ddots & \vdots\\\phi( x_1^{(N)})& \cdots & \phi( x_D^{(N)})\end{matrix}\right]\tag{LM.12} Φ=⎣⎢⎡ϕ(x(1))T⋮ϕ(x(N))T⎦⎥⎤=⎣⎢⎢⎡ϕ(x1(1))⋮ϕ(x1(N))⋯⋱⋯ϕ(xD(1))⋮ϕ(xD(N))⎦⎥⎥⎤(LM.12)

which are known as the normal equations for the least square problem.

Then we can also maximize the log likelihood function ( L M . 7 ) (LM.7) (LM.7) with respect to the noise precision parameter β \beta β, giving

1 β M L = 1 N ∑ n = 1 N { t ( n ) − w M L T ϕ ( x ( n ) ) } 2 \frac{1}{\beta_{ML}}=\frac{1}{N}\sum_{n=1}^N\left\{t^{(n)}-\mathbf{w}_{ML}^{\mathrm{T}} {\phi}\left(\mathbf{x}^{(n)}\right)\right\}^{2} βML1=N1n=1∑N{ t(n)−wMLTϕ(x(n))}2

and so we see that the inverse of the noise precision is given by the residual variance of the target values around the regression function.

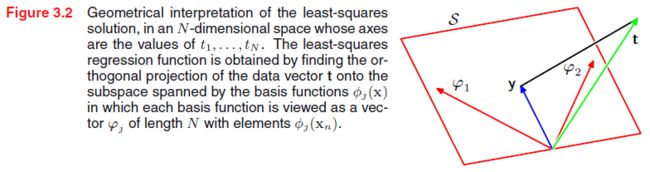

Geometry of least squares

We can stack y ( x , w ) y(\mathbf x,\mathbf w) y(x,w) as a vector with the definition in ( L M . 12 ) (LM.12) (LM.12)

y = [ y ( 1 ) ( x ( 1 ) , w ) ⋮ y ( N ) ( x ( N ) , w ) ] = [ ϕ ( x ( 1 ) ) T ⋮ ϕ ( x ( N ) ) T ] w = Φ w (LM.13) \mathbf y=\left[\begin{matrix}y^{(1)}(\mathbf x^{(1)},\mathbf w)\\\vdots\\y^{(N)}(\mathbf x^{(N)},\mathbf w) \end{matrix}\right]=\left[\begin{matrix}\phi(\mathbf x^{(1)})^T\\\vdots\\\phi(\mathbf x^{(N)})^T \end{matrix}\right]\mathbf w=\boldsymbol\Phi \mathbf w \tag{LM.13} y=⎣⎢⎡y(1)(x(1),w)⋮y(N)(x(N),w)⎦⎥⎤=⎣⎢⎡ϕ(x(1))T⋮ϕ(x(N))T⎦⎥⎤w=Φw(LM.13)

Then minimize the error function ( L M . 8 ) (LM.8) (LM.8) is equivalent to minimize

E ( w ) = ∥ Φ w − t ∥ 2 (LM.14) E(\mathbf w)=\|\boldsymbol\Phi\mathbf w-\mathbf t\|_2\tag{LM.14} E(w)=∥Φw−t∥2(LM.14)

If the number of features D D D is smaller than the number of data points N N N, the columns of Φ \boldsymbol\Phi Φ, denoted as φ j , j = 1 , ⋯ , D \varphi_j,j=1,\cdots,D φj,j=1,⋯,D will span a linear subspace S \mathcal{S} S of dimensionality D D D. y \mathbf y y will be an arbitrary linear combination of the vectors φ j \varphi_j φj, thus can live anywhere in the D D D-dimensional subspace. ( L M . 14 ) (LM.14) (LM.14) is then equal to the Euclidean distance between y \mathbf y y and t \mathbf t t. Therefore, y \mathbf y y is the orthogonal projection of t \mathbf t t onto the subspace S \mathcal{S} S, i.e.,

( t − Φ w ) ∈ S ⊥ ⟹ Φ T ( Φ w − t ) = 0 (LM.15) (\mathbf t-\boldsymbol\Phi\mathbf w)\in \mathcal{S}^{\perp}\Longrightarrow \boldsymbol\Phi^T(\boldsymbol\Phi \mathbf w-\mathbf t)=0\tag{LM.15} (t−Φw)∈S⊥⟹ΦT(Φw−t)=0(LM.15)