Knowledge Graph Embedding: A Survey of Approaches and Applications 摘要

想看原文戳这里

1. introduction

(1) the definition of KG

A KG is a multi-relational graph composed of entities (nodes) and relations (different types of edges).

(2) examples

Freebase, DBpedia, YAGO, NELL

(3) key idea of KGE

The key idea is to embed components of a KG including entities and relations into continuous vector spaces, so as to simplify the manipulation while preserving the inherent structure of the KG.

(4) traditional procedure of KGE

Given a KG, such a technique first represents entities and relations in a continuous vector space, and defines a scoring function on each fact to measure its plausibility. Entity and relation embeddings can then be obtained by maximizing the total plausibility of observed facts.

2. notations

…

3. KG Embedding with Facts Alone

(1) facts

Facts observed in the KG are stored as a collection of triples D+={(h,r,t)}. Each triple is composed of a head entity h∈E, a tail entity t∈E, and a relation r∈R between them.

(2) steps

① representing entities and relations

Entities are usually represented as vectors, i.e., deterministic points in the vector space. Relations are typically taken as operations in the vector space.

② defining a scoring function

A scoring function fr(h,t) is defined on each fact (h,r,t) to measure its plausibility. Facts observed in the KG tend to have higher scores than those that have not been observed.

③ learning entity and relation representations

The third step solves an optimization problem that maximizes the total plausibility of observed facts.

(3) embedding techniques

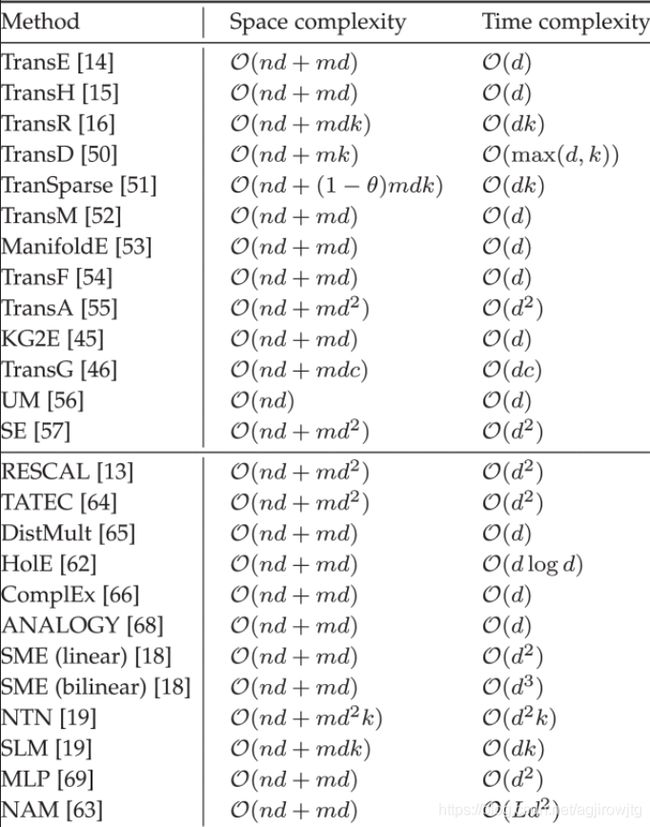

① Translational Distance Models

exploit distance-based scoring functions; measure the plausibility of a fact as the distance between the two entities, usually after a translation carried out by the relation

(i) TransE

-represents both entities and relations as vectors in the same space, say Rd

-Given a fact (h,r,t), the relation is interpreted as a translation vector r so that the embedded entities h and t can be connected by r with low error, i.e., h+r≈t when (h,r,t) holds.

- ![]()

-TransE has flaws in dealing with 1-to-N, N-to-1, and N-to-N relations : given a 1-to-N relation, e.g., DirectorOf, TransE might learn very similar vector representations for Psycho, Rebecca, and RearWindow which are all films directed by AlfredHitchcock, even though they are totally different entities

(ii) TransE’s Extensions

Relation-Specific Entity Embeddings: allow an entity to have distinct representations when involved in different relations

- TransH

* relation-specific hyperplanes

* models entities as vectors

* models each relation r as a vector r on a hyperplane with wr as the normal vector

* By introducing the mechanism of projecting to relation-specific hyperplanes, TransH enables different roles of an entity in different relations.

- TransR

* relation-specific spaces

* entities are represented as vectors in an entity space Rd, and each relation is associated with a specific space Rk and modeled as a translation vector in that space

* Mr∈Rk×d is a projection matrix from the entity space to the relation space of r

* ![]()

![]()

- TransD

* simplifies TransR

* ![]()

![]()

- TranSparse (share)

* simplifies TransR

* ![]()

- TranSparse (separate)

* simplifies TransR

* ![]()

Relaxing Translational Requirement: relaxing the overstrict requirement of h+r≈t

- TransM

* ![]()

- ManifoldE

- TransF

* only requires t to lie in the same direction with h+r, and meanwhile h in the same direction with t-r

* ![]()

- TransA

(iii) Gaussian Embeddings

take into account entities’ uncertainties, and model them as random variables

- KG2E

- TransG

(iv) Other Distance Models

- UM (unstructured model)

* a naive version of TransE by setting all r=0

- SE (structured embedding)

* ![]()

② Semantic Matching Models

exploit similarity-based scoring functions; measure plausibility of facts by matching latent semantics of entities and relations

(i) RESCAL and Its Extensions

- RESCAL

- TATEC

* models not only the three-way interaction h⊤Mrt but also two-way interactions, e.g., those between an entity and a relation

* ![]()

- DistMult

* this over-simplified model can only deal with symmetric relations

- HolE

* combines the expressive power of RESCAL with the efficiency and simplicity of DistMult

- ComplEx

* extends DistMult by introducing complex-valued embeddings so as to better model asymmetric relations

* entity and relation embeddings h,r,t no longer lie in a real space but a complex space

* every ComplEx has an equivalent HolE

- ANALOGY

* extends RESCAL so as to further model the analogical properties of entities and relations

(ii) Matching with Neural Networks

- Semantic Matching Energy (SME)

- Neural Tensor Network (NTN)

- Multi-Layer Perceptron (MLP)

- Neural Association Model (NAM)

③ Model Training

(i) Training under Open World Assumption

- KGs contain only true facts and non-observed facts can be either false or just missing. D+ stores only positive examples.

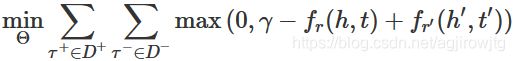

- Given the positive set D+ and a negative set D- constructed accordingly, we can learn entity and relation representations Θ by minimizing the logistic loss/ pairwise ranking loss.

- Minimizing the pairwise ranking loss has an additional advantage: it does not assume that negative examples are necessarily false, just that they are more invalid than those positive ones.

- the logistic loss generally yields better results for semantic matching models such as DistMult and ComplEx, while the pairwise ranking loss may be more suitable for translational distance models like TransE

- steps

* Initializing Entity and Relation Embeddings

~ usually initialized randomly from uniform distributions or Gaussian distributions

~ also initialized with results of simple models such as TransE

~ also represent an entity as the average word vector of its name or description, and initialize word vectors with those pre-trained on a text corpus

* Generating Negative Training Examples

~ To reduce false-negative examples, (i) set different probabilities for replacing the head and the tail, i.e., to give more chance to replacing the head if the relation is 1-to-N and the tail if the relation is N-to-1 or (ii) given a positive fact, it corrupts a position (i.e., head or tail) using only entities that have appeared in that position with the same relation

~ 50 negatives per positive example is a good trade-off between accuracy and training time

(ii) Training under Closed World Assumption

- all facts that are not contained in D+ are false

- other possible loss functions include the logistic loss and absolute loss

④ Model Comparison

⑤ Other Approaches

learn representations for head-tail entity pairs rather than individual entities

4. Incorporating Additional Information

(1) Entity Types

① a straight forward method : take IsA as an ordinary relation and the corresponding triples as ordinary training examples

② semantically smooth embedding (SSE)

③ type-embodied knowledge representation learning (TKRL)

(i) can handle hierarchical entity categories and multiple category labels

(ii) a translational distance model with type-specific entity projections

(2) Relation Paths

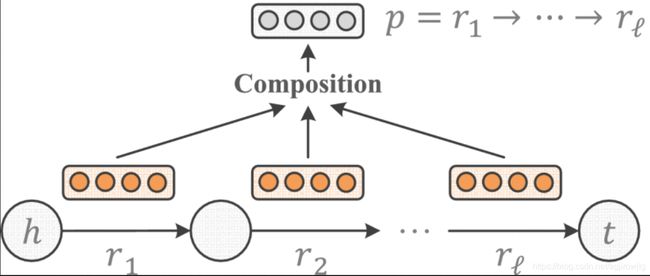

A relation path is typically defined as a sequence of relations r1→⋯→rℓ through which two entities can be connected on the graph. A key challenge then is how to represent such paths in the same vector space along with entities and relations.

① a straight forward method : represent a path as a composition of the representations of its constituent relations; typical composition operations include addition, multiplication, and recurrent neural network (RNN)

② path-based TransE (PTransE)

…

(3) Textual Descriptions

① align the given KG with an auxiliary text corpus, and then jointly conduct KG embedding and word embedding

② description-embodied knowledge representation learning (DKRL)

③ a text-enhanced KG embedding model, referred to as TEKE

(4) Logical Rules

systems such as WARMR, ALEPH, and AMIE can extract logical rules automatically from KGs

① utilize rules to refine embedding models during KG completion

② a joint model which embeds KG facts and logical rules simultaneously; a key ingredient of this approach, called KALE, is to represent and model facts and rules in a unified framework

③ vector embeddings are introduced for entity pairs rather than individual entities, making it particularly useful for relation extraction

(5) Other Information

Entity Attributes; Temporal Information; Graph Structures; Evidence from Other Relational Learning Methods

5. Applications in Downstream Tasks

…待补充

6. Concluding Remarks

…待补充