Tools: TensorFlow Study Notes, 2020.10.23-2020.10.31

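1. How to build a model with TensorFlow

Build the model skeleton with keras.Sequential().

Configure the optimizer (the gradient-descent variant), the loss function, and any metrics with model.compile(), where model is the Sequential instance.

Train the model with model.fit().

Check how well the trained model performs with model.evaluate().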

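2. Optimizers https://keras.io/zh/optimizers/

The signatures below are the ones listed on that page (standalone Keras 2.x defaults). In tf.keras the learning-rate argument is spelled learning_rate; TensorFlow 2.1 still accepts lr for backward compatibility.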

keras.optimizers.SGD(lr=0.01, momentum=0.0, decay=0.0, nesterov=False)

keras.optimizers.RMSprop(lr=0.001, rho=0.9, epsilon=None, decay=0.0)

keras.optimizers.Adagrad(lr=0.01, epsilon=None, decay=0.0)

keras.optimizers.Adadelta(lr=1.0, rho=0.95, epsilon=None, decay=0.0)

keras.optimizers.Adam(lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=None, decay=0.0, amsgrad=False)

keras.optimizers.Adamax(lr=0.002, beta_1=0.9, beta_2=0.999, epsilon=None, decay=0.0)

keras.optimizers.Nadam(lr=0.002, beta_1=0.9, beta_2=0.999, epsilon=None, schedule_decay=0.004)
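To make the momentum and nesterov arguments of SGD concrete, here is a minimal pure-Python sketch of the update rule as described in the Keras documentation (velocity = momentum * velocity - lr * gradient). The names sgd_step and minimize and the toy objective f(w) = w**2 are assumptions made for illustration; they are not part of Keras.

```python
def sgd_step(w, velocity, grad_fn, lr=0.01, momentum=0.0, nesterov=False):
    """One SGD update for a scalar weight; returns (new_w, new_velocity)."""
    g = grad_fn(w)
    velocity = momentum * velocity - lr * g
    if nesterov:
        # Nesterov variant: step along the updated velocity, then apply the gradient
        w = w + momentum * velocity - lr * g
    else:
        w = w + velocity
    return w, velocity


def minimize(grad_fn, w0, steps, **kwargs):
    """Run `steps` updates starting from w0 with zero initial velocity."""
    w, v = w0, 0.0
    for _ in range(steps):
        w, v = sgd_step(w, v, grad_fn, **kwargs)
    return w


def grad(w):
    # Gradient of the toy objective f(w) = w**2 (hypothetical example)
    return 2.0 * w


w_plain = minimize(grad, w0=5.0, steps=100, lr=0.01)
w_momentum = minimize(grad, w0=5.0, steps=100, lr=0.01, momentum=0.9)
print(abs(w_plain), abs(w_momentum))
```

On this toy problem the run with momentum=0.9 ends much closer to the minimum at 0 than plain SGD with the same learning rate, which is the usual motivation for turning momentum on.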

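3. Loss functions https://keras.io/zh/losses/#_1

model.compile(loss=losses.mean_squared_error, optimizer='sgd')

Here losses is the Keras losses module (for example, from tensorflow.keras import losses). The available losses are: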

mean_squared_error

mean_absolute_error

mean_absolute_percentage_error

mean_squared_logarithmic_error

hinge

categorical_hinge

logcosh

categorical_crossentropy

sparse_categorical_crossentropy

binary_crossentropy

kullback_leibler_divergence

poisson

cosine_proximity
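What a few of these losses compute can be written out in plain Python for one small batch. This is a sketch of the textbook formulas, not the Keras source: the sample numbers are made up, and Keras additionally clips predicted probabilities away from 0 and 1 before taking logarithms.

```python
import math


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_crossentropy(y_true, y_pred):
    # y_true holds 0/1 labels; y_pred holds predicted probabilities of class 1
    return -sum(t * math.log(p) + (1 - t) * math.log(1 - p)
                for t, p in zip(y_true, y_pred)) / len(y_true)


def sparse_categorical_crossentropy(labels, probs):
    # labels are integer class ids; probs[i] is the probability vector for sample i
    return -sum(math.log(p[k]) for k, p in zip(labels, probs)) / len(labels)


mse = mean_squared_error([1.0, 2.0], [1.5, 2.5])
mae = mean_absolute_error([1.0, 2.0], [1.5, 2.5])
bce = binary_crossentropy([1, 0], [0.5, 0.5])
scce = sparse_categorical_crossentropy([0, 1], [[0.5, 0.5], [0.25, 0.75]])
print(mse, mae, bce, scce)
```

The sparse variant takes integer labels directly, which is why code that feeds integer class labels (as Fashion-MNIST provides) uses sparse_categorical_crossentropy rather than categorical_crossentropy with one-hot labels.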

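4. Miscellaneous

(1) In JupyterLab, after running %config IPCompleter.greedy=True (a single =; == does not set the option), the Tab key lists an object's members, or completes the name automatically when there is only one match.

(2) A callback can stop a model automatically during training once a given condition is met; see https://keras.io/zh/callbacks/ for details.

(3) If you want to bring input images into a uniform format, ImageDataGenerator is very useful; see https://keras.io/zh/preprocessing/image/#flow_from_directory for details.

(4) Is tuning hyperparameters with hand-written loops too tedious? kerastuner can help with the search.

(5) model.summary() prints the network structure, where model is the model you have defined.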

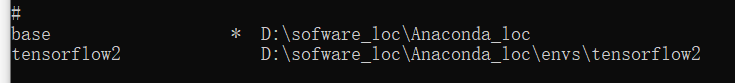

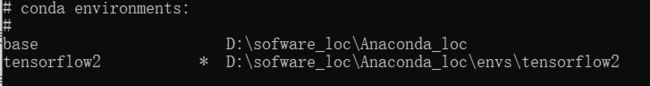

(6)如何在anaconda指定环境中安装第三方库,i)conda info -e 查看有哪些环境,带星号的为当前环境(图1);ii)使用conda activate tensorflow2激活tensorflow环境,tensorflow2是我自己建的环境,你可以把它换成你想激活的就ok(图2);iii)然后就是conda install了

图1

图2
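For item (5), the parameter counts that summary() reports for fully connected layers can be checked by hand: a Dense layer with n inputs and m units has n * m weights plus m biases. The sketch below assumes the layer sizes of code example 1 (a 28x28 input flattened to 784 values, then Dense(128) and Dense(10)); the helper name dense_params is hypothetical.

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_inputs * n_units + n_units


flatten_out = 28 * 28                      # Flatten has no trainable parameters
hidden = dense_params(flatten_out, 128)    # first Dense layer
output = dense_params(128, 10)             # output Dense layer
total = hidden + output
print(hidden, output, total)
```

If the totals printed by summary() differ from a hand count like this, the input shape or a layer size is usually not what you intended.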

All of the code examples below were run in JupyterLab.

Code example 1:

    # Enable greedy Tab completion (list members, or complete when only one matches)
    %config IPCompleter.greedy=True

    # Import the modules
    import tensorflow as tf  # TensorFlow 2.1 was used here
    from tensorflow import keras
    import matplotlib.pyplot as plt
    import numpy as np

    # Define a callback that stops training once the loss drops below 0.3
    class myCallBacks(tf.keras.callbacks.Callback):
        def on_epoch_end(self, epoch, logs=None):
            loss = (logs or {}).get('loss')
            if loss is not None and loss < 0.3:
                print("\nTraining End!")
                self.model.stop_training = True

    # Load the data
    fashion_mnist = keras.datasets.fashion_mnist
    (train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

    # Scale the pixel values to the range [0, 1]
    train_images = train_images / 255
    test_images = test_images / 255

    # Build a three-layer model
    model = keras.Sequential()
    model.add(keras.layers.Flatten(input_shape=(28, 28)))
    model.add(keras.layers.Dense(128, activation=tf.nn.leaky_relu))
    model.add(keras.layers.Dense(10, activation=tf.nn.softmax))

    callbacks = myCallBacks()  # create a callback instance
    model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
    model.fit(train_images, train_labels, epochs=10, callbacks=[callbacks])

    # Evaluate the model on the test set
    model.evaluate(test_images, test_labels)

    # test_images is already scaled, so it must not be divided by 255 again
    # np.argmax(model.predict(test_images[1:2]))
    # model.summary()
    # plt.imshow(train_images[10])
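The commented-out predict line picks the most likely class by taking np.argmax over the model's 10 softmax outputs. The same step in plain Python looks roughly like this; the scores are made-up numbers standing in for real model output, and the helper names are illustrative assumptions.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


# Made-up scores for the 10 Fashion-MNIST classes (not real model output)
scores = [0.1, 2.3, -1.0, 0.5, 0.0, 4.2, 1.1, -0.3, 0.9, 0.2]
probs = softmax(scores)
predicted_class = argmax(probs)
print(predicted_class, sum(probs))
```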

Code example 2:

    %config IPCompleter.greedy=True

    from tensorflow.keras.preprocessing.image import ImageDataGenerator
    from tensorflow import keras
    import tensorflow as tf

    # Create two data generators that rescale pixel values to [0, 1]
    train_datagen = ImageDataGenerator(rescale=1/255)
    test_datagen = ImageDataGenerator(rescale=1/255)

    # Point the training generator at the training-data folder
    train_generator = train_datagen.flow_from_directory(
        'D:/StudyFile/horse-or-human/horse-or-human',
        target_size=(300, 300),
        batch_size=32,
        class_mode='binary')

    # Point the test generator at the validation-data folder
    test_generator = test_datagen.flow_from_directory(
        'D:/StudyFile/horse-or-human/validation-horse-or-human',
        target_size=(300, 300),
        batch_size=32,
        class_mode='binary')

    # Build the model
    from kerastuner.tuners import Hyperband
    from kerastuner.engine.hyperparameters import HyperParameters

    hp = HyperParameters()

    def tune_model(hp):
        model = keras.Sequential([
            # The filter count of the first layer is the hyperparameter being tuned
            keras.layers.Conv2D(hp.Choice('filters_layer0', values=[16, 64], default=16), (3, 3), activation='relu', input_shape=(300, 300, 3)),
            keras.layers.MaxPooling2D(2, 2),
            keras.layers.Conv2D(32, (3, 3), activation='relu'),
            keras.layers.MaxPooling2D(2, 2),
            keras.layers.Conv2D(64, (3, 3), activation='relu'),
            keras.layers.MaxPooling2D(2, 2),
            keras.layers.Flatten(),
            keras.layers.Dense(512, activation='relu'),
            keras.layers.Dense(1, activation='sigmoid')])
        model.compile(
            optimizer=keras.optimizers.RMSprop(learning_rate=0.001),
            loss=keras.losses.binary_crossentropy,
            metrics=['acc'])
        return model

    # model.summary()  # only valid where a model object exists, e.g. inside tune_model

    tuner = Hyperband(
        tune_model,
        objective='val_acc',
        max_epochs=20,
        directory='20201030-horse-or-human',
        hyperparameters=hp,
        project_name='20201030'
    )

    tuner.search(
        train_generator,
        epochs=15,
        verbose=1,
        validation_data=test_generator,
        validation_steps=8)

    # Train the model (needs a model object, e.g. tuner.get_best_models(num_models=1)[0])
    # history = model.fit(
    #     train_generator, epochs=15,
    #     verbose=1,
    #     validation_data=test_generator,
    #     validation_steps=8)
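It helps to know how large the feature maps are by the time they reach Flatten in the network above. The sketch below traces the spatial size of a 300x300 input through three rounds of 3x3 convolution and 2x2 max pooling, assuming the Keras defaults of 'valid' padding and stride 1 for Conv2D and a stride equal to the pool size for MaxPooling2D. The helper names are hypothetical, and the filter count of the last convolution is left as an argument.

```python
def conv_out(size, kernel=3):
    # 'valid' padding with stride 1 trims kernel - 1 pixels
    return size - kernel + 1


def pool_out(size, pool=2):
    # Pool size 2 with stride 2 and 'valid' padding halves the size, rounding down
    return size // pool


def trace_sizes(size, n_blocks=3):
    """Spatial size after each Conv2D and each MaxPooling2D layer."""
    sizes = []
    for _ in range(n_blocks):
        size = conv_out(size)
        sizes.append(size)
        size = pool_out(size)
        sizes.append(size)
    return sizes


def flattened_length(final_size, last_filters):
    # Flatten turns a (height, width, channels) map into one vector
    return final_size * final_size * last_filters


sizes = trace_sizes(300)
print(sizes)
print(flattened_length(sizes[-1], last_filters=64))
```

Most of the network's parameters sit between this flattened vector and Dense(512), so the final spatial size matters far more for model size than the filter count being tuned in the first layer.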