Some SaltStack operations for automated operations management


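- Introduction to SaltStack
  - SaltStack communication mechanism
  - Installing and configuring SaltStack
- SaltStack remote execution
  - Running remote shell commands
  - Writing remote execution modules
- Configuration management
- grains
  - Querying information
  - Custom grains
    - Defined in /etc/salt/minion
    - Defined in /etc/salt/grains
    - Creating the _grains directory on the salt-master
  - Matching with grains
    - Matching in the top file
- Jinja templates
  - How Jinja templates are used
- pillar
  - Custom pillar items
  - Matching pillar data
- Configuring keepalived
- Configuring zabbix monitoring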

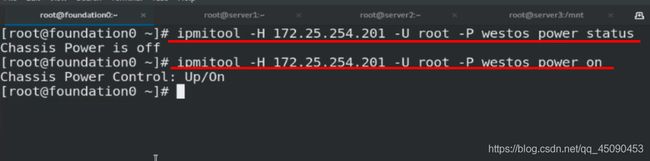

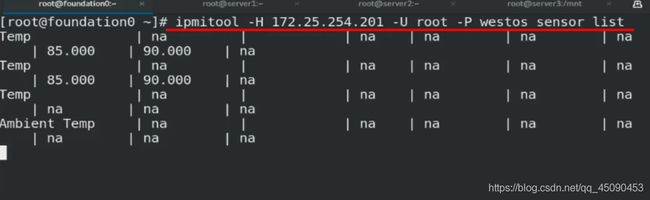

- IPMI

A comparison of operations management tools: Puppet, Chef, Ansible, SaltStack, and Fabric.

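Introduction to SaltStack

- SaltStack is a configuration management system that keeps remote nodes in a predefined state.

- SaltStack is a distributed remote execution system for running commands and querying data on remote nodes.

- SaltStack helps operations staff work more efficiently and standardize service configuration and operations.

- Core features of Salt:

  - Commands are sent to remote systems in parallel rather than serially.

  - It uses a secure, encrypted protocol.

  - It uses the smallest and fastest network payloads possible.

  - It provides a simple programming interface.

- Salt also introduces finer-grained targeting for remote execution, so systems can be selected not only by hostname but also by system properties.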

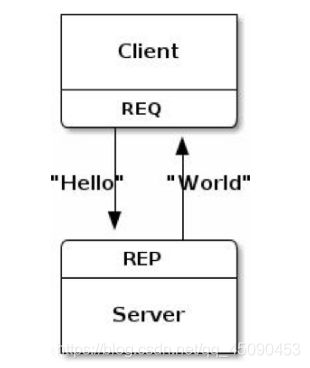

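SaltStack communication mechanism

- SaltStack uses a client/server model. Minions and the master communicate over ZeroMQ. The first network service on the master is the ZeroMQ PUB system, which listens on port 4505 by default and publishes commands to the minions.

- The second network service run by the Salt master is the ZeroMQ REP system, which listens on port 4506 by default and receives the results returned by the minions.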
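A quick way to confirm that both master ports are reachable from a minion is a plain TCP connection test. The sketch below is only an illustration using the Python standard library; the helper name `port_open` is hypothetical, and the address is the master IP that appears later in this walkthrough.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    master = "172.25.0.1"  # master address used in the minion config below
    for port in (4505, 4506):  # default publish and return ports
        print(port, "open" if port_open(master, port) else "closed")
```

If either port reports closed, a firewall rule on the master is a likely place to look first.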

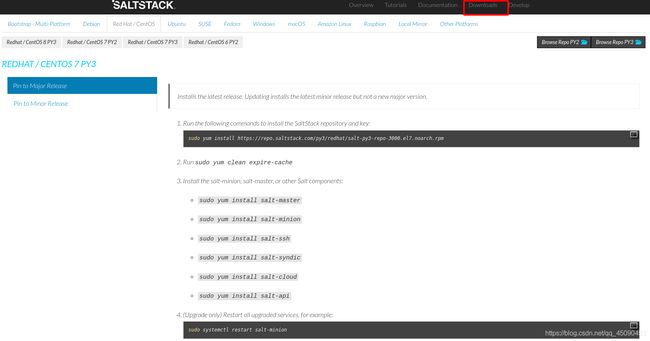

Installing and configuring SaltStack

[root@server1 ~]# yum install https://repo.saltstack.com/yum/redhat/salt-repo-3000.el7.noarch.rpm

[root@server1 ~]# yum list salt-*

[root@server1 ~]# yum install -y salt-master.noarch

[root@server1 ~]# systemctl enable --now salt-master.service

[root@server1 ~]# netstat -antlp

Aliyun mirror

yum install https://mirrors.aliyun.com/saltstack/yum/redhat/salt-repo-latest-2.el7.noarch.rpm

sed -i "s/repo.saltstack.com/mirrors.aliyun.com\/saltstack/g" /etc/yum.repos.d/salt-latest.repo

[root@server1 yum.repos.d]# cat salt-latest.repo

[salt-latest]

name=SaltStack Latest Release Channel for RHEL/Centos $releasever

baseurl=https://mirrors.aliyun.com/saltstack/yum/redhat/7/$basearch/latest

failovermethod=priority

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/saltstack-signing-key

On the minions

[root@server1 yum.repos.d]# scp salt-3000.repo server2:/etc/yum.repos.d/

[root@server1 yum.repos.d]# scp salt-3000.repo server3:/etc/yum.repos.d/

[root@server2 yum.repos.d]# vim salt-3000.repo

[root@server3 yum.repos.d]# vim salt-3000.repo

gpgcheck=0

[root@server2 ~]# sed -i "s/repo.saltstack.com/mirrors.aliyun.com\/saltstack/g" /etc/yum.repos.d/salt-3000.repo

[root@server2 ~]# cat /etc/yum.repos.d/salt-3000.repo

[root@server2 ~]# yum install -y salt-minion.noarch

[root@server3 ~]# yum install -y salt-minion.noarch

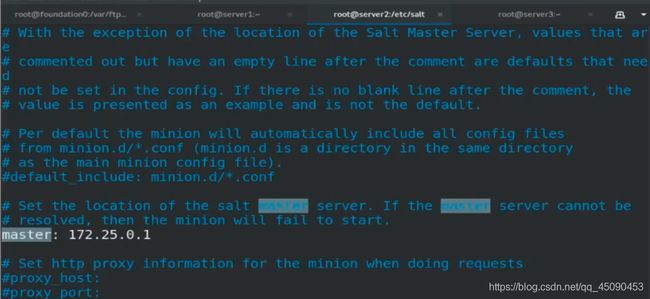

[root@server2 salt]# vim minion

master: 172.25.0.1

[root@server2 salt]# systemctl enable --now salt-minion.service

[root@server3 salt]# vim minion

master: 172.25.0.1

[root@server3 salt]# systemctl enable --now salt-minion.service

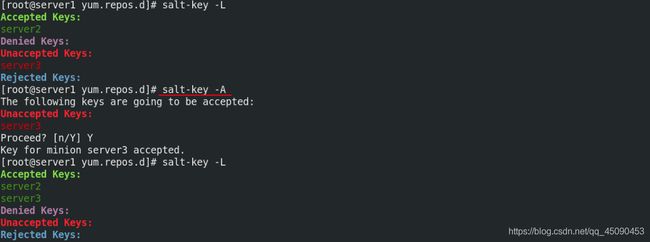

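On the master, accept the minion keys so that the minions can connect

[root@server1 yum.repos.d]# salt-key -A ## accept all pending minion keys so they can connect to the master

[root@server1 yum.repos.d]# salt-key -L ## list all keys and their status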

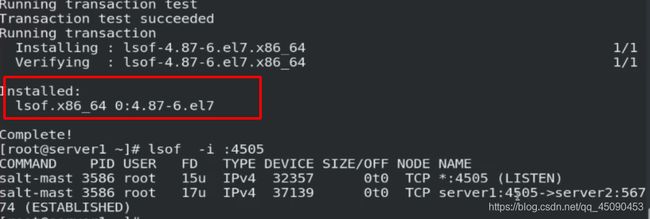

[root@server1 yum.repos.d]# netstat -antlp

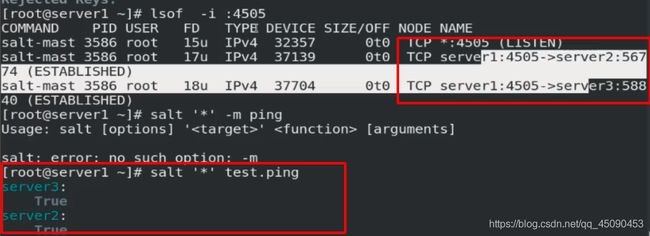

[root@server1 yum.repos.d]# lsof -i :4505

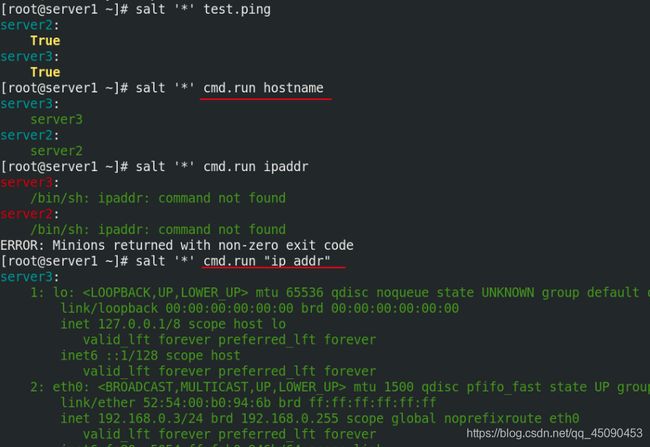

[root@server1 ~]# salt '*' test.ping

[root@server1 ~]# salt '*' cmd.run "ip addr"

[root@server1 ~]# salt '*' cmd.run hostname

[root@server1 salt]# cd /var/cache/salt/

[root@server1 salt]# ls

master

[root@server1 salt]# cd master/

[root@server1 master]# ls

jobs minions proc queues roots syndics tokens

[root@server1 master]# cd jobs/

[root@server1 jobs]# ls

43 5b 8f bb

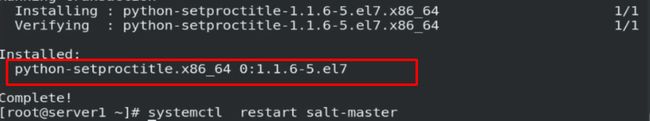

[root@server1 ~]# yum install -y python-setproctitle.x86_64

[root@server1 ~]# systemctl restart salt-master.service

[root@server1 ~]# ps ax # view the processes

[root@server2 salt]# ls

minion_id ## file in /etc/salt/ that stores the minion ID (the hostname by default)

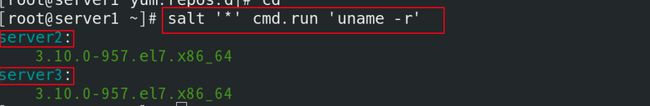

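SaltStack remote execution

Running remote shell commands

List of Salt's built-in execution modules.

- A Salt command has three main parts:

salt '<target>' <function> [arguments]

- target: selects which minions to run on. By default the target is matched against the minion ID as a glob.

  - salt '*' test.ping

- Targets can also be regular expressions:

  - salt -E 'server[1-3]' test.ping

- Targets can also be lists:

  - salt -L 'server2,server3' test.ping

- function: a function provided by a module. Salt ships with a large number of useful functions.

  - salt '*' cmd.run 'uname -a'

- arguments: separated by spaces: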

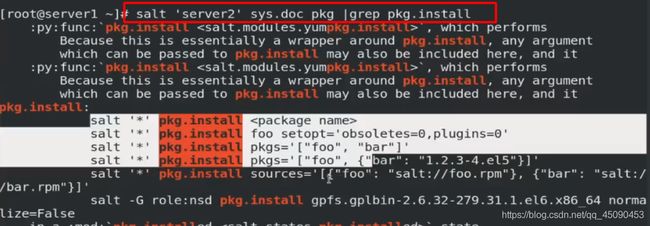

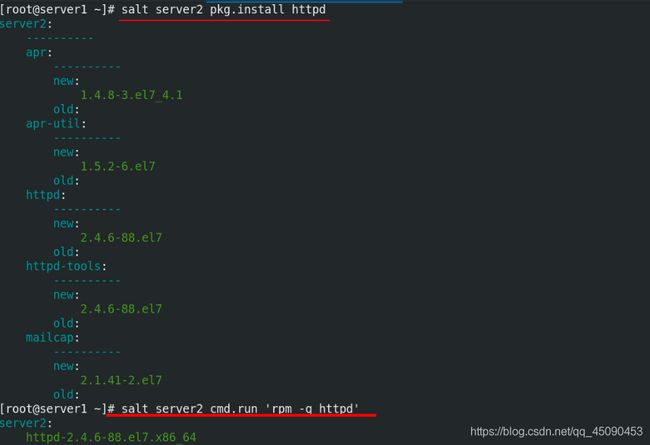

- salt 'server2' sys.doc pkg # view the module documentation

- salt 'server2' pkg.install httpd

- salt 'server2' pkg.remove httpd
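The three targeting styles above (glob, `-E` regular expression, `-L` list) can be imitated on a plain list of minion IDs. This is a simplified sketch, not Salt's own matcher: the function names are hypothetical, and it assumes the regular expression is anchored at the start of the ID.

```python
import fnmatch
import re


def match_glob(pattern, minion_ids):
    """Default targeting: shell-style glob against the minion ID."""
    return [m for m in minion_ids if fnmatch.fnmatchcase(m, pattern)]


def match_regex(pattern, minion_ids):
    """-E targeting: regular expression (assumed anchored at the start)."""
    rx = re.compile(pattern)
    return [m for m in minion_ids if rx.match(m)]


def match_list(csv, minion_ids):
    """-L targeting: comma-separated list of exact minion IDs."""
    wanted = set(csv.split(","))
    return [m for m in minion_ids if m in wanted]


if __name__ == "__main__":
    ids = ["server1", "server2", "server3"]
    print(match_glob("*", ids))
    print(match_regex("server[1-3]", ids))
    print(match_list("server2,server3", ids))
```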

[root@server1 ~]# vim index.html

[root@server1 ~]# salt-cp server2 index.html /var/www/html

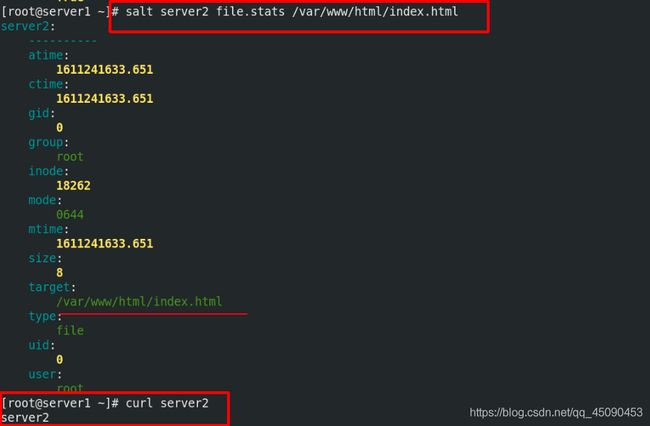

[root@server1 ~]# salt server2 file.stats /var/www/html/index.html

[root@server1 ~]# curl server2

server2

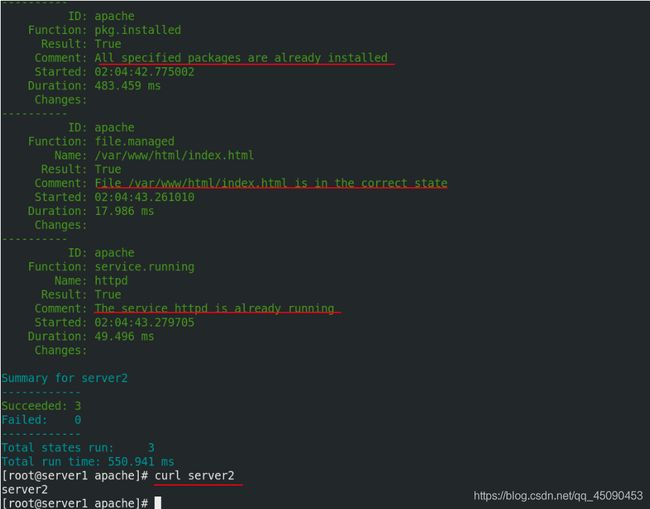

编写远程执行模块

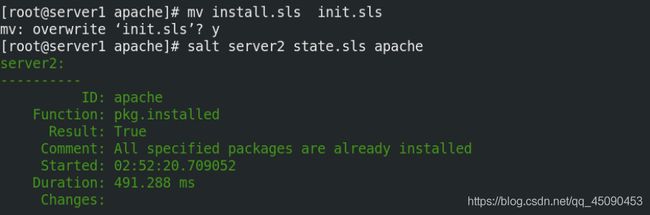

所有的文件以.sls结尾.不能使用tab键

在server2中部署apache

[root@server1 ~]# cd /srv/

[root@server1 srv]# mkdir salt

[root@server1 srv]# cd salt/

[root@server1 salt]# mkdir apache

[root@server1 salt]# mv ~/index.html apache/

[root@server1 salt]# cd apache/

[root@server1 apache]# vim install.sls

[root@server1 apache]# salt server2 state.sls apache.install

[root@server1 apache]# mkdir files

[root@server1 apache]# mv index.html files/

[root@server1 apache]# md5sum files/index.html

01bc6b572ba171d4d3bd89abe9cb9a4c files/index.html

[root@server2 salt]# tree .

[root@server2 salt]# pwd

/var/cache/salt

[root@server2 salt]# cd minion/files/base/apache/

[root@server2 apache]# ls

files install.sls

[root@server2 apache]# cd files/

[root@server2 files]# ls

index.html

[root@server2 files]# md5sum index.html ## the md5 checksum matches the copy on the master

01bc6b572ba171d4d3bd89abe9cb9a4c index.html
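The same comparison that `md5sum` makes between the master's copy and the minion's cached copy can be scripted. The following is a minimal standard-library sketch; `md5sum` and `same_content` are hypothetical helper names, not Salt functions.

```python
import hashlib


def md5sum(path, chunk_size=65536):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_content(path_a, path_b):
    """True when both files have identical MD5 digests."""
    return md5sum(path_a) == md5sum(path_b)
```

Equal digests indicate that the file cached under /var/cache/salt/minion is the one distributed from /srv/salt.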

apache:

pkg.installed:

- pkgs:

- httpd

- php

- php-mysql

file.managed:

- source: salt://apache/files/httpd.conf

- name: /etc/httpd/conf/httpd.conf

service.running:

- name: httpd

- enable: true

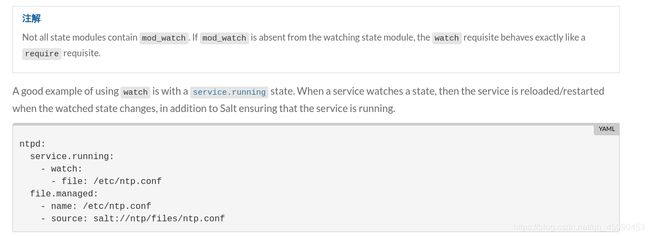

- watch:

- file: apache

#/etc/httpd/conf/httpd.conf:

# file.managed:

# - source: salt://apache/files/httpd.conf

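Configuration management

- The core of the Salt state system is the SLS, or SaLt State, file.

- An SLS file describes the state a system should be in and holds that data in a simple format. This is often called configuration management.

- Naming SLS files:

  - SLS files end with the .sls suffix, but the suffix is omitted when the file is referenced.

  - Using subdirectories to organize them is a good choice.

  - init.sls in a subdirectory is the entry file and stands for the subdirectory itself, so apache/init.sls is referenced as apache.

  - If both apache.sls and apache/init.sls exist, apache/init.sls is ignored and apache.sls is used to represent apache.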
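The naming rules above amount to a small lookup. The sketch below is an illustration only (the function `resolve_sls` is hypothetical, not part of Salt): it maps a dotted SLS name to a path relative to the file root and prefers `name.sls` over `name/init.sls`.

```python
def resolve_sls(name, available):
    """Map an SLS name such as 'apache.install' to a relative file path.

    `available` is the set of .sls paths that exist under the file root.
    Returns None when no file matches.
    """
    base = name.replace(".", "/")
    # apache.sls takes precedence over apache/init.sls when both exist.
    for candidate in (base + ".sls", base + "/init.sls"):
        if candidate in available:
            return candidate
    return None


if __name__ == "__main__":
    files = {"apache/init.sls", "apache/install.sls"}
    print(resolve_sls("apache", files))
    print(resolve_sls("apache.install", files))
```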

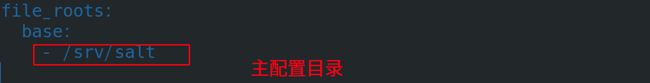

On the master (server1)

vim /etc/salt/master

systemctl restart salt-master

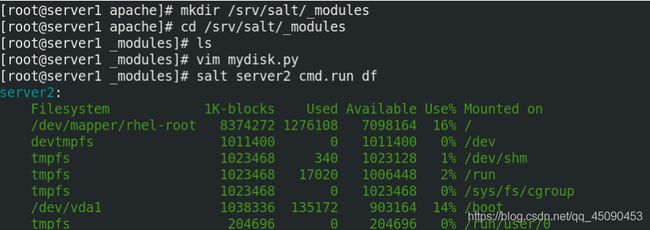

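Creating a module

[root@server1 apache]# mkdir /srv/salt/_modules ## create the module directory

[root@server1 apache]# cd /srv/salt/_modules

[root@server1 _modules]# ls

[root@server1 _modules]# vim mydisk.py ## write the module file

def df():
    # __salt__ is injected by the Salt loader and exposes the other execution modules
    return __salt__['cmd.run']('df -h')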

[root@server1 _modules]# salt server2 cmd.run df

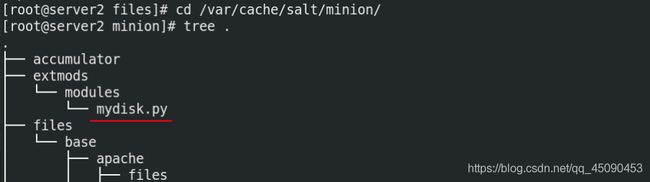

[root@server1 _modules]# salt server2 saltutil.sync_modules ## sync the module to the minion

server2:

- modules.mydisk

[root@server2 files]# cd /var/cache/salt/minion/

[root@server2 minion]# tree .

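grains

- Grains are a SaltStack component whose data is stored on the minion.

- When salt-minion starts, it stores the data it collects statically in grains; the data is refreshed only when the minion restarts.

- Because grains are static data, changing them frequently is not recommended.

- Use cases:

  - Querying information; grains can serve as a CMDB.

  - Matching minions in a target.

  - Use in the state system for configuration management.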

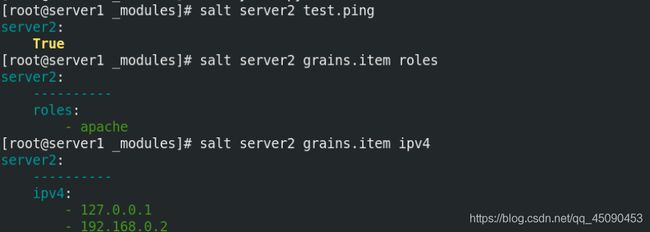

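Querying information

Used to query information about a minion such as its IP address and FQDN.

Grains available by default:

salt '*' grains.ls ## list all keys

salt '*' grains.items ## list all keys and values

salt server2 grains.item ipv4 ## show the value of one key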

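Custom grains

Defined in /etc/salt/minion

Edit the main configuration file of the minion service on the minion; after a restart the new grains can be queried from the master.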

[root@server2 minion]# cd /etc/salt/

[root@server2 salt]# vim minion

grains:
  roles:
    - apache

Restart salt-minion, otherwise the data is not updated

[root@server2 salt]# systemctl restart salt-minion

[root@server1 _modules]# salt server2 test.ping

[root@server1 _modules]# salt server2 grains.item ipv4

[root@server1 _modules]# salt server2 grains.item roles

Defined in /etc/salt/grains

Write the /etc/salt/grains file on the minion (server3), then sync the grains from the master

[root@server3 salt]# pwd

/etc/salt

[root@server3 salt]# vim grains

roles:
  - nginx

[root@server1 ~]# salt server3 saltutil.sync_grains ## sync the data (run on the master)

[root@server1 _modules]# salt '*' grains.item roles

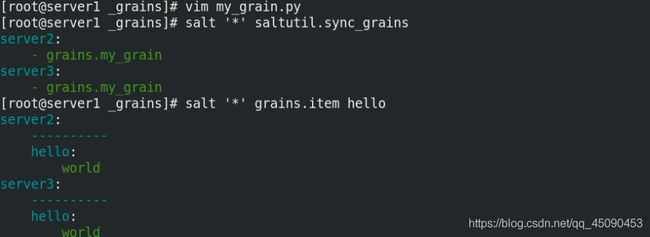

Creating the _grains directory on the salt-master

Create a _grains directory on the master, write the grain file there, and sync it to the minions

[root@server1 _modules]# mkdir /srv/salt/_grains

[root@server1 _modules]# cd /srv/salt/_grains

[root@server1 _grains]# vim my_grain.py

def my_grain():
    grains = {}
    grains['salt'] = 'stack'
    grains['hello'] = 'world'
    return grains

[root@server1 _grains]# salt '*' saltutil.sync_grains

[root@server1 _grains]# salt '*' grains.item hello

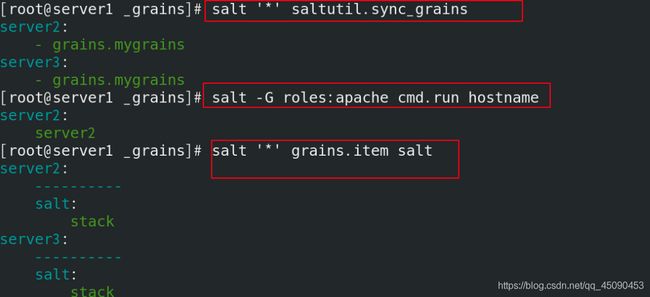

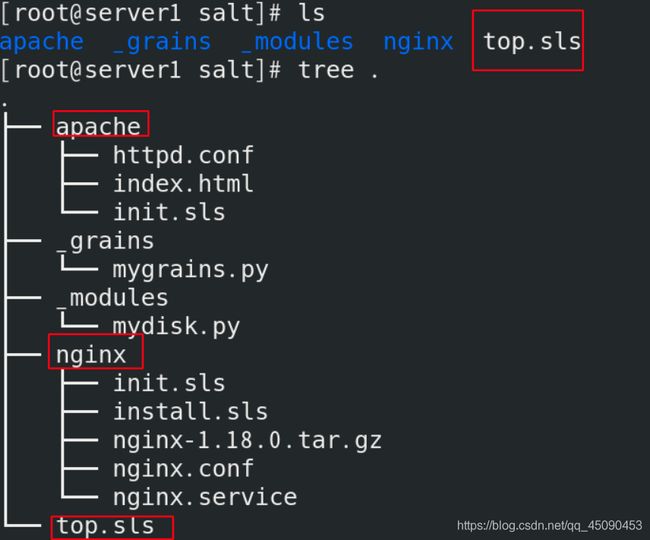

Matching with grains

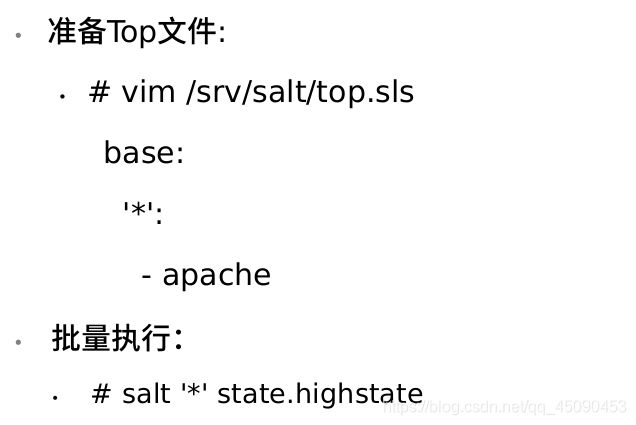

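Matching in the top file

Grains can be used in top.sls only after their definitions have taken effect.

Once the grains are defined, match on them in the top file so that server2 applies apache and server3 applies nginx.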

[root@server1 files]# scp server3:/usr/local/nginx/conf/nginx.conf .

[root@server1 salt]# cat top.sls

base:
  'roles:apache':
    - match: grain
    - apache
  'roles:nginx':
    - match: grain
    - nginx
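To make the effect of `match: grain` concrete, the sketch below evaluates the top file above against a minion's grains. It is a deliberately simplified illustration (exact `key:value` comparison only, no globbing or nested keys), and `grain_match`, `states_for` and `TOP` are hypothetical names.

```python
# The 'base' section of top.sls above, written as a Python dict.
TOP = {
    "roles:apache": [{"match": "grain"}, "apache"],
    "roles:nginx": [{"match": "grain"}, "nginx"],
}


def grain_match(expr, grains):
    """True when the 'key:value' expression matches the minion's grains."""
    key, _, wanted = expr.partition(":")
    value = grains.get(key)
    if isinstance(value, (list, tuple)):
        # List-valued grains such as roles match if any element matches.
        return wanted in [str(v) for v in value]
    return str(value) == wanted


def states_for(top, grains):
    """Collect the state names assigned to a minion with these grains."""
    states = []
    for expr, entries in top.items():
        if grain_match(expr, grains):
            states.extend(e for e in entries if isinstance(e, str))
    return states


if __name__ == "__main__":
    print(states_for(TOP, {"roles": ["apache"]}))
    print(states_for(TOP, {"roles": ["nginx"]}))
```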

[root@server1 apache]# cat init.sls

apache:
  pkg.installed:
    - pkgs:
      - httpd
      - php
      - php-mysql
  file.managed:
    - source: salt://apache/httpd.conf
    - name: /etc/httpd/conf/httpd.conf
  service.running:
    - name: httpd
    - enable: true
    - watch:
      - file: apache

/var/www/html/index.html:
  file.managed:
    - source: salt://apache/index.html

[root@server1 nginx]# cat init.sls

include:
  - nginx.install   # include install.sls from the nginx directory

/usr/local/nginx/conf/nginx.conf:   # destination file path
  file.managed:
    - source: salt://nginx/nginx.conf

nginx-service:
  user.present:
    - name: nginx
    - shell: /sbin/nologin
    - home: /usr/local/nginx
    - createhome: false
  file.managed:
    - source: salt://nginx/nginx.service
    - name: /usr/lib/systemd/system/nginx.service
  service.running:
    - name: nginx
    - enable: true
    - reload: true
    - watch:
      - file: /usr/local/nginx/conf/nginx.conf

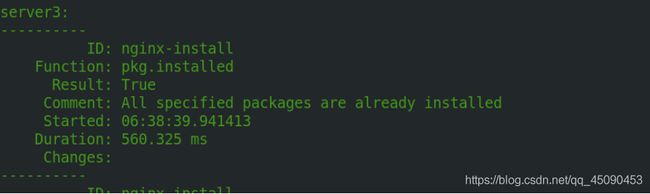

[root@server1 nginx]# cat install.sls

nginx-install:
  pkg.installed:
    - pkgs:
      - gcc
      - pcre-devel
      - openssl-devel
  file.managed:
    - source: salt://nginx/nginx-1.18.0.tar.gz
    - name: /mnt/nginx-1.18.0.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf nginx-1.18.0.tar.gz && cd nginx-1.18.0 && ./configure --prefix=/usr/local/nginx --with-http_ssl_module &> /dev/null && make &> /dev/null && make install &> /dev/null
    - creates: /usr/local/nginx
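The `creates` argument is what keeps the build from running again on every highstate: the command is skipped when the named path already exists. The sketch below imitates that guard with the standard library; `run_unless_created` is a hypothetical helper, not a Salt API.

```python
import os
import subprocess


def run_unless_created(command, creates):
    """Run a shell command only if the path named by `creates` is absent.

    Returns True when the command ran, False when it was skipped.
    """
    if os.path.exists(creates):
        return False
    subprocess.run(command, shell=True, check=True)
    return True
```

With the state above, the first run compiles nginx into /usr/local/nginx and later runs find that directory and skip the build.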

[root@server1 nginx]# cat nginx.service

[Unit]

Description=The NGINX HTTP and reverse proxy server

After=syslog.target network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=/usr/local/nginx/logs/nginx.pid

ExecStartPre=/usr/local/nginx/sbin/nginx -t

ExecStart=/usr/local/nginx/sbin/nginx

ExecReload=/usr/local/nginx/sbin/nginx -s reload

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=true

[Install]

WantedBy=multi-user.target

[root@server1 nginx]# salt '*' state.highstate

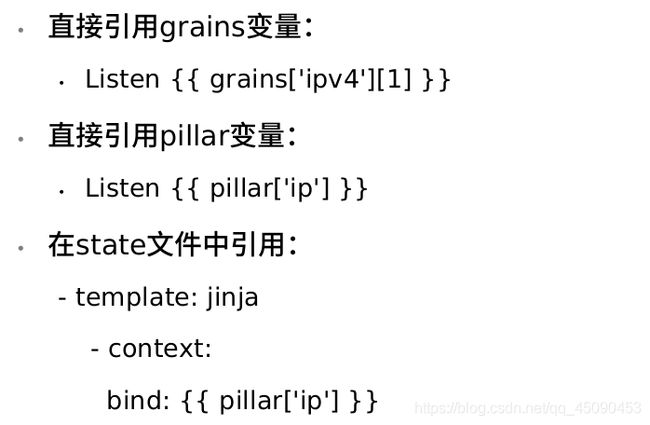

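Jinja templates

SALT.RENDERERS.JINJA.

- Jinja is a Python-based template engine, and it can be used directly in SLS files.

- Jinja templates make it possible to define separate variables for different servers.

- There are two kinds of delimiters: {% … %} and {{ … }}. The former runs statements such as for loops or assignments; the latter prints the result of an expression into the template.

How Jinja templates are used

The most basic use of Jinja is to wrap parts of a state in control structures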

[root@server1 salt]# vim test.sls

/mnt/testfile:
  file.append:
    {% if grains['fqdn'] == 'server2' %}
    - text: server2
    {% elif grains['fqdn'] == 'server3' %}
    - text: server3
    {% endif %}

[root@server1 salt]# salt '*' state.sls test

[root@server2 mnt]# cat /mnt/testfile

server2

[root@server3 ~]# cat /mnt/testfile

server3
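Because the template is rendered separately for each minion, every minion ends up with different state data. The sketch below spells out in plain Python what the if/elif in test.sls selects; it does not use Jinja itself, and `render_test_state` is a hypothetical name.

```python
def render_test_state(grains):
    """Return the state data test.sls yields for one minion's grains."""
    args = []
    if grains["fqdn"] == "server2":
        args.append({"text": "server2"})
    elif grains["fqdn"] == "server3":
        args.append({"text": "server3"})
    # Any other minion gets file.append with no text argument.
    return {"/mnt/testfile": {"file.append": args}}


if __name__ == "__main__":
    print(render_test_state({"fqdn": "server2"}))
    print(render_test_state({"fqdn": "server3"}))
```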

Using Jinja in regular files

[root@server1 apache]# vim init.sls

apache:
  file.managed:
    - source: salt://apache/files/httpd.conf
    - name: /etc/httpd/conf/httpd.conf
    - template: jinja
    - context:
        port: 80
        bind: {{ grains['ipv4'][-1] }}

/var/www/html/index.html:
  file.managed:
    - source: salt://apache/files/index.html
    - template: jinja
    - context:
        NAME: {{ grains['ipv4'][-1] }}

[root@server1 apache]# vim files/httpd.conf

Listen {{ bind }}:{{ port }} ## bind and port come from the context in init.sls; grains can also be referenced directly in the template

[root@server1 apache]# vim files/index.html

{{ grains['os'] }} - {{ grains['fqdn'] }}

{{ NAME }}

[root@server1 apache]# salt server2 state.sls apache

[root@server2 html]# cat index.html

RedHat - server2

192.168.0.2
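The expression `grains['ipv4'][-1]` takes the last address in the minion's ipv4 list. A small sketch of how that value ends up in the Listen line, assuming a grains dictionary whose ipv4 list starts with the loopback address (an assumption for illustration) and using a hypothetical helper name:

```python
def listen_directive(grains, port=80):
    """Build the httpd Listen line from the last IPv4 address in grains."""
    bind = grains["ipv4"][-1]  # [-1] selects the last list element
    return f"Listen {bind}:{port}"


if __name__ == "__main__":
    print(listen_directive({"ipv4": ["127.0.0.1", "192.168.0.2"]}))
```

On hosts with several interfaces the last entry may not be the intended one, so pinning the address in pillar, as done later in this walkthrough, can be more predictable.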

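pillar

Storing static data in the pillar.

- Like grains, pillar is a data system, but it is used in different scenarios.

- Pillar data is stored dynamically on the master. It mainly holds private or sensitive information (such as usernames and passwords), and each piece of data can be made visible only to specific minions.

- Pillar is better suited to configuration management.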

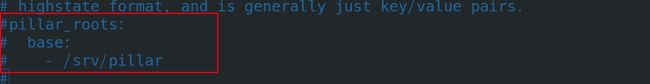

Defining the pillar base directory

On the master

vim /etc/salt/master

pillar_roots:
  base:
    - /srv/pillar

mkdir /srv/pillar

systemctl restart salt-master ## restart the salt-master service

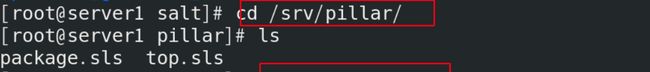

自定义pillar项

vim /srv/pillar/top.sls

base:

'*':

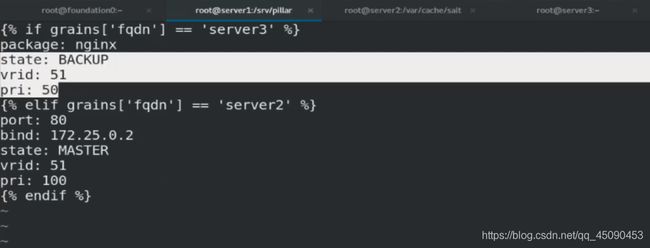

- packages

vim /srv/pillar/package.sls

{

% if grains[‘fqdn’] == ‘server3’ %}

package: nginx

{

% elif grains[‘fqdn’] == ‘server2’ %}

port: 80

bind: 172.25.10.2

{

% endif %}

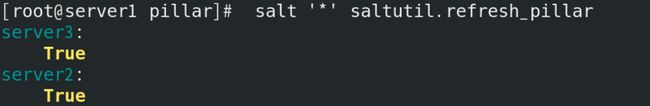

Refresh the pillar data:

salt '*' saltutil.refresh_pillar

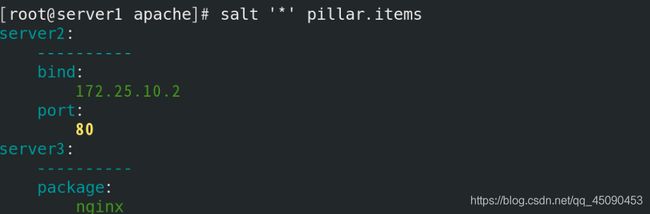

Query the pillar data:

salt '*' pillar.items

salt '*' pillar.item roles

Matching pillar data

Matching on the command line

salt -I 'package:nginx' test.ping
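Pillar targeting with `-I` selects the minions whose compiled pillar contains the given key and value. The sketch below mirrors the package.sls shown earlier as a Python function and filters minions the same way. It is a simplified illustration with hypothetical names and exact-match comparison only.

```python
def pillar_for(grains):
    """Per-minion pillar data, mirroring the if/elif in package.sls."""
    if grains["fqdn"] == "server3":
        return {"package": "nginx"}
    if grains["fqdn"] == "server2":
        return {"port": 80, "bind": "172.25.10.2"}
    return {}


def pillar_match(expr, pillar):
    """True when the 'key:value' expression matches the pillar data."""
    key, _, wanted = expr.partition(":")
    return key in pillar and str(pillar[key]) == wanted


def target(expr, minions):
    """Return the minions whose pillar matches the expression."""
    return [m for m in minions if pillar_match(expr, pillar_for({"fqdn": m}))]


if __name__ == "__main__":
    print(target("package:nginx", ["server2", "server3"]))
```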

Use in the state system

vim /etc/httpd/conf/httpd.conf

{% from 'apache/lib.sls' import port %} {# Jinja import syntax #}

Listen {{ bind }}:{{ port }}

[root@server1 pillar]# salt server2 state.sls apache

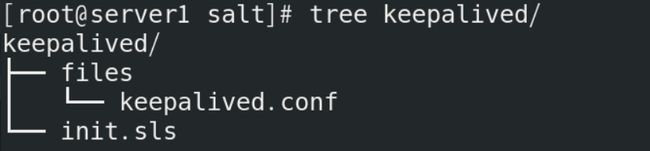

Configuring keepalived

[root@server1 keepalived]# cat init.sls

kp-install:
  pkg.installed:
    - name: keepalived
  file.managed:
    - name: /etc/keepalived/keepalived.conf
    - source: salt://keepalived/files/keepalived.conf
    - template: jinja
    - context:
        STATE: {{ pillar['state'] }}
        VRID: {{ pillar['vrid'] }}
        PRI: {{ pillar['pri'] }}
  service.running:
    - name: keepalived
    - enable: true
    - reload: true
    - watch:
      - file: kp-install

[root@server1 keepalived]# cat files/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    #vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRI }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.10.100
    }
}
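The template relies on the pillar keys `state`, `vrid` and `pri` being defined for each minion. As a rough illustration of how the rendered files differ per host, the sketch below fills a shortened copy of the vrrp_instance block using `str.format` as a stand-in for Jinja. The pillar values (MASTER/BACKUP, 51, 100/50) are hypothetical examples, not taken from the original setup.

```python
# Shortened copy of the vrrp_instance block; doubled braces are literal
# braces for str.format.
VRRP_TEMPLATE = """vrrp_instance VI_1 {{
    state {state}
    interface eth0
    virtual_router_id {vrid}
    priority {pri}
}}"""

# Hypothetical per-minion pillar data.
PILLAR = {
    "server2": {"state": "MASTER", "vrid": 51, "pri": 100},
    "server3": {"state": "BACKUP", "vrid": 51, "pri": 50},
}


def render_vrrp(pillar):
    """Fill the template with one minion's pillar values."""
    return VRRP_TEMPLATE.format(**pillar)


if __name__ == "__main__":
    for minion, data in PILLAR.items():
        print(f"# {minion}")
        print(render_vrrp(data))
```

Both nodes share the same virtual_router_id, and the node with the higher priority holds the virtual IP while it is running.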

salt '*' state.sls keepalived

Alternatively, add keepalived to the top file and apply everything together:

[root@server1 salt]# salt '*' state.highstate

[root@server1 salt]# cat top.sls

base:
  'roles:apache':
    - match: grain
    - apache
    - keepalived
  'roles:nginx':
    - match: grain
    - nginx
    - keepalived

package.sls

[root@server2 keepalived]# systemctl stop keepalived


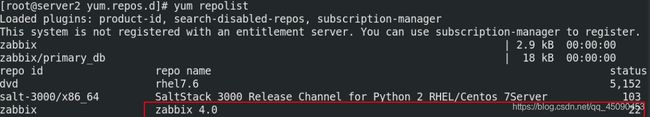

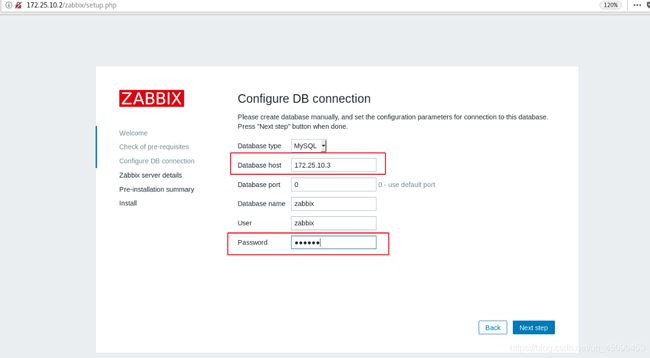

Configuring zabbix monitoring

Configure the database and import the data into it

Set up the zabbix repository

[root@server1 apache]# cd /srv/salt/

[root@server1 salt]# mkdir zabbix-server

[root@server1 salt]# cd zabbix-server/

[root@server1 zabbix-server]# ls

[root@server1 zabbix-server]# vim init.sls

[root@server1 zabbix-server]# mkdir files

[root@server1 zabbix-server]# cd files/

[root@server1 files]# scp server2:/etc/zabbix/zabbix_server.conf .

[root@server1 files]# vim zabbix_server.conf

DBHost=192.168.0.3

DBPassword=westos

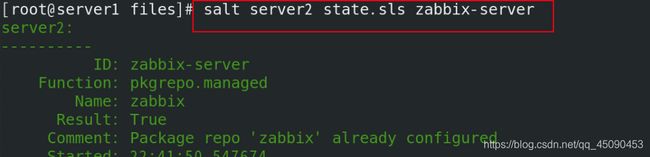

[root@server1 zabbix-server]# salt server2 state.sls zabbix-server

Result:

[root@server2 zabbix]# mysql -h 192.168.0.3 -u zabbix -p

MariaDB [(none)]> use zabbix

MariaDB [zabbix]> show tables;

[root@server1 zabbix-server]# cat init.sls

zabbix-server:
  pkgrepo.managed:
    - name: zabbix
    - humanname: zabbix 4.0
    - baseurl: http://172.25.254.250/pub/docs/zabbix/4.0
    - gpgcheck: 0
  pkg.installed:
    - pkgs:
      - zabbix-server-mysql
      - zabbix-agent
      - zabbix-web-mysql
  file.managed:
    - name: /etc/zabbix/zabbix_server.conf
    - source: salt://zabbix-server/files/zabbix_server.conf
  service.running:
    - name: zabbix-server
    - enable: true
    - watch:
      - file: zabbix-server

zabbix-agent:
  service.running

zabbix-web:
  file.managed:
    - name: /etc/httpd/conf.d/zabbix.conf
    - source: salt://zabbix-server/files/zabbix.conf
  service.running:
    - name: httpd
    - enable: true
    - watch:
      - file: zabbix-web

/etc/zabbix/web/zabbix.conf.php:
  file.managed:
    - source: salt://zabbix-server/files/zabbix.conf.php

[root@server1 mysql]# cat init.sls

mysql-install:
  pkg.installed:
    - pkgs:
      - mariadb-server
      - MySQL-python
  file.managed:
    - name: /etc/my.cnf
    - source: salt://mysql/files/my.cnf
  service.running:
    - name: mariadb
    - enable: true
    - watch:
      - file: mysql-install

mysql-config:
  mysql_database.present:
    - name: zabbix
  mysql_user.present:
    - name: zabbix
    - host: '%'
    - password: "westos"
  mysql_grants.present:
    - grant: all privileges
    - database: zabbix.*
    - user: zabbix
    - host: '%'
  file.managed:
    - name: /mnt/create.sql
    - source: salt://mysql/files/create.sql
  cmd.run:
    - name: mysql zabbix < /mnt/create.sql && touch /mnt/zabbix.lock
    - creates: /mnt/zabbix.lock

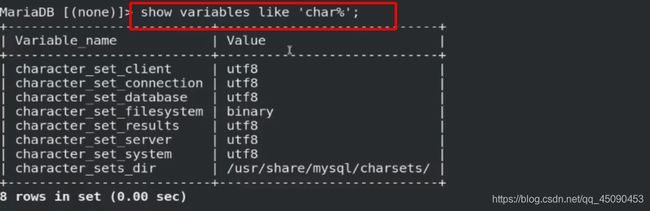

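Installing the database first generates /etc/my.cnf. Copy that file to the master and add the settings below:

[root@server1 files]# scp server3:/etc/my.cnf .

[root@server1 files]# vim my.cnf

log-bin=mysql-bin

character-set-server=utf8

Install zabbix-server first; the create.sql.gz file appears once that installation has completed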

[root@server2 zabbix-server-mysql-4.0.5]# scp /etc/zabbix/zabbix-server-mysql-4.0.5/create.sql.gz server1:/srv/salt/mysql/files/

[root@server1 files]# gunzip create.sql.gz

[root@server1 files]# ls

create.sql my.cnf

[root@server1 mysql]# salt server3 state.sls mysql

Result:

[root@server3 ~]# mysql

MariaDB [(none)]> show variables like 'char%';

MariaDB [(none)]> select * from mysql.user;

MariaDB [(none)]> use zabbix

MariaDB [zabbix]> show tables;

[root@foundation50 ~]# mysql -h 192.168.0.3 -u zabbix -p

Enter password:

[root@server3 ~]# cd /mnt

[root@server3 mnt]# ls

create.sql

[root@server1 files]# vim zabbix.conf

php_value date.timezone Asia/Shanghai

[root@server1 files]# vim zabbix_server.conf

DBHost=172.25.10.3

DBName=zabbix

DBUser=zabbix

DBPassword=westos
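Before distributing zabbix_server.conf it can be worth checking that the four database settings are really set. The sketch below parses simple `key=value` lines and is only an illustration; `parse_conf` is a hypothetical helper, and `SAMPLE` repeats the values shown above.

```python
def parse_conf(text):
    """Parse key=value lines, skipping blank lines and # comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


# Database settings from the walkthrough.
SAMPLE = """\
# database connection
DBHost=172.25.10.3
DBName=zabbix
DBUser=zabbix
DBPassword=westos
"""

if __name__ == "__main__":
    conf = parse_conf(SAMPLE)
    missing = [k for k in ("DBHost", "DBName", "DBUser", "DBPassword") if k not in conf]
    print("missing settings:", missing)
```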

[root@server1 files]# vim zabbix.conf.php

This file is written when the initialization is completed in the web page. If it is deleted, the initialization has to be done again.

[root@server1 salt]# vim top.sls

base:
  'roles:apache':
    - match: grain
    - apache
    - keepalived
    - zabbix-server
  'roles:nginx':
    - match: grain
    - nginx
    - keepalived
    - mysql

[root@server1 salt]# salt '*' state.highstate