Flume: Custom Source, Sink, and Interceptor

1. Custom Source

http://archive.cloudera.com/cdh5/cdh/5/flume-ng-1.6.0-cdh5.15.1/FlumeDeveloperGuide.html#source

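A simple custom source. Each call to process() emits 100 events whose body is prefix + i + suffix, then sleeps for 5 seconds: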

package com.wxx.bigdata.hadoop.mapreduce.flume;

import java.nio.charset.StandardCharsets;

import org.apache.flume.Context;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.PollableSource;
import org.apache.flume.conf.Configurable;
import org.apache.flume.event.SimpleEvent;
import org.apache.flume.source.AbstractSource;

/**
 * Custom Flume source: emits events of the form prefix + i + suffix.
 */
public class CustomerSource extends AbstractSource implements Configurable, PollableSource {

    private String prefix;
    private String suffix;

    /**
     * Called repeatedly by the source runner; emits one batch of 100 events.
     *
     * @return READY if the batch was delivered, BACKOFF otherwise
     * @throws EventDeliveryException
     */
    @Override
    public Status process() throws EventDeliveryException {
        Status status;
        try {
            for (int i = 1; i <= 100; i++) {
                SimpleEvent simpleEvent = new SimpleEvent();
                simpleEvent.setBody((prefix + i + suffix).getBytes(StandardCharsets.UTF_8));
                // No explicit transaction needed here: ChannelProcessor.processEvent()
                // opens and commits the channel transaction itself
                getChannelProcessor().processEvent(simpleEvent);
            }
            status = Status.READY;
        } catch (Exception e) {
            e.printStackTrace();
            status = Status.BACKOFF;
        }

        try {
            // Throttle: wait 5 seconds before the next batch
            Thread.sleep(5000);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so the runner can shut down cleanly
            Thread.currentThread().interrupt();
        }

        // Return the result status
        return status;
    }

    @Override
    public long getBackOffSleepIncrement() {
        return 0;
    }

    @Override
    public long getMaxBackOffSleepInterval() {
        return 0;
    }

    /**
     * Reads the parameters passed in from the agent configuration.
     *
     * @param context
     */
    @Override
    public void configure(Context context) {
        this.prefix = context.getString("prefix", "ruozedata");
        // Default to "" so an unset suffix does not append the string "null"
        this.suffix = context.getString("suffix", "");
    }
}
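A note on the two back-off methods. As I understand the runner that drives pollable sources (PollableSourceRunner), when process() returns BACKOFF it sleeps for min(consecutiveBackoffs × getBackOffSleepIncrement(), getMaxBackOffSleepInterval()) milliseconds, and a READY result resets the counter. If that reading is right, returning 0 from both methods, as CustomerSource does, disables the runner's back-off entirely. The stdlib-only sketch below just reproduces that arithmetic; BackoffSketch and sleeps() are hypothetical names, and the formula is my reading of the runner, not a documented contract.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical, stdlib-only model of how a pollable source runner is assumed
 * to compute its sleep after BACKOFF results (not Flume code).
 */
public class BackoffSketch {

    enum Status { READY, BACKOFF }

    /**
     * Returns the sleep (ms) applied after each process() result,
     * assuming sleep = min(consecutiveBackoffs * increment, maxInterval).
     */
    static List<Long> sleeps(List<Status> results, long increment, long maxInterval) {
        List<Long> out = new ArrayList<>();
        long consecutive = 0;
        for (Status s : results) {
            if (s == Status.BACKOFF) {
                consecutive++;
                out.add(Math.min(consecutive * increment, maxInterval));
            } else {
                consecutive = 0; // READY resets the counter
                out.add(0L);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Status> results = List.of(Status.BACKOFF, Status.BACKOFF, Status.BACKOFF,
                Status.READY, Status.BACKOFF);
        // The values CustomerSource returns: no back-off at all
        System.out.println(sleeps(results, 0, 0));       // [0, 0, 0, 0, 0]
        // Example values: 1 s increment capped at 2.5 s
        System.out.println(sleeps(results, 1000, 2500)); // [1000, 2000, 2500, 0, 1000]
    }
}
```

Non-zero values (for example read from the Context in configure()) would let a failing source slow down instead of retrying immediately.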

Package the class into a jar and upload it to /home/hadoop/app/apache-flume-1.6.0-cdh5.15.1-bin/lib.

Startup command:

flume-ng agent \
--name a1 \
--conf-file /home/hadoop/script/flume/customer/customer_source.conf \
--conf $FLUME_HOME/conf \
-Dflume.root.logger=INFO,console

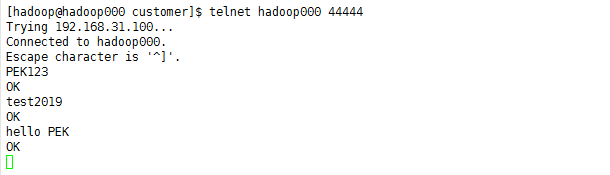

The result is shown in the figure below.

For a custom source that reads from MySQL, see: https://github.com/keedio/flume-ng-sql-source

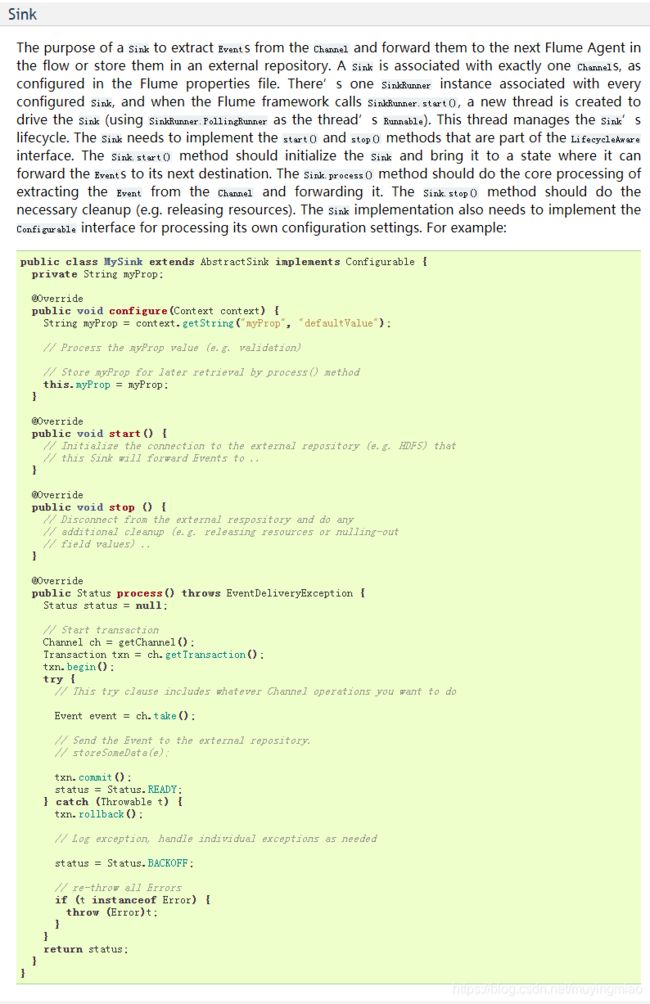

2. Custom Sink

Official documentation:

http://archive.cloudera.com/cdh5/cdh/5/flume-ng-1.6.0-cdh5.15.1/FlumeDeveloperGuide.html#sink

The code:

package com.wxx.bigdata.hadoop.mapreduce.flume;

import java.nio.charset.StandardCharsets;

import org.apache.flume.*;
import org.apache.flume.conf.Configurable;
import org.apache.flume.sink.AbstractSink;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

/**
 * Custom Flume sink: logs each event body wrapped in a configurable prefix and suffix.
 */
public class CustomerSink extends AbstractSink implements Configurable {

    private static final Logger logger = LoggerFactory.getLogger(CustomerSink.class);

    private String prefix;
    private String suffix;

    @Override
    public Status process() throws EventDeliveryException {
        Status status;
        Channel channel = getChannel();
        Transaction transaction = channel.getTransaction();
        transaction.begin();
        try {
            // take() returns null when the channel has no event available
            Event event = channel.take();
            if (event == null) {
                // Commit the empty transaction and let the runner back off,
                // instead of spinning inside an open transaction
                transaction.commit();
                status = Status.BACKOFF;
            } else {
                String body = new String(event.getBody(), StandardCharsets.UTF_8);
                logger.info(prefix + body + suffix);
                transaction.commit();
                status = Status.READY;
            }
        } catch (Exception e) {
            // Roll back so the event stays in the channel and is retried
            transaction.rollback();
            logger.error("Failed to process event", e);
            status = Status.BACKOFF;
        } finally {
            transaction.close();
        }
        return status;
    }

    @Override
    public void configure(Context context) {
        this.prefix = context.getString("prefix", "customer");
        // Default to "" so an unset suffix does not append the string "null"
        this.suffix = context.getString("suffix", "");
    }
}
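Why the "take one event, then commit or back off" shape matters: a channel transaction is meant to be short, so a sink should not loop inside an open transaction waiting for data. The stdlib-only sketch below models a channel as a deque with a one-event transaction (take removes the head, rollback puts it back) to show the two outcomes process() has to handle: an empty channel yields BACKOFF, and a failure rolls the event back so it is retried. FakeChannel and processOnce() are hypothetical stand-ins, not Flume classes.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Consumer;

/** Hypothetical stand-in for a Flume channel with a one-event transaction (not Flume code). */
public class SinkLoopSketch {

    enum Status { READY, BACKOFF }

    static class FakeChannel {
        final Deque<String> queue = new ArrayDeque<>();
        private String taken; // event taken in the current transaction, if any

        String take() {
            taken = queue.pollFirst(); // null when the channel is empty
            return taken;
        }

        void commit() { taken = null; }

        void rollback() {
            if (taken != null) {
                queue.addFirst(taken); // put the event back at the head for a retry
                taken = null;
            }
        }
    }

    /** Take one event, then commit on success or roll back on failure. */
    static Status processOnce(FakeChannel channel, Consumer<String> handler) {
        try {
            String event = channel.take();
            if (event == null) {
                channel.commit();
                return Status.BACKOFF; // empty channel: let the runner sleep
            }
            handler.accept(event);
            channel.commit();
            return Status.READY;
        } catch (RuntimeException ex) {
            channel.rollback();
            return Status.BACKOFF;
        }
    }

    public static void main(String[] args) {
        FakeChannel channel = new FakeChannel();
        channel.queue.add("hello");

        // A failing handler leaves the event in the channel
        System.out.println(processOnce(channel, e -> { throw new IllegalStateException("boom"); })); // BACKOFF
        System.out.println(channel.queue); // [hello]

        // A working handler consumes it (prints TESThello2019, then READY)
        System.out.println(processOnce(channel, e -> System.out.println("TEST" + e + "2019")));
        // The channel is now empty
        System.out.println(processOnce(channel, e -> { })); // BACKOFF
    }
}
```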

Package the class into a jar and upload it to $FLUME_HOME/lib.

The Flume configuration file:

# Define the agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Define the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = hadoop000
a1.sources.r1.port = 44444

# Define the channel
a1.channels.c1.type = memory

# Define the sink
a1.sinks.k1.type = com.wxx.bigdata.hadoop.mapreduce.flume.CustomerSink
a1.sinks.k1.prefix = TEST
a1.sinks.k1.suffix = 2019

# Wire the components together
a1.sinks.k1.channel = c1
a1.sources.r1.channels = c1

Startup command:

flume-ng agent \
--name a1 \
--conf-file /home/hadoop/script/flume/customer/customer_sink.conf \
--conf $FLUME_HOME/conf \
-Dflume.root.logger=INFO,console
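How a line such as a1.sinks.k1.prefix = TEST reaches configure(Context): Flume gives each component only its own properties, with the component's key prefix (here a1.sinks.k1.) stripped, so the sink reads them as prefix and suffix. The sketch below imitates that scoping with java.util.Properties and a hypothetical subContext() helper; it is a simplification for illustration, not Flume's configuration code. With the suffix line left out, it also shows why reading suffix without a default ends up concatenating the string "null".

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;

/** Hypothetical illustration of per-component property scoping (not Flume code). */
public class ContextSketch {

    /** Collects keys that start with componentPrefix and strips that prefix. */
    static Map<String, String> subContext(Properties props, String componentPrefix) {
        Map<String, String> ctx = new HashMap<>();
        for (String key : props.stringPropertyNames()) {
            if (key.startsWith(componentPrefix)) {
                ctx.put(key.substring(componentPrefix.length()), props.getProperty(key));
            }
        }
        return ctx;
    }

    /** Same contract as Context.getString(key, default): the default is used when the key is absent. */
    static String getString(Map<String, String> ctx, String key, String defaultValue) {
        return ctx.getOrDefault(key, defaultValue);
    }

    public static void main(String[] args) throws IOException {
        String conf = String.join("\n",
                "a1.sinks.k1.type = com.wxx.bigdata.hadoop.mapreduce.flume.CustomerSink",
                "a1.sinks.k1.prefix = TEST",
                "a1.sinks.k1.channel = c1");
        Properties props = new Properties();
        props.load(new StringReader(conf));

        Map<String, String> ctx = subContext(props, "a1.sinks.k1.");
        String prefix = getString(ctx, "prefix", "customer");
        String noDefault = getString(ctx, "suffix", null);
        String withDefault = getString(ctx, "suffix", "");

        System.out.println(prefix + "hello" + noDefault);   // TESThellonull
        System.out.println(prefix + "hello" + withDefault); // TESThello
    }
}
```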

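3. Custom Interceptor

Events entering agent a1 pass through the interceptor, which tags each one with a type header: if the body contains "PEK", the event is sent to agent a2; otherwise it is sent to agent a3.

The code: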

package com.wxx.bigdata.hadoop.mapreduce.flume;

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.interceptor.Interceptor;

/**
 * Tags each event with a "type" header ("PEK" or "OTHER") based on its body,
 * so that a multiplexing channel selector can route on it.
 */
public class CustomerInterceptor implements Interceptor {

    private List<Event> events;

    @Override
    public void initialize() {
        events = new ArrayList<>();
    }

    @Override
    public Event intercept(Event event) {
        Map<String, String> headers = event.getHeaders();
        String body = new String(event.getBody(), StandardCharsets.UTF_8);
        if (body.contains("PEK")) {
            headers.put("type", "PEK");
        } else {
            headers.put("type", "OTHER");
        }
        return event;
    }

    @Override
    public List<Event> intercept(List<Event> list) {
        // Reuse one list across batches instead of allocating a new one each time
        events.clear();
        for (Event event : list) {
            events.add(intercept(event));
        }
        return events;
    }

    @Override
    public void close() {
    }

    /** Flume creates the interceptor through this builder (configured as CustomerInterceptor$Builder). */
    public static class Builder implements Interceptor.Builder {

        @Override
        public Interceptor build() {
            return new CustomerInterceptor();
        }

        @Override
        public void configure(Context context) {
        }
    }
}
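The tagging rule itself can be exercised without a Flume installation. The sketch below replicates CustomerInterceptor's logic against a hypothetical MiniEvent class standing in for org.apache.flume.Event (a header map plus a byte[] body); it is for checking the rule, not a replacement for the real interceptor. Note that String.contains() is case-sensitive, so a body containing "pek" is tagged OTHER.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Stdlib-only replica of CustomerInterceptor's tagging rule (MiniEvent is hypothetical). */
public class InterceptorSketch {

    static class MiniEvent {
        final Map<String, String> headers = new HashMap<>();
        final byte[] body;

        MiniEvent(String body) {
            this.body = body.getBytes(StandardCharsets.UTF_8);
        }
    }

    /** Same rule as CustomerInterceptor.intercept(Event): set header "type" to PEK or OTHER. */
    static MiniEvent intercept(MiniEvent event) {
        String body = new String(event.body, StandardCharsets.UTF_8);
        event.headers.put("type", body.contains("PEK") ? "PEK" : "OTHER");
        return event;
    }

    /** Batch version: tags every event, preserving order. */
    static List<MiniEvent> intercept(List<MiniEvent> batch) {
        List<MiniEvent> out = new ArrayList<>(batch.size());
        for (MiniEvent e : batch) {
            out.add(intercept(e));
        }
        return out;
    }

    public static void main(String[] args) {
        List<MiniEvent> batch = List.of(
                new MiniEvent("flight to PEK"),
                new MiniEvent("flight to SHA"),
                new MiniEvent("flight to pek"));
        for (MiniEvent e : intercept(batch)) {
            System.out.println(new String(e.body, StandardCharsets.UTF_8) + " -> " + e.headers.get("type"));
        }
        // flight to PEK -> PEK
        // flight to SHA -> OTHER
        // flight to pek -> OTHER
    }
}
```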

Configuration files for the three agents.

customer_interceptor01.conf (agent a1):

# Define the agent
a1.sources = r1
a1.sinks = k1 k2
a1.channels = c1 c2

# Define the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = hadoop000
a1.sources.r1.port = 44444

# Interceptors
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = com.wxx.bigdata.hadoop.mapreduce.flume.CustomerInterceptor$Builder

# Define the selector: route on the "type" header set by the interceptor
a1.sources.r1.selector.type = multiplexing
a1.sources.r1.selector.header = type
a1.sources.r1.selector.mapping.PEK = c1
a1.sources.r1.selector.mapping.OTHER = c2

# Define the channels
a1.channels.c1.type = memory
a1.channels.c2.type = memory

# Define the sinks: k1 forwards to port 44445 (a2), k2 to port 44446 (a3)
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = hadoop000
a1.sinks.k1.port = 44445
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = hadoop000
a1.sinks.k2.port = 44446

# Wire the components together
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2

customer_interceptor02.conf (agent a2):

# Define the agent
a2.sources = r1
a2.sinks = k1
a2.channels = c1

# Define the source
a2.sources.r1.type = avro
a2.sources.r1.bind = hadoop000
a2.sources.r1.port = 44445

# Define the channel
a2.channels.c1.type = memory

# Define the sink
a2.sinks.k1.type = logger

# Wire the components together
a2.sinks.k1.channel = c1
a2.sources.r1.channels = c1

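customer_interceptor03.conf (agent a3; the keys must use the a3 prefix to match --name a3 in its startup command):

# Define the agent
a3.sources = r1
a3.sinks = k1
a3.channels = c1

# Define the source
a3.sources.r1.type = avro
a3.sources.r1.bind = hadoop000
a3.sources.r1.port = 44446

# Define the channel
a3.channels.c1.type = memory

# Define the sink
a3.sinks.k1.type = logger

# Wire the components together
a3.sinks.k1.channel = c1
a3.sources.r1.channels = c1

Startup commands. Start the downstream agents (a3, then a2) before a1, so that a1's avro sinks find their targets already listening: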

flume-ng agent \
--name a3 \
--conf-file /home/hadoop/script/flume/customer/customer_interceptor03.conf \
--conf $FLUME_HOME/conf \
-Dflume.root.logger=INFO,console

flume-ng agent \
--name a2 \
--conf-file /home/hadoop/script/flume/customer/customer_interceptor02.conf \
--conf $FLUME_HOME/conf \
-Dflume.root.logger=INFO,console

flume-ng agent \
--name a1 \
--conf-file /home/hadoop/script/flume/customer/customer_interceptor01.conf \
--conf $FLUME_HOME/conf \
-Dflume.root.logger=INFO,console
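To trace where a line sent to a1's netcat source ends up, the sketch below encodes the wiring of the three configurations as plain maps: type header to channel (the selector.mapping.* lines), channel to avro sink port, and port to the downstream agent. It is a hand-written model of this particular setup, not Flume's multiplexing selector; RoutingSketch and downstreamAgent() are hypothetical names. Because the interceptor always sets type to PEK or OTHER, every event matches one of the two mappings.

```java
import java.util.Map;

/** Hand-written model of the a1 -> a2/a3 routing described above (not Flume code). */
public class RoutingSketch {

    // a1.sources.r1.selector.mapping.PEK = c1, a1.sources.r1.selector.mapping.OTHER = c2
    static final Map<String, String> SELECTOR_MAPPING = Map.of("PEK", "c1", "OTHER", "c2");
    // k1 drains c1 to hadoop000:44445, k2 drains c2 to hadoop000:44446
    static final Map<String, Integer> CHANNEL_TO_AVRO_PORT = Map.of("c1", 44445, "c2", 44446);
    // a2's avro source listens on 44445; the agent launched as a3 listens on 44446
    static final Map<Integer, String> PORT_TO_AGENT = Map.of(44445, "a2", 44446, "a3");

    /** Applies the interceptor rule, then follows the selector and sink wiring. */
    static String downstreamAgent(String body) {
        String type = body.contains("PEK") ? "PEK" : "OTHER";
        String channel = SELECTOR_MAPPING.get(type);
        int port = CHANNEL_TO_AVRO_PORT.get(channel);
        return PORT_TO_AGENT.get(port);
    }

    public static void main(String[] args) {
        System.out.println(downstreamAgent("flight to PEK")); // a2
        System.out.println(downstreamAgent("flight to SHA")); // a3
    }
}
```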