sklearn Machine Learning: Decision Tree Classification (Titanic Survivors Dataset)

1. Import the required packages

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split, GridSearchCV, cross_val_score
```

2. Prepare the dataset (leave a comment if you need the data file)

```python
# Adjust the path to wherever data.csv is stored on your machine
data = pd.read_csv(r"D:\download\sklearnjqxx_jb51\【机器学习】菜菜的sklearn课堂(1-12全课)\01 决策树课件数据源码\data.csv", index_col=0)
data.head()
```

Next, check each column's data type and number of missing values:

```python
data.info()
```

The output is as follows:

```text
Int64Index: 891 entries, 1 to 891
Data columns (total 11 columns):
 #   Column    Non-Null Count  Dtype
---  ------    --------------  -----
 0   Survived  891 non-null    int64
 1   Pclass    891 non-null    int64
 2   Name      891 non-null    object
 3   Sex       891 non-null    object
 4   Age       714 non-null    float64
 5   SibSp     891 non-null    int64
 6   Parch     891 non-null    int64
 7   Ticket    891 non-null    object
 8   Fare      891 non-null    float64
 9   Cabin     204 non-null    object
 10  Embarked  889 non-null    object
dtypes: float64(2), int64(4), object(5)
memory usage: 83.5+ KB
```
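The preprocessing decisions in the next step depend on how much of each column is missing. As a small sketch, the fractions can be computed directly from the non-null counts in the `info()` output above. On the live DataFrame, `data.isnull().mean()` gives the same numbers.

```python
# Non-null counts copied from the data.info() output above (891 rows in total)
n_rows = 891
non_null = {"Age": 714, "Cabin": 204, "Embarked": 889}

# Fraction of missing values per column = 1 - non_null / n_rows
missing_frac = {col: 1 - cnt / n_rows for col, cnt in non_null.items()}

for col, frac in missing_frac.items():
    print(f"{col:<9} missing: {n_rows - non_null[col]:>3} rows ({frac:.1%})")
```

This gives about 19.9% missing for Age, 77.1% for Cabin, and 0.2% (two rows) for Embarked. These three levels correspond to the three treatments below: impute, drop the column, and drop the rows.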

3. Data preprocessing

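```python
# Drop Cabin (only 204 of 891 values present) and Name/Ticket,
# which inspection suggests are unrelated to the target y
data.drop(['Name', 'Ticket', 'Cabin'], axis=1, inplace=True)
data.head()

# Handle missing values: impute Age, which has many gaps, with the column mean;
# Embarked is missing only two values, so those rows are simply dropped
data['Age'] = data['Age'].fillna(data['Age'].mean())
data = data.dropna()
```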
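The order of the two calls matters. If `dropna()` ran first, it would also discard every row with a missing Age (177 rows), not just the two rows missing Embarked. The following minimal sketch uses a hypothetical toy frame with the same kinds of gaps:

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame: Age has two gaps, Embarked has one
toy = pd.DataFrame({
    "Age": [22.0, np.nan, 26.0, 35.0, np.nan],
    "Embarked": ["S", "C", "S", None, "Q"],
})

# Impute Age with the mean of its non-missing values: (22 + 26 + 35) / 3
toy["Age"] = toy["Age"].fillna(toy["Age"].mean())
# Only the row still containing a missing value (Embarked) is dropped now
toy = toy.dropna()

print(toy)
```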

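```python
# Convert the binary categorical variable Sex to numbers
data['Sex'] = data['Sex'].map({'male': 1, 'female': 0})
# The following statement works as well
# data['Sex'] = (data['Sex'] == 'male').astype('int')

# Convert the three-category variable Embarked to integer codes
# (map or apply would work here too)
labels = data["Embarked"].unique().tolist()
data["Embarked"] = data["Embarked"].apply(lambda x: labels.index(x))
data.head()
```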
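The `unique().tolist()` plus `labels.index` approach numbers categories in order of first appearance. pandas' built-in `pd.factorize` follows the same rule, so the two should agree. Here is a minimal check on a hypothetical sample of Embarked values:

```python
import pandas as pd

# Hypothetical sample of Embarked values (ports S, C, Q)
embarked = pd.Series(["S", "C", "S", "Q", "C", "S"])

# Approach used above: index of each value in the list of unique values
labels = embarked.unique().tolist()          # order of first appearance
codes_apply = embarked.apply(lambda x: labels.index(x))

# pandas built-in: factorize also numbers values by order of first appearance
codes_factorize, uniques = pd.factorize(embarked)

print(codes_apply.tolist())       # [0, 1, 0, 2, 1, 0]
print(codes_factorize.tolist())   # [0, 1, 0, 2, 1, 0]
```

Either way, the codes are arbitrary labels, yet a decision tree treats them as ordered numbers when it chooses a split threshold.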

4. Extract the feature matrix and labels, and split them into training and test sets

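```python
X = data.iloc[:, data.columns != "Survived"]   # feature matrix: every column except Survived
y = data.iloc[:, data.columns == "Survived"]   # labels: the Survived column

# Split with the built-in helper (70% training / 30% test). Without random_state,
# the split, and therefore every score below, changes from run to run
Xtrain, Xtest, Ytrain, Ytest = train_test_split(X, y, test_size=0.3)
```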
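After preprocessing, 889 rows should remain: 891 minus the two rows dropped for a missing Embarked value. The cleaned data has 7 feature columns. The sketch below uses random stand-in arrays of that shape to show how `test_size=0.3` is turned into row counts. It also adds the optional `random_state` (for reproducibility) and `stratify` (to keep the survival ratio equal in both parts) arguments, which are not in the original call.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data with the cleaned dataset's shape: 889 rows, 7 features
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(889, 7))
y_demo = rng.integers(0, 2, size=889)

# Same call as in the article, plus random_state and stratify (both optional)
Xtr, Xte, ytr, yte = train_test_split(X_demo, y_demo, test_size=0.3,
                                      random_state=25, stratify=y_demo)

# The test part gets ceil(0.3 * 889) = 267 rows; the training part gets the remaining 622
print(len(Xtr), len(Xte))   # 622 267
```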

5. Fit the model and take a first rough look at the results

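```python
# Accuracy of clf on the held-out test set
clf = DecisionTreeClassifier(random_state=25)
clf.fit(Xtrain, Ytrain)
score = clf.score(Xtest, Ytest)
print(score)

# Mean accuracy over 10-fold cross-validation on the full dataset
score = cross_val_score(clf, X, y, cv=10).mean()
print(score)
```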

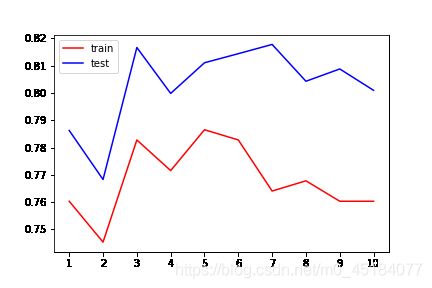
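For a classifier, `cv=10` makes `cross_val_score` use an unshuffled `StratifiedKFold(n_splits=10)`. Each fold is held out once while a fresh copy of the model trains on the other nine. The minimal sketch below writes that loop out by hand on synthetic data and checks it against `cross_val_score`. The data is a hypothetical stand-in for the Titanic features.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the Titanic features (hypothetical data)
X_demo, y_demo = make_classification(n_samples=300, n_features=7, random_state=0)
clf = DecisionTreeClassifier(random_state=25)

# Manual 10-fold stratified CV: train a fresh clone on 9 folds, score on the 10th
manual = []
for train_idx, test_idx in StratifiedKFold(n_splits=10).split(X_demo, y_demo):
    model = clone(clf).fit(X_demo[train_idx], y_demo[train_idx])
    manual.append(model.score(X_demo[test_idx], y_demo[test_idx]))

auto = cross_val_score(clf, X_demo, y_demo, cv=10)
print(np.mean(manual), auto.mean())  # the two means agree
```

Because the CV score averages ten different held-out folds, it depends less on one particular split than the single `clf.score(Xtest, Ytest)` value does.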

6. Observe how well the model fits at different values of max_depth

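```python
tr = []   # accuracy on the training set
te = []   # mean 10-fold cross-validation accuracy
for i in range(10):
    clf = DecisionTreeClassifier(random_state=25,
                                 max_depth=i + 1,
                                 criterion='entropy')
    clf.fit(Xtrain, Ytrain)
    # Record both results: training accuracy and cross-validated accuracy
    score_tr = clf.score(Xtrain, Ytrain)
    score_te = cross_val_score(clf, X, y, cv=10).mean()
    tr.append(score_tr)
    te.append(score_te)
```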
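scikit-learn's `validation_curve` automates this train-versus-validation comparison across parameter values. It differs from the loop above in one respect: its training score is averaged over the training part of each CV fold, rather than measured once on `Xtrain`. The sketch below uses synthetic stand-in data; replace `X_demo, y_demo` with `X, y` to apply it to the article's data.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import validation_curve
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (hypothetical)
X_demo, y_demo = make_classification(n_samples=300, n_features=7,
                                     flip_y=0.05, random_state=0)

depths = list(range(1, 11))
# Refits the tree for every max_depth and every CV fold; both score arrays
# have shape (len(depths), n_folds)
train_scores, cv_scores = validation_curve(
    DecisionTreeClassifier(random_state=25, criterion="entropy"),
    X_demo, y_demo,
    param_name="max_depth", param_range=depths, cv=10,
)

train_mean = train_scores.mean(axis=1)
cv_mean = cv_scores.mean(axis=1)
for d, tr_s, cv_s in zip(depths, train_mean, cv_mean):
    print(f"max_depth={d:2d}  train={tr_s:.3f}  cv={cv_s:.3f}")
```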

7. Visualize the results

```python
plt.figure()
plt.plot(range(1, 11), tr, color='red', label='train')
plt.plot(range(1, 11), te, color='blue', label='test')  # 10-fold cross-validation score
plt.xticks(range(1, 11))
plt.xlabel('max_depth')
plt.ylabel('accuracy')
plt.legend()
plt.show()
```

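The resulting plot is shown below.

The plot suggests that the model is somewhat underfitting. The next step is therefore to tune the parameters further with a grid search.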

8. Tune the parameters with a grid search

```python
# 20 evenly spaced candidate values for min_impurity_decrease between 0 and 0.5
gini_thresholds = np.linspace(0, 0.5, 20)

parameters = {
    'splitter': ('best', 'random'),
    'criterion': ("gini", "entropy"),
    'max_depth': [*range(1, 10)],             # 1 to 9
    'min_samples_leaf': [*range(1, 50, 5)],   # 1, 6, 11, ..., 46
    'min_impurity_decrease': [*gini_thresholds],
}

clf = DecisionTreeClassifier(random_state=25)
GS = GridSearchCV(clf, parameters, cv=10)
GS.fit(Xtrain, Ytrain)

print(GS.best_params_)
print(GS.best_score_)
```
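This grid is large, so it helps to know how much work it implies before running it. `ParameterGrid`, which `GridSearchCV` uses to enumerate candidates, can count the combinations:

```python
import numpy as np
from sklearn.model_selection import ParameterGrid

# Same grid as above
parameters = {
    'splitter': ('best', 'random'),
    'criterion': ("gini", "entropy"),
    'max_depth': [*range(1, 10)],
    'min_samples_leaf': [*range(1, 50, 5)],
    'min_impurity_decrease': [*np.linspace(0, 0.5, 20)],
}

n_candidates = len(ParameterGrid(parameters))   # 2 * 2 * 9 * 10 * 20
n_fits = n_candidates * 10                      # one fit per candidate per CV fold
print(n_candidates, n_fits)                     # 7200 72000
```

With refitting, the best candidate is trained once more on the full training set. Passing `n_jobs=-1` to `GridSearchCV` spreads these fits across all CPU cores.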

The best parameters found are:

```text
{'criterion': 'gini',
 'max_depth': 9,
 'min_impurity_decrease': 0.0,
 'min_samples_leaf': 6,
 'splitter': 'best'}
```

The best score (mean 10-fold cross-validation accuracy on the training set) is 0.8458013312852021.
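`best_score_` is measured by cross-validation on `Xtrain` only, so the test split from step 4 is still unused. A natural final check is to score the refitted best model on that split, which in the article's variables would be `GS.score(Xtest, Ytest)`. Here is a minimal sketch on synthetic data with a deliberately small, hypothetical grid:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data and a small grid (both hypothetical)
X_demo, y_demo = make_classification(n_samples=400, n_features=7, random_state=0)
Xtr, Xte, ytr, yte = train_test_split(X_demo, y_demo, test_size=0.3, random_state=25)

gs = GridSearchCV(DecisionTreeClassifier(random_state=25),
                  {"max_depth": [2, 4, 6], "min_samples_leaf": [1, 6, 11]},
                  cv=10)
gs.fit(Xtr, ytr)

# With refit=True (the default), best_estimator_ is retrained on all of Xtr;
# gs.score then evaluates that model on the untouched test split
print(gs.best_params_, gs.best_score_)
print(gs.score(Xte, yte))
```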