Installing and Using LibTorch on Windows 10 (CUDA 10.1)

https://www.bilibili.com/video/BV14v411b733#reply3775162098


Link: libtorch_cuda10.1_win10

Extraction code: 1234

Link: cuda10.1 + cudnn10.1

Extraction code: 1234

Installing and using LibTorch

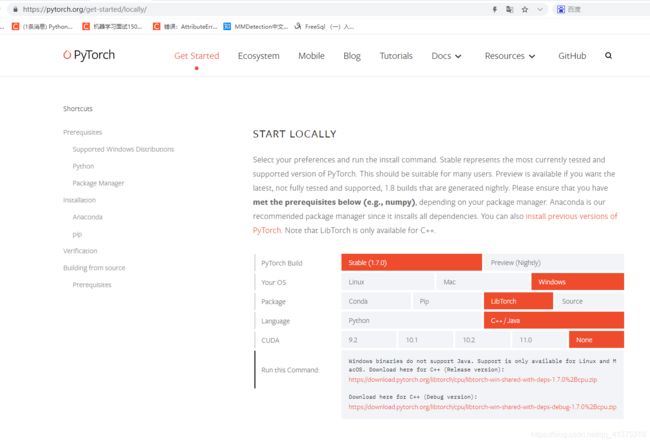

https://pytorch.org/get-started/locally/

LibTorch is on the same page as the regular PyTorch installer: just select LibTorch under Package.

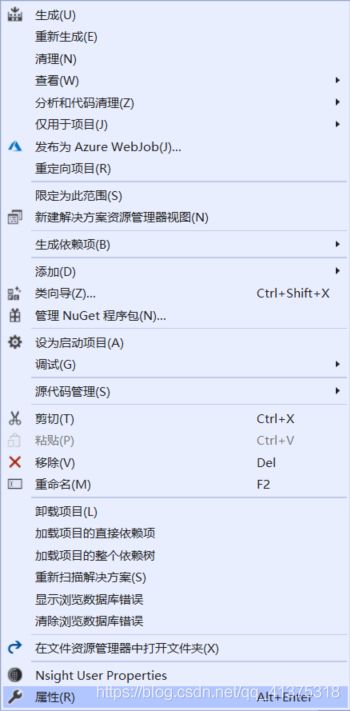

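First: in VS2019, remember to select x64 when building. What a trap. It cost me the whole evening, and I didn't get it working until 11:09 at night.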
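One way to catch the x64 mistake early is a compile-time guard in your own source. This is a minimal sketch (the helper name `pointer_bits` is hypothetical, not part of LibTorch): if the project is accidentally set to Win32/x86, the `static_assert` stops the build with a readable message instead of a pile of linker errors against the 64-bit LibTorch libraries.

```cpp
#include <cstddef>

// Compile-time guard: a 32-bit (Win32/x86) configuration has 4-byte
// pointers, so this assertion fails and the build stops right here.
static_assert(sizeof(void*) == 8,
              "Build with the x64 platform: the LibTorch libraries are 64-bit");

// Hypothetical helper: pointer width of the current build, in bits.
constexpr std::size_t pointer_bits() {
    return sizeof(void*) * 8;
}
```

Put it in any .cpp file of the project; in an x64 build the assertion passes silently and `pointer_bits()` returns 64.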

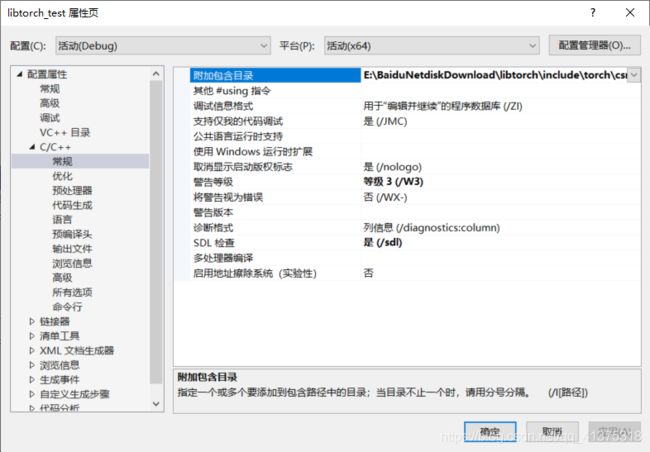

Include directories:

E:\BaiduNetdiskDownload\libtorch\include

E:\BaiduNetdiskDownload\libtorch\include\torch\csrc\api\include

Library directory:

E:\BaiduNetdiskDownload\libtorch\lib
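If the build later complains that `torch/script.h` or `torch.lib` cannot be found, it is worth confirming that these three folders really exist under the LibTorch root you unpacked. A small sketch using C++17 `std::filesystem`; the helper name `missing_libtorch_dirs` is hypothetical, and the root is whatever folder you extracted LibTorch to:

```cpp
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper: returns the expected LibTorch subfolders that do
// NOT exist under `root` (an empty result means the layout looks fine).
std::vector<std::string> missing_libtorch_dirs(const fs::path& root) {
    const std::vector<fs::path> expected = {
        root / "include",
        root / "include" / "torch" / "csrc" / "api" / "include",
        root / "lib",
    };
    std::vector<std::string> missing;
    for (const auto& dir : expected) {
        // is_directory() returns false (no exception) for a missing path.
        if (!fs::is_directory(dir)) {
            missing.push_back(dir.string());
        }
    }
    return missing;
}
```

For example, `missing_libtorch_dirs("E:/BaiduNetdiskDownload/libtorch")` should return an empty vector on the machine used in this post.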

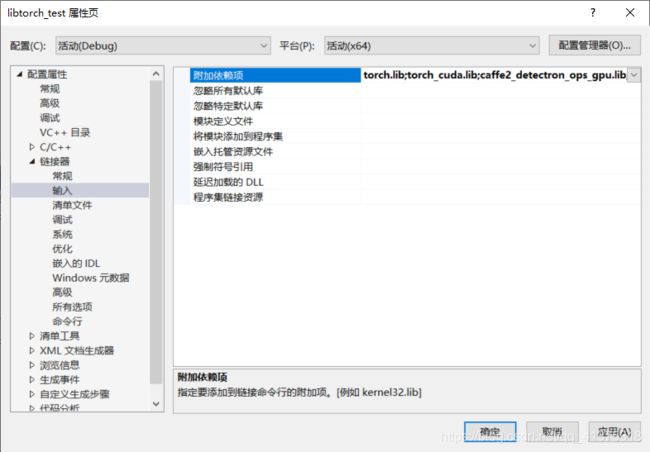

Configuring libtorch GPU in VS2019: the .lib dependencies to add

GPU version:

torch.lib

torch_cuda.lib

caffe2_detectron_ops_gpu.lib

caffe2_module_test_dynamic.lib

torch_cpu.lib

c10_cuda.lib

caffe2_nvrtc.lib

mkldnn.lib

c10.lib

dnnl.lib

libprotoc.lib

libprotobuf.lib

libprotobuf-lite.lib

fbgemm.lib

asmjit.lib

cpuinfo.lib

clog.lib

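CPU version (the protobuf libraries below carry a `d` suffix, which suggests this list was taken from the Debug build of LibTorch):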

asmjit.lib

c10.lib

c10d.lib

caffe2_detectron_ops.lib

caffe2_module_test_dynamic.lib

clog.lib

cpuinfo.lib

dnnl.lib

fbgemm.lib

gloo.lib

libprotobufd.lib

libprotobuf-lited.lib

libprotocd.lib

mkldnn.lib

torch.lib

torch_cpu.lib
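Instead of typing every name into the project's linker input, MSVC also accepts `#pragma comment(lib, ...)` directives in source code. A sketch covering only the core libraries from the GPU list (extend it with the rest as needed); the linker still searches the library directory configured above, and the `_MSC_VER` guard makes other compilers skip the block:

```cpp
// MSVC-only: ask the linker to pull in these import libraries.
// They are looked up in the project's library directories
// (E:/BaiduNetdiskDownload/libtorch/lib in this post).
#if defined(_MSC_VER)
#pragma comment(lib, "c10.lib")
#pragma comment(lib, "torch_cpu.lib")
#pragma comment(lib, "torch.lib")
// GPU version only:
#pragma comment(lib, "c10_cuda.lib")
#pragma comment(lib, "torch_cuda.lib")
#endif
```

This only replaces the list of dependencies; the x64 platform and the include/library directories still have to be set as described above.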

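Then add the lib folder to PATH so the LibTorch DLLs can be found at run time. In VS2019 this can be entered under the project's Debugging → Environment property:

PATH=E:\BaiduNetdiskDownload\libtorch\lib;%PATH%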
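If the program builds but fails on start-up because a DLL such as `c10.dll` or `torch_cpu.dll` cannot be found, the usual cause is that this PATH entry did not take effect. A rough check you could drop into the program; both helper names are hypothetical, and the comparison is exact while Windows paths are case-insensitive, so treat a `false` as a hint rather than proof:

```cpp
#include <cstdlib>
#include <sstream>
#include <string>

// Hypothetical helper: true if `dir` is one of the ';'-separated entries
// of a Windows-style PATH value. Exact string match only.
bool path_has_entry(const std::string& path_value, const std::string& dir) {
    std::istringstream entries(path_value);
    std::string entry;
    while (std::getline(entries, entry, ';')) {
        if (entry == dir) {
            return true;
        }
    }
    return false;
}

// Hypothetical helper: PATH of the running process ("" if unset).
std::string current_path_env() {
    const char* value = std::getenv("PATH");
    return value ? std::string(value) : std::string();
}
```

At the start of `main`, something like `path_has_entry(current_path_env(), R"(E:\BaiduNetdiskDownload\libtorch\lib)")` tells you whether the debugger environment was applied.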

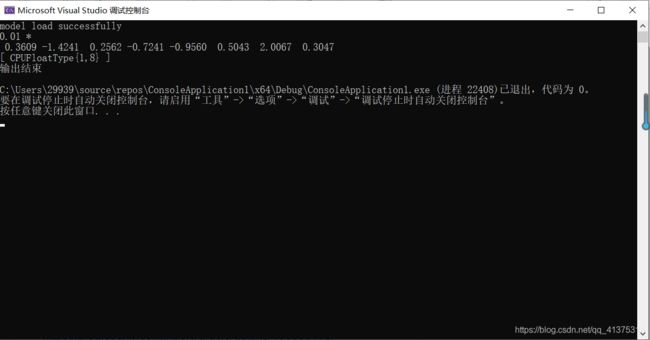

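```cpp
#include <torch/script.h> // one-stop header: torch::jit::load, Module, IValue
#include <iostream>
#include <vector>

int main() {
    using torch::jit::script::Module;

    Module module;
    try {
        // Load a TorchScript model (traced or scripted and saved from Python).
        // module = torch::jit::load("E:/HM_DL/torch_test/traced_resnet_model.pt");
        module = torch::jit::load("C:/Users/major/Desktop/AlexNet_model.pt");
    } catch (const c10::Error& e) {
        std::cerr << "error loading the model\n" << e.what() << '\n';
        return -1;
    }
    std::cout << "model loaded successfully\n";

    // Create a vector of inputs: one 3x224x224 image filled with ones.
    std::vector<torch::jit::IValue> inputs;
    inputs.push_back(torch::ones({1, 3, 224, 224}));

    // Execute the model and turn its output into a tensor.
    at::Tensor output = module.forward(inputs).toTensor();
    std::cout << output << '\n';

    std::cout << "Output finished\n";
    return 0;
}
```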
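The input `torch::ones({1, 3, 224, 224})` is a batch of one image in NCHW order (batch, channels, height, width), the layout AlexNet/ResNet-style models usually expect. Its element count is the product of the dimensions: 1 × 3 × 224 × 224 = 150528 floats. A plain C++ sketch of that arithmetic; the helper `numel` mirrors the idea of `at::Tensor::numel()` but is written here without LibTorch:

```cpp
#include <cstdint>
#include <functional>
#include <numeric>
#include <vector>

// Number of elements in a tensor of the given shape: the product of all
// dimensions (an empty shape, i.e. a scalar, has one element).
std::int64_t numel(const std::vector<std::int64_t>& shape) {
    return std::accumulate(shape.begin(), shape.end(), std::int64_t{1},
                           std::multiplies<std::int64_t>());
}
```

`numel({1, 3, 224, 224})` returns 150528, the same value `torch::ones({1, 3, 224, 224}).numel()` reports.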

References (these two posts cover pretty much everything):

https://blog.csdn.net/yanfeng1022/article/details/106481312

https://blog.csdn.net/nanbei1/article/details/106390178/