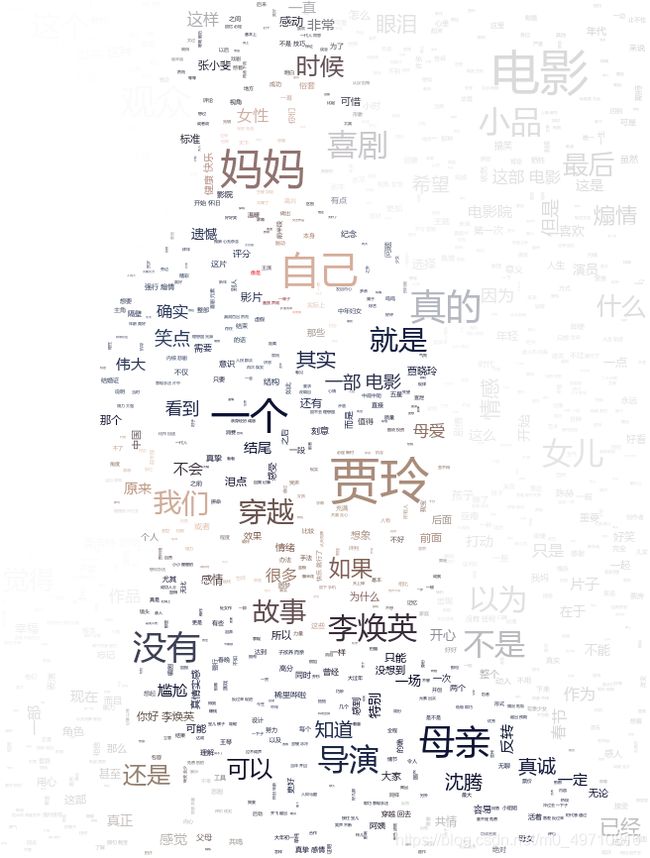

爬取《你好,李焕英》影评,并生成词云图

自学了python爬虫,最近在实践,就拿《你好,李焕英》的豆瓣影评来试试手吧!

思路:

首先是爬取豆瓣影评的短评,保存下来

豆瓣影评每页显示20条评论,我爬取了前面50页的评论,先浏览找到翻页规律,批量生成网页url链接,然后解析每个页面,用的BeautifulSoup,提取评论文字,保存为txt文件

然后将评论生成词云

代码里注释的很清晰了,就不赘述了

上代码

爬取评论的代码

#引用time库、random库、requests库、BeautifulSoup4

import time

import random

import requests

from bs4 import BeautifulSoup

def get_info(url):

dict = {

}

UA = [

"Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36",

"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:30.0) Gecko/20100101 Firefox/30.0",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.75.14 (KHTML, like Gecko) Version/7.0.3 Safari/537.75.14",

"Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Win64; x64; Trident/6.0)",

'Mozilla/5.0 (Windows; U; Windows NT 5.1; it; rv:1.8.1.11) Gecko/20071127 Firefox/2.0.0.11',

'Opera/9.25 (Windows NT 5.1; U; en)',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)',

'Mozilla/5.0 (compatible; Konqueror/3.5; Linux) KHTML/3.5.5 (like Gecko) (Kubuntu)',

'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.0.12) Gecko/20070731 Ubuntu/dapper-security Firefox/1.5.0.12',

'Lynx/2.8.5rel.1 libwww-FM/2.14 SSL-MM/1.4.1 GNUTLS/1.2.9',

"Mozilla/5.0 (X11; Linux i686) AppleWebKit/535.7 (KHTML, like Gecko) Ubuntu/11.04 Chromium/16.0.912.77 Chrome/16.0.912.77 Safari/535.7",

"Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:10.0) Gecko/20100101 Firefox/10.0 "

]

user_agent = random.choice(UA)

#请求页面

r = requests.get(url,headers={

'User-Agent':user_agent})

#创建BeautifulSoup对象

soup = BeautifulSoup(r.text,'lxml')

#找到所有的class属性为comment-item的标签

comment_items = soup.select('.comment-item')

#遍历所有符合要求的标签

for comment_item in comment_items:

#找到包含短评内容的标签

shorts = comment_item.select('.short')

#找到包含时间的标签

times = comment_item.select('.comment-time')

#遍历找到的标签

for short,time in zip(shorts,times):

#提取文字

short_text = short.get_text()

#提取时间

time_num = time['title']

#把文字保存下来#使用前先创建一个txt文件

with open('你好李焕英.txt','a+',encoding='utf-8') as f:

f.write(short_text + '\n')

print(short_text,time_num)

dict = {

'short':short_text,

'time':time_num

}

return dict

if __name__ == '__main__':

#生成大量的url链接

urls = ['https://movie.douban.com/subject/34841067/comments?start={}&limit=20&status=P&sort=new_score'.format(str(i)) for i in range(0,1000,20)]

for url in urls:

get_info(url)

time.sleep(1)

生成词云的代码

import jieba

from wordcloud import wordcloud,WordCloud,ImageColorGenerator

from matplotlib import colors

from imageio import imread

#打开模板图片,赋值给mask

mask = imread("火.png")

#打开文本文件,只读模式,utf8编码

f = open("你好李焕英.txt","r",encoding="utf-8")

t = f.read()

#提取模板颜色

image_colors = ImageColorGenerator(mask)

#关闭文件

f.close()

#文本分词,赋值给列表

ls = jieba.lcut(t)

#以空格分隔列表里的每个元素

txt = " ".join(ls)

#定义词云字体,形状,界面高、宽,背景色,最大文字数量,最大字号,字号递增为1,最大字号为6

w = wordcloud.WordCloud(font_path = "msyh.ttc",mask=mask,\

width = 1000,height = 700,background_color = "white",\

max_words = 1000,min_font_size=3,font_step=3,\

max_font_size=50,)

w.generate(txt)

#修改词云字体颜色为提取的模板颜色

w_color = w.recolor(color_func=image_colors)

#导出为png文件

w.to_file("你好,李焕英词云.png")