Hive Study Notes (11): Hive in Practice with Guli Video

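Contents

- 10.1 Requirements

- 10.2 Project

- 10.2.1 Data structure

- 10.2.2 ETL of the raw data

- 10.3 Preparation

- 10.3.1 Create the tables

- 10.3.2 Load the cleaned data

- 10.3.3 Insert data into the ORC tables

- 10.4 Business analysis

- 10.4.1 Top 10 videos by view count

- 10.4.2 Top 10 video categories by popularity

- 10.4.3 Categories of the 20 most-viewed videos, and how many Top 20 videos each category contains

- 10.4.4 Category rank of the videos related to the Top 50 most-viewed videos

- 10.4.5 Top 10 videos by popularity within each category, using Music as the example

- 10.4.6 Top 10 videos by traffic within each category, using Music as the example

- 10.4.7 Top 10 users by number of uploads, and the 20 most-viewed videos they uploaded

- 10.4.8 Top 10 videos by view count in each category

- Common errors and fixes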

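10.1 Requirements

Compute the usual metrics for the Guli Video site, as a set of TopN statistics:

- Top 10 videos by view count

- Top 10 video categories by popularity

- Categories of the Top 20 most-viewed videos, and how many Top 20 videos each category contains

- Category rank of the videos related to the Top 50 most-viewed videos

- Top 10 videos by popularity within each category

- Top 10 videos by traffic within each category

- Top 10 users by number of uploads, and the 20 most-viewed videos they uploaded

- Top 10 videos by view count in each category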

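10.2 Project

10.2.1 Data structure

1. Video table

| Field | Note | Description |
|---|---|---|
| video id | Unique video id | 11-character string |
| uploader | Video uploader | User name of the uploader (string) |
| age | Video age | Whole number of days the video has been on the platform |
| category | Video category | Categories assigned to the video at upload |
| length | Video length | Video length, as an integer |
| views | View count | Number of times the video was viewed |
| rate | Video rating | Out of 5 |
| ratings | Traffic | Video traffic, as an integer |
| comments | Comment count | Integer number of comments on the video |
| related ids | Related video ids | Ids of related videos, at most 20 |

2. User table

| Field | Note | Type |
|---|---|---|
| uploader | Uploader user name | string |
| videos | Number of uploaded videos | int |
| friends | Number of friends | int |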

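10.2.2 ETL of the raw data

Looking at the raw data, a video can belong to several categories, separated by an & with a space on each side, and a video can also have several related videos, separated by "\t". To make these multi-valued fields easier to work with during analysis, the data is first cleaned and restructured: the categories are separated by a bare "&" with the surrounding spaces removed, and the related video ids are also joined with "&".

Create a new project named guli-video.

Add the dependencies: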

<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.7.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.7.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.7.2</version>
    </dependency>
</dependencies>

Create the ETLUtil cleaning class:

package com.dahuan.util;

public class ETLUtil {

    /**
     * 1. Drop records that are too short (fewer than 9 fields).
     * 2. Remove the spaces in the category field.
     * 3. Change the separator of the related video ids from '\t' to '&'.
     *
     * @param oriStr the raw input line
     * @return the cleaned line, or null if the record is dropped
     */
    public static String etlStr(String oriStr) {
        // A mutable buffer that grows automatically when its capacity is exceeded.
        // It is local to this call, so StringBuilder is enough and the
        // thread safety of StringBuffer is not needed.
        StringBuilder sb = new StringBuilder();

        // 1. Split oriStr into fields
        String[] fields = oriStr.split("\t");

        // 2. Filter on the number of fields
        if (fields.length < 9) {
            return null;
        }

        // 3. Remove the spaces in the category field (the 4th field, so index 3)
        fields[3] = fields[3].replaceAll(" ", "");

        // 4. Change the separator of the related video ids from '\t' to '&'
        for (int i = 0; i < fields.length; i++) {
            if (i < 9) {
                // Fields before the related ids stay tab-separated
                if (i == fields.length - 1) {
                    sb.append(fields[i]);
                } else {
                    sb.append(fields[i]).append("\t");
                }
            } else {
                // Related id fields are joined with '&'
                if (i == fields.length - 1) {
                    sb.append(fields[i]);
                } else {
                    sb.append(fields[i]).append("&");
                }
            }
        }

        // 5. Return the result
        return sb.toString();
    }
}
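To see what the three cleaning rules do to a single record, here is a minimal self-contained sketch. It is not the project's class: `EtlSketch` and the sample line are made up for illustration, and the rules are re-implemented with `String.join` so the block runs without Hadoop on the classpath.

```java
import java.util.Arrays;

class EtlSketch {
    // Same three rules as etlStr, written with String.join (illustrative re-implementation).
    static String clean(String line) {
        String[] f = line.split("\t");
        if (f.length < 9) {
            return null;                       // rule 1: drop short records
        }
        f[3] = f[3].replace(" ", "");          // rule 2: "People & Blogs" -> "People&Blogs"
        String head = String.join("\t", Arrays.copyOfRange(f, 0, 9));
        if (f.length == 9) {
            return head;                       // no related ids on this record
        }
        // rule 3: related ids are joined with '&' instead of '\t'
        String related = String.join("&", Arrays.copyOfRange(f, 9, f.length));
        return head + "\t" + related;
    }

    public static void main(String[] args) {
        // Hypothetical record: 9 leading fields followed by three related ids.
        String raw = "v1\tu1\t10\tPeople & Blogs\t60\t1000\t4.5\t10\t5\tr1\tr2\tr3";
        System.out.println(clean(raw));
    }
}
```

After cleaning, the record keeps its first nine fields tab-separated, the category becomes `People&Blogs`, and the related ids collapse into the single field `r1&r2&r3`, which is the shape the `collection items terminated by "&"` clause in the table definitions expects.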

Create the ETLMapper class:

package com.dahuan.mr;

import com.dahuan.util.ETLUtil;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class ETLMapper extends Mapper<LongWritable, Text, NullWritable, Text> {

    // Output value, reused across map() calls
    private Text v = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Read the input line
        String oriStr = value.toString();

        // Clean the line
        String etlStr = ETLUtil.etlStr(oriStr);

        // Skip dropped records, write out the rest
        if (etlStr == null) {
            return;
        }
        v.set(etlStr);
        context.write(NullWritable.get(), v);
    }
}

Create the ETLDriver class:

package com.dahuan.mr;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class ETLDriver implements Tool {

    private Configuration configuration;

    @Override
    public int run(String[] args) throws Exception {
        // 1. Get the Job object
        Job job = Job.getInstance(configuration);

        // 2. Set the jar by class
        job.setJarByClass(ETLDriver.class);

        // 3. Set the Mapper class and its output key/value types
        job.setMapperClass(ETLMapper.class);
        job.setMapOutputKeyClass(NullWritable.class);
        job.setMapOutputValueClass(Text.class);

        // 4. Set the final output key/value types
        job.setOutputKeyClass(NullWritable.class);
        job.setOutputValueClass(Text.class);

        // 5. Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 6. Submit the job
        boolean result = job.waitForCompletion(true);
        return result ? 0 : 1;
    }

    // Store the Configuration passed in by ToolRunner
    @Override
    public void setConf(Configuration conf) {
        configuration = conf;
    }

    // Return the Configuration
    @Override
    public Configuration getConf() {
        return configuration;
    }

    public static void main(String[] args) {
        // Build the configuration
        Configuration configuration = new Configuration();
        try {
            // ToolRunner.run takes the configuration, the Tool, and the arguments
            int run = ToolRunner.run(configuration, new ETLDriver(), args);
            System.out.println(run);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

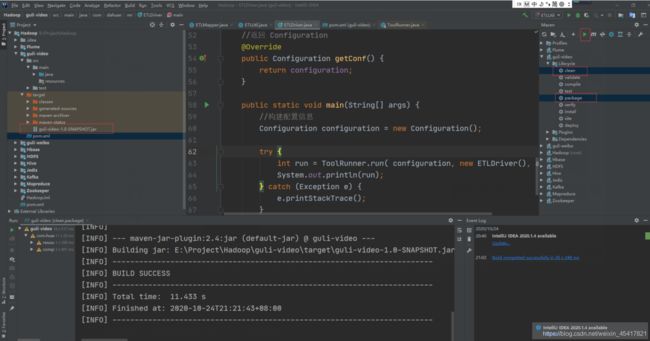

Package the jar:

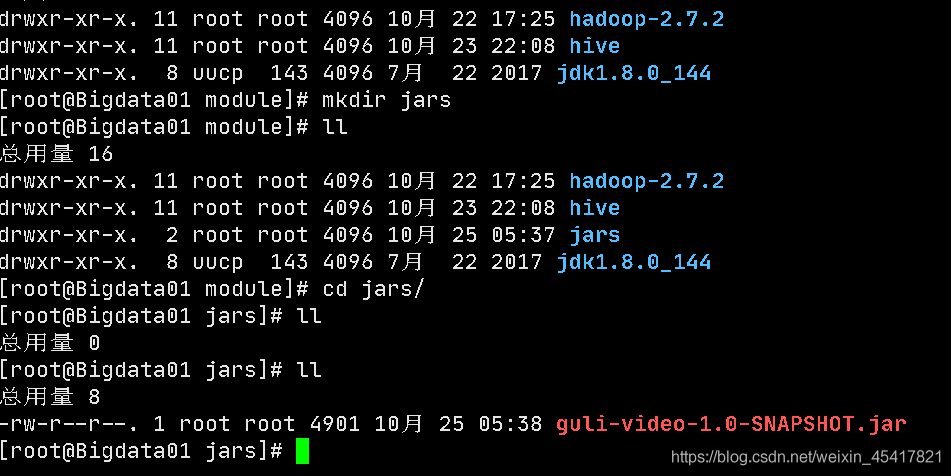

Create a jars directory and put the jar in it.

After uploading it to the cluster, run the following command:

[root@Bigdata01 jars]# ll

total 8

-rw-r--r--. 1 root root 4901 Oct 25 05:38 guli-video-1.0-SNAPSHOT.jar

[root@Bigdata01 jars]# yarn jar guli-video-1.0-SNAPSHOT.jar \

> com.dahuan.mr.ETLDriver /video/2008/0222 /guliout

10.3 Preparation

10.3.1 Create the tables

Create the text tables gulivideo_ori and gulivideo_user_ori, and the ORC tables gulivideo_orc and gulivideo_user_orc.

gulivideo_ori:

create table gulivideo_ori(

videoId string,

uploader string,

age int,

category array<string>,

length int,

views int,

rate float,

ratings int,

comments int,

relatedId array<string>)

row format delimited

fields terminated by "\t"

collection items terminated by "&"

stored as textfile;

gulivideo_user_ori:

create table gulivideo_user_ori(

uploader string,

videos int,

friends int)

row format delimited

fields terminated by "\t"

stored as textfile;

Next, create the ORC tables that the raw data will be inserted into:

gulivideo_orc:

create table gulivideo_orc(

videoId string,

uploader string,

age int,

category array<string>,

length int,

views int,

rate float,

ratings int,

comments int,

relatedId array<string>)

row format delimited fields terminated by "\t"

collection items terminated by "&"

stored as orc;

gulivideo_user_orc:

create table gulivideo_user_orc(

uploader string,

videos int,

friends int)

row format delimited

fields terminated by "\t"

stored as orc;

10.3.2 Load the cleaned data

Load the cleaned output on the cluster into the gulivideo_ori table:

hive (default)> load data inpath '/guliout/part-r-00000' into table gulivideo_ori;

Loading data to table default.gulivideo_ori

Table default.gulivideo_ori stats: [numFiles=1, totalSize=212238254]

OK

Time taken: 1.67 seconds

Check the contents of gulivideo_ori:

hive (default)> select * from gulivideo_ori limit 3;

OK

gulivideo_ori.videoid gulivideo_ori.uploader gulivideo_ori.age gulivideo_ori.category gulivideo_ori.length gulivideo_ori.views gulivideo_ori.rate gulivideo_ori.ratings gulivideo_ori.comments gulivideo_ori.relatedid

o4x-VW_rCSE HollyW00d81 581 ["Entertainment"] 74 3534116 4.48 9538 7756 ["o4x-VW_rCSE","d2FEj5BCmmM","8kOs3J0a2aI","7ump9ir4w-I","w4lMCVUbAyA","cNt29huGNoc","1JVqsS16Hw8","ax58nnnNu2o","CFHDCz3x58M","qq-AALY0DE8","2VHU9CBNTaA","KLzMKnrBVWE","sMXQ8KC4w-Y","igecQ61MPP4","B3scImOTl7U","X1Qg9gQKEzI","7krlgBd8-J8","naKnVbWBwLQ","rmWvPbmbs8U","LMDik7Nc7PE"]

P1OXAQHv09E smosh 434 ["Comedy"] 59 3068566 4.55 15530 7919 ["uGiGFQDS7mQ","j1aBQPCZoNE","WsmC6GXMj3I","pjgxSfhgQVE","T8vAZsCNJn8","7taTSPQUUMc","pkCCDp7Uc8c","NfajJLln0Zk","tD-ytSD-A_c","eHt1hQYZa2Y","qP9zpln4JVk","zK7p3o_Mqz4","ji2qlWmhblw","Hyu9HcqTcjE","YJ2W-GnuS0U","NHf2igxB8oo","rNfoeY7F6ig","XXugNPRMg-M","rpIAHWcCJVY","3V2msHD0zAg"]

N0TR0Irx4Y0 Brookers 228 ["Comedy"] 140 3836122 3.16 12342 8066 ["N0TR0Irx4Y0","hX21wbRAkx4","OnN9sX_Plvs","ygakq6lAogg","J3Jebemn-jM","bDgGYUA6Fro","aYcIG0Kmjxs","kMYX4JWke34","Cy8hppgAMR0","fzDTn342L3Q","kOq6sFmoUr0","HToQAB2kE3s","uuCr-nXLCRo","KDrJSNIGNDQ","pb13ggOw9CU","nDGRoqfwaIo","F2XZg0ocMPo","AMRBGt2fQGU","sKq0q8JdriI","bHnAETS5ssE"]

Time taken: 1.086 seconds, Fetched: 3 row(s)

Load user.txt from the cluster into gulivideo_user_ori:

hive (default)> load data inpath '/user/2008/0903/user.txt' into table gulivideo_user_ori;

Loading data to table default.gulivideo_user_ori

Table default.gulivideo_user_ori stats: [numFiles=1, totalSize=36498078]

OK

Time taken: 0.812 seconds

Check the contents of gulivideo_user_ori:

hive (default)> select * from gulivideo_user_ori limit 5;

OK

gulivideo_user_ori.uploader gulivideo_user_ori.videos gulivideo_user_ori.friends

barelypolitical 151 5106

bonk65 89 144

camelcars 26 674

cubskickass34 13 126

boydism08 32 50

Time taken: 0.202 seconds, Fetched: 5 row(s)

10.3.3 Insert data into the ORC tables

gulivideo_orc:

insert into table gulivideo_orc select * from gulivideo_ori;

gulivideo_user_orc:

insert into table gulivideo_user_orc select * from gulivideo_user_ori;

10.4 Business analysis

10.4.1 Top 10 videos by view count

SELECT videoId, views

FROM gulivideo_orc

ORDER BY views DESC

LIMIT 10;

The result:

videoid views

dMH0bHeiRNg 42513417

0XxI-hvPRRA 20282464

1dmVU08zVpA 16087899

RB-wUgnyGv0 15712924

QjA5faZF1A8 15256922

-_CSo1gOd48 13199833

49IDp76kjPw 11970018

tYnn51C3X_w 11823701

pv5zWaTEVkI 11672017

D2kJZOfq7zk 11184051

Time taken: 56.775 seconds, Fetched: 10 row(s)

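10.4.2 Top 10 video categories by popularity

Approach:

- Count how many videos each category contains, and show the 10 categories with the most videos.

- Group by category, then count the videoIds in each group.

- In the current table one video maps to one or more categories, so before grouping by category the category column has to be exploded into rows; the count comes after that.

- Finally sort by popularity and show the first 10 rows.

Here the popularity of a category is defined as the number of videos in it.

1. Use a UDTF to explode the category column: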

hive (default)> select

> videoId,category_name

> from

> gulivideo_orc

> lateral view explode(category) tmp as category_name

> limit 5;

2. Group by category_name, count the videos in each category, sort by that count in descending order, and take the top 10:

hive (default)> select

> category_name,count(*) category_count

> from (select

> videoId,category_name

> from

> gulivideo_orc

> lateral view explode(category) tmp as category_name)t1

> group by

> category_name

> order by category_count desc

> limit 10;

The result:

category_name category_count

Music 179049

Entertainment 127674

Comedy 87818

Animation 73293

Film 73293

Sports 67329

Gadgets 59817

Games 59817

Blogs 48890

People 48890

Time taken: 107.829 seconds, Fetched: 10 row(s)
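The explode, group, count, sort pipeline above can also be traced in memory. The following is only a sketch of the same logic in Java, not how Hive executes the query: `CategoryCountSketch` and its sample data are hypothetical, and the tie-break on category name is added here only to make the order deterministic (the Hive query does not specify one).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

class CategoryCountSketch {
    // videoCategories holds one list per video: that video's category array.
    static List<Map.Entry<String, Long>> topCategories(List<List<String>> videoCategories, int n) {
        Map<String, Long> counts = videoCategories.stream()
                .flatMap(List::stream)                    // lateral view explode(category)
                .collect(Collectors.groupingBy(c -> c,    // group by category_name
                        Collectors.counting()));          // count(*)
        List<Map.Entry<String, Long>> rows = new ArrayList<>(counts.entrySet());
        rows.sort((a, b) -> {
            int byCount = Long.compare(b.getValue(), a.getValue());   // order by count desc
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        return rows.subList(0, Math.min(n, rows.size()));             // limit n
    }

    public static void main(String[] args) {
        // Made-up data: three videos, one of them in two categories.
        List<List<String>> data = Arrays.asList(
                Arrays.asList("Music"),
                Arrays.asList("Film", "Animation"),
                Arrays.asList("Music", "Comedy"));
        for (Map.Entry<String, Long> row : topCategories(data, 2)) {
            System.out.println(row.getKey() + "\t" + row.getValue());
        }
    }
}
```

Because a video in two categories contributes one row to each, categories that always appear together end up with identical counts, which is consistent with the pairs of equal counts in the result above.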

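10.4.3 Categories of the 20 most-viewed videos, and how many Top 20 videos each category contains

Approach:

- Find all the information for the 20 most-viewed videos, in descending order.

- Explode the category of these 20 rows into one row per category.

- Finally, query each category name and the number of Top 20 videos in it.

1. Find the Top 20 videos by view count: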

SELECT videoId,

views,

category

FROM gulivideo_orc

ORDER BY views DESC limit 20; -- call this result t1

2. Explode category in t1:

SELECT videoId,

category_name

FROM t1 lateral view explode(category) tmp AS category_name;

SELECT videoId,

category_name

FROM

(SELECT videoId,

views,

category

FROM gulivideo_orc

ORDER BY views DESC limit 20)t1 lateral view explode(category) tmp AS category_name; -- call this result t2

3. Group t2 by category_name and count the rows:

SELECT category_name,

count(*) category_count

FROM t2

GROUP BY category_name

ORDER BY category_count desc;

Final SQL:

SELECT category_name,

count(*) category_count

FROM

(SELECT videoId,

category_name

FROM

(SELECT videoId,

views,

category

FROM gulivideo_orc

ORDER BY views DESC limit 20)t1 lateral view explode(category) tmp AS category_name)t2

GROUP BY category_name

ORDER BY category_count desc;

Result:

OK

category_name category_count

Entertainment 6

Comedy 6

Music 5

People 2

Blogs 2

UNA 1

Time taken: 160.625 seconds, Fetched: 6 row(s)

10.4.4 Category rank of the videos related to the Top 50 most-viewed videos

Approach:

1. Find the Top 50 videos by view count:

SELECT relatedId,

views

FROM gulivideo_orc

ORDER BY views DESC limit 50; -- call this result t1

2. Explode relatedId in t1 and deduplicate the ids with GROUP BY:

SELECT related_id

FROM t1 lateral view explode(relatedId) tmp AS related_id

GROUP BY related_id; -- call this result t2

SELECT related_id

FROM

(SELECT relatedId,

views

FROM gulivideo_orc

ORDER BY views DESC limit 50)t1 lateral view explode(relatedId) tmp AS related_id

GROUP BY related_id; -- call this result t2

3. Look up the categories of the videos related to the Top 50:

SELECT category

FROM t2

JOIN gulivideo_orc orc

ON t2.related_id=orc.videoId; -- call this result t3

SELECT category

FROM

(SELECT related_id

FROM

(SELECT relatedId,

views

FROM gulivideo_orc

ORDER BY views DESC limit 50)t1 lateral view explode(relatedId) tmp AS related_id

GROUP BY related_id)t2

JOIN gulivideo_orc orc

ON t2.related_id=orc.videoId;

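4. Explode category in t3, naming the output column category_name so the next step can group by it:

SELECT explode(category) AS category_name

FROM t3;

SELECT explode(category) AS category_name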

FROM

(SELECT category

FROM

(SELECT related_id

FROM

(SELECT relatedId,

views

FROM gulivideo_orc

ORDER BY views DESC limit 50)t1 lateral view explode(relatedId) tmp AS related_id

GROUP BY related_id)t2

JOIN gulivideo_orc orc

ON t2.related_id=orc.videoId)t3; -- call this result t4

5. Group by category and count:

SELECT category_name,

count(*) category_count

FROM t4

GROUP BY category_name

ORDER BY category_count desc;

Final SQL:

SELECT category_name,

count(*) category_count

FROM

(SELECT explode(category) category_name

FROM

(SELECT category

FROM

(SELECT related_id

FROM

(SELECT relatedId,

views

FROM gulivideo_orc

ORDER BY views DESC limit 50)t1 lateral view explode(relatedId) tmp AS related_id

GROUP BY related_id)t2

JOIN gulivideo_orc orc

ON t2.related_id=orc.videoId)t3)t4

GROUP BY category_name

ORDER BY category_count desc;
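Since the nested query is hard to read, the same steps (t1 to t4, then the count) can be traced on a tiny in-memory data set. This is a sketch only: `RelatedCategorySketch`, its `Video` class, and the sample rows are hypothetical, it assumes videoId is unique so the join reduces to a lookup, and it leaves out the final ORDER BY.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.stream.Collectors;

class RelatedCategorySketch {
    // Hypothetical in-memory stand-in for one row of gulivideo_orc.
    static final class Video {
        final String id;
        final int views;
        final List<String> categories;
        final List<String> relatedIds;

        Video(String id, int views, List<String> categories, List<String> relatedIds) {
            this.id = id;
            this.views = views;
            this.categories = categories;
            this.relatedIds = relatedIds;
        }
    }

    static Map<String, Long> relatedCategoryCounts(List<Video> videos, int topN) {
        // t1 + t2: top N by views, explode relatedId, deduplicate the ids
        Set<String> relatedIds = videos.stream()
                .sorted(Comparator.comparingInt((Video v) -> v.views).reversed())
                .limit(topN)
                .flatMap(v -> v.relatedIds.stream())
                .collect(Collectors.toSet());
        // t3 + t4 + count: join back on videoId, explode category, count per category
        return videos.stream()
                .filter(v -> relatedIds.contains(v.id))
                .flatMap(v -> v.categories.stream())
                .collect(Collectors.groupingBy(c -> c, TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        List<Video> videos = Arrays.asList(
                new Video("a", 100, Arrays.asList("Music"), Arrays.asList("b", "c")),
                new Video("b", 50, Arrays.asList("Comedy"), Arrays.asList("a")),
                new Video("c", 10, Arrays.asList("Music", "Comedy"), Collections.<String>emptyList()),
                new Video("d", 5, Arrays.asList("Sports"), Collections.<String>emptyList()));
        System.out.println(relatedCategoryCounts(videos, 1));
    }
}
```

With topN set to 1 the only top video is `a`, its related videos are `b` and `c`, and only their categories are counted; `d` never appears because nothing in the top set points to it.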

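10.4.5 Top 10 videos by popularity within each category, using Music as the example

Approach:

- To get the Top 10 videos by popularity in the Music category, the Music category has to be found first, which means exploding category. So create a table that holds the data with category exploded into categoryId.

- Insert data into that exploded table.

- Compute the video popularity for the chosen category (Music).

Final code:

Create the category table: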

create table gulivideo_category(

videoId string,

uploader string,

age int,

categoryId string,

length int,

views int,

rate float,

ratings int,

comments int,

relatedId array<string>)

row format delimited

fields terminated by "\t"

collection items terminated by "&"

stored as orc;

Insert data into the category table:

insert into table gulivideo_category

select

videoId,

uploader,

age,

categoryId,

length,

views,

rate,

ratings,

comments,

relatedId

from

gulivideo_orc lateral view explode(category) tmp as categoryId;

Get the Top 10 for the Music category (any other category works the same way):

select

videoId,

views

from

gulivideo_category

where

categoryId = "Music"

order by

views

desc limit

10;

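10.4.6 Top 10 videos by traffic within each category, using Music as the example

Approach:

- Create the exploded category table (the table with category turned into one row per categoryId).

- Sort by ratings.

Final code: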

select

videoId,

views,

ratings

from

gulivideo_category

where

categoryId = "Music"

order by

ratings

desc limit

10;

10.4.7 Top 10 users by number of uploads, and the 20 most-viewed videos they uploaded

1. Find the Top 10 users by number of uploaded videos:

select

uploader,videos

from gulivideo_user_orc

order by videos desc

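limit 10; -- call this result t1

2. Take all the videos uploaded by these 10 users, rank them by view count, and keep the top 20. Here t1 is inlined as a subquery so that the statement runs as written:

select
video.videoId,
video.views
from (select
uploader,videos
from gulivideo_user_orc
order by videos desc
limit 10)t1
join
gulivideo_orc video
on t1.uploader=video.uploader
order by views desc
limit 20;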

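10.4.8 Top 10 videos by view count in each category

Approach:

- Start from the table with categoryId exploded.

- In a subquery, partition by categoryId, sort within each partition, and generate an increasing number for each row; this column is the rank column (named rk in the code).

- From the temporary table produced by the subquery, select the rows whose rank is less than or equal to 10.

Final code:

1. Add a rank to every video within its category, ordered by view count descending: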

select

categoryId,videoId,views,

rank() over(partition by categoryId order by views desc) rk

from

gulivideo_category;

2. Keep the top ten:

select

categoryId,videoId,views

from (select

categoryId,videoId,views,

rank() over(partition by categoryId order by views desc) rk

from

gulivideo_category)t1

where rk <= 10;
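One detail of rank() is worth seeing on concrete numbers: tied rows share a rank and the following rank is skipped, so the filter on rk can return more than 10 rows for a category when view counts tie. The sketch below reproduces that numbering for a single partition; `RankSketch` and the sample view counts are made up for illustration.

```java
import java.util.Arrays;

class RankSketch {
    // rank() semantics over one partition whose values are already sorted descending:
    // ties share a rank, and the rank after a tie skips ahead.
    static int[] rankDesc(int[] sortedDesc) {
        int[] rk = new int[sortedDesc.length];
        for (int i = 0; i < sortedDesc.length; i++) {
            boolean tiedWithPrevious = i > 0 && sortedDesc[i] == sortedDesc[i - 1];
            rk[i] = tiedWithPrevious ? rk[i - 1] : i + 1;
        }
        return rk;
    }

    public static void main(String[] args) {
        int[] views = {90, 80, 80, 70};   // hypothetical view counts in one category
        System.out.println(Arrays.toString(rankDesc(views)));
    }
}
```

For these four values the ranks are 1, 2, 2, 4, so a filter of rk <= 2 keeps three rows. If exactly N rows per category are required, row_number() can be used in place of rank(), at the cost of breaking ties arbitrarily.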

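Common errors and fixes

1) SecureCRT 7.3 shows garbled characters or cannot delete input. Uninstall the portable version of SecureCRT, then either work directly in the virtual machine or switch to the installed version of SecureCRT.

2) Cannot connect to the MySQL database

(1) The wrong driver package was copied: mysql-connector-java-5.1.27-bin.jar should go into /opt/module/hive/lib, but mysql-connector-java-5.1.27.tar.gz was put under hive/lib by mistake.

(2) The host values in the user table were not all changed to %; they were changed to localhost instead.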

3) Hive's default input format is CombineHiveInputFormat, which merges small files.

hive (default)> set hive.input.format;

hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat

With HiveInputFormat, the files are output according to the number of partitions.

hive (default)> set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

4) If MapReduce programs cannot run, YARN may not have been started in Hadoop.

5) Starting the MySQL service fails with "MySQL server PID file could not be found!". Create hadoop102.pid under /var/lock/subsys/mysql and put the content 4396 in the file.

6) service mysql status reports "MySQL is not running, but lock file (/var/lock/subsys/mysql[FAILED])".

Fix: in the /var/lib/mysql directory, create the file hadoop102.pid (listed as -rw-rw----. 1 mysql mysql 5 Dec 22 16:41 hadoop102.pid) and change its permissions to 777.

7) JVM heap out of memory

Description: java.lang.OutOfMemoryError: Java heap space

Fix: add the following to yarn-site.xml

<property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>2048</value>
</property>
<property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>2048</value>
</property>
<property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>2.1</value>
</property>
<property>
    <name>mapred.child.java.opts</name>
    <value>-Xmx1024m</value>
</property>