PyTorch: Using the Visdom Visualization Tool with Deep Learning Neural Networks

0. Starting the Visdom server

First start the Visdom server with the command python -m visdom.server.

Then open http://localhost:8097 in a browser to reach the Visdom dashboard.
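Before running the examples below, it can help to confirm that something is actually listening on the port. The following is a minimal sketch using only the standard library; the helper name `visdom_port_open` is hypothetical, and it only checks that the TCP port accepts connections, not that the process behind it is really Visdom.

```python
import socket


def visdom_port_open(host="localhost", port=8097, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError (refused, timed out, ...) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if visdom_port_open():
    print("A server is listening on port 8097; open http://localhost:8097")
else:
    print("Nothing is listening on port 8097; run: python -m visdom.server")
```

If the check fails, the scripts in the following sections will not be able to draw anything, so start the server first.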

1. Displaying images

A single image:

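    import cv2
    import numpy as np
    import visdom

    # Connect to the Visdom server started above
    vis = visdom.Visdom(env='default2', server='http://127.0.0.1', port=8097)

    image_name = r'E:\datasets\mydata_1216\test\00000.png'
    image = cv2.imread(image_name)  # cv2.imread returns None if the file cannot be read
    if image is None:
        raise FileNotFoundError(image_name)

    # Visdom wants channels first, so move the channel axis to the front,
    # then reverse it to turn BGR into RGB
    image = np.ascontiguousarray(image.transpose(2, 0, 1)[::-1, ...])

    # vis.image draws one C x H x W image (vis.images is the batch variant)
    vis.image(image, win='win', opts=dict(title='picture'))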

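Visdom expects the channel dimension before height and width, that is (channels, height, width), whereas images are usually stored as (height, width, channels), so the axes have to be transposed. In addition, OpenCV reads images in BGR order while Visdom expects RGB, so the channel order has to be reversed as well.

Result: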

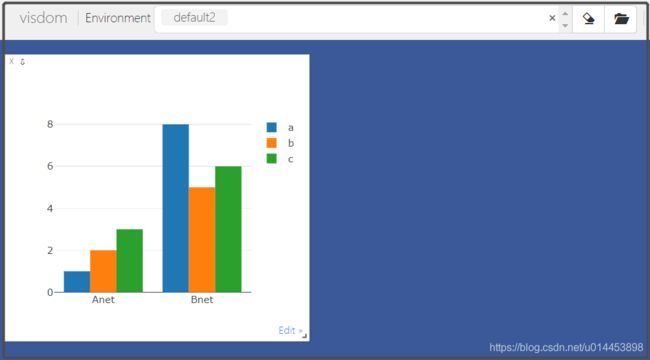
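The layout conversion described in this section can be checked without an image file or a running server. The sketch below uses a small synthetic array in place of the output of cv2.imread; the helper name `bgr_hwc_to_rgb_chw` is made up for illustration and is not part of OpenCV or Visdom.

```python
import numpy as np


def bgr_hwc_to_rgb_chw(image):
    """Convert an OpenCV-style (H, W, 3) BGR array to a (3, H, W) RGB array."""
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an array of shape (H, W, 3)")
    rgb = image[:, :, ::-1]           # reverse the channel axis: BGR -> RGB
    chw = rgb.transpose(2, 0, 1)      # channels first: (H, W, C) -> (C, H, W)
    return np.ascontiguousarray(chw)  # copy so the result has no negative strides


# A synthetic 2x4 "image" that is pure blue in BGR order.
fake_bgr = np.zeros((2, 4, 3), dtype=np.uint8)
fake_bgr[:, :, 0] = 255               # channel 0 is blue in BGR

converted = bgr_hwc_to_rgb_chw(fake_bgr)
print(converted.shape)                # (3, 2, 4)
print(converted[2].min())             # 255: blue is now the last (B) channel of RGB
```

The order of the two steps does not matter; the code in this section transposes first and then reverses the first axis, which gives the same result.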

2. Displaying a bar chart

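    import numpy as np
    import visdom

    vis = visdom.Visdom(env='default2', server='http://127.0.0.1', port=8097)  # connect to the Visdom server

    def bar(name, data, pins, legendnum, *args):
        """Draw a grouped bar chart.

        data: flat array of values; pins: number of groups (rows);
        legendnum: number of values per group; args: the `pins` group names
        followed by the `legendnum` legend labels.
        """
        args = list(args)
        rownames = args[:pins]  # one name per group
        legend = args[pins:]    # one label per value within a group
        data = data.reshape(pins, legendnum)
        vis.bar(X=data, win=name,
                opts=dict(stacked=False, rownames=rownames, legend=legend))

    # The list is wrapped in a NumPy array because reshape is a NumPy method
    bar('bar', np.array([1, 2, 3, 8, 5, 6]), 2, 3, 'Anet', 'Bnet', 'a', 'b', 'c')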

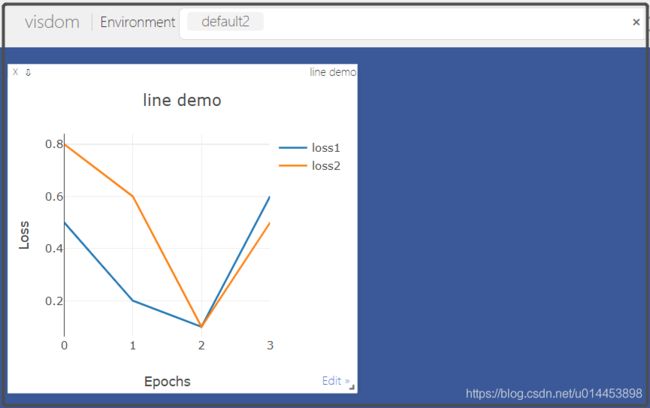
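How the flat data and the label list are split up in the bar example can be tried out without a server. This is a small sketch with a hypothetical helper, `prepare_bar_inputs`, that only prepares the arguments; it assumes the labels are passed as the group names followed by the legend entries, as in the call above.

```python
import numpy as np


def prepare_bar_inputs(values, groups, per_group, *labels):
    """Reshape flat values to (groups, per_group) and split the labels."""
    labels = list(labels)
    if len(labels) != groups + per_group:
        raise ValueError("expected one label per group plus one per legend entry")
    data = np.asarray(values).reshape(groups, per_group)
    return data, labels[:groups], labels[groups:]


data, rownames, legend = prepare_bar_inputs(
    [1, 2, 3, 8, 5, 6], 2, 3, 'Anet', 'Bnet', 'a', 'b', 'c')
print(data.shape)  # (2, 3)
print(rownames)    # ['Anet', 'Bnet']
print(legend)      # ['a', 'b', 'c']
```

Each row of `data` is one group of bars ('Anet' gets 1, 2, 3 and 'Bnet' gets 8, 5, 6), and each column corresponds to one legend entry.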

3. Displaying line charts

Drawing static lines:

    import numpy as np
    import visdom

    vis = visdom.Visdom(env='default2', server='http://127.0.0.1', port=8097)  # connect to the Visdom server

    epochs = 4
    x = np.arange(epochs)
    loss1 = np.array([0.5, 0.2, 0.1, 0.6])
    loss2 = np.array([0.8, 0.6, 0.1, 0.5])

    # One column per curve: Y and X both have shape (epochs, 2)
    vis.line(Y=np.column_stack((loss1, loss2)),
             X=np.column_stack((x, x)),
             win='line',
             opts=dict(legend=["loss1", "loss2"],
                       title='line demo',
                       xlabel='Epochs',
                       ylabel='Loss'))

Drawing a line dynamically:

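    import time
    import numpy as np
    import visdom

    vis = visdom.Visdom(env='default2', server='http://127.0.0.1', port=8097)  # connect to the Visdom server

    epochs = 10
    x, y = 0, 0

    # Create the window with a first point and keep the returned window id
    win = vis.line(X=np.array([x]), Y=np.array([y]), opts=dict(title='lines'))

    for i in range(epochs):
        y += i
        time.sleep(1)  # stand-in for one epoch of work
        # update='append' adds the new point to the existing window
        vis.line(X=np.array([i]), Y=np.array([y]), win=win, update='append')

Drawing several lines dynamically: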

    import time
    import numpy as np
    import visdom

    vis = visdom.Visdom(env='default2', server='http://127.0.0.1', port=8097)  # connect to the Visdom server

    epochs = 10
    loss1 = 0.0
    loss2 = 0.0
    acc = 0.0

    for i in range(epochs):
        loss1 += i
        loss2 += 2 * i
        acc += 0.5 * i
        time.sleep(1)
        # Each update is a single row with one column per curve: shape (1, 3)
        vis.line(Y=np.array([[loss1, loss2, acc]]),
                 X=np.array([[i, i, i]]),
                 win='line',
                 update='append',
                 opts=dict(legend=["loss1", "loss2", "acc"],
                           title='line demo',
                           xlabel='Epochs',
                           ylabel='Value'))

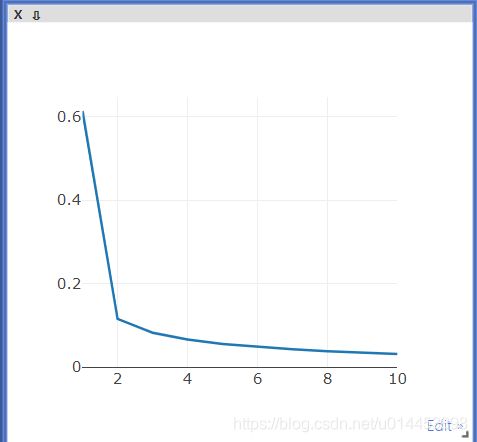
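The line examples in this section all rely on the same array layout: one row per x position and one column per curve. The sketch below builds those arrays with NumPy only, so the shapes can be inspected before anything is sent to the server; `one_step` is a hypothetical helper, not a Visdom function.

```python
import numpy as np

epochs = 4
x = np.arange(epochs)
loss1 = np.array([0.5, 0.2, 0.1, 0.6])
loss2 = np.array([0.8, 0.6, 0.1, 0.5])

# Static plot: every epoch at once, one column per curve.
Y_static = np.column_stack((loss1, loss2))
X_static = np.column_stack((x, x))
print(Y_static.shape, X_static.shape)  # (4, 2) (4, 2)


def one_step(step, *values):
    """Build the (1, M) X and Y arrays for appending one point to M curves."""
    Y = np.array([values], dtype=float)
    X = np.full_like(Y, step)  # the same x position for every curve
    return X, Y


# Dynamic plot: each append sends a single row.
X_step, Y_step = one_step(3, 0.6, 0.5)
print(X_step.shape, Y_step.shape)      # (1, 2) (1, 2)
```

Arrays shaped like `X_step` and `Y_step` are what a call such as vis.line(X=..., Y=..., win=..., update='append') would receive on each iteration.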

4. Plotting the loss curve of a neural network (a CNN trained on the MNIST dataset):

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim
    import visdom
    from torchvision import datasets, transforms

    lr = 0.01            # learning rate
    momentum = 0.5
    log_interval = 10    # print a log line once every this many batches
    epochs = 10
    batch_size = 64
    test_batch_size = 1000


    class LeNet(nn.Module):
        def __init__(self):
            super(LeNet, self).__init__()
            self.conv1 = nn.Sequential(                 # input: 1 x 28 x 28
                nn.Conv2d(1, 6, 5, 1, 2),               # padding=2 keeps the spatial size: 6 x 28 x 28
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2),  # output: 6 x 14 x 14
            )
            self.conv2 = nn.Sequential(
                nn.Conv2d(6, 16, 5),                    # output: 16 x 10 x 10
                nn.ReLU(),
                nn.MaxPool2d(2, 2),                     # output: 16 x 5 x 5
            )
            self.fc1 = nn.Sequential(
                nn.Linear(16 * 5 * 5, 120),
                nn.ReLU(),
            )
            self.fc2 = nn.Sequential(
                nn.Linear(120, 84),
                nn.ReLU(),
            )
            self.fc3 = nn.Linear(84, 10)

        # Forward pass for an input batch x
        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            # nn.Linear expects (batch, features), so flatten everything
            # after the batch dimension
            x = x.view(x.size(0), -1)
            x = self.fc1(x)
            x = self.fc2(x)
            x = self.fc3(x)
            return x  # raw logits; F.cross_entropy applies log-softmax itself


    def train(epoch):  # one epoch of training
        model.train()  # switch to training mode
        loss_total = 0.0
        for batch_idx, (data, target) in enumerate(train_loader):
            data = data.to(device)
            target = target.to(device)
            optimizer.zero_grad()                   # reset the gradients
            output = model(data)                    # forward pass
            loss = F.cross_entropy(output, target)  # cross-entropy loss
            loss_total += loss.item()               # accumulate a float, not a tensor with its graph
            loss.backward()                         # backpropagate
            optimizer.step()                        # update the parameters
            if batch_idx % log_interval == 0:       # print progress
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))
        aver_loss = loss_total / len(train_loader)  # mean batch loss over the epoch
        # Append one point per epoch to the loss curve
        vis.line(X=np.array([epoch]), Y=np.array([aver_loss]),
                 win='windows', update='append')

    if __name__ == '__main__':
        vis = visdom.Visdom(env='cnn', server='http://127.0.0.1', port=8097)   # connect to the Visdom server
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # use the GPU when available
        train_loader = torch.utils.data.DataLoader(  # training data
            datasets.MNIST('../data', train=True, download=True,
                           transform=transforms.Compose([
                               transforms.ToTensor(),
                               # Commonly used mean and standard deviation for MNIST;
                               # every dataset has its own values
                               transforms.Normalize((0.1307,), (0.3081,)),
                           ])),
            batch_size=batch_size, shuffle=True)
        model = LeNet()  # instantiate the network
        model = model.to(device)
        optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum)  # set up the optimizer
        for epoch in range(1, epochs + 1):  # loop over epochs
            train(epoch)
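The sizes noted in the LeNet comments, and the 16 * 5 * 5 input of the first fully connected layer, follow from the usual output-size formula for convolution and pooling layers, floor((size + 2 * padding - kernel) / stride) + 1. The following sketch redoes that arithmetic in plain Python; `conv_out` is a hypothetical helper written for this check and assumes square inputs and no dilation.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution or pooling window along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                                   # MNIST images are 28 x 28
size = conv_out(size, kernel=5, padding=2)  # conv1 keeps 28
size = conv_out(size, kernel=2, stride=2)   # pool1 halves it to 14
size = conv_out(size, kernel=5)             # conv2 shrinks it to 10
size = conv_out(size, kernel=2, stride=2)   # pool2 halves it to 5

flat_features = 16 * size * size            # 16 channels after conv2
print(size, flat_features)                  # 5 400
```

The result, 400, matches the nn.Linear(16 * 5 * 5, 120) layer, which is why the tensor can be flattened and passed straight into fc1.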