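Hi everyone, I'm Iggi.

Today I'm sharing a Join experiment on MapReduce2-3.1.1.

For a short introduction to MapReduce, please see my earlier example: MapReduce2-3.1.1 Experiment Example: Word Count (Part 1).

Now to the main topic: using Java with the MapReduce2 component to join two data sets.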

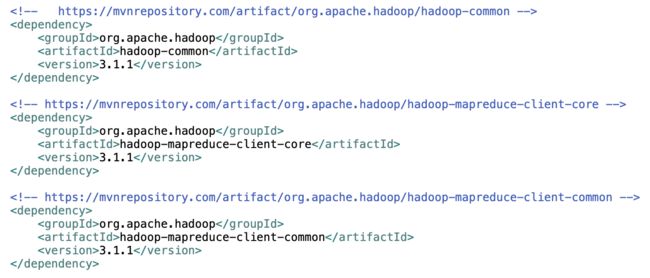

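First, create a Maven project in your IDE. Nothing special is needed during creation; just follow the wizard and click Finish. The important part is adding the dependencies to pom.xml, as shown in the screenshot above.

Once the dependency jars have been downloaded, you can start writing the program.

The experiment code (the driver class):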

package linose.mapreduce.join;

import java.io.IOException;
import java.io.OutputStreamWriter;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
//import org.apache.log4j.BasicConfigurator;

public class AppJoin {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

        /**
         * Run the example as the "hdfs" user so that it has permission to operate on HDFS.
         */
        System.setProperty("HADOOP_USER_NAME", "hdfs");

        /**
         * Cluster debug logging is left off so that the output is easier to read.
         * To see it, uncomment the line below and the BasicConfigurator import.
         */
        //BasicConfigurator.configure();

        /**
         * MapReduce experiment preparation:
         * define the HDFS file paths.
         * Despite its .txt suffix, the output path is a directory.
         */
        String defaultFS = "hdfs://master2.linose.cloud.beijing.com:8020";
        String inputPath1 = defaultFS + "/index.dirs/data1.txt";
        String inputPath2 = defaultFS + "/index.dirs/data2.txt";
        String outputPath = defaultFS + "/index.dirs/joinresult.txt";

        /**
         * Define the input paths and the output path.
         */
        Path inputHdfsPath1 = new Path(inputPath1);
        Path inputHdfsPath2 = new Path(inputPath2);
        Path outputHdfsPath = new Path(outputPath);

        /**
         * Create the configuration and obtain the HDFS FileSystem object.
         */
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", defaultFS);
        FileSystem system = FileSystem.get(conf);

        /**
         * Recreate the sample data files: delete any existing copy first,
         * then write fresh data.
         */
        system.delete(inputHdfsPath1, false);
        if (!system.exists(inputHdfsPath1)) {
            // data1 columns (tab-separated): timestamp, id, name, city, code
            FSDataOutputStream outputStream = system.create(inputHdfsPath1);
            OutputStreamWriter file = new OutputStreamWriter(outputStream);
            file.write("2019-05-13 13:00\t001\tIggi\tbeijing\t010\n");
            file.write("2019-05-13 14:00\t002\tchap\tbeijing\t010\n");
            file.write("2019-05-13 15:00\t003\ttxingsg\tbeijing\t010\n");
            file.write("2019-05-13 16:00\t004\tthedd\tbeijing\t010\n");
            file.write("2019-05-13 17:00\t005\ttlix\tbeijing\t010\n");
            file.close();
            outputStream.close();
        }

        system.delete(inputHdfsPath2, false);
        if (!system.exists(inputHdfsPath2)) {
            // data2 columns (tab-separated): id, label
            FSDataOutputStream outputStream = system.create(inputHdfsPath2);
            OutputStreamWriter file = new OutputStreamWriter(outputStream);
            file.write("002\tjava\n");
            file.write("003\tjava\n");
            file.write("004\tproduct\n");
            file.write("005\tmanager\n");
            file.close();
            outputStream.close();
        }

        /**
         * If the result directory already exists, delete every file in it
         * and then the directory itself.
         */
        if (system.exists(outputHdfsPath)) {
            RemoteIterator<LocatedFileStatus> fsIterator = system.listFiles(outputHdfsPath, true);
            LocatedFileStatus fileStatus;
            while (fsIterator.hasNext()) {
                fileStatus = fsIterator.next();
                system.delete(fileStatus.getPath(), false);
            }
            // Recursive delete, in case empty subdirectories remain.
            system.delete(outputHdfsPath, true);
        }

        /**
         * Create the MapReduce job and set its name.
         */
        Job job = Job.getInstance(conf, "Join Data Set");
        job.setJarByClass(JoinMapper.class);

        /**
         * Set the input files and the output directory.
         */
        FileInputFormat.addInputPath(job, inputHdfsPath1);
        FileInputFormat.addInputPath(job, inputHdfsPath2);
        FileOutputFormat.setOutputPath(job, outputHdfsPath);

        /**
         * Set the key and value types emitted by the Reduce class.
         */
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);

        /**
         * Set the key and value types emitted by the Map class.
         */
        job.setMapOutputKeyClass(TextPair.class);
        job.setMapOutputValueClass(Text.class);

        /**
         * Register the custom Map, Reduce, Partitioner and grouping Comparator classes.
         */
        job.setMapperClass(JoinMapper.mrJoinMapper.class);
        job.setReducerClass(JoinMapper.mrJoinReduce.class);
        job.setPartitionerClass(JoinMapper.mrJoinPartitioner.class);
        job.setGroupingComparatorClass(JoinMapper.mrJoinComparator.class);

        /**
         * Submit the job. waitForCompletion(true) blocks until the job
         * finishes and prints its progress while it runs.
         */
        job.waitForCompletion(true);

        /**
         * Report progress until the job is complete. Because waitForCompletion
         * has already returned, this loop normally runs only once.
         */
        float progress = 0.0f;
        do {
            progress = job.setupProgress();
            System.out.println("Join Data Set: current progress: " + progress * 100);
            Thread.sleep(1000);
        } while (progress != 1.0f && !job.isComplete());

        /**
         * If the job succeeded, print the contents of the output files.
         */
        if (job.isSuccessful()) {
            RemoteIterator<LocatedFileStatus> fsIterator = system.listFiles(outputHdfsPath, true);
            LocatedFileStatus fileStatus;
            while (fsIterator.hasNext()) {
                fileStatus = fsIterator.next();
                FSDataInputStream inputStream = system.open(fileStatus.getPath());
                IOUtils.copyBytes(inputStream, System.out, conf, false);
                inputStream.close();
                System.out.println("--------------------------------------------");
            }
        }
    }
}
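The driver registers a custom partitioner and a grouping comparator alongside the composite map output key. The partitioner has to look at the join key only, so that records with the same id from both input files reach the same reducer whatever their tag is. The following is a minimal sketch of that idea in plain Java, without any Hadoop classes; `JoinPartitionSketch` and `partitionFor` are hypothetical names introduced here for illustration. It also shows why masking the sign bit is a safer way to get a non-negative index than `Math.abs`.

```java
/** Plain-Java illustration of partitioning on the join key only (hypothetical helper). */
final class JoinPartitionSketch {

    /** Maps a join key to a partition index in the range [0, numPartitions). */
    static int partitionFor(String joinKey, int numPartitions) {
        // Clearing the sign bit keeps the result non-negative for every hash value,
        // including Integer.MIN_VALUE, for which Math.abs returns a negative number.
        return (joinKey.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }

    public static void main(String[] args) {
        int reducers = 3; // assumed reducer count, for illustration only

        // The tag ("0" or "1") is deliberately not an input here, so both
        // sides of id 002 are sent to the same partition.
        System.out.println("partition for 002: " + partitionFor("002", reducers));

        // Math.abs cannot represent the absolute value of Integer.MIN_VALUE.
        System.out.println("Math.abs(Integer.MIN_VALUE) < 0: " + (Math.abs(Integer.MIN_VALUE) < 0));
    }
}
```

Since the tag never enters the calculation, a key's partition is decided entirely by its id, which is the property the join relies on.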

The TextPair class written for the MapReduce2-3.1.1 experiment, used as the composite map output key:

package linose.mapreduce.join;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.WritableComparable;

public class TextPair implements WritableComparable<TextPair> {

    /**
     * pairKey is the matching (join) key;
     * specificKey is the specific key that tags which data set a record came from.
     */
    private Text pairKey;
    private Text specificKey;

    public TextPair() {
        set(new Text(), new Text());
    }

    public TextPair(Text pairKey, Text specificKey) {
        set(pairKey, specificKey);
    }

    public TextPair(String pairKey, String specificKey) {
        set(new Text(pairKey), new Text(specificKey));
    }

    public void set(Text pairKey, Text specificKey) {
        this.pairKey = pairKey;
        this.specificKey = specificKey;
    }

    public Text getPairKey() {
        return pairKey;
    }

    public Text getSpecificKey() {
        return specificKey;
    }

    // Deserialize the two fields in the same order in which write() emits them.
    @Override
    public void readFields(DataInput input) throws IOException {
        pairKey.readFields(input);
        specificKey.readFields(input);
    }

    @Override
    public void write(DataOutput output) throws IOException {
        pairKey.write(output);
        specificKey.write(output);
    }

    // Sort by the join key first, then by the tag.
    @Override
    public int compareTo(TextPair o) {
        int compare = pairKey.compareTo(o.pairKey);
        if (0 != compare) {
            return compare;
        }
        return specificKey.compareTo(o.specificKey);
    }

    @Override
    public int hashCode() {
        return pairKey.hashCode() * 163 + specificKey.hashCode();
    }

    @Override
    public boolean equals(Object o) {
        // Check against TextPair itself before casting.
        if (o instanceof TextPair) {
            TextPair pair = (TextPair) o;
            return pairKey.equals(pair.pairKey) && specificKey.equals(pair.specificKey);
        }
        return false;
    }

    @Override
    public String toString() {
        return pairKey + "\t" + specificKey;
    }
}
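The ordering defined by `compareTo` is what makes the join work: keys are sorted by join key first and by tag second, so for a given id the record tagged "0" arrives before the records tagged "1". The following is a rough sketch of that ordering in plain Java, with `String` fields standing in for Hadoop's `Text`. `CompositeKeySketch`, `Key` and `sortedKeys` are hypothetical names, and `String.compareTo` only matches `Text`'s byte-wise ordering for ASCII data such as the sample ids.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Plain-Java stand-in for a composite join key made of (joinKey, tag). */
final class CompositeKeySketch {

    static final class Key implements Comparable<Key> {
        final String joinKey;
        final String tag;

        Key(String joinKey, String tag) {
            this.joinKey = joinKey;
            this.tag = tag;
        }

        // Order by join key first, then by tag, so tag "0" precedes tag "1".
        @Override
        public int compareTo(Key other) {
            int byJoinKey = joinKey.compareTo(other.joinKey);
            return byJoinKey != 0 ? byJoinKey : tag.compareTo(other.tag);
        }

        @Override
        public String toString() {
            return joinKey + "/" + tag;
        }
    }

    /** Returns the keys, rendered as strings, in composite-key sort order. */
    static List<String> sortedKeys(List<Key> keys) {
        List<Key> copy = new ArrayList<>(keys);
        Collections.sort(copy);
        List<String> out = new ArrayList<>();
        for (Key k : copy) {
            out.add(k.toString());
        }
        return out;
    }

    public static void main(String[] args) {
        List<Key> keys = new ArrayList<>();
        keys.add(new Key("003", "1"));
        keys.add(new Key("002", "1"));
        keys.add(new Key("003", "0"));
        keys.add(new Key("002", "0"));
        System.out.println(sortedKeys(keys)); // prints [002/0, 002/1, 003/0, 003/1]
    }
}
```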

The Join class written for the MapReduce2-3.1.1 experiment (Mapper, Reducer, Partitioner and grouping Comparator):

package linose.mapreduce.join;

import java.io.IOException;
import java.util.Iterator;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Partitioner;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

public class JoinMapper {

    public static class mrJoinMapper extends Mapper<LongWritable, Text, TextPair, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Decide which data set the record belongs to from the input file name.
            FileSplit file = (FileSplit) context.getInputSplit();
            String path = file.getPath().toString();

            if (path.contains("data1.txt")) {
                String[] values = value.toString().split("\t");
                if (values.length < 3) {
                    return;
                }
                String specificKey = values[0];
                String pairKey = values[1];
                StringBuilder buffer = new StringBuilder();
                buffer.append(specificKey);
                buffer.append("\t");
                for (int i = 2; i < values.length; ++i) {
                    buffer.append(values[i]);
                    buffer.append("\t");
                }
                // Records from data1 are tagged "1" so that they sort after the data2 record.
                TextPair outputKey = new TextPair(pairKey, "1");
                Text outputValue = new Text(buffer.toString());
                context.write(outputKey, outputValue);
            }

            if (path.contains("data2.txt")) {
                String[] values = value.toString().split("\t");
                if (values.length < 2) {
                    return;
                }
                String pairKey = values[0];
                StringBuilder buffer = new StringBuilder();
                for (int i = 1; i < values.length; ++i) {
                    buffer.append(values[i]);
                    buffer.append("\t");
                }
                // Records from data2 are tagged "0" so that they sort first within a join key.
                TextPair outputKey = new TextPair(pairKey, "0");
                Text outputValue = new Text(buffer.toString());
                context.write(outputKey, outputValue);
            }
        }
    }

    public static class mrJoinReduce extends Reducer<TextPair, Text, Text, Text> {

        @Override
        protected void reduce(TextPair key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            Text pairKey = key.getPairKey();
            // Obtain the iterator once. The first value in the group is expected
            // to be the data2 record, because tag "0" sorts before tag "1".
            Iterator<Text> iterator = values.iterator();
            String val = iterator.next().toString();
            while (iterator.hasNext()) {
                Text pairValue = new Text(iterator.next().toString() + "\t" + val);
                context.write(pairKey, pairValue);
            }
        }
    }

    public static class mrJoinPartitioner extends Partitioner<TextPair, Text> {

        @Override
        public int getPartition(TextPair key, Text value, int partition) {
            // Partition on the join key only. Masking the sign bit keeps the result
            // non-negative even where Math.abs would return Integer.MIN_VALUE.
            return ((key.getPairKey().hashCode() * 127) & Integer.MAX_VALUE) % partition;
        }
    }

    public static class mrJoinComparator extends WritableComparator {

        public mrJoinComparator() {
            super(TextPair.class, true);
        }

        // Group reducer input by the join key only, ignoring the tag.
        @Override
        @SuppressWarnings("rawtypes")
        public int compare(WritableComparable data1, WritableComparable data2) {
            TextPair d1 = (TextPair) data1;
            TextPair d2 = (TextPair) data2;
            return d1.getPairKey().compareTo(d2.getPairKey());
        }
    }
}
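To see how tagging, sorting and grouping combine into a join, the same flow can be simulated on the sample data in plain Java, without a cluster. This is only a sketch under stated assumptions: `ReduceSideJoinSketch`, `Tagged` and `join` are hypothetical names, the in-memory sort stands in for the shuffle, and each id is assumed to appear at most once in the second data set. Unlike the reducer above, the sketch checks the tag of the first record explicitly and joins fields without trailing tabs, so its output formatting is simplified.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** In-memory simulation of a reduce-side join (hypothetical helper, no Hadoop). */
final class ReduceSideJoinSketch {

    /** One tagged record as a mapper would emit it. */
    static final class Tagged {
        final String joinKey;
        final String tag;     // "0" = second data set (id, label), "1" = first data set
        final String payload;

        Tagged(String joinKey, String tag, String payload) {
            this.joinKey = joinKey;
            this.tag = tag;
            this.payload = payload;
        }
    }

    static List<String> join(List<String> details, List<String> lookups) {
        List<Tagged> records = new ArrayList<>();
        // "Map" phase for the first data set: timestamp, id, remaining fields.
        for (String line : details) {
            String[] f = line.split("\t");
            if (f.length < 3) continue; // skip malformed lines
            List<String> rest = new ArrayList<>();
            rest.add(f[0]);
            rest.addAll(Arrays.asList(f).subList(2, f.length));
            records.add(new Tagged(f[1], "1", String.join("\t", rest)));
        }
        // "Map" phase for the second data set: id, remaining fields.
        for (String line : lookups) {
            String[] f = line.split("\t");
            if (f.length < 2) continue; // skip malformed lines
            records.add(new Tagged(f[0], "0",
                    String.join("\t", Arrays.asList(f).subList(1, f.length))));
        }
        // "Shuffle": sort by join key, then by tag, so tag "0" leads each group.
        records.sort((a, b) -> {
            int c = a.joinKey.compareTo(b.joinKey);
            return c != 0 ? c : a.tag.compareTo(b.tag);
        });
        // "Reduce": walk each group of consecutive records sharing a join key.
        List<String> out = new ArrayList<>();
        int i = 0;
        while (i < records.size()) {
            Tagged first = records.get(i);
            int end = i;
            while (end < records.size() && records.get(end).joinKey.equals(first.joinKey)) {
                end++;
            }
            if (first.tag.equals("0")) { // only join when a lookup record exists
                for (int j = i + 1; j < end; j++) {
                    out.add(first.joinKey + "\t" + records.get(j).payload + "\t" + first.payload);
                }
            }
            i = end;
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> data1 = Arrays.asList(
                "2019-05-13 13:00\t001\tIggi\tbeijing\t010",
                "2019-05-13 14:00\t002\tchap\tbeijing\t010",
                "2019-05-13 15:00\t003\ttxingsg\tbeijing\t010",
                "2019-05-13 16:00\t004\tthedd\tbeijing\t010",
                "2019-05-13 17:00\t005\ttlix\tbeijing\t010");
        List<String> data2 = Arrays.asList(
                "002\tjava", "003\tjava", "004\tproduct", "005\tmanager");
        for (String line : join(data1, data2)) {
            System.out.println(line);
        }
    }
}
```

With the sample data, id 001 has no counterpart in the second data set and is therefore absent from the result, so the join behaves as an inner join.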

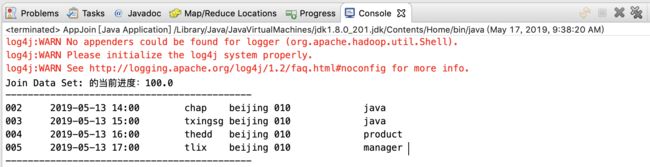

The screenshot above shows the test result.

That concludes the MapReduce2-3.1.1 Join experiment example.