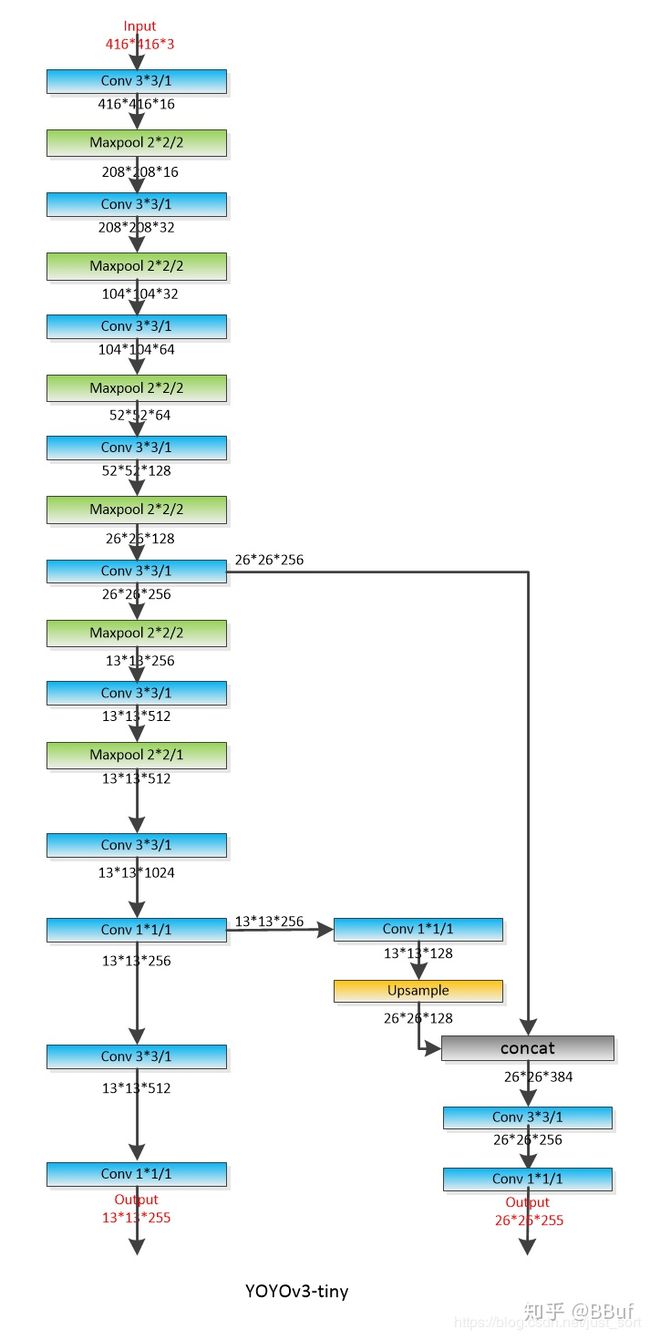

A hand-written implementation of the YOLOv3-tiny model structure

    import torch
    import torch.nn as nn
    from torchsummary import summary


    class convBatchReluBlock(nn.Module):
        """Conv2d -> BatchNorm2d -> LeakyReLU(0.1)."""

        def __init__(self, in_c, out_c, k, s, p):
            super().__init__()
            self.cblr = nn.Sequential(
                nn.Conv2d(in_c, out_c, kernel_size=k, stride=s, padding=p),
                nn.BatchNorm2d(out_c, momentum=0.03, eps=1E-4),
                nn.LeakyReLU(0.1, inplace=True)
            )

        def forward(self, x):
            return self.cblr(x)


    class yolo3tiny(nn.Module):

        def __init__(self):
            super().__init__()
            # Backbone: 3x3 convs, each followed by a 2x2 / stride-2 max pool
            self.con1 = convBatchReluBlock(3, 16, 3, 1, 1)
            self.max1 = nn.MaxPool2d(2, 2, 0)
            self.con2 = convBatchReluBlock(16, 32, 3, 1, 1)
            self.max2 = nn.MaxPool2d(2, 2, 0)
            self.con3 = convBatchReluBlock(32, 64, 3, 1, 1)
            self.max3 = nn.MaxPool2d(2, 2, 0)
            self.con4 = convBatchReluBlock(64, 128, 3, 1, 1)
            self.max4 = nn.MaxPool2d(2, 2, 0)
            self.con5 = convBatchReluBlock(128, 256, 3, 1, 1)  # the two branches split off here

            # Branch 1: keep extracting deeper semantic features and
            # detect relatively large objects on them
            self.maxA0 = nn.MaxPool2d(2, 2, 0)
            self.conA1 = convBatchReluBlock(256, 512, 3, 1, 1)
            # Pad one column on the right and one row at the bottom so that
            # the following 2x2 / stride-1 pool keeps the 13x13 size
            self.zeropad = nn.ZeroPad2d((0, 1, 0, 1))
            self.maxA1 = nn.MaxPool2d(2, 1, 0)
            self.conA2 = convBatchReluBlock(512, 1024, 3, 1, 1)
            self.conA3 = convBatchReluBlock(1024, 256, 1, 1, 0)
            self.conA4 = convBatchReluBlock(256, 512, 3, 1, 1)
            # Note: the reference Darknet config uses a plain 1x1 conv here
            # (no BatchNorm, linear activation); this version reuses the
            # conv-BN-LeakyReLU block for both output layers.
            self.yolo1 = convBatchReluBlock(512, 255, 1, 1, 0)

            # Branch 2: upsample the self.conA3 features 2x (nn.Upsample,
            # nearest-neighbor interpolation, not a transposed convolution),
            # fuse them with the self.con5 features, and detect relatively
            # small objects at this scale
            self.conB1 = convBatchReluBlock(256, 128, 1, 1, 0)
            self.upsample = nn.Upsample(scale_factor=2)
            # After concatenation: 256 + 128 = 384 channels
            self.conB2 = convBatchReluBlock(384, 256, 3, 1, 1)
            self.yolo2 = convBatchReluBlock(256, 255, 1, 1, 0)

        def forward(self, x):
            x = self.con1(x)
            x = self.max1(x)
            x = self.max2(self.con2(x))
            x = self.max3(self.con3(x))
            x = self.max4(self.con4(x))
            con5 = self.con5(x)

            # Branch 1
            y1 = self.maxA0(con5)
            y1 = self.maxA1(self.zeropad(self.conA1(y1)))
            con_A3 = self.conA3(self.conA2(y1))
            y1 = self.conA4(con_A3)
            y1 = self.yolo1(y1)

            # Branch 2
            y2 = self.conB1(con_A3)
            y2 = self.upsample(y2)
            y2 = torch.cat((y2, con5), 1)  # concatenate along the channel axis
            y2 = self.conB2(y2)
            y2 = self.yolo2(y2)
            return (y1, y2)


    if __name__ == '__main__':
        x = torch.randn(1, 3, 416, 416)
        model = yolo3tiny()
        y1, y2 = model(x)
        print(y1.shape, y2.shape)
        summary(model, (3, 416, 416), device="cpu")

Shapes of y1 and y2:

    torch.Size([1, 255, 13, 13]) torch.Size([1, 255, 26, 26])
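The 13x13 and 26x26 grids follow from the layer settings alone, so they can be checked without running the model. The sketch below is a minimal illustration in plain Python; the helper names `out_size`, `trace_grid_sizes`, and `head_channels` are hypothetical and not part of the original code. The reading of 255 as 3 anchors x (4 box values + 1 objectness + 80 classes) is an assumption based on the usual COCO configuration, since the post itself only hard-codes 255.

```python
def out_size(size, kernel, stride, padding=0):
    """Output width/height of a conv or pooling layer (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_grid_sizes(input_size=416):
    """Follow the spatial size through both branches of the model above."""
    s = input_size
    for _ in range(4):              # con1..con4, each followed by a 2x2 / stride-2 pool
        s = out_size(s, 3, 1, 1)    # 3x3 conv, stride 1, padding 1: size unchanged
        s = out_size(s, 2, 2)       # max pool halves the size
    con5 = out_size(s, 3, 1, 1)     # con5 keeps the size
    s = out_size(con5, 2, 2)        # maxA0
    s = out_size(s, 3, 1, 1)        # conA1
    s = out_size(s + 1, 2, 1)       # zeropad adds one row/column, then maxA1 (stride 1)
    y1 = s                          # conA2, conA3, conA4, yolo1 all preserve the size
    y2 = 2 * y1                     # nn.Upsample(scale_factor=2)
    assert y2 == con5               # needed for torch.cat along the channel axis
    return y1, y2


def head_channels(num_anchors=3, num_classes=80):
    """Assumed layout: per anchor, 4 box values + 1 objectness + class scores."""
    return num_anchors * (5 + num_classes)


print(trace_grid_sizes(416))  # grid sizes of y1 and y2
print(head_channels())        # channels of each output layer
```

The same helper shows why the `ZeroPad2d((0, 1, 0, 1))` is there: without the extra row and column, a 2x2 pool with stride 1 would shrink 13 to 12 and the upsampled branch would no longer line up with `con5`.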

    ----------------------------------------------------------------
    Layer (type)             Output Shape                    Param #
    ================================================================
    Conv2d-1                 [-1, 16, 416, 416]                  448
    BatchNorm2d-2            [-1, 16, 416, 416]                   32
    LeakyReLU-3              [-1, 16, 416, 416]                    0
    convBatchReluBlock-4     [-1, 16, 416, 416]                    0
    MaxPool2d-5              [-1, 16, 208, 208]                    0
    Conv2d-6                 [-1, 32, 208, 208]                4,640
    BatchNorm2d-7            [-1, 32, 208, 208]                   64
    LeakyReLU-8              [-1, 32, 208, 208]                    0
    convBatchReluBlock-9     [-1, 32, 208, 208]                    0
    MaxPool2d-10             [-1, 32, 104, 104]                    0
    Conv2d-11                [-1, 64, 104, 104]               18,496
    BatchNorm2d-12           [-1, 64, 104, 104]                  128
    LeakyReLU-13             [-1, 64, 104, 104]                    0
    convBatchReluBlock-14    [-1, 64, 104, 104]                    0
    MaxPool2d-15             [-1, 64, 52, 52]                      0
    Conv2d-16                [-1, 128, 52, 52]                73,856
    BatchNorm2d-17           [-1, 128, 52, 52]                   256
    LeakyReLU-18             [-1, 128, 52, 52]                     0
    convBatchReluBlock-19    [-1, 128, 52, 52]                     0
    MaxPool2d-20             [-1, 128, 26, 26]                     0
    Conv2d-21                [-1, 256, 26, 26]               295,168
    BatchNorm2d-22           [-1, 256, 26, 26]                   512
    LeakyReLU-23             [-1, 256, 26, 26]                     0
    convBatchReluBlock-24    [-1, 256, 26, 26]                     0
    MaxPool2d-25             [-1, 256, 13, 13]                     0
    Conv2d-26                [-1, 512, 13, 13]             1,180,160
    BatchNorm2d-27           [-1, 512, 13, 13]                 1,024
    LeakyReLU-28             [-1, 512, 13, 13]                     0
    convBatchReluBlock-29    [-1, 512, 13, 13]                     0
    ZeroPad2d-30             [-1, 512, 14, 14]                     0
    MaxPool2d-31             [-1, 512, 13, 13]                     0
    Conv2d-32                [-1, 1024, 13, 13]            4,719,616
    BatchNorm2d-33           [-1, 1024, 13, 13]                2,048
    LeakyReLU-34             [-1, 1024, 13, 13]                    0
    convBatchReluBlock-35    [-1, 1024, 13, 13]                    0
    Conv2d-36                [-1, 256, 13, 13]               262,400
    BatchNorm2d-37           [-1, 256, 13, 13]                   512
    LeakyReLU-38             [-1, 256, 13, 13]                     0
    convBatchReluBlock-39    [-1, 256, 13, 13]                     0
    Conv2d-40                [-1, 512, 13, 13]             1,180,160
    BatchNorm2d-41           [-1, 512, 13, 13]                 1,024
    LeakyReLU-42             [-1, 512, 13, 13]                     0
    convBatchReluBlock-43    [-1, 512, 13, 13]                     0
    Conv2d-44                [-1, 255, 13, 13]               130,815
    BatchNorm2d-45           [-1, 255, 13, 13]                   510
    LeakyReLU-46             [-1, 255, 13, 13]                     0
    convBatchReluBlock-47    [-1, 255, 13, 13]                     0
    Conv2d-48                [-1, 128, 13, 13]                32,896
    BatchNorm2d-49           [-1, 128, 13, 13]                   256
    LeakyReLU-50             [-1, 128, 13, 13]                     0
    convBatchReluBlock-51    [-1, 128, 13, 13]                     0
    Upsample-52              [-1, 128, 26, 26]                     0
    Conv2d-53                [-1, 256, 26, 26]               884,992
    BatchNorm2d-54           [-1, 256, 26, 26]                   512
    LeakyReLU-55             [-1, 256, 26, 26]                     0
    convBatchReluBlock-56    [-1, 256, 26, 26]                     0
    Conv2d-57                [-1, 255, 26, 26]                65,535
    BatchNorm2d-58           [-1, 255, 26, 26]                   510
    LeakyReLU-59             [-1, 255, 26, 26]                     0
    convBatchReluBlock-60    [-1, 255, 26, 26]                     0
    ================================================================
    Total params: 8,856,570
    Trainable params: 8,856,570
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 1.98
    Forward/backward pass size (MB): 200.44
    Params size (MB): 33.79
    Estimated Total Size (MB): 236.20
    ----------------------------------------------------------------
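The parameter counts in the summary can be reproduced by hand, which is a useful sanity check on the channel wiring. The following is a minimal sketch in plain Python; `conv_params`, `bn_params`, and `BLOCKS` are illustrative names introduced here. It assumes what the code above implies: every `nn.Conv2d` keeps its default bias, and each `nn.BatchNorm2d` contributes a learnable scale and shift per channel (its running statistics are buffers and are not counted as parameters).

```python
def conv_params(c_in, c_out, k):
    """Weights of a k x k convolution plus one bias per output channel."""
    return k * k * c_in * c_out + c_out


def bn_params(channels):
    """Learnable scale (gamma) and shift (beta) per channel."""
    return 2 * channels


# (in_channels, out_channels, kernel_size) of every convBatchReluBlock,
# in the order they appear in the summary
BLOCKS = [
    (3, 16, 3), (16, 32, 3), (32, 64, 3), (64, 128, 3), (128, 256, 3),  # con1..con5
    (256, 512, 3), (512, 1024, 3), (1024, 256, 1), (256, 512, 3),       # conA1..conA4
    (512, 255, 1),                                                      # yolo1
    (256, 128, 1), (384, 256, 3),                                       # conB1, conB2
    (256, 255, 1),                                                      # yolo2
]

total = sum(conv_params(i, o, k) + bn_params(o) for i, o, k in BLOCKS)
params_mb = round(total * 4 / 1024 ** 2, 2)  # float32: 4 bytes per parameter

print(total)      # 8856570, matching "Total params"
print(params_mb)  # 33.79, matching "Params size (MB)"
```

For example, `conB2` sees 384 input channels because of the concatenation, so its convolution alone has 3 * 3 * 384 * 256 + 256 = 884,992 parameters, the value listed for `Conv2d-53`.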