Solutions to the Chapter 7 exercises of Li Hang's Statistical Learning Methods (2nd edition)

1. Compare the dual form of the perceptron with the dual form of the linearly separable support vector machine.

1.1 Dual form of the perceptron

The dual form of the perceptron as given in Li Hang's book has a small problem, so I first restate it in a corrected form.

The learned perceptron parameters are:

$$w = \sum_{i=1}^{N}\alpha_i y_i x_i \tag{1}$$

$$b = \sum_{i=1}^{N}\alpha_i y_i \tag{2}$$

where $\alpha_i = n_i\eta$, $n_i$ is the number of times the sample $(x_i, y_i)$ has triggered an update, and $N$ is the number of samples.

Substituting (1) and (2) into the perceptron model gives the following algorithm:

- Input: a linearly separable data set $T=\{(x_1,y_1),(x_2,y_2),\dots,(x_N,y_N)\}$ and a learning rate $\eta$

- Output: the perceptron model $f(x) = \mathrm{sign}\left(\sum_{j=1}^{N} n_j\eta y_j (x_j\bullet x) + \sum_{j=1}^{N} n_j\eta y_j\right)$

- Initialize $n = (0, 0, \dots, 0)$, where the $i$-th entry is the initial value of $n_i$

- Select a data point $(x_i, y_i)$ from the training set $T$

- If the point is misclassified or lies on the hyperplane, i.e. $y_i\left(\sum_{j=1}^{N} n_j\eta y_j (x_j\bullet x_i) + \sum_{j=1}^{N} n_j\eta y_j\right) \le 0$, set $n_i \leftarrow n_i + 1$

- Return to the data-selection step and repeat until there are no misclassified points.
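The algorithm above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not code from the original post: the function name `dual_perceptron`, the default `eta = 1.0` and the `max_epochs` cap are choices made for this sketch. The Gram matrix is precomputed so that every decision value only needs the inner products $x_j\bullet x_i$; the usage lines run it on the data of Exercise 2.

```python
import numpy as np


def dual_perceptron(X, y, eta=1.0, max_epochs=1000):
    """Dual-form perceptron: learns the update counts n_i instead of w directly."""
    N = X.shape[0]
    n = np.zeros(N)        # n_i: number of updates triggered by sample i
    gram = X @ X.T         # gram[j, i] = x_j . x_i
    for _ in range(max_epochs):
        updated = False
        for i in range(N):
            # sum_j n_j*eta*y_j*(x_j . x_i) + sum_j n_j*eta*y_j
            score = np.sum(n * eta * y * gram[:, i]) + np.sum(n * eta * y)
            if y[i] * score <= 0:   # misclassified or on the hyperplane
                n[i] += 1
                updated = True
        if not updated:             # a full pass without updates: stop
            break
    alpha = n * eta
    w = (alpha * y) @ X             # equation (1)
    b = np.sum(alpha * y)           # equation (2)
    return n, w, b


X = np.array([[1, 2], [2, 3], [3, 3], [2, 1], [3, 2]], dtype=float)
y = np.array([1, 1, 1, -1, -1], dtype=float)
n, w, b = dual_perceptron(X, y)
print('n =', n, 'w =', w, 'b =', b)
```

Because the data are linearly separable, the loop stops after finitely many updates; visiting the points in a different order generally yields a different separating hyperplane.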

1.2 Dual form of the linearly separable support vector machine

- Input: the training data set

- Output: the separating hyperplane and the classification decision function

- Construct and solve the constrained optimization problem

$$\min_{\alpha}\ \frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N}\alpha_i\alpha_j y_i y_j (x_i\bullet x_j) - \sum_{i=1}^{N}\alpha_i$$

$$\text{s.t.}\ \sum_{i=1}^{N}\alpha_i y_i = 0,\quad \alpha_i \ge 0,\quad i=1,2,\dots,N$$

to obtain the optimal solution $\alpha^* = (\alpha_1^*, \dots, \alpha_N^*)$.

- Compute $w^* = \sum_{i=1}^{N}\alpha_i^* y_i x_i$, choose a positive component $\alpha_j^* > 0$, and compute $b^* = y_j - \sum_{i=1}^{N}\alpha_i^* y_i (x_i\bullet x_j)$

- This gives the separating hyperplane $w^*\bullet x + b^* = 0$ and the classification decision function $f(x) = \mathrm{sign}(w^*\bullet x + b^*)$.
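To make these steps concrete, here is a small sketch that solves the dual problem numerically with SciPy's general-purpose SLSQP solver and then recovers $w$ and $b$ exactly as in the last two steps. The solver choice, the tolerance settings and the helper name `svm_dual` are assumptions made for this illustration; the data are those of Exercise 2.

```python
import numpy as np
from scipy.optimize import minimize


def svm_dual(X, y):
    """Solve the hard-margin SVM dual with SLSQP and recover (w, b)."""
    N = X.shape[0]
    Q = (y[:, None] * y[None, :]) * (X @ X.T)   # Q_ij = y_i y_j (x_i . x_j)

    def objective(a):                           # 1/2 a^T Q a - sum_i a_i
        return 0.5 * a @ Q @ a - a.sum()

    def gradient(a):
        return Q @ a - np.ones(N)

    res = minimize(objective, np.zeros(N), jac=gradient, method='SLSQP',
                   bounds=[(0, None)] * N,      # alpha_i >= 0
                   constraints={'type': 'eq',   # sum_i alpha_i y_i = 0
                                'fun': lambda a: a @ y,
                                'jac': lambda a: y},
                   options={'ftol': 1e-10, 'maxiter': 1000})
    alpha = res.x
    w = (alpha * y) @ X                          # w = sum_i alpha_i y_i x_i
    j = int(np.argmax(alpha))                    # index of a positive component
    b = y[j] - np.sum(alpha * y * (X @ X[j]))    # b = y_j - sum_i alpha_i y_i (x_i . x_j)
    return alpha, w, b


X = np.array([[1, 2], [2, 3], [3, 3], [2, 1], [3, 2]], dtype=float)
y = np.array([1, 1, 1, -1, -1], dtype=float)
alpha, w, b = svm_dual(X, y)
print('alpha =', np.round(alpha, 3), 'w =', np.round(w, 3), 'b =', round(b, 3))
```

Picking the largest $\alpha_j$ is just a numerically safe way to choose a component that is clearly positive; any $\alpha_j^* > 0$ gives the same $b^*$ in exact arithmetic.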

1.3 Comparison

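Both dual forms express the weight vector as $w = \sum_{i=1}^{N}\alpha_i y_i x_i$ and touch the training data only through the inner products $x_i\bullet x_j$, so both can work with a Gram matrix. They differ in how $\alpha$ is obtained. Perceptron learning is based on stochastic gradient descent: it picks one data point at a time, updates the parameters only when that point is misclassified, and iterates; $\alpha_i = n_i\eta$ simply records how often point $i$ caused an update, and the resulting hyperplane depends on the initial values and on the order in which points are visited. SVM learning instead solves a constrained optimization problem through its dual; when the data set is small, the model parameters can be computed exactly in one go, and the maximum-margin hyperplane obtained is unique, with $\alpha_i > 0$ only for support vectors.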

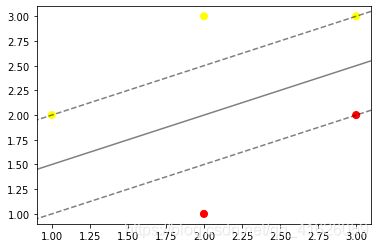

2. Given the positive points $x_1=(1,2)$, $x_2=(2,3)$, $x_3=(3,3)$ and the negative points $x_4=(2,1)$, $x_5=(3,2)$, find the maximum-margin separating hyperplane and the classification decision function, and plot the separating hyperplane, the margin boundaries and the support vectors.

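```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.svm import SVC


def plot_svc_decision_function(X, y, model, ax=None, plot_support=True):
    """Plot the samples, the separating hyperplane, the margin boundaries and the support vectors."""
    if ax is None:
        ax = plt.gca()
    ax.scatter(X[:, 0], X[:, 1], c=y, s=50, cmap='autumn')  # plot the samples
    xlim = ax.get_xlim()
    ylim = ax.get_ylim()

    # evaluate the decision function on a 30 x 30 grid
    gx = np.linspace(xlim[0], xlim[1], 30)
    gy = np.linspace(ylim[0], ylim[1], 30)
    GY, GX = np.meshgrid(gy, gx)
    xy = np.vstack([GX.ravel(), GY.ravel()]).T
    P = model.decision_function(xy).reshape(GX.shape)

    # level 0 is the separating hyperplane, levels -1 and +1 are the margin boundaries
    ax.contour(GX, GY, P, colors='k', levels=[-1, 0, 1], alpha=0.5,
               linestyles=['--', '-', '--'])

    if plot_support:
        # circle the support vectors
        sv = model.support_vectors_
        ax.scatter(sv[:, 0], sv[:, 1], s=200, linewidth=1,
                   facecolors='none', edgecolors='k')
    ax.set_xlim(xlim)
    ax.set_ylim(ylim)


if __name__ == '__main__':
    X = np.array([[1, 2],
                  [2, 3],
                  [3, 3],
                  [2, 1],
                  [3, 2]])
    y = np.array([1, 1, 1, 0, 0])
    model = SVC(kernel='linear', C=1E10)  # a very large C approximates the hard-margin SVM
    model.fit(X, y)
    print('w =', model.coef_, 'b =', model.intercept_)
    plot_svc_decision_function(X, y, model)
    plt.show()
```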
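As a hand check of what the plot should show (this verification is added here and is not part of the original answer), the KKT conditions confirm the following solution, writing $x^{(1)}, x^{(2)}$ for the two coordinates of $x$:

$$
\begin{aligned}
&w^* = (-1,\ 2)^T,\quad b^* = -2\\
&y_i(w^*\bullet x_i + b^*) = 1,\ 2,\ 1,\ 2,\ 1 \quad (i = 1,\dots,5)\\
&\alpha^* = \left(\tfrac{1}{2},\ 0,\ 2,\ 0,\ \tfrac{5}{2}\right),\quad \sum_{i=1}^{5}\alpha_i^* y_i = \tfrac{1}{2} + 2 - \tfrac{5}{2} = 0\\
&\sum_{i=1}^{5}\alpha_i^* y_i x_i = \tfrac{1}{2}(1,2) + 2(3,3) - \tfrac{5}{2}(3,2) = (-1,\ 2) = w^*
\end{aligned}
$$

All multipliers are nonnegative and vanish for $x_2$ and $x_4$, whose functional margin exceeds 1, so the KKT conditions hold and this is the maximum-margin solution. The separating hyperplane is $-x^{(1)} + 2x^{(2)} - 2 = 0$, the margin boundaries are $-x^{(1)} + 2x^{(2)} - 2 = \pm 1$, the decision function is $f(x) = \mathrm{sign}(-x^{(1)} + 2x^{(2)} - 2)$, and the support vectors are $x_1$, $x_3$ and $x_5$.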

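Unfortunately, my own implementation of the linearly separable SVM for this problem did not work. The algorithm requires solving a constrained optimization problem; I used the penalty-function method and built a PyTorch model for it, but it failed to find the solution. The code is below; any pointers from more experienced readers would be greatly appreciated!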

import torch

import numpy as np

import torch.nn as nn

import torch.optim as optim

import matplotlib.pyplot as plt

import os

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

class max_m(nn.Module):

def __init__(self, M = 10**5, dim = 2, data_nums = 5):

super(max_m, self).__init__()

self.w = nn.Parameter(torch.randn(dim, 1))

self.bias = nn.Parameter(torch.randn(1))

self.M = M

self.data_nums = data_nums

def forward(self,X, Y):#这个是不需要Gram矩阵的

X = torch.from_numpy(X).float()

Y = torch.from_numpy(Y).float().T

out = torch.mm(X, self.w) + self.bias

y_o = Y * out - 1

y_o_low_0 = torch.where(y_o < 0, y_o, torch.FloatTensor([[0] * self.data_nums]).T)

#下面开始构造损失函数

# print(y_o)

loss = 0.5*torch.norm(self.w, p = 2) ** 2 - self.M * torch.sum(y_o_low_0)

return loss

def learn(X, Y,M = 10 ** 5, epoch = 1000, lr = 0.01):

svm_loss = max_m(M = M)

optim_adam = optim.Adam(svm_loss.parameters(), lr = lr)

svm_loss.train()

for e in range(epoch):

loss = svm_loss(X, Y)

optim_adam.zero_grad()

loss.backward()

optim_adam.step()

if e % 100 == 0:

d = svm_loss.state_dict()

print('w is :', d['w'])

print('b is :', d['bias'])

# print('loss is :', loss)

d = svm_loss.state_dict()

w = d['w']

bias = d['bias']

w = w.detach().numpy()

bias = bias.detach().numpy()

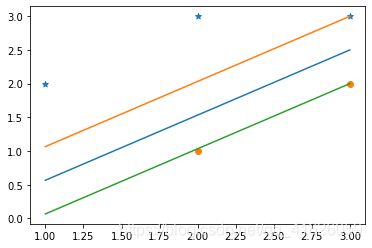

plt.scatter(X[:3, 0], X[:3, 1], marker = '*')

plt.scatter(X[3:, 0], X[3:, 1], marker = 'o')

x_new = [1, 3]

y_new = [-(w[0]/w[1])*x_new[0] - (bias/w[1]), -(w[0]/w[1])*x_new[1] - (bias/w[1])]

y_new1 = [-(w[0]/w[1])*x_new[0] + (1 - bias)/w[1], -(w[0]/w[1])*x_new[1] + (1 - bias)/w[1]]

y_new2 = [-(w[0]/w[1])*x_new[0] - (1 + bias)/w[1], -(w[0]/w[1])*x_new[1] - (1 + bias)/w[1]]

plt.plot(x_new, y_new)

plt.plot(x_new, y_new1)

plt.plot(x_new, y_new2)

print('y_new is :', y_new)

print('y_new1 is :', y_new1)

print('y_new2 is :', y_new2)

print(w)

print(bias)

if __name__ == "__main__":

dim = 2

M = 10 ** 8

lr = 0.0005

epoch = 10000

X = np.array([[1, 2],

[2, 3],

[3, 3],

[2, 1],

[3, 2]])

Y = np.array([[1, 1, 1, -1, -1]])

learn(X, Y, M = M, epoch = epoch, lr = lr)
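One plausible reason for the failure (a guess, not verified here) is that the penalty $M\sum_i \max(0, 1 - y_i(w\bullet x_i + b))$ with $M = 10^8$ is both nonsmooth and extremely badly scaled, which makes it hard for a first-order optimizer such as Adam to settle on the exact optimum. The sketch below keeps the same penalty-function idea but, as choices made for this sketch rather than the author's method, swaps in a smooth squared penalty, increases $M$ step by step with warm starts, and uses SciPy's L-BFGS-B instead of Adam. The function names `penalized_objective` and `penalty_svm` are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def penalized_objective(theta, X, y, M):
    """Quadratic-penalty objective for the hard-margin SVM and its gradient.

    f(w, b) = 0.5*||w||^2 + (M/2) * sum_i max(0, 1 - y_i (w . x_i + b))^2
    """
    w, b = theta[:-1], theta[-1]
    viol = np.maximum(0.0, 1.0 - y * (X @ w + b))   # violation of each constraint
    f = 0.5 * w @ w + 0.5 * M * viol @ viol
    coef = -M * viol * y                             # df / d(w . x_i + b)
    grad = np.append(w + X.T @ coef, coef.sum())     # [df/dw, df/db]
    return f, grad


def penalty_svm(X, y, Ms=(1e1, 1e2, 1e3, 1e4, 1e5)):
    """Solve a sequence of penalized problems with growing M, warm-starting each one."""
    theta = np.zeros(X.shape[1] + 1)
    for M in Ms:
        res = minimize(penalized_objective, theta, args=(X, y, M),
                       jac=True, method='L-BFGS-B')
        theta = res.x
    return theta[:-1], theta[-1]


X = np.array([[1, 2], [2, 3], [3, 3], [2, 1], [3, 2]], dtype=float)
y = np.array([1, 1, 1, -1, -1], dtype=float)
w, b = penalty_svm(X, y)
print('w =', w, 'b =', b)
```

With a quadratic penalty the constraint violation at the penalized optimum shrinks roughly like $1/M$, so the last stage should land close to the hard-margin solution; warm-starting keeps each larger-$M$ problem from starting far from its optimum.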

3. The linear support vector machine can also be defined in the following form:

$$
\begin{aligned}
\min_{w,b,\xi}\ & \frac{1}{2}\|w\|^2 + C\sum_{i=1}^{N}\xi_i^2\\
\text{s.t.}\ & y_i(w\bullet x_i + b) \ge 1 - \xi_i,\quad i=1,2,\dots,N\\
& \xi_i \ge 0,\quad i=1,2,\dots,N
\end{aligned}
$$

Derive its dual form.

Solution:

First introduce the Lagrangian, with multipliers $\alpha_i \ge 0$, $\beta_i \ge 0$:

$$
L(w,b,\xi,\alpha,\beta) = \frac{1}{2}\|w\|^2 + C\sum_{i=1}^{N}\xi_i^2 - \sum_{i=1}^{N}\alpha_i\left(y_i(w\bullet x_i + b) - 1 + \xi_i\right) - \sum_{i=1}^{N}\beta_i\xi_i
$$

The primal problem is then

$$
\min_{w,b,\xi}\ \max_{\alpha,\beta} L(w,b,\xi,\alpha,\beta)
$$

The primal problem maximizes over $\alpha,\beta$ first, for the following reason. Suppose some data point $(x_i,y_i)$ violates the constraint $y_i(w\bullet x_i + b) \ge 1-\xi_i$, i.e. $y_i(w\bullet x_i + b) < 1-\xi_i$. Since we maximize first, we can let the corresponding $\alpha_i \to +\infty$ while setting all other $\alpha,\beta$ to 0, and the objective goes to $+\infty$. The same happens if some $\xi_i < 0$, by letting $\beta_i \to +\infty$. In that case

$$
\max_{\alpha,\beta} L(w,b,\xi,\alpha,\beta) = +\infty
$$

If instead all constraints are satisfied, every term $-\alpha_i\left(y_i(w\bullet x_i + b) - 1 + \xi_i\right)$ and $-\beta_i\xi_i$ is nonpositive, so the maximum is reached by setting all $\alpha,\beta$ to 0, and

$$
\max_{\alpha,\beta} L(w,b,\xi,\alpha,\beta) = \frac{1}{2}\|w\|^2 + C\sum_{i=1}^{N}\xi_i^2
$$

Hence

$$
\max_{\alpha,\beta} L(w,b,\xi,\alpha,\beta) =
\begin{cases}
\frac{1}{2}\|w\|^2 + C\sum_{i=1}^{N}\xi_i^2, & \text{all constraints satisfied}\\
+\infty, & \text{otherwise}
\end{cases}
$$

Minimizing $\max_{\alpha,\beta} L$ over $w,b,\xi$ therefore automatically restricts the search to points that satisfy all constraints, which gives

$$
\min_{w,b,\xi}\ \max_{\alpha,\beta} L(w,b,\xi,\alpha,\beta) = \min_{w,b,\xi\ \text{feasible}}\ \frac{1}{2}\|w\|^2 + C\sum_{i=1}^{N}\xi_i^2
$$

which is exactly the original problem.

The dual problem is obtained by swapping the order of maximization and minimization:

$$
\max_{\alpha,\beta}\ \min_{w,b,\xi} L(w,b,\xi,\alpha,\beta)
$$

That is, first take the derivatives with respect to $w, b, \xi$ and set them to zero, express $w$ and $\xi$ in terms of $\alpha, \beta$, and substitute back into $L$ to obtain the dual problem. Apart from being a bit tedious, there is nothing difficult here.
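Carrying these steps out explicitly (this completion is added here, not part of the original answer), keeping the bias $b$ in the constraint $y_i(w\bullet x_i + b) \ge 1 - \xi_i$ as the $\min_{w,b,\xi}$ in the problem statement requires, the stationarity conditions are

$$
\begin{aligned}
\frac{\partial L}{\partial w} = 0 &\;\Rightarrow\; w = \sum_{i=1}^{N}\alpha_i y_i x_i\\
\frac{\partial L}{\partial b} = 0 &\;\Rightarrow\; \sum_{i=1}^{N}\alpha_i y_i = 0\\
\frac{\partial L}{\partial \xi_i} = 0 &\;\Rightarrow\; \xi_i = \frac{\alpha_i + \beta_i}{2C}
\end{aligned}
$$

Substituting back, $\frac{1}{2}\|w\|^2 - \sum_i\alpha_i y_i (w\bullet x_i) = -\frac{1}{2}\|w\|^2$ and $C\sum_i\xi_i^2 - \sum_i(\alpha_i+\beta_i)\xi_i = -\frac{1}{4C}\sum_i(\alpha_i+\beta_i)^2$, so

$$
\min_{w,b,\xi} L = -\frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N}\alpha_i\alpha_j y_i y_j (x_i\bullet x_j) + \sum_{i=1}^{N}\alpha_i - \frac{1}{4C}\sum_{i=1}^{N}(\alpha_i+\beta_i)^2
$$

Since $\alpha_i, \beta_i \ge 0$, the maximum over $\beta$ is attained at $\beta = 0$, and the dual problem is

$$
\begin{aligned}
\max_{\alpha}\ & -\frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N}\alpha_i\alpha_j y_i y_j (x_i\bullet x_j) + \sum_{i=1}^{N}\alpha_i - \frac{1}{4C}\sum_{i=1}^{N}\alpha_i^2\\
\text{s.t.}\ & \sum_{i=1}^{N}\alpha_i y_i = 0,\quad \alpha_i \ge 0,\quad i=1,2,\dots,N
\end{aligned}
$$

Because $y_i^2 = 1$, this is the hard-margin dual with $x_i\bullet x_j$ replaced by $x_i\bullet x_j + \frac{\delta_{ij}}{2C}$ (where $\delta_{ij}$ is the Kronecker delta), and, unlike the usual soft-margin dual, there is no upper bound $\alpha_i \le C$.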

4. Prove that the positive integer power of the inner product, $K(x,z) = (x\bullet z)^p$, is a positive definite kernel function, where $p$ is a positive integer and $x, z \in \mathbf{R}^n$.

Proof:

We construct a feature vector of length $n^p$ whose components are all ordered products of $p$ coordinates of $x$, listed in one fixed order:

$$
f(x) = \left(x_{i_1}x_{i_2}\cdots x_{i_p}\right)_{(i_1,i_2,\dots,i_p)\in\{1,\dots,n\}^p},\quad \text{e.g. } f(x_1,\dots,x_n) = (x_1^p,\dots,x_n^p,\ x_1^{p-1}x_2,\ x_1^{p-1}x_3,\dots,x_1^{p-1}x_n,\dots)
$$

This is an ordered vector of functions; an example shows how to construct it.

Let $x = (x_1, x_2)$ and $p = 2$; then $f(x_1,x_2) = (x_1^2, x_2^2, x_1x_2, x_2x_1)$.

Likewise, for $z = (z_1, z_2)$ we have $f(z_1,z_2) = (z_1^2, z_2^2, z_1z_2, z_2z_1)$.

Therefore $K(x,z) = (x_1z_1 + x_2z_2)^2 = x_1^2z_1^2 + 2x_1x_2z_1z_2 + x_2^2z_2^2 = f(x_1,x_2)\bullet f(z_1,z_2)$,

where $\bullet$ denotes the inner product.

The general case is constructed the same way: expanding the $p$-th power gives

$$
K(x,z) = \left(\sum_{i=1}^{n}x_i z_i\right)^p = \sum_{i_1=1}^{n}\cdots\sum_{i_p=1}^{n}(x_{i_1}\cdots x_{i_p})(z_{i_1}\cdots z_{i_p}) = f(x)\bullet f(z)
$$

so $K$ is the inner product of the feature map $f:\mathbf{R}^n\to\mathbf{R}^{n^p}$. Its Gram matrix on any points $x^{(1)},\dots,x^{(m)}$ is $FF^T$, where the rows of $F$ are $f(x^{(k)})$, and is therefore positive semidefinite, so $K$ is a positive definite kernel. Note the difference between the inner product and ordinary multiplication.

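Working from the other characterization of a kernel instead, i.e. proving directly that every Gram matrix is positive semidefinite, would be much harder to handle.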
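The construction can be checked numerically with a short sketch (an illustration added here, not part of the proof): `feature_map` builds the $n^p$ ordered products with `itertools.product`, which lists them in lexicographic index order rather than the order shown above, compares $f(x)\bullet f(z)$ with $(x\bullet z)^p$, and confirms that a Gram matrix of the kernel on random points has no negative eigenvalues beyond rounding error. The helper names and the random test points are assumptions of this sketch.

```python
import itertools

import numpy as np


def feature_map(x, p):
    """All ordered products x[i1]*x[i2]*...*x[ip]: a vector of length n**p."""
    n = len(x)
    return np.array([np.prod(x[list(idx)])
                     for idx in itertools.product(range(n), repeat=p)])


def poly_kernel(x, z, p):
    """K(x, z) = (x . z)^p."""
    return np.dot(x, z) ** p


rng = np.random.default_rng(0)
x, z = rng.normal(size=3), rng.normal(size=3)
p = 3
print(np.isclose(feature_map(x, p) @ feature_map(z, p), poly_kernel(x, z, p)))

# Gram matrix on random points: eigenvalues should be >= 0 up to rounding error
pts = rng.normal(size=(6, 3))
G = np.array([[poly_kernel(a, b, p) for b in pts] for a in pts])
print(np.linalg.eigvalsh(G).min() >= -1e-9 * np.abs(G).max())
```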