[Paper Notes 07] A Survey on Differentially Private Machine Learning, IEEE CIM 2020

Contents

- Series links
- A Survey on Differentially Private Machine Learning
  - Abstract
  - I Introduction
  - II Backgrounds [Defs & Theorems]
  - III Differential Privacy in Traditional ML
    - III.1
      - Supervised Learning
      - Unsupervised Learning
      - Online Learning
    - III.2
      - Differentially Private ERM
      - Differentially Private Distributed Optimization
  - IV Differential Privacy in Deep Learning
  - Reference

Series links

My paper-notes channel

[Active Learning]

[Paper Notes 01] Learning Loss for Active Learning, CVPR 2019

[Paper Notes 02] Active Learning for Convolutional Neural Networks: A Core-Set Approach, ICLR 2018

[Paper Notes 03] Variational Adversarial Active Learning, ICCV 2019

[Paper Notes 04] Ranked Batch-Mode Active Learning, ICCV 2016

[Transfer Learning]

[Paper Notes 05] Active Transfer Learning, IEEE T CIRC SYST VID 2020

[Paper Notes 06] Domain-Adversarial Training of Neural Networks, JMLR 2016

[Differential Privacy]

[Paper Notes 07] A Survey on Differentially Private Machine Learning, IEEE CIM 2020

A Survey on Differentially Private Machine Learning

Link to the original paper

Abstract

Machine learning models perform well in many applications, but they carry a potential risk of leaking private information contained in their training data.

As one of the mainstream privacy-preserving techniques, differential privacy provides a promising way to prevent the leakage of individual-level private information. [What "individual-level" means becomes clear from the definition given later. Intuitively, adding or removing a single individual's record in the dataset $D$ causes almost no change in the output distribution of $\mathcal{M}(D)$.]

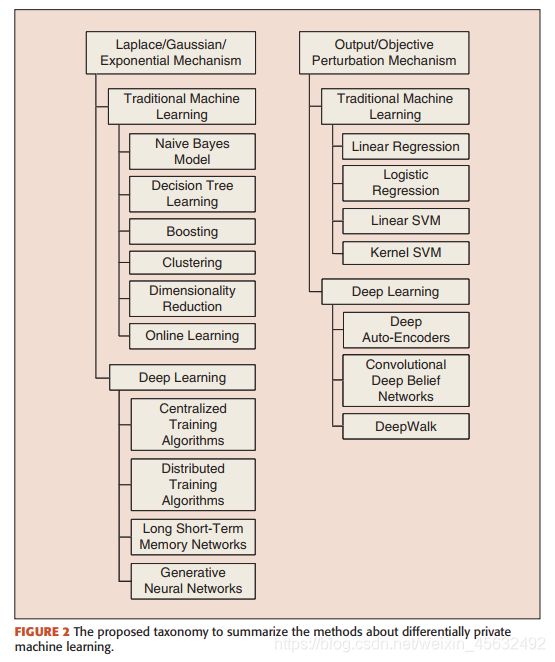

This work provides a comprehensive survey of existing works that combine differential privacy with machine learning, and groups them into two broad categories.

- The Laplace/Gaussian/exponential mechanism

- The output/objective perturbation mechanism

In the former, a calibrated [i.e., precisely scaled] amount of noise is added to the non-private model. In the latter, the output or the objective function is perturbed by random noise.

I Introduction

Motivation

The datasets used to train ML models may contain sensitive information about individuals.

- The text typed on a mobile device may include schedules, profiles, usernames, passwords, text dialogues, search queries and medical histories, etc.

- Areas like targeted advertisements and personalized recommendations.

- In medicine and finance, each institute only has access to a limited amount of data, and large datasets are often crowdsourced. [Crowdsourced: collected from the general public, so the data contain real sensitive information.]

Ideally, this sensitive individual information should not be leaked while ML models are being trained.

In other words, we allow the parameters of machine learning models to learn general patterns (people who smoke are more likely to suffer from lung cancer), rather than facts about specific training samples (he had lung cancer).

Unfortunately, shallow models such as support vector machines and logistic regression are capable of memorizing secret information from the training dataset. Deep models such as convolutional neural networks are able to exactly memorize arbitrary labels of the training data.

Attacks against machine learning

The survey lists many examples of attacks on machine learning models that aim to steal training data.

- Membership inference attacks against machine learning models (Shokri et al., see Reference): This paper designed a membership inference attack that can estimate whether the training dataset contains a specific data record, using only black-box access to the model.

- Model inversion attacks that exploit confidence information and basic countermeasures [2]: This work presented a model inversion attack that can reveal individual faces, given the API of the face recognition system and the name of the user to be identified.

- Stealing machine learning models via prediction APIs [3]: The decision probabilities of a classification model can be used for a model extraction attack, which implicitly steals the sensitive training data.

- Privacy in pharmacogenetics: An end-to-end case study of personalized warfarin dosing [4]: Attackers abuse the pharmacogenetic model to inversely infer the patients' genetic markers.

- Hacking smart machines with smarter ones: How to extract meaningful data from machine learning classifiers [5]: This work suggests that adversaries can maliciously acquire unexpected but useful information from machine learning classifiers.

The meaning of Differential Privacy

Why has differential privacy recently been considered a promising strategy for privacy preservation in machine learning? There are roughly three major reasons:

- (1) Differential privacy can provide a provable privacy guarantee for individuals, which benefits from the most solid theoretical basis compared with other privacy-preserving models.

- (2) Differential privacy achieves privacy preserving in machine learning by adding a calibrated amount of noise to the model or output results according to the concrete mechanisms instead of simply anonymizing the individual data.

- (3) Differential privacy can make a graceful compromise between privacy and utility by adjusting a privacy budget: the smaller the privacy budget, the stronger the privacy guarantee. For data owners, differentially private machine learning further ensures that adversaries cannot infer any information about a single record with high confidence from the released machine learning models or output results, even if they know all the remaining records in the dataset.

An illustration of Differentially Private Machine Learning:

The categories of Differentially Private Machine Learning methods:

II Backgrounds [Defs & Theorems]

Definition 1 (Differential Privacy)
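
As a reminder, the standard statement of $(\epsilon, \delta)$-differential privacy is sketched below. The notation ($\mathcal{M}$ for the randomized mechanism, $D$ and $D'$ for neighboring datasets that differ in one record, $S$ for a set of outputs) follows common usage and may differ slightly from the survey's.

$$
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\epsilon}\,\Pr[\mathcal{M}(D') \in S] + \delta
\qquad \text{for all neighboring } D, D' \text{ and all } S \subseteq \mathrm{Range}(\mathcal{M}).
$$

Setting $\delta = 0$ gives pure $\epsilon$-differential privacy; a smaller $\epsilon$ forces the two output distributions closer together and therefore gives a stronger guarantee.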

Theorem 1 (Sequential Composition Theorem)

Theorem 2 (Parallel Composition Theorem)
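
The two composition theorems are usually stated as budget-accounting rules: mechanisms run on the same dataset add up their budgets, while mechanisms run on disjoint subsets cost only the largest budget. The sketch below assumes pure $\epsilon$-differential privacy; the function names are illustrative and not taken from the survey.

```python
def sequential_composition(epsilons):
    """Total budget when every mechanism is applied to the SAME dataset."""
    return sum(epsilons)


def parallel_composition(epsilons):
    """Total budget when each mechanism is applied to a DISJOINT subset."""
    return max(epsilons)


# Three mechanisms with budgets 0.5, 0.25 and 0.25.
budgets = [0.5, 0.25, 0.25]
print("same data:     ", sequential_composition(budgets))
print("disjoint parts:", parallel_composition(budgets))
```

In practice this is why an iterative training algorithm has to split its total budget across iterations, whereas per-partition statistics can each use the full budget.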

Definition 2 (Global Sensitivity)

Definition 3 (Local Sensitivity)
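
For both sensitivity notions, the commonly used formulations are sketched below for a query $f$ and the $\ell_1$ norm; the symbols $\Delta f$ and $LS_f$ are conventional choices and may not match the survey's notation exactly.

$$
\Delta f = \max_{D,\,D'} \lVert f(D) - f(D') \rVert_1
\qquad\qquad
LS_f(D) = \max_{D'} \lVert f(D) - f(D') \rVert_1
$$

In the first expression the maximum runs over all pairs of neighboring datasets; in the second, $D$ is fixed and the maximum runs only over its neighbors $D'$. Consequently $\Delta f = \max_D LS_f(D)$, so local sensitivity is never larger than global sensitivity. For example, a counting query has $\Delta f = 1$.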

Definition 4 (Laplace Mechanism)
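
A minimal sketch of the standard Laplace mechanism, which releases $f(D) + \mathrm{Lap}(\Delta f / \epsilon)$. It uses only the Python standard library; the function names and the toy `records` list are hypothetical, and the code is not hardened against floating-point side channels.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def laplace_mechanism(true_value, sensitivity, epsilon, rng=random):
    """Release true_value with epsilon-DP noise of scale sensitivity / epsilon."""
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    return true_value + laplace_noise(sensitivity / epsilon, rng)


# Toy counting query: how many records are flagged 1?
# Adding or removing one record changes the count by at most 1.
records = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
true_count = sum(records)

rng = random.Random(0)
for eps in (0.1, 1.0, 10.0):
    noisy = laplace_mechanism(true_count, sensitivity=1.0, epsilon=eps, rng=rng)
    print(f"epsilon={eps:>4}: noisy count = {noisy:.2f} (true = {true_count})")
```

Smaller values of `epsilon` produce a larger noise scale, which is the privacy/utility trade-off controlled by the privacy budget.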

Definition 5 (Gaussian Mechanism)
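
A minimal sketch of the classical Gaussian mechanism, assuming the well-known calibration $\sigma = \sqrt{2 \ln(1.25/\delta)}\,\Delta_2 f / \epsilon$, which gives $(\epsilon, \delta)$-differential privacy for $0 < \epsilon < 1$. The survey may state the noise scale differently; the function names here are illustrative.

```python
import math
import random


def gaussian_sigma(l2_sensitivity, epsilon, delta):
    """Noise standard deviation for the classical Gaussian mechanism."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("the classical bound assumes 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * l2_sensitivity / epsilon


def gaussian_mechanism(values, l2_sensitivity, epsilon, delta, rng=random):
    """Add independent N(0, sigma^2) noise to every coordinate of values."""
    sigma = gaussian_sigma(l2_sensitivity, epsilon, delta)
    return [v + rng.gauss(0.0, sigma) for v in values]


rng = random.Random(0)
gradient = [0.3, -0.1, 0.7]          # toy vector with L2 sensitivity assumed to be 1
private_gradient = gaussian_mechanism(gradient, 1.0, epsilon=0.5, delta=1e-5, rng=rng)
print(private_gradient)
```

Unlike the Laplace mechanism, the noise here is calibrated to the $\ell_2$ sensitivity, which is why it is commonly paired with vector-valued outputs such as gradients.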

Definition 6 (Exponential Mechanism)
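
A minimal sketch of the standard exponential mechanism, which selects a candidate $r$ with probability proportional to $\exp\!\big(\epsilon\, u(D, r) / (2 \Delta u)\big)$, where $u$ is a utility function with sensitivity $\Delta u$. The function name and the toy `votes` data are hypothetical.

```python
import math
import random


def exponential_mechanism(candidates, utility, sensitivity, epsilon, rng=random):
    """Pick one candidate with probability proportional to exp(eps * u / (2 * sens))."""
    scores = [utility(c) for c in candidates]
    best = max(scores)
    # Subtracting the best score leaves the probabilities unchanged
    # but prevents overflow in exp().
    weights = [math.exp(epsilon * (s - best) / (2.0 * sensitivity)) for s in scores]
    return rng.choices(candidates, weights=weights, k=1)[0]


# Toy example: privately report the most frequent diagnosis.
# The utility of a candidate is its count, so one record changes it by at most 1.
votes = {"flu": 50, "cold": 10, "allergy": 5}
rng = random.Random(0)
winner = exponential_mechanism(list(votes), votes.get, sensitivity=1.0, epsilon=1.0, rng=rng)
print(winner)
```

This mechanism suits non-numeric outputs (a category, a split point, a model from a finite set), where adding numeric noise would not make sense.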

III Differential Privacy in Traditional ML

III.1

The Laplace mechanism, the Gaussian mechanism and the exponential mechanism are three classical differential privacy mechanisms. The privacy of individual data can be preserved by combining any one of them with a specific machine learning algorithm.

Supervised Learning

Unsupervised Learning

Online Learning

III.2

The output and objective perturbation mechanisms are two generic differentially private methods to achieve privacy preserving.

The output perturbation mechanism is performed by adding an amount of noise to the model output while the objective perturbation mechanism can be implemented by adding noise to the objective function and optimizing the perturbed objective function.
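
A rough sketch of output perturbation for L2-regularized logistic regression, in the style of the method of Chaudhuri et al. (an assumption on my part; the survey may present a different variant). It assumes every feature vector has norm at most 1 and labels in {-1, +1}, so that the regularized minimizer has L2 sensitivity $2/(n\lambda)$. All function names are illustrative, and the guarantee formally holds for the exact minimizer, which plain gradient descent only approximates.

```python
import math
import random


def train_logreg(X, y, lam, steps=500, lr=0.5):
    """Minimize (1/n) * sum log(1 + exp(-y * w.x)) + (lam/2) * ||w||^2."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(steps):
        grad = [lam * wj for wj in w]                      # regularizer gradient
        for xi, yi in zip(X, y):
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            coeff = -yi / (1.0 + math.exp(margin)) / n     # logistic-loss gradient
            for j in range(d):
                grad[j] += coeff * xi[j]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


def output_perturbation(w, n, lam, epsilon, rng=random):
    """Add noise with density proportional to exp(-(n * lam * eps / 2) * ||b||)."""
    d = len(w)
    # The norm of such a noise vector is Gamma(shape=d, scale=2/(n*lam*eps)).
    norm = rng.gammavariate(d, 2.0 / (n * lam * epsilon))
    # A normalized Gaussian vector gives a uniformly random direction.
    g = [rng.gauss(0.0, 1.0) for _ in range(d)]
    length = math.sqrt(sum(v * v for v in g))
    return [wj + norm * v / length for wj, v in zip(w, g)]


X = [[0.6, 0.2], [0.5, -0.1], [-0.4, 0.3], [-0.7, -0.2]]   # all norms <= 1
y = [1, 1, -1, -1]
lam = 0.1
w = train_logreg(X, y, lam)
w_private = output_perturbation(w, n=len(X), lam=lam, epsilon=1.0, rng=random.Random(0))
print("non-private:", w)
print("private:    ", w_private)
```

Objective perturbation differs in that the random term is added to the objective before optimization, so the noise is absorbed by the solver rather than added to its result.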

Differentially Private ERM

ERM: Empirical Risk Minimization

This approach could potentially be combined with theoretical bound analysis in a single unified framework!

Linear Regression

Differentially Private Distributed Optimization

Distributed Optimization

IV Differential Privacy in Deep Learning

Reference

[1] M. Gong et al., “A survey on differentially private machine learning,” IEEE Computational Intelligence Magazine, vol. 15, pp. 49–64, 2020.

[2] M. Fredrikson, S. Jha, and T. Ristenpart, “Model inversion attacks that exploit confidence information and basic countermeasures,” in Proc. 22nd ACM SIGSAC Conf. Computer and Communications Security, Denver, CO, Oct. 2015, pp. 1322–1333. doi: 10.1145/2810103.2813677.

[3] F. Tramèr, F. Zhang, A. Juels, M. K. Reiter, and T. Ristenpart, “Stealing machine learning models via prediction APIs,” in Proc. 25th USENIX Security Symp., Austin, TX, Aug. 2016, pp. 601–618. doi: 10.5555/3241094.3241142.

[4] M. Fredrikson, E. Lantz, S. Jha, S. Lin, D. Page, and T. Ristenpart, “Privacy in pharmacogenetics: An end-to-end case study of personalized warfarin dosing,” in Proc. 23rd USENIX Security Symp., San Diego, CA, Aug. 2014, pp. 17–32.

[5] G. Ateniese, G. Felici, L. V. Mancini, A. Spognardi, A. Villani, and D. Vitali, “Hacking smart machines with smarter ones: How to extract meaningful data from machine learning classifiers,” Int. J. Secur. Netw., vol. 10, no. 3, pp. 137–150, 2015. doi: 10.1504/IJSN.2015.071829.

[6] R. Shokri, M. Stronati, C. Song, and V. Shmatikov, “Membership inference attacks against machine learning models,” in Proc. IEEE Symp. Security and Privacy, San Jose, CA, May 2017. doi: 10.1109/SP.2017.41.