Deploying a Hadoop (3.3.0) Pseudo-Distributed Cluster

Preface:

This article walks through deploying a Hadoop 3.3.0 pseudo-distributed cluster.

Note: this article assumes that Hadoop 3.3.0 and a JVM are already installed.

I. What is pseudo-distributed mode?

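As the name suggests, pseudo-distributed mode is "fake" distribution: the work is done by a single machine instead of several, but the distributed process is still simulated. Hadoop runs on one node with each daemon (NameNode, DataNode, SecondaryNameNode and so on) in its own Java process, so although all of them live on one machine, everything a real distributed deployment requires still takes place. The main difference from standalone (local) mode is that pseudo-distributed mode requires HDFS to be configured.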

II. Configuring a pseudo-distributed Hadoop cluster

1. Edit the configuration file core-site.xml

File location: /home/hadoop/hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

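Inside the file's configuration element, add a property named fs.defaultFS with the value hdfs://localhost:9000. This is the NameNode address that HDFS clients connect to.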
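If you would rather not edit the file in vim, the same setting can be written from the shell. This is a minimal sketch under two assumptions: `write_core_site` is a hypothetical helper name, and core-site.xml is still the fresh-install version with an empty configuration element, because the function overwrites the whole file.

```shell
# Hypothetical helper: writes a minimal core-site.xml into the directory given as $1.
# Assumption: overwriting the file is acceptable (fresh install, no other properties).
write_core_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <!-- NameNode address that HDFS clients connect to -->
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
}

# Usage, as the hadoop user:
#   write_core_site /home/hadoop/hadoop/etc/hadoop
```

Back up the file first if you have already added other properties to it.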

2. Edit the configuration file hdfs-site.xml

File location: /home/hadoop/hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ vim hdfs-site.xml

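Inside the configuration element, set the property dfs.replication to 1. This machine acts as the only DataNode, so each block can have at most one replica.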
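hdfs-site.xml can also be written from the shell instead of vim. A minimal sketch, assuming a hypothetical helper named `write_hdfs_site` and a fresh-install hdfs-site.xml that can safely be overwritten:

```shell
# Hypothetical helper: writes a minimal hdfs-site.xml into the directory given as $1.
# Assumption: overwriting the file is acceptable (fresh install, no other properties).
write_hdfs_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <!-- Only one DataNode exists, so keep a single replica of each block -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}

# Usage, as the hadoop user:
#   write_hdfs_site /home/hadoop/hadoop/etc/hadoop
```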

3. Generate a key pair for passwordless SSH login

[hadoop@localhost ~]$ ssh-keygen #just press Enter at every prompt

[hadoop@localhost ~]$ ssh-copy-id localhost #copy the public key to localhost

Now try logging into the machine, with: "ssh 'localhost'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@localhost ~]$ ssh localhost #try connecting

Last login: Wed Oct 28 23:01:23 2020

[hadoop@localhost ~]$ exit #passwordless login works; log out

logout

Connection to localhost closed.
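The interactive steps above can also be scripted. The sketch below is an assumption-laden alternative rather than the article's method: instead of ssh-copy-id it appends the public key to authorized_keys directly, which has the same effect for logins to the same account on localhost. `setup_local_ssh_key` is a hypothetical helper name, and ssh-keygen must be installed.

```shell
# Hypothetical helper: non-interactive equivalent of ssh-keygen + ssh-copy-id localhost.
# $1 is the .ssh directory to use (defaults to ~/.ssh).
setup_local_ssh_key() {
  local ssh_dir="${1:-$HOME/.ssh}"
  local pub
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
  # Create an RSA key pair with an empty passphrase, only if none exists yet
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -t rsa -N '' -f "$ssh_dir/id_rsa" -q || return 1
  fi
  # Authorize the public key for this account, skipping it if already listed
  pub="$(cat "$ssh_dir/id_rsa.pub")"
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF "$pub" "$ssh_dir/authorized_keys"; then
    printf '%s\n' "$pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}

# Usage, as the hadoop user:
#   setup_local_ssh_key
#   ssh -o BatchMode=yes localhost true && echo "passwordless login OK"
```

BatchMode=yes makes ssh fail instead of prompting for a password, so the check is meaningful. It also fails on an unknown host key, so run ssh localhost once interactively to accept the key first.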

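4. Format HDFS and start the services

Note: format HDFS only once, right after installation, and do not reformat it unless you really need to. Each format gives the NameNode a new clusterID, and a DataNode whose storage still records the old clusterID will then fail to start. Running the format as the hadoop user rather than root also avoids leaving root-owned files that the hadoop user cannot write to later.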

[root@localhost hadoop]# pwd

/home/hadoop/hadoop

[root@localhost hadoop]# bin/hdfs namenode -format
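To guard against accidental reformatting, the format can be wrapped in a check. This is a sketch under stated assumptions: `format_namenode_once` is a hypothetical helper, and dfs.namenode.name.dir is left at its default of file://${hadoop.tmp.dir}/dfs/name with hadoop.tmp.dir defaulting to /tmp/hadoop-${user.name}. A formatted NameNode directory contains current/VERSION, the file that records the clusterID.

```shell
# Hypothetical helper: format the NameNode only if its metadata directory is not yet formatted.
# $1 = Hadoop home, $2 = NameNode metadata dir (default assumes unchanged hadoop.tmp.dir).
format_namenode_once() {
  local hadoop_home="$1"
  local name_dir="${2:-/tmp/hadoop-$(id -un)/dfs/name}"
  # current/VERSION exists only after a successful format
  if [ -f "${name_dir}/current/VERSION" ]; then
    echo "already formatted: ${name_dir}, skipping"
    return 0
  fi
  "${hadoop_home}/bin/hdfs" namenode -format
}

# Usage, as the hadoop user:
#   format_namenode_once /home/hadoop/hadoop
```

If you have set dfs.namenode.name.dir or hadoop.tmp.dir yourself, pass the matching directory as the second argument.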

5. Starting the services fails: no permission to write the logs

[root@localhost hadoop]# pwd

/home/hadoop/hadoop/sbin

[hadoop@localhost sbin]$ ./start-dfs.sh #开启服务

Starting namenodes on [localhost]

localhost: ERROR: Unable to write in /home/hadoop/hadoop-3.3.0/logs. Aborting.

Starting datanodes

localhost: ERROR: Unable to write in /home/hadoop/hadoop-3.3.0/logs. Aborting.

Starting secondary namenodes [localhost.localdomain]

localhost.localdomain: ERROR: Unable to write in /home/hadoop/hadoop-3.3.0/logs. Aborting.

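Fix:

The ll output below shows that the logs directory contains a file owned by root, most likely because the format in step 4 was run as root, so the hadoop user cannot write there. (/home/hadoop/hadoop and /home/hadoop/hadoop-3.3.0 appear to be the same installation, for example via a symlink, which is why both paths appear in this article.)

#switch to root and set the permissions to 777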

[root@localhost sbin]# chmod 777 /home/hadoop/hadoop-3.3.0/logs/SecurityAuth-root.audit

[root@localhost sbin]# ll /home/hadoop/hadoop-3.3.0/logs

total 0

-rwxrwxrwx. 1 root root 0 Oct 28 23:05 SecurityAuth-root.audit
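Hadoop's start scripts report "Unable to write in …/logs" when they cannot create a file in the log directory. So the decisive question is whether the user running start-dfs.sh can write to that directory, not only to the files inside it. The following pre-flight check is a sketch of the same idea. `check_log_dir` is a hypothetical helper, and the path passed to it is assumed to be your HADOOP_LOG_DIR.

```shell
# Hypothetical pre-flight check: can the current user create a file in the log directory?
# $1 is the Hadoop log directory, e.g. /home/hadoop/hadoop-3.3.0/logs
check_log_dir() {
  local log_dir="$1"
  local probe="${log_dir}/.write-test.$$"
  # Create the directory if it is missing (fails if the parent is not writable)
  [ -d "$log_dir" ] || mkdir -p "$log_dir" 2>/dev/null
  # Probe by creating and then removing a throwaway file
  if touch "$probe" 2>/dev/null; then
    rm -f "$probe"
    echo "OK: $(id -un) can write to ${log_dir}"
    return 0
  fi
  echo "ERROR: $(id -un) cannot write to ${log_dir}" >&2
  return 1
}

# Usage, as the hadoop user, before ./start-dfs.sh:
#   check_log_dir /home/hadoop/hadoop-3.3.0/logs
```

If the check fails, handing the directory to the hadoop user as root, for example chown -R hadoop:hadoop /home/hadoop/hadoop-3.3.0/logs (assuming both the user and the group are named hadoop), is a narrower fix than mode 777.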

Start the services again:

[hadoop@localhost sbin]$ pwd

/home/hadoop/hadoop/sbin

[hadoop@localhost sbin]$ ./start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [localhost.localdomain]

6. jps now shows that NameNode, DataNode and SecondaryNameNode are running

[hadoop@localhost sbin]$ jps

3328 NameNode

3442 DataNode

3767 Jps

3642 SecondaryNameNode
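Instead of reading the jps listing by eye, you can check it from a script. Below is a small sketch that assumes the three daemons started by start-dfs.sh in this setup are the ones to expect. `check_hdfs_daemons` is a hypothetical helper that reads jps output on stdin.

```shell
# Hypothetical helper: reads `jps` output on stdin and reports whether the three
# HDFS daemons started by start-dfs.sh in this setup are present.
check_hdfs_daemons() {
  local listing daemon missing=0
  listing="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode; do
    # jps prints "<pid> <name>"; compare the second field exactly so that
    # "NameNode" does not match "SecondaryNameNode"
    if printf '%s\n' "$listing" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "running: $daemon"
    else
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   jps | check_hdfs_daemons
```

The function returns a non-zero status when any daemon is missing, so it can gate later steps in a script.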

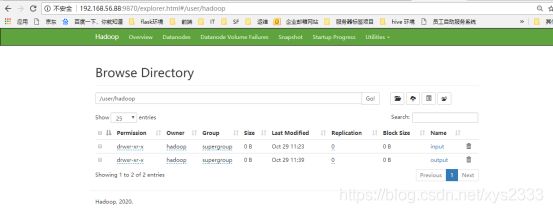

7. View the NameNode web UI in a browser

http://192.168.56.88:9870

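#stop the firewall (as root), otherwise the page at this address cannot be opened from a browser; 9870 is the NameNode web UI's default port in Hadoop 3.x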

[root@localhost sbin]# systemctl stop firewalld
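Before or after touching the firewall, you can check whether the web UI port is reachable at all. This sketch assumes bash, because it relies on bash's /dev/tcp redirection, and coreutils timeout, so that a filtered port cannot hang it. `check_port` is a hypothetical helper. On firewalld systems, opening only this port (firewall-cmd --permanent --add-port=9870/tcp, then firewall-cmd --reload) is a narrower alternative to stopping the firewall entirely.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reports whether HOST:PORT accepts TCP connections.
check_port() {
  local host="$1" port="$2"
  # Opening /dev/tcp/HOST/PORT in bash attempts a TCP connection; give up after 3 s
  if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "open: ${host}:${port}"
    return 0
  fi
  echo "closed or unreachable: ${host}:${port}"
  return 1
}

# Usage, from the machine running the browser:
#   check_port 192.168.56.88 9870
```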

8. Test

#create the hadoop user's home directory in HDFS, then upload the local input directory

[hadoop@localhost hadoop]$ bin/hdfs dfs -mkdir -p /user/hadoop

[hadoop@localhost hadoop]$ bin/hdfs dfs -ls

[hadoop@localhost hadoop]$ bin/hdfs dfs -put input

[hadoop@localhost hadoop]$ bin/hdfs dfs -ls

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2020-10-28 23:23 input
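Relative paths in bin/hdfs dfs commands are resolved against the user's HDFS home directory, `/user/<username>`. That is why /user/hadoop is created first, and why -put input with no destination ends up at /user/hadoop/input, which -ls lists simply as input. The function below is only a simplified model of that rule (it ignores `.` and `..`), and `hdfs_resolve` is a hypothetical name, not part of Hadoop.

```shell
# Hypothetical model of HDFS path resolution: absolute paths are used as given,
# relative ones are placed under /user/<username>.
hdfs_resolve() {
  local path="$1"
  local user="${2:-$(id -un)}"
  case "$path" in
    /*) printf '%s\n' "$path" ;;
    *)  printf '/user/%s/%s\n' "$user" "$path" ;;
  esac
}

# hdfs_resolve input hadoop                -> /user/hadoop/input
# hdfs_resolve /user/hadoop/output hadoop  -> /user/hadoop/output
```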

9. Delete the local input and output directories

[root@localhost hadoop]# pwd

/home/hadoop/hadoop

[root@localhost hadoop]# ls

bin include lib LICENSE-binary LICENSE.txt NOTICE-binary output sbin

etc input libexec licenses-binary logs NOTICE.txt README.txt share

[root@localhost hadoop]# rm -fr input/ output/

[root@localhost hadoop]# ls

bin include libexec licenses-binary logs NOTICE.txt sbin

etc lib LICENSE-binary LICENSE.txt NOTICE-binary README.txt share

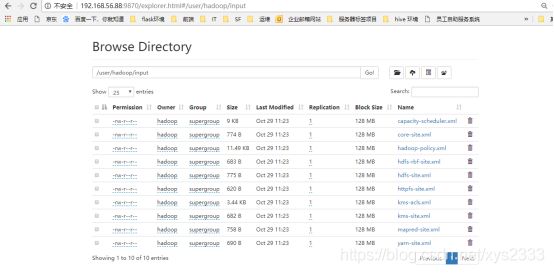

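**After the deletion, input and output no longer exist in the local directory. The uploaded input now lives in HDFS and can be browsed in the web UI, and an output directory appears in HDFS once the job in step 10 has run.**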

10. Run the example job and get the output directory from HDFS

[root@localhost hadoop]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar grep input output 'dfs[a-z.]+'

[root@localhost hadoop]# ls

bin etc include lib libexec LICENSE-binary licenses-binary LICENSE.txt logs NOTICE-binary N

[root@localhost hadoop]# bin/hdfs dfs -get /user/hadoop/output

[root@localhost hadoop]# ls

bin include libexec licenses-binary logs NOTICE.txt README.txt share

etc lib LICENSE-binary LICENSE.txt NOTICE-binary output sbin

[root@localhost hadoop]# cd output/

[root@localhost output]# ls

part-r-00000 _SUCCESS

[root@localhost output]# cat *

1	dfsadmin

DFSAdmin commands are used to administer an HDFS cluster, and only an HDFS administrator can run them.
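The grep example counts how often the regular expression matches in the input and writes each match with its count, most frequent first. To sanity-check a result, you can compute roughly the same thing locally. This is an approximation in a single process, not Hadoop's implementation, and `local_grep_count` is a hypothetical helper name. Note also that the job refuses to run if the output directory already exists in HDFS, so remove it (bin/hdfs dfs -rm -r output) before running the job again.

```shell
# Hypothetical helper: local approximation of the grep example job
# (count regex matches across files, most frequent first, "count<TAB>match").
local_grep_count() {
  local pattern="$1"
  shift
  # -o: one match per line, -h: no file names, -E: extended regex
  grep -ohE "$pattern" "$@" \
    | sort \
    | uniq -c \
    | sort -k1,1nr -k2,2 \
    | awk '{ printf "%d\t%s\n", $1, $2 }'
}

# Usage, on a local copy of the input:
#   local_grep_count 'dfs[a-z.]+' input/*
```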

That's the end of this article. Stay tuned for follow-ups!