图像分类网络5——AlexNet实现猫狗大战

目录

- 1 AlexNet简介

-

- 1.1 结构

- 1.2 六大特点

- 2 猫狗大战数据集

- 3 代码实现

1 AlexNet简介

AlexNet是2012年ImageNet竞赛冠军获得者Hinton和他的学生Alex Krizhevsky设计的。也是在那年之后,更多的更深的神经网络被提出,比如优秀的vgg,GoogLeNet。 这对于传统的机器学习分类算法而言,已经相当的出色。

论文地址:

http://www.cs.toronto.edu/~fritz/absps/imagenet.pdf

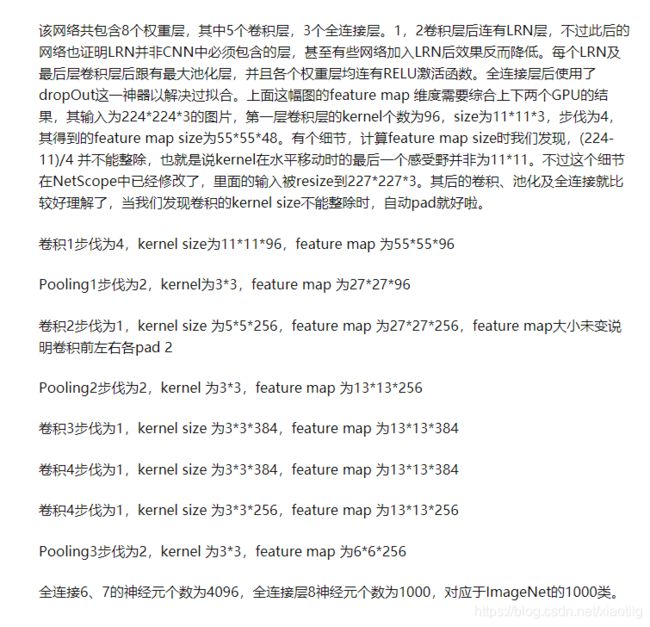

1.1 结构

这两篇文章很好:

https://blog.csdn.net/qq_24695385/article/details/80368618

https://zhuanlan.zhihu.com/p/27222043

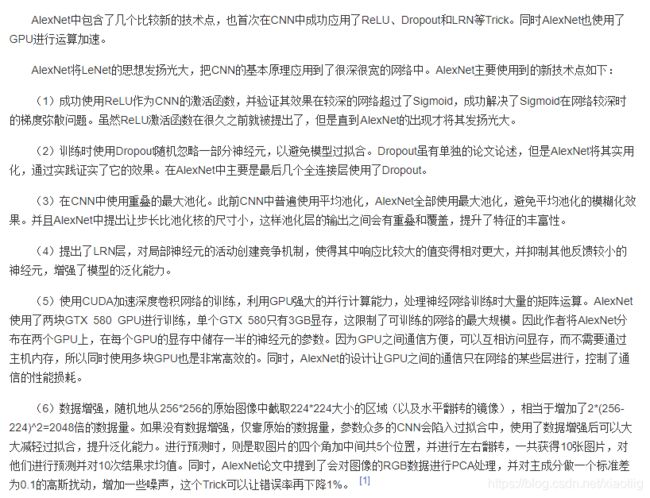

1.2 六大特点

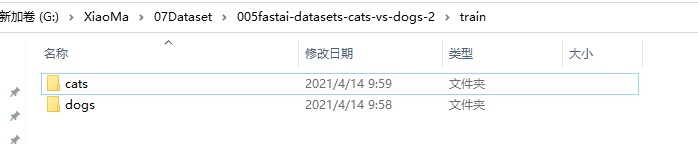

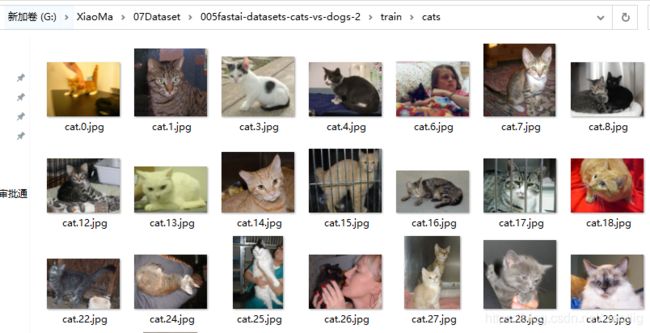

2 猫狗大战数据集

训练文件中,一个猫的文件夹,一个狗的文件夹,分别有11500张

3 代码实现

这个模型有些bug还没有解决,流程是对的

import tensorflow as tf

import os

# Alexnet实现猫狗大战

class CatOrDog(object):

"""猫狗分类

"""

num_epochs = 1

batch_size = 32

learning_rate = 0.001

# 训练目录

train_cats_dir = r'G:\XiaoMa\07Dataset\005fastai-datasets-cats-vs-dogs-2\train\cats'

train_dogs_dir = r'G:\XiaoMa\07Dataset\005fastai-datasets-cats-vs-dogs-2\train\dogs'

# 验证目录

test_cats_dir = r'G:\XiaoMa\07Dataset\005fastai-datasets-cats-vs-dogs-2\valid\cats'

test_dogs_dir = r'G:\XiaoMa\07Dataset\005fastai-datasets-cats-vs-dogs-2\valid\dogs'

model = tf.keras.Sequential([

tf.keras.layers.Conv2D(96, 11, strides=4, activation='relu', padding="same", input_shape=(256, 256, 3)),

tf.keras.layers.MaxPooling2D(pool_size=(3,3), strides=2),

tf.keras.layers.Conv2D(256, 5, activation='relu', padding="same"),

tf.keras.layers.MaxPooling2D(pool_size=(3,3), strides=2),

tf.keras.layers.Conv2D(384, 3, activation='relu', padding="same"),

tf.keras.layers.Conv2D(384, 3, activation='relu', padding="same"),

tf.keras.layers.Conv2D(256, 3, activation='relu', padding="same"),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(64, activation='relu'),

tf.keras.layers.Dense(64, activation='relu'),

tf.keras.layers.Dense(2, activation='softmax')

])

"""

self.model = tf.keras.Sequential([

tf.keras.layers.Conv2D(32, 3, activation='relu', input_shape=(256, 256, 3)),

tf.keras.layers.MaxPooling2D(),

tf.keras.layers.Conv2D(32, 5, activation='relu'),

tf.keras.layers.MaxPooling2D(),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(64, activation='relu'),

tf.keras.layers.Dense(2, activation='softmax')

])

"""

def __init__(self):

# 1、读取训练集的猫狗文件

self.train_cat_filenames = tf.constant([CatOrDog.train_cats_dir + filename

for filename in os.listdir(CatOrDog.train_cats_dir)])

self.train_dog_filenames = tf.constant([CatOrDog.train_dogs_dir + filename

for filename in os.listdir(CatOrDog.train_dogs_dir)])

# 2、猫狗文件列表合并,并且初始化猫狗的目标值,0为猫,1为狗

self.train_filenames = tf.concat([self.train_cat_filenames, self.train_dog_filenames], axis=-1)

self.train_labels = tf.concat([

tf.zeros(self.train_cat_filenames.shape, dtype=tf.int32),

tf.ones(self.train_dog_filenames.shape, dtype=tf.int32)],

axis=-1)

def get_batch(self):

"""获取dataset批次数据

:return:

"""

train_dataset = tf.data.Dataset.from_tensor_slices((self.train_filenames, self.train_labels))

print(train_dataset)

# 进行数据的map, 随机,批次和预存储

train_dataset = train_dataset.map(

map_func=self._decode_and_resize,

num_parallel_calls=tf.data.experimental.AUTOTUNE)

train_dataset = train_dataset.shuffle(buffer_size=20000)

train_dataset = train_dataset.batch(CatOrDog.batch_size)

train_dataset = train_dataset.prefetch(tf.data.experimental.AUTOTUNE)

return train_dataset

# 图片处理函数,读取,解码并且进行输入形状修改

def _decode_and_resize(self,filename, label):

image_string = tf.io.read_file(filename)

image_decoded = tf.image.decode_jpeg(image_string)

image_resized = tf.image.resize(image_decoded, [256, 256]) / 255.0

return image_resized, label

def train(self, train_dataset):

"""训练过程

:return:

"""

self.model.compile(

optimizer=tf.keras.optimizers.Adam(learning_rate=CatOrDog.learning_rate),

loss=tf.keras.losses.sparse_categorical_crossentropy,

metrics=[tf.keras.metrics.sparse_categorical_accuracy]

)

self.model.fit(train_dataset, epochs=CatOrDog.num_epochs)

self.model.save_weights("./ckpt/cat_or_dogs.h5")

def test(self):

# 1、构建测试数据集

test_cat_filenames = tf.constant([CatOrDog.test_cats_dir + filename

for filename in os.listdir(CatOrDog.test_cats_dir)])

test_dog_filenames = tf.constant([CatOrDog.test_dogs_dir + filename

for filename in os.listdir(CatOrDog.test_dogs_dir)])

test_filenames = tf.concat([test_cat_filenames, test_dog_filenames], axis=-1)

test_labels = tf.concat([

tf.zeros(test_cat_filenames.shape, dtype=tf.int32),

tf.ones(test_dog_filenames.shape, dtype=tf.int32)],

axis=-1)

# 2、构建dataset

test_dataset = tf.data.Dataset.from_tensor_slices((test_filenames, test_labels))

test_dataset = test_dataset.map(self._decode_and_resize)

test_dataset = test_dataset.batch(self.batch_size)

# 3、加载模型进行评估

if os.path.exists("./ckpt/cat_or_dogs.h5"):

self.model.load_weights("./ckpt/cat_or_dogs.h5")

print(self.model.metrics_names)

print(self.model.evaluate(test_dataset))

if __name__ == "__main__":

cat_dos = CatOrDog()

train_dataset = cat_dos.get_batch()

cat_dos.train(train_dataset)

cat_dos.model.summary()

print("训练结束")

cat_dos.test()