初体验scrapy-爬取豆瓣250电影数据

文章目录

- 前言

- 一、scrapy如何安装

- 二、scrapy项目实战

-

- 1.创建scrapy项目

- 2.明确目标

- 3.制作爬虫

- 4.存储内容

- 5.运行爬虫

- 6.在项目里新建一个main.py,方便爬虫运行

- 7.将豆瓣电影数据保存到json文件中

- 8.将电影数据保存到csv文件中

- 总结

前言

Scrapy 是一套基于基于Twisted的异步处理框架,纯python实现的爬虫框架,用户只需要定制开发几个模块就可以轻松的实现一个爬虫,用来抓取网页内容以及各种图片,非常之方便

一、scrapy如何安装

pip install scrapy

https://docs.scrapy.org/en/latest/intro/install.html

二、scrapy项目实战

1.创建scrapy项目

scrapy startproject firstScrapy

cd firstScrapy

scrapy genspider DoupanSipder movie.douban.com

2.明确目标

爬取豆瓣电影数据

3.制作爬虫

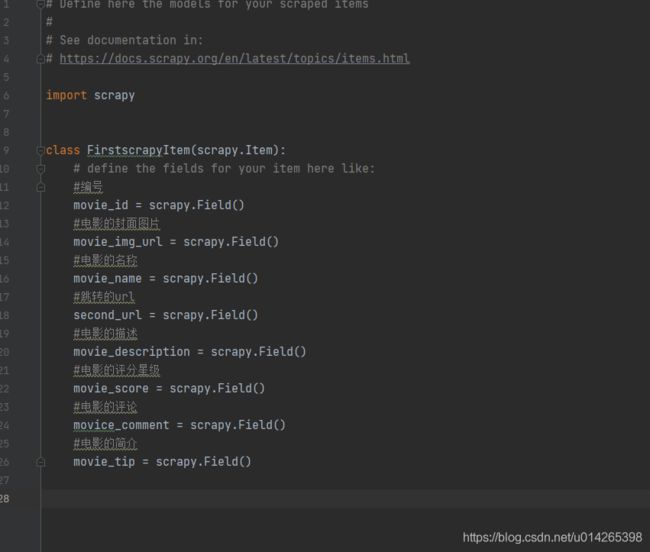

import scrapy

class FirstscrapyItem(scrapy.Item):

# define the fields for your item here like:

#编号

movie_id = scrapy.Field()

#电影的封面图片

movie_img_url = scrapy.Field()

#电影的名称

movie_name = scrapy.Field()

#跳转的url

second_url = scrapy.Field()

#电影的描述

movie_description = scrapy.Field()

#电影的评分星级

movie_score = scrapy.Field()

#电影的评论

movice_comment = scrapy.Field()

#电影的简介

movie_tip = scrapy.Field()

解析爬虫

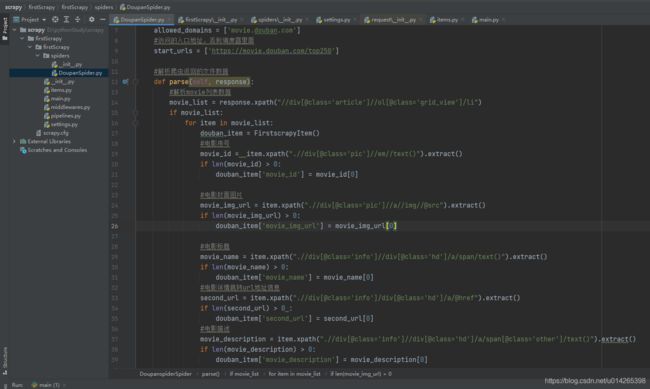

import scrapy

from firstScrapy.items import FirstscrapyItem

class DoupanspiderSpider(scrapy.Spider):

#爬虫名称

name = 'douban_spider'

#允许访问的域名

allowed_domains = ['movie.douban.com']

#访问的入口地址,丢到调度器里面

start_urls = ['https://movie.douban.com/top250']

#解析爬虫返回的文件数据

def parse(self, response):

#解析movie列表数据

movie_list = response.xpath("//div[@class='article']//ol[@class='grid_view']/li")

if movie_list:

for item in movie_list:

douban_item = FirstscrapyItem()

#电影序号

movie_id = item.xpath(".//div[@class='pic']//em//text()").extract()

if len(movie_id) > 0:

douban_item['movie_id'] = movie_id[0]

#电影封面图片

movie_img_url = item.xpath(".//div[@class='pic']//a//img//@src").extract()

if len(movie_img_url) > 0:

douban_item['movie_img_url'] = movie_img_url[0]

#电影标题

movie_name = item.xpath(".//div[@class='info']//div[@class='hd']/a/span/text()").extract()

if len(movie_name) > 0:

douban_item['movie_name'] = movie_name[0]

#电影详情跳转url地址信息

second_url = item.xpath(".//div[@class='info']/div[@class='hd']/a/@href").extract()

if len(second_url) > 0 :

douban_item['second_url'] = second_url[0]

#电影描述

movie_description = item.xpath(".//div[@class='info']//div[@class='hd']/a/span[@class='other']/text()").extract()

if len(movie_description) > 0:

douban_item['movie_description'] = movie_description[0]

#电影评分

movie_score = item.xpath(".//div[@class='info']//div[@class='bd']//div[@class='star']//span[@class='rating_num']/text()").extract()

if len(movie_score) > 0:

douban_item['movie_score'] = movie_score[0]

#电影评论

movie_comment = item.xpath(".//div[@class='info']//div[@class='bd']//div[@class='star']//span/text()").extract()

if len(movie_comment) > 0:

douban_item['movie_comment'] = movie_comment[1]

#电影总结

movie_tip = item.xpath(".//div[@class='info']//div[@class='bd']//p[@class='quote']/span/text()").extract()

if len(movie_tip) >0 :

douban_item['movie_tip'] = movie_tip[0]

#将数据交给管道处理

yield douban_item

#获取下一页的数据

next_link = response.xpath("//span[@class='next']/link/@href").extract()

if len(next_link) > 0 :

next_link = next_link[0]

#继续访问下一页

url = "https://movie.douban.com/top250"+next_link

print(url)

yield scrapy.Request(url,callback=self.parse)

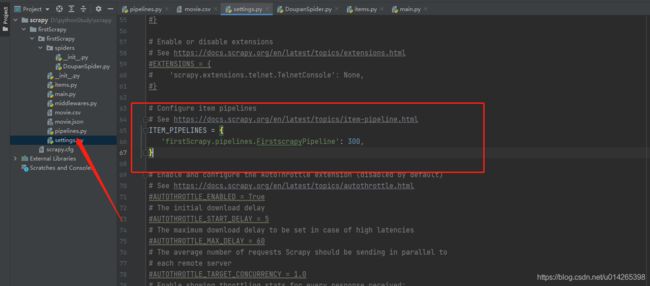

4.存储内容

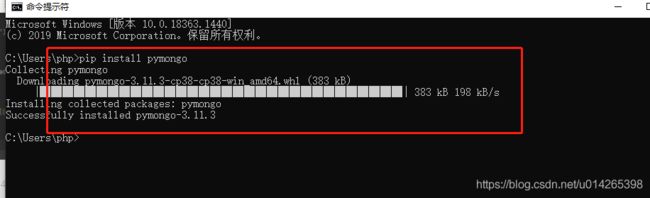

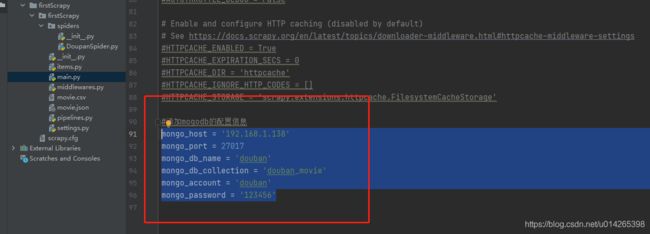

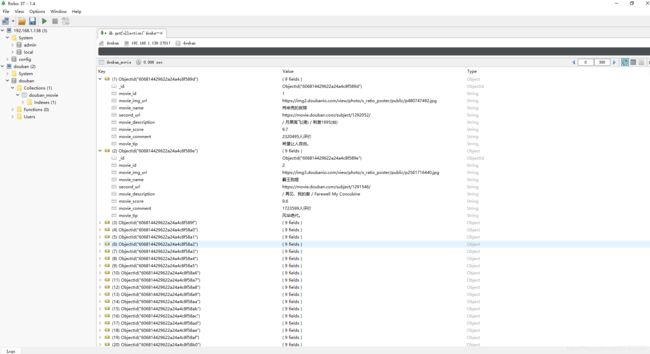

将爬取的数据存储到mogodb中,这里使用dokcer 服务提供的mongodb

pip install pymongo

安装MongoDB详见

https://blog.csdn.net/u014265398/article/details/105949379?ops_request_misc=%257B%2522request%255Fid%2522%253A%2522161743195416780264056654%2522%252C%2522scm%2522%253A%252220140713.130102334.pc%255Fblog.%2522%257D&request_id=161743195416780264056654&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2blogfirst_rank_v2~rank_v29-5-105949379.nonecase&utm_term=mogodb&spm=1018.2226.3001.4450

创建一个豆瓣用户数据库账号

db.createUser({

user: 'douban', pwd: '123456', roles: [{

role: "dbOwner", db: "douban" }] })

db.grantRolesToUser('douban', [{

role: 'dbOwner', db: 'douban' }])

db.auth('douban','123456')

from itemadapter import ItemAdapter

import pymongo

from firstScrapy.settings import mongo_host,mongo_port,mongo_db_name,mongo_db_collection,mongo_account,mongo_password

class FirstscrapyPipeline:

#定义初始化方法

def __init__(self):

db_name = mongo_db_name

collection_name = mongo_db_collection

#获取mogodb连接信息

# client = pymongo.MongoClient('mongodb://douban:123456@localhost:27017/')

client = pymongo.MongoClient('mongodb://%s:%s@%s:%s/'%(mongo_account,mongo_password,mongo_host,mongo_port))

#获取数据表

mydb=client[db_name]

self.post = mydb[collection_name]

#数据入库

def process_item(self, item, spider):

#将字典数据转换为字典类型

data = dict(item)

self.post.insert(data)

return item # 必须实现返回

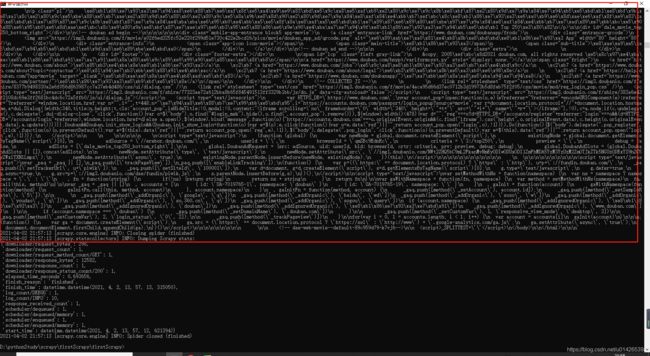

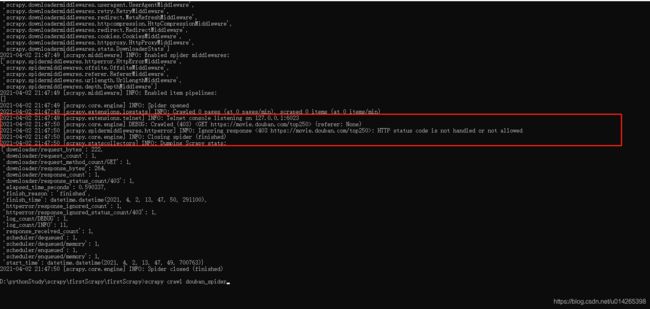

5.运行爬虫

scrapy crawl douban_spider

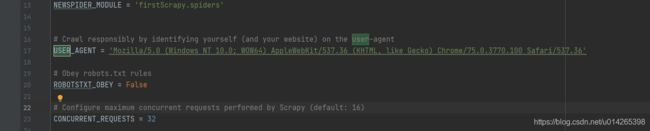

出现这个主要是settings.py 配置里面的user-aget没有配置,没有开启UA伪装

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'

6.在项目里新建一个main.py,方便爬虫运行

from scrapy import cmdline

cmdline.execute('scrapy crawl douban_spider'.split())

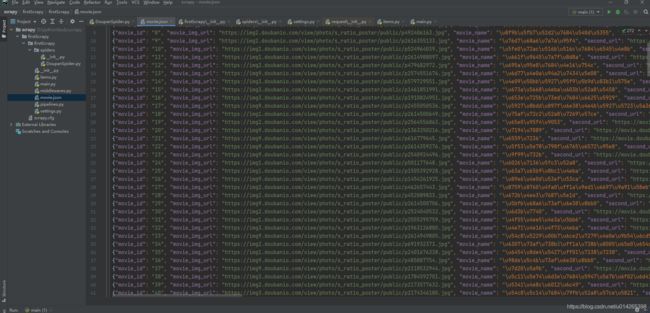

7.将豆瓣电影数据保存到json文件中

在项目根目录下执行如下命令

scrapy crawl douban_spider -o movie.json

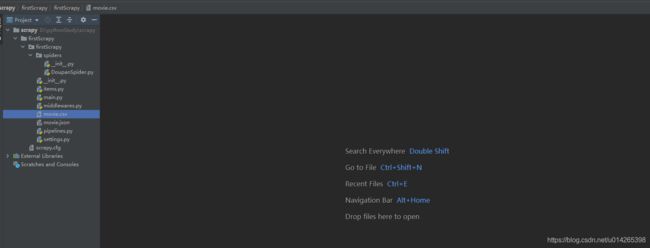

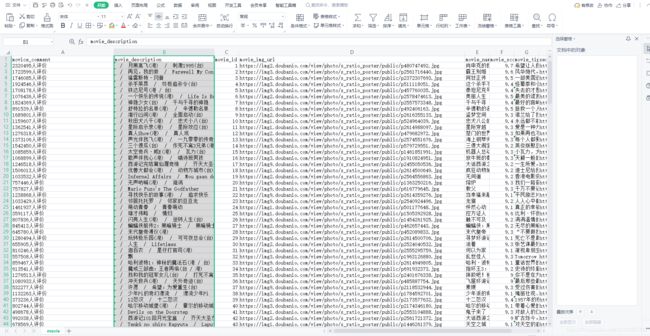

8.将电影数据保存到csv文件中

scrapy crawl douban_spider -o movie.csv

总结

当遇到要爬取多层网页的时候可以使用scrapy提供的方法传参,示例如下:

yield scrapy.Request(choice_url,callback=self.parse_other,dont_filter=True,meta={

'videoItem':videoItem,'firstResponse':firstResponse})

link 参数:爬取下个网页的url地址

callback:指定解析的回调函数

meta:可以把当前的页面的参数传递给下一个页面的解析方法,下一个页面在回调函数里面的response里面获取meta对象即可

def parse_other(self,response):

meta = dict(response.meta)

firstResponse = meta['firstResponse']