Flink实战(七十三):flink-sql使用(一)简介(一)入门

声明:本系列博客是根据SGG的视频整理而成,非常适合大家入门学习。

《2021年最新版大数据面试题全面开启更新》

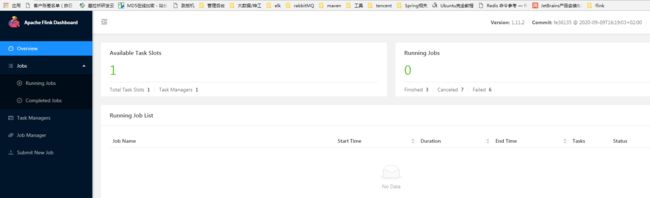

1. 启动flink-sql-client

1) 启动flink (1.10 版本)

sudo ./bin/start-cluster.sh

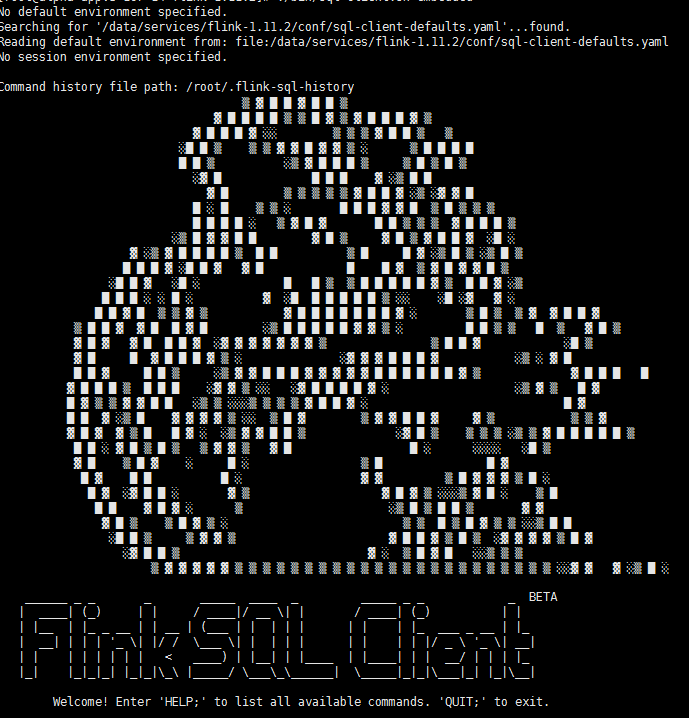

2) 启动 sql-client

sudo ./bin/sql-client.sh embedded

启动成功 会进入 flink sql> 命令行界面 ( 输入 quit; 退出)

2 基本用法

Flink SQL> SELECT name, COUNT(*) AS cnt

FROM (VALUES ('Bob'), ('Alice'), ('Greg'), ('Bob')) AS nameTable(name)

GROUP BY name;

3 连接kafka

3.1 增加扩展包(默认的只支持csv,file等文件系统)

flink-json-1.10.2.jar (https://repo1.maven.org/maven2/org/apache/flink/flink-json/1.10.2/flink-json-1.10.2.jar)

flink-sql-connector-kafka_2.11-1.10.2.jar (https://repo1.maven.org/maven2/org/apache/flink/flink-sql-connector-kafka_2.11/1.10.2/flink-sql-connector-kafka_2.11-1.10.2.jar)

将jar包flink 文件夹下的lib文件夹下

3.2 启动sql-client

./bin/sql-client.sh embedded -l lib/

-l,--library

3.3 创建 kafka Table

![]()

CREATE TABLE CustomerStatusChangedEvent(customerId int,

oStatus int,

nStatus int)with('connector.type' = 'kafka',

'connector.version' = 'universal',

'connector.properties.group.id' = 'g2.group1',

'connector.properties.bootstrap.servers' = '192.168.1.85:9092,192.168.1.86:9092',

'connector.properties.zookeeper.connect' = '192.168.1.85:2181',

'connector.topic' = 'customer_statusChangedEvent',

'connector.startup-mode' = 'earliest-offset',

'format.type' = 'json');

![]()

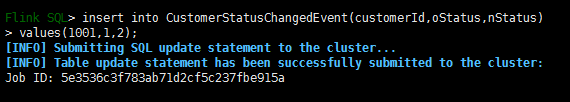

3.4 插入数据

insert into CustomerStatusChangedEvent(customerId,oStatus,nStatus) values(1001,1,2),(1002,10,2),(1003,1,20);

3.4 执行查询

select * from CustomerStatusChangedEvent ;