Installing and Deploying Hive


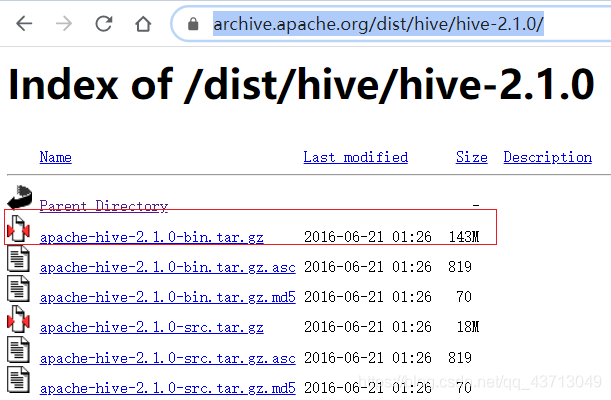

I. Download, Upload, and Extract the Hive Package

1. Download Hive and the MySQL connector driver

Hive download link

MySQL connector download link

Hive version used here: apache-hive-2.1.0-bin.tar.gz

MySQL connector version: mysql-connector-java-5.1.38.jar

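2. Upload the downloaded Hive package and the MySQL driver to the Linux machine

#1. Change to the upload directory
cd /export/software/
#2. Upload the files (rz is provided by the lrzsz package)
rz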

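3. Extract the Hive package to the target location

#1. Extract the package into /export/server/
tar -zxvf apache-hive-2.1.0-bin.tar.gz -C /export/server/
#2. Change to the target directory
cd /export/server/
#3. Rename the Hive directory
mv apache-hive-2.1.0-bin hive-2.1.0-bin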

II. Edit the Configuration Files

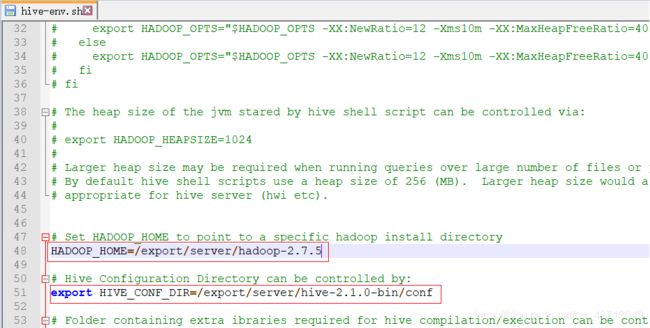

1. Edit hive-env.sh

#1. Change to the conf directory
cd /export/server/hive-2.1.0-bin/conf/
#2. Create hive-env.sh from the template
mv hive-env.sh.template hive-env.sh
#3. Edit line 48 of hive-env.sh to set HADOOP_HOME
HADOOP_HOME=/export/server/hadoop-2.7.5
#4. Edit line 51 of hive-env.sh to set HIVE_CONF_DIR
export HIVE_CONF_DIR=/export/server/hive-2.1.0-bin/conf

2. Put the provided hive-site.xml into the conf directory and edit it

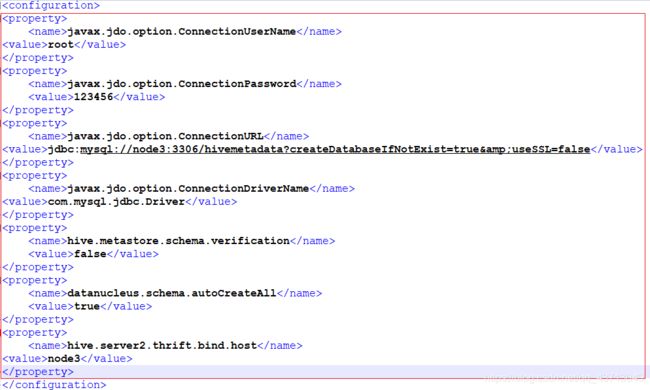

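The provided hive-site.xml should contain the following properties. Add them inside its <configuration> element:

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://node3:3306/hivemetadata?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.schema.autoCreateAll</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>node3</value>
  </property>
</configuration>

Note that the & in the JDBC URL must be written as &amp; inside the XML file.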
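Before initializing the metastore, you may want to sanity-check the edited file. A small Python script can parse hive-site.xml with the standard library's xml.etree.ElementTree and list which of the properties above are missing. This is a minimal sketch. The default path is the one used in this article. The helper names read_hive_site and missing_properties are hypothetical. The script also shows why the URL needs &amp;: a bare & makes the file fail to parse.

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path

# Path used in this article; pass another path as the first argument if needed
DEFAULT_PATH = "/export/server/hive-2.1.0-bin/conf/hive-site.xml"

# Properties this article adds to hive-site.xml
REQUIRED = [
    "javax.jdo.option.ConnectionUserName",
    "javax.jdo.option.ConnectionPassword",
    "javax.jdo.option.ConnectionURL",
    "javax.jdo.option.ConnectionDriverName",
    "hive.metastore.schema.verification",
    "datanucleus.schema.autoCreateAll",
    "hive.server2.thrift.bind.host",
]


def read_hive_site(xml_data):
    """Return {name: value} for every <property> directly under the root element.

    Accepts str or bytes; raises ET.ParseError on malformed XML (e.g. a bare &).
    """
    root = ET.fromstring(xml_data)
    props = {}
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props


def missing_properties(props):
    """Return the required property names that are not set."""
    return [name for name in REQUIRED if name not in props]


if __name__ == "__main__":
    path = Path(sys.argv[1] if len(sys.argv) > 1 else DEFAULT_PATH)
    if not path.is_file():
        print(f"{path} not found")
    else:
        # Read bytes so the XML declaration's encoding is honored by the parser
        missing = missing_properties(read_hive_site(path.read_bytes()))
        print("missing properties:", ", ".join(missing) if missing else "none")
```

Run it on the Hive node (for example `python3 check_hive_site.py`) after copying the file into conf/.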

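3. Copy the MySQL connector driver into Hive's lib directory

#1. Copy mysql-connector-java-5.1.38.jar into Hive's lib directory
cp /export/software/mysql-connector-java-5.1.38.jar /export/server/hive-2.1.0-bin/lib/
#2. Change to the Hive installation directory
cd /export/server/hive-2.1.0-bin/
#3. Check that the jar was copied
ls -l lib/ | grep mysql-connector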

4. Configure the Hive environment variables

#1. Edit /etc/profile
vim /etc/profile
#2. Append the following lines
#HIVE_HOME
export HIVE_HOME=/export/server/hive-2.1.0-bin
export PATH=$PATH:$HIVE_HOME/bin
#3. Reload the environment variables
source /etc/profile

5. Start HDFS and YARN

#1. Start HDFS
start-dfs.sh
#2. Start YARN
start-yarn.sh

III. Start Hive

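1. Create the HDFS directories

#1. Create the storage directory for the data of all Hive tables
hdfs dfs -mkdir -p /user/hive/warehouse
#2. Make /tmp group-writable (create it first in case it does not exist yet)
hdfs dfs -mkdir -p /tmp
hdfs dfs -chmod g+w /tmp
#3. Make the warehouse directory group-writable
hdfs dfs -chmod g+w /user/hive/warehouse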

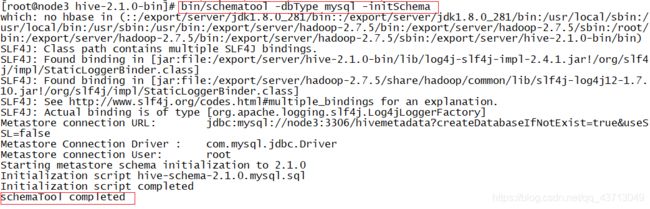

2. Initialize the Hive metastore schema

#1. Change to the Hive installation directory
cd /export/server/hive-2.1.0-bin/
#2. Initialize the metastore schema in MySQL
bin/schematool -dbType mysql -initSchema

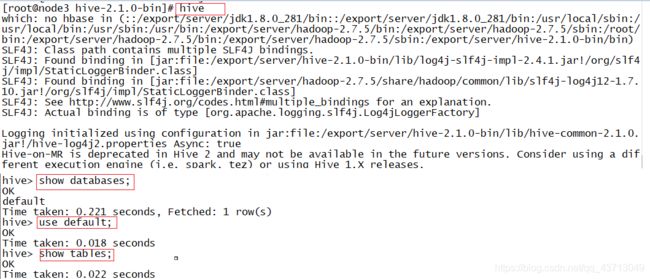

3. Start Hive

#1. Start the Hive CLI
hive
#2. List all databases
show databases;
#3. Switch to the default database
use default;
#4. List all tables in the current database
show tables;

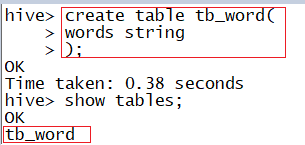

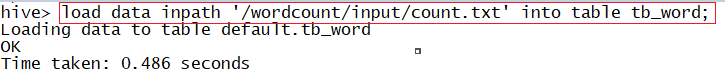

IV. Example: WordCount in Hive

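1. Create a table with a single string column. Each line of the input file becomes one row:

create table tb_word(
  words string
);

2. Load the data file from HDFS into the table. Without LOCAL, LOAD DATA INPATH moves the file into the table's warehouse directory:

load data inpath '/wordcount/input/count.txt' into table tb_word;

3. Split each line on spaces and explode the resulting array into one word per row:

create table tb_word2 as select explode(split(words, " ")) as word from tb_word;

4. Count each word and sort by frequency in descending order:

select word, count(*) as numb from tb_word2 group by word order by numb desc;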
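To see what the three HiveQL steps compute without a cluster, the pipeline can be emulated in plain Python:

- split plus explode yields one row per token;
- GROUP BY with count(*) tallies the tokens;
- ORDER BY numb DESC sorts the result.

This is a sketch, not Hive itself. The function name hive_style_wordcount and the sample lines are hypothetical. Ties are broken alphabetically here only to make the output deterministic, since Hive does not guarantee an order among equal counts.

```python
from collections import Counter


def hive_style_wordcount(lines):
    """Emulate explode(split(words, " ")) + GROUP BY word + ORDER BY numb DESC.

    Each element of `lines` plays the role of one row of tb_word.
    """
    # split(words, " ") + explode(): one output row per space-separated token
    words = [token for line in lines for token in line.split(" ")]
    # GROUP BY word with count(*)
    counts = Counter(words)
    # ORDER BY numb DESC; alphabetical tie-break is for determinism only
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


if __name__ == "__main__":
    # Hypothetical lines standing in for count.txt
    sample = ["hello world", "hello hive", "hive hadoop hello"]
    for word, numb in hive_style_wordcount(sample):
        print(word, numb)
```

Consecutive spaces between words produce an empty-string "word", as they do with split(words, " ") in Hive. A regular expression such as '\\s+' as the split pattern avoids that.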

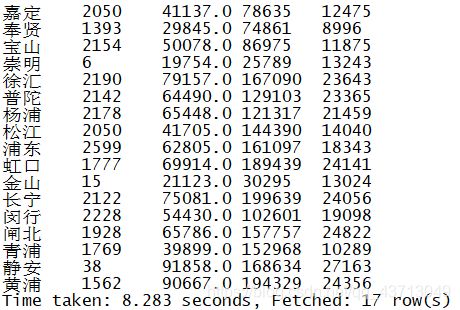

V. Example: Second-Hand Housing Statistics in Hive

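1. Create the table. Fields are comma-separated. The column names are pinyin: xiaoqu = residential complex, huxing = floor plan, fangxiang = orientation. t_price and s_price presumably hold the total price and the price per unit area.

create table tb_house(
  xiaoqu string,
  huxing string,
  area double,
  region string,
  floor string,
  fangxiang string,
  t_price int,
  s_price int,
  buildinfo string
) row format delimited fields terminated by ',';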
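With "fields terminated by ','", each line is cut at every comma and the pieces are assigned to the columns by position. The sketch below approximates that mapping in Python, assuming Hive's default text SerDe behavior:

- numbers that fail to parse or overflow INT become NULL (None);
- missing trailing fields become NULL;
- extra fields are ignored;
- the default marker \N means NULL.

It also shows that CSV quoting is not understood: a quoted value containing a comma shifts every later column. COLUMNS, parse_row and the sample lines are hypothetical, and edge cases may differ from Hive.

```python
# Column order and rough Python types mirroring tb_house (assumed approximation)
COLUMNS = [
    ("xiaoqu", str), ("huxing", str), ("area", float), ("region", str),
    ("floor", str), ("fangxiang", str), ("t_price", int), ("s_price", int),
    ("buildinfo", str),
]
INT_MIN, INT_MAX = -2**31, 2**31 - 1  # range of Hive INT


def _cast(text, kind):
    """Convert one field, returning None where Hive would produce NULL."""
    if text == r"\N":  # default NULL marker in delimited text tables
        return None
    if kind is str:
        return text
    try:
        value = kind(text)
    except ValueError:
        return None  # unparseable number -> NULL
    if kind is int and not INT_MIN <= value <= INT_MAX:
        return None  # out of range for INT -> NULL
    return value


def parse_row(line):
    """Map one comma-delimited line onto the tb_house columns by position."""
    fields = line.split(",")  # plain split: no CSV quote handling
    return {
        name: _cast(fields[i], kind) if i < len(fields) else None
        for i, (name, kind) in enumerate(COLUMNS)
    }


if __name__ == "__main__":
    print(parse_row("Sunshine,2BR,89.5,Pudong,middle,south,500,55865,2005"))
    print(parse_row('"A,B",2BR,abc'))  # quoted comma shifts the columns
```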

2. Load the local file into tb_house

load data local inpath '/export/data/secondhouse.csv' into table tb_house;

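3. Analyze with SQL: for each region, count the listings and compute the average (rounded to an integer), maximum, and minimum s_price

select
  region,
  count(*) as numb,
  round(avg(s_price), 0) as avgprice,
  max(s_price) as maxprice,
  min(s_price) as minprice
from tb_house
group by region;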
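The aggregation can be cross-checked on a small sample in Python. This is a minimal sketch under a few assumptions:

- count(*) counts every row, while avg, max and min skip NULL prices.
- Hive's round() rounds halves away from zero. Python's built-in round() rounds half to even (round(10.5) == 10), so the sketch uses Decimal with ROUND_HALF_UP instead.

region_stats, round_half_up and the sample rows are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(x):
    """Round to 0 decimal places, halves away from zero (like Hive's round)."""
    return float(Decimal(repr(x)).quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def region_stats(rows):
    """Emulate the GROUP BY region query over dicts with 'region' and 's_price'."""
    groups = {}
    for row in rows:
        groups.setdefault(row["region"], []).append(row["s_price"])
    result = {}
    for region, prices in groups.items():
        known = [p for p in prices if p is not None]  # avg/max/min ignore NULLs
        result[region] = {
            "numb": len(prices),  # count(*) counts every row, NULL or not
            "avgprice": round_half_up(sum(known) / len(known)) if known else None,
            "maxprice": max(known) if known else None,
            "minprice": min(known) if known else None,
        }
    return result


if __name__ == "__main__":
    # Hypothetical rows; None stands for a NULL s_price
    sample = [
        {"region": "A", "s_price": 10},
        {"region": "A", "s_price": 11},
        {"region": "A", "s_price": None},
        {"region": "B", "s_price": 7},
    ]
    for region, stats in region_stats(sample).items():
        print(region, stats)
```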