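I originally tried this in a Maven project, but I could never figure out which jar was missing. In the end I had no choice but to import all of the jars at once.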

- IDEA

- Hadoop 2.9.0

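Prerequisites:

- Install the JDK and configure its environment variables.

- Extract Hadoop 2.9.0 to a directory of your choice and configure its environment variables:

`HADOOP_HOME=C:\Work\hadoop-2.9.0`

`HADOOP_BIN_PATH=%HADOOP_HOME%\bin`

`HADOOP_PREFIX=C:\Work\hadoop-2.9.0`

In addition, append `;%HADOOP_HOME%\bin` to the end of the `PATH` variable.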
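A quick way to confirm that these variables are actually visible to Java is a small check program. This is a minimal sketch; `HadoopEnvCheck` and its methods are hypothetical helpers, not part of Hadoop. It looks at the `hadoop.home.dir` system property first and `HADOOP_HOME` second (as far as I know, Hadoop 2.x's own `Shell` class uses the same order), then checks whether `%HADOOP_HOME%\bin` is on `PATH`. The case-insensitive comparison assumes Windows paths.

```java
import java.io.File;
import java.nio.file.Paths;
import java.util.regex.Pattern;

/** Hypothetical helper: sanity-checks the Hadoop environment settings on Windows. */
public class HadoopEnvCheck {

    /** Prefers the hadoop.home.dir system property, falls back to HADOOP_HOME, else null. */
    static String resolveHadoopHome(String sysProp, String envVar) {
        if (sysProp != null && !sysProp.isEmpty()) {
            return sysProp;
        }
        if (envVar != null && !envVar.isEmpty()) {
            return envVar;
        }
        return null;
    }

    /** Removes trailing '\' or '/' so "C:\x\bin\" and "C:\x\bin" compare equal. */
    static String stripTrailingSeparator(String s) {
        String result = s;
        while (result.endsWith("\\") || result.endsWith("/")) {
            result = result.substring(0, result.length() - 1);
        }
        return result;
    }

    /** True if one PATH entry matches binDir (case-insensitive, as on Windows). */
    static boolean pathContains(String pathVar, String separator, String binDir) {
        if (pathVar == null || binDir == null) {
            return false;
        }
        String target = stripTrailingSeparator(binDir);
        for (String entry : pathVar.split(Pattern.quote(separator))) {
            if (stripTrailingSeparator(entry.trim()).equalsIgnoreCase(target)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome(System.getProperty("hadoop.home.dir"), System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set.");
            return;
        }
        String bin = Paths.get(home, "bin").toString();
        System.out.println("Hadoop home: " + home);
        System.out.println(bin + " on PATH: " + pathContains(System.getenv("PATH"), File.pathSeparator, bin));
    }
}
```

Restart IDEA after changing the variables: a process that is already running does not pick up new environment values.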

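You also need the `hadoop.dll` and `winutils.exe` that match your Hadoop version.

Download: https://github.com/steveloughran/winutils

For Hadoop 2.9.0, the 3.0 build from that repository works.

Copy them into `C:\Windows\System32` and into `%HADOOP_HOME%\bin`.

Set up the cluster (test environment for this article: Windows 10 + VMware).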

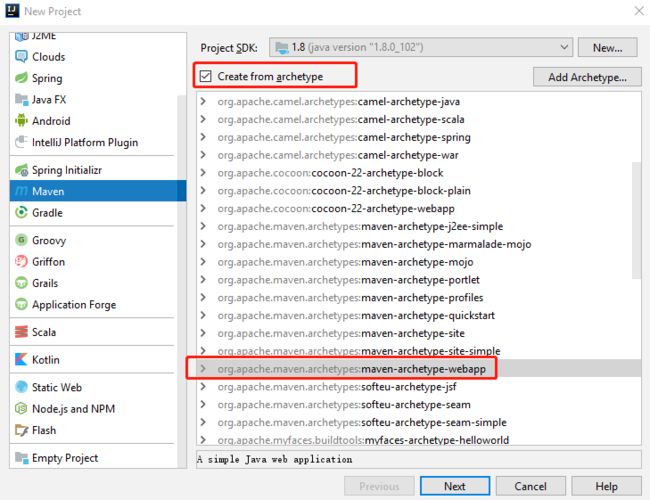

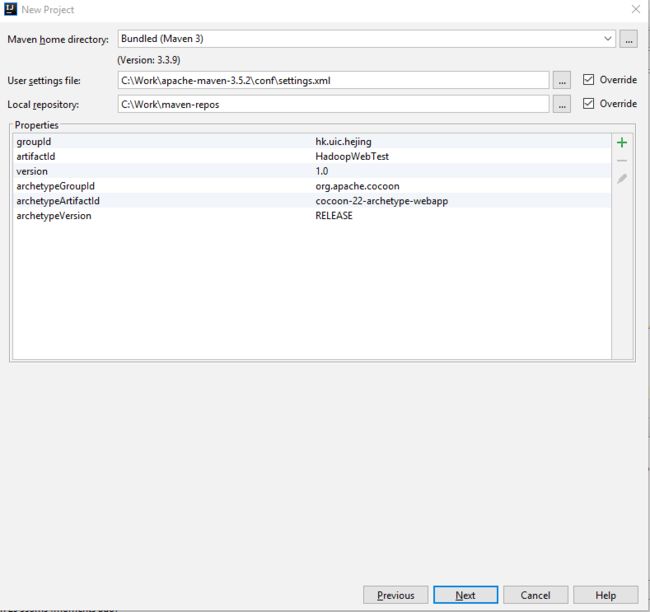

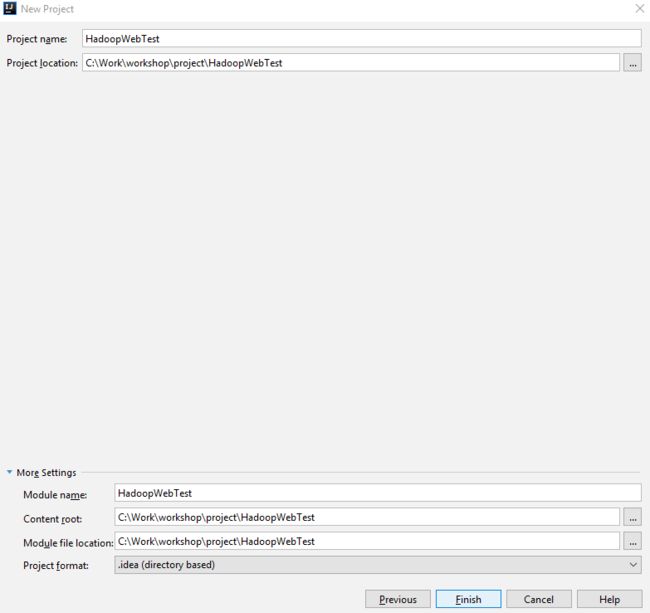

Create a Maven webapp project in IDEA.

Fill in the basic project information to suit your own setup.

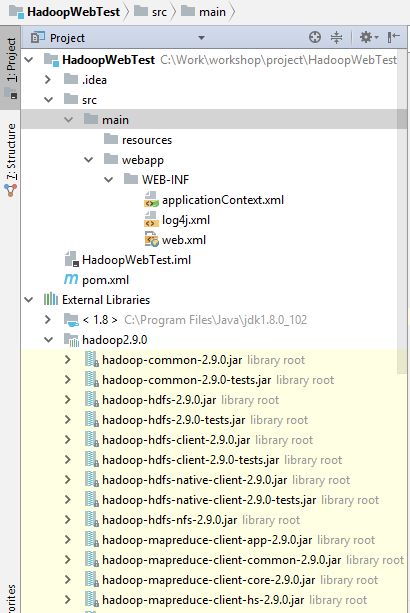

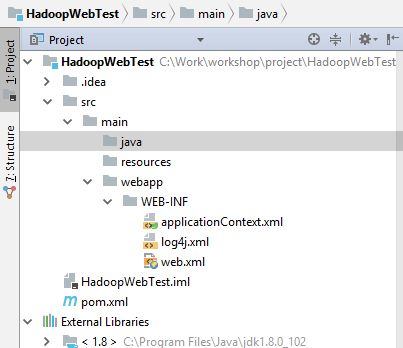

The generated directory structure is shown below.

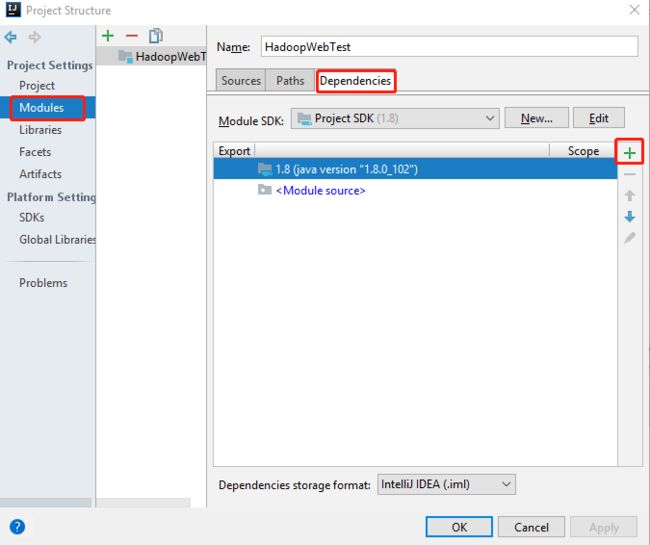

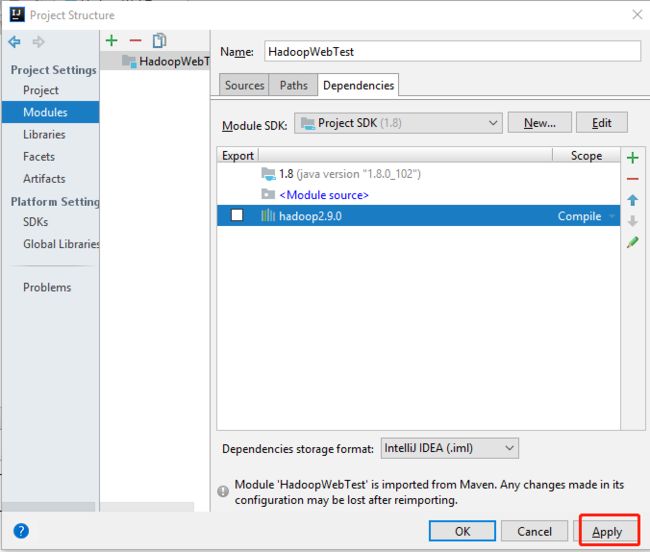

Add the jars Hadoop needs (o(╥﹏╥)o here I just import all of them at once...).

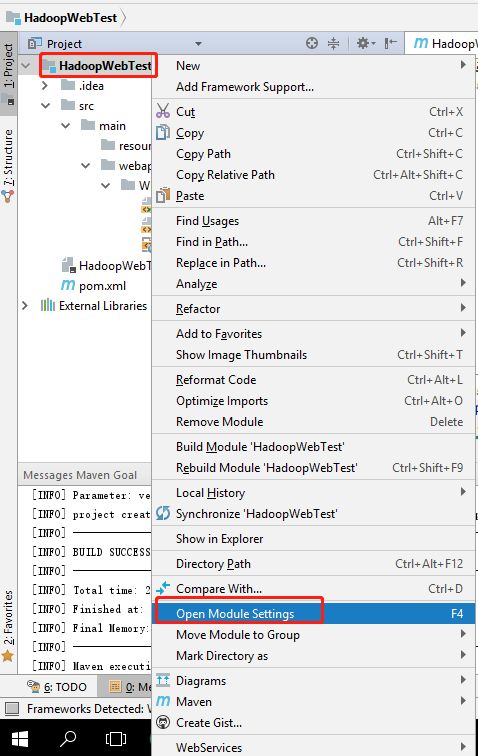

Right-click the project and choose Open Module Settings, or press F4, to open the Project Structure dialog.

Click '+' and choose JARs or directories.

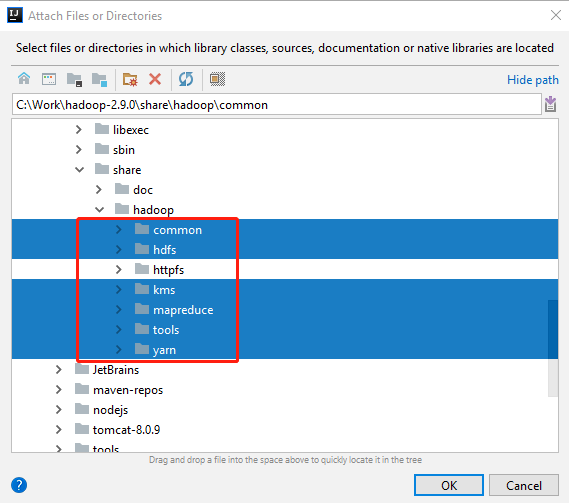

Locate the required jars in the local Hadoop 2.9.0 directory, under `C:\Work\hadoop-2.9.0\share\hadoop\`.

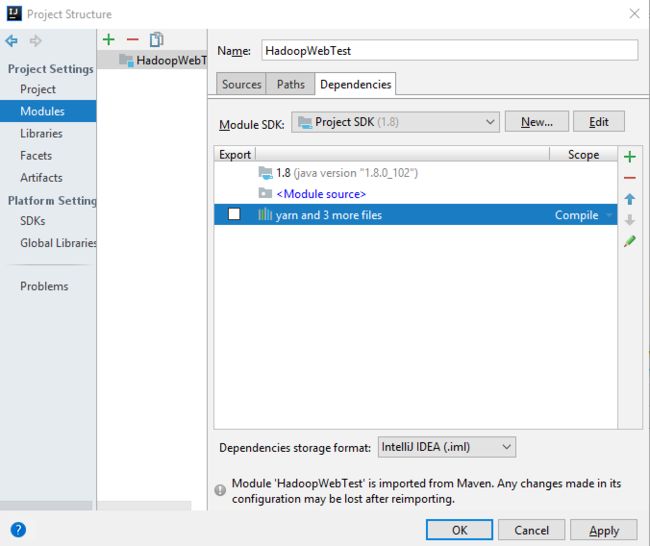

The result:

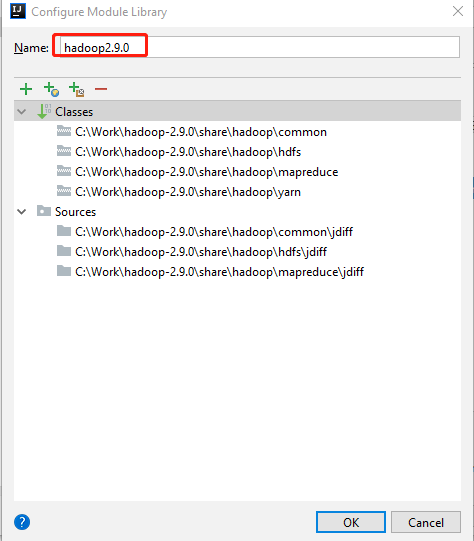

Give the library a name so it is easier to manage.

That adds all of the jars.
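"All the jars" is easy to get subtly wrong: besides the top-level jars, folders such as `common`, `hdfs`, `mapreduce`, and `yarn` under `share\hadoop` each have a `lib` subdirectory holding third-party jars, and those are easy to leave out when picking directories. A minimal sketch, assuming the install path used in this article (`HadoopJarLister` is a hypothetical name), that lists every jar recursively and counts how many sit in `lib` directories:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical helper: lists all jars below a Hadoop share directory. */
public class HadoopJarLister {

    /** Returns every .jar file under root, including nested lib directories, sorted. */
    static List<Path> findJars(Path root) throws IOException {
        try (Stream<Path> paths = Files.walk(root)) {
            return paths
                    .filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".jar"))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    /** Counts the jars whose parent directory is named "lib". */
    static long countLibJars(List<Path> jars) {
        return jars.stream()
                .map(Path::getParent)
                .filter(dir -> dir != null && dir.getFileName() != null
                        && dir.getFileName().toString().equals("lib"))
                .count();
    }

    public static void main(String[] args) throws IOException {
        // Assumed default from this article; pass another directory as the first argument.
        Path root = Paths.get(args.length > 0 ? args[0] : "C:\\Work\\hadoop-2.9.0\\share\\hadoop");
        List<Path> jars = findJars(root);
        jars.forEach(System.out::println);
        System.out.println(jars.size() + " jars in total, " + countLibJars(jars) + " of them in lib directories");
    }
}
```

Compare the listing with what the library in Project Structure actually contains.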

Now write the MapReduce program.

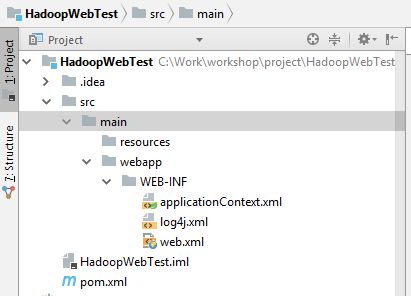

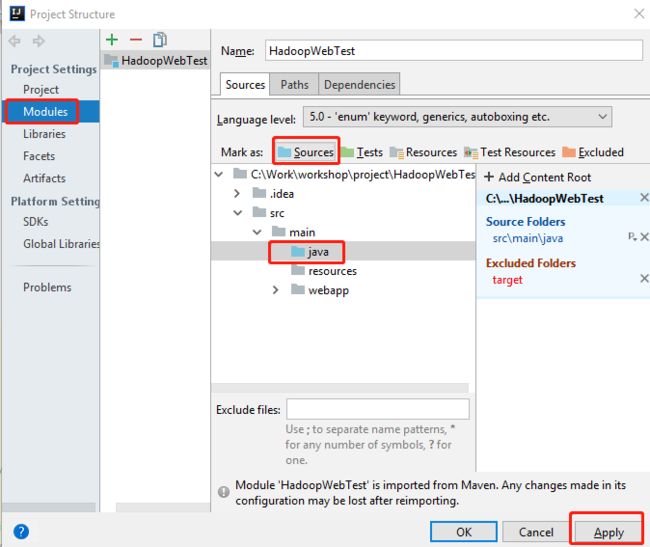

此时文件结构是没有 存放代码的 Sources 文件夹。

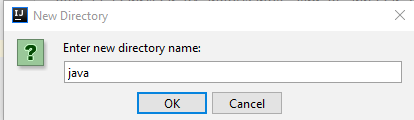

鼠标右键 main -> New -> Directory

此时的 java 仅仅是个文件夹,还需要设置它为 Sources

鼠标右键项目,选择 Open Module Settings 或者快键键 F4

打开Project Structure界面。

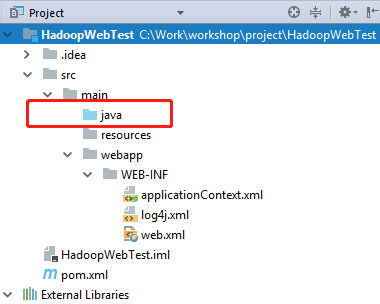

Once it is marked as Sources, the java folder turns blue.

`PartitionerApp.java`:

```java
package hk.uic.hadoop.testweb;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Partitioner;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class PartitionerApp {

    /**
     * Map: reads the input file.
     * Input key    LongWritable: byte offset of the line
     * Input value  Text: one line of text
     * Output key   Text: the word, e.g. "home" in the line "home 1"
     * Output value LongWritable: the number; a key's values reach the reducer as e.g. {1, 1, 1, 1, 1}
     */
    public static class MyMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // One line of input
            String line = value.toString();
            // Split on the delimiter (a single space)
            String[] words = line.split(" ");
            context.write(new Text(words[0]), new LongWritable(Long.parseLong(words[1])));
        }
    }

    /**
     * Reduce: merge step.
     */
    public static class MyReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
            long sum = 0;
            for (LongWritable value : values) {
                // Add up the counts for this key
                sum += value.get();
            }
            // Emit the final total
            context.write(key, new LongWritable(sum));
        }
    }

    /**
     * Partitioner: Apple, Orange and Pear each get their own reducer; everything else goes to reducer 3.
     */
    public static class MyPartitioner extends Partitioner<Text, LongWritable> {

        @Override
        public int getPartition(Text key, LongWritable value, int numPartitions) {
            String fruit = key.toString();
            if (fruit.equals("Apple")) {
                return 0;
            }
            if (fruit.equals("Orange")) {
                return 1;
            }
            if (fruit.equals("Pear")) {
                return 2;
            }
            return 3;
        }
    }

    /**
     * Driver: holds all the information about the MapReduce job.
     *
     * @param args input path and output path (overwritten below)
     */
    public static void main(String[] args) throws Exception {
        System.setProperty("hadoop.home.dir", "C:\\Work\\hadoop-2.9.0");

        // Too lazy to configure program arguments in IDEA, so they are hard-coded here
        args = new String[2];
        args[0] = "hdfs://192.168.19.128:8020/springhdfs/fruits.txt";
        args[1] = "hdfs://192.168.19.128:8020/output/fruits";

        // Create the Configuration
        Configuration configuration = new Configuration();
        configuration.set("fs.defaultFS", "hdfs://192.168.19.128:8020");

        // Delete the output directory if it already exists
        Path outputPath = new Path(args[1]);
        FileSystem fileSystem = FileSystem.get(configuration);
        if (fileSystem.exists(outputPath)) {
            fileSystem.delete(outputPath, true);
            System.out.println("outputPath: " + args[1] + " exists, but has been deleted.");
        }

        // Create the Job
        Job job = Job.getInstance(configuration, "wordcount");

        // Tell Hadoop which jar holds the job classes (located via this class)
        job.setJarByClass(PartitionerApp.class);

        // Input path, taken from the arguments
        FileInputFormat.setInputPaths(job, new Path(args[0]));

        // Map settings
        job.setMapperClass(MyMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);

        // Reduce settings
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        // Set the job's Partitioner
        job.setPartitionerClass(MyPartitioner.class);

        // Use 4 reducers, one per category
        job.setNumReduceTasks(4);

        // Output path
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
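To know what output to expect, the map, partition, and reduce logic can be simulated in plain Java without a cluster. This is only a sketch: `PartitionSimulation` is a hypothetical class, the sample lines are made up (the real contents of `fruits.txt` are not shown in this article), and a `TreeMap` stands in for the framework's shuffle and sort.

```java
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical, Hadoop-free sketch of what PartitionerApp computes. */
public class PartitionSimulation {

    /** Same routing rules as MyPartitioner.getPartition. */
    static int partitionFor(String key) {
        switch (key) {
            case "Apple":  return 0;
            case "Orange": return 1;
            case "Pear":   return 2;
            default:       return 3;
        }
    }

    /** Returns partition -> (key -> summed count), mimicking map + partition + reduce. */
    static Map<Integer, Map<String, Long>> simulate(String[] lines) {
        Map<Integer, Map<String, Long>> partitions = new TreeMap<>();
        for (String line : lines) {
            String[] words = line.split(" ");               // same parsing as MyMapper
            String key = words[0];
            long value = Long.parseLong(words[1]);
            partitions.computeIfAbsent(partitionFor(key), p -> new TreeMap<>())
                      .merge(key, value, Long::sum);         // same summing as MyReducer
        }
        return partitions;
    }

    public static void main(String[] args) {
        // Made-up sample input in the "name count" format MyMapper expects
        String[] sample = {"Apple 3", "Orange 2", "Apple 5", "Pear 1", "Banana 4"};
        simulate(sample).forEach((p, counts) ->
                System.out.println(String.format("part-r-%05d: %s", p, counts)));
    }
}
```

Because `MyPartitioner` returns 0 to 3, the driver asks for four reduce tasks, and each one writes its own `part-r-0000N` file; any fruit other than Apple, Orange, and Pear ends up in `part-r-00003`.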

If you try to run the code at this point, you may get an error like this:

```
"C:\Program Files\Java\jdk1.8.0_102\bin\java" "-javaagent:C:\Work\JetBrains\IntelliJ IDEA 2017.2.3\lib\idea_rt.jar=57859:C:\Work\JetBrains\IntelliJ IDEA 2017.2.3\bin" -Dfile.encoding=UTF-8 -classpath "C:\Program Files\Java\jdk1.8.0_102\jre\lib\charsets.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\deploy.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\cldrdata.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\dnsns.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\jaccess.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\jfxrt.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\localedata.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\nashorn.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunec.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunmscapi.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunpkcs11.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\zipfs.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\javaws.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jce.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jfr.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jfxswt.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jsse.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\management-agent.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\plugin.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\resources.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\rt.jar;C:\Work\workshop\project\HadoopWebTest\target\classes;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-ui-2.9.0.war;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-api-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-client-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-registry-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-tests-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-router-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-web-proxy-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-nodemanager-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-resourcemanager-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-sharedcachemanager-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-applications-distributedshell-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-timeline-pluginstorage-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-applicationhistoryservice-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-applications-unmanaged-am-launcher-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\common\hadoop-nfs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\common\hadoop-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\common\hadoop-common-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-nfs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-client-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-client-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-native-client-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-native-client-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-examples-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-app-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-core-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-shuffle-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-plugins-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.9.0-tests.jar" hk.uic.hadoop.testweb.PartitionerApp

Exception in thread "main" java.lang.NoClassDefFoundError: com/ctc/wstx/io/InputBootstrapper
	at hk.uic.hadoop.testweb.PartitionerApp.main(PartitionerApp.java:98)
Caused by: java.lang.ClassNotFoundException: com.ctc.wstx.io.InputBootstrapper
	at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
	... 1 more
```

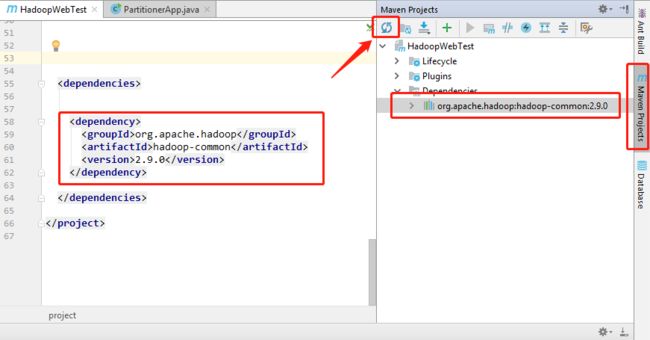

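I had clearly added all the jars already, so I couldn't tell why this error still appeared, or whether the Hadoop 2.9.0 package I had downloaded and extracted was at fault. I googled a lot of blog posts and still couldn't solve it. In the end, almost by accident, I added a hadoop-common dependency in Maven, and that fixed it. Comparing the classpath above with the one from the successful run, a likely explanation is that only the top-level jars of the common, hdfs, mapreduce, and yarn folders had been added, not the third-party jars in their lib subdirectories: `com.ctc.wstx` is the package of the Woodstox XML parser, and the successful run picks it up through Maven as `woodstox-core-5.0.3.jar`.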

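In `pom.xml`:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <packaging>war</packaging>

    <name>HadoopWebTest</name>
    <groupId>hk.uic.hejing</groupId>
    <artifactId>HadoopWebTest</artifactId>
    <version>1.0</version>

    <build>
        <plugins>
            <plugin>
                <groupId>org.mortbay.jetty</groupId>
                <artifactId>maven-jetty-plugin</artifactId>
                <version>6.1.7</version>
                <configuration>
                    <connectors>
                        <connector implementation="org.mortbay.jetty.nio.SelectChannelConnector">
                            <port>8888</port>
                            <maxIdleTime>30000</maxIdleTime>
                        </connector>
                    </connectors>
                    <webAppSourceDirectory>${project.build.directory}/${pom.artifactId}-${pom.version}</webAppSourceDirectory>
                    <contextPath>/</contextPath>
                </configuration>
            </plugin>
        </plugins>
    </build>

    <dependencies>
        <!-- The dependency that made the NoClassDefFoundError go away -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.9.0</version>
        </dependency>
    </dependencies>
</project>
```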
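Since the error names a class rather than a jar, another way to narrow it down is to ask the classpath where (or whether) that class can be found. This is a minimal diagnostic sketch; `ClasspathProbe` is a hypothetical helper. Run it from the same IDEA module so it sees the same classpath as `PartitionerApp`; by default it looks up the class from the stack trace above and Hadoop's `Configuration`.

```java
import java.net.URL;

/** Hypothetical diagnostic: reports where (if anywhere) a class is found on the classpath. */
public class ClasspathProbe {

    /** Returns the URL of the class file, or null if the class is not on the classpath. */
    static URL locate(String className) {
        String resource = className.replace('.', '/') + ".class";
        // Looks the .class file up as a resource, so the class itself is never initialized
        return ClasspathProbe.class.getClassLoader().getResource(resource);
    }

    public static void main(String[] args) {
        String[] names = args.length > 0 ? args
                : new String[] {"com.ctc.wstx.io.InputBootstrapper",
                                "org.apache.hadoop.conf.Configuration"};
        for (String name : names) {
            URL where = locate(name);
            System.out.println(name + " -> " + (where == null ? "NOT FOUND" : where));
        }
    }
}
```

If `com.ctc.wstx.io.InputBootstrapper` prints `NOT FOUND`, the dependency has not reached the run classpath yet; once it resolves, the printed URL shows which jar supplies the class.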

Once Maven has refreshed and imported the dependency, run the program again.

```
"C:\Program Files\Java\jdk1.8.0_102\bin\java" "-javaagent:C:\Work\JetBrains\IntelliJ IDEA 2017.2.3\lib\idea_rt.jar=58143:C:\Work\JetBrains\IntelliJ IDEA 2017.2.3\bin" -Dfile.encoding=UTF-8 -classpath "C:\Program Files\Java\jdk1.8.0_102\jre\lib\charsets.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\deploy.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\cldrdata.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\dnsns.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\jaccess.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\jfxrt.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\localedata.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\nashorn.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunec.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunmscapi.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\sunpkcs11.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\ext\zipfs.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\javaws.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jce.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jfr.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jfxswt.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\jsse.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\management-agent.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\plugin.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\resources.jar;C:\Program Files\Java\jdk1.8.0_102\jre\lib\rt.jar;C:\Work\workshop\project\HadoopWebTest\target\classes;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-ui-2.9.0.war;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-api-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-client-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-registry-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-tests-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-router-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-web-proxy-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-nodemanager-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-resourcemanager-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-sharedcachemanager-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-applications-distributedshell-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-timeline-pluginstorage-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-server-applicationhistoryservice-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\yarn\hadoop-yarn-applications-unmanaged-am-launcher-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\common\hadoop-nfs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\common\hadoop-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\common\hadoop-common-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-nfs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-client-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-client-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-native-client-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\hdfs\hadoop-hdfs-native-client-2.9.0-tests.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-examples-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-app-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-core-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-common-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-shuffle-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-hs-plugins-2.9.0.jar;C:\Work\hadoop-2.9.0\share\hadoop\mapreduce\hadoop-mapreduce-client-jobclient-2.9.0-tests.jar;C:\Work\maven-repos\org\apache\hadoop\hadoop-common\2.9.0\hadoop-common-2.9.0.jar;C:\Work\maven-repos\org\apache\hadoop\hadoop-annotations\2.9.0\hadoop-annotations-2.9.0.jar;C:\Program Files\Java\jdk1.8.0_102\lib\tools.jar;C:\Work\maven-repos\com\google\guava\guava\11.0.2\guava-11.0.2.jar;C:\Work\maven-repos\commons-cli\commons-cli\1.2\commons-cli-1.2.jar;C:\Work\maven-repos\org\apache\commons\commons-math3\3.1.1\commons-math3-3.1.1.jar;C:\Work\maven-repos\xmlenc\xmlenc\0.52\xmlenc-0.52.jar;C:\Work\maven-repos\org\apache\httpcomponents\httpclient\4.5.2\httpclient-4.5.2.jar;C:\Work\maven-repos\org\apache\httpcomponents\httpcore\4.4.4\httpcore-4.4.4.jar;C:\Work\maven-repos\commons-codec\commons-codec\1.4\commons-codec-1.4.jar;C:\Work\maven-repos\commons-io\commons-io\2.4\commons-io-2.4.jar;C:\Work\maven-repos\commons-net\commons-net\3.1\commons-net-3.1.jar;C:\Work\maven-repos\commons-collections\commons-collections\3.2.2\commons-collections-3.2.2.jar;C:\Work\maven-repos\javax\servlet\servlet-api\2.5\servlet-api-2.5.jar;C:\Work\maven-repos\org\mortbay\jetty\jetty\6.1.26\jetty-6.1.26.jar;C:\Work\maven-repos\org\mortbay\jetty\jetty-util\6.1.26\jetty-util-6.1.26.jar;C:\Work\maven-repos\org\mortbay\jetty\jetty-sslengine\6.1.26\jetty-sslengine-6.1.26.jar;C:\Work\maven-repos\javax\servlet\jsp\jsp-api\2.1\jsp-api-2.1.jar;C:\Work\maven-repos\com\sun\jersey\jersey-core\1.9\jersey-core-1.9.jar;C:\Work\maven-repos\com\sun\jersey\jersey-json\1.9\jersey-json-1.9.jar;C:\Work\maven-repos\org\codehaus\jettison\jettison\1.1\jettison-1.1.jar;C:\Work\maven-repos\com\sun\xml\bind\jaxb-impl\2.2.3-1\jaxb-impl-2.2.3-1.jar;C:\Work\maven-repos\javax\xml\bind\jaxb-api\2.2.2\jaxb-api-2.2.2.jar;C:\Work\maven-repos\javax\xml\stream\stax-api\1.0-2\stax-api-1.0-2.jar;C:\Work\maven-repos\javax\activation\activation\1.1\activation-1.1.jar;C:\Work\maven-repos\org\codehaus\jackson\jackson-jaxrs\1.8.3\jackson-jaxrs-1.8.3.jar;C:\Work\maven-repos\org\codehaus\jackson\jackson-xc\1.8.3\jackson-xc-1.8.3.jar;C:\Work\maven-repos\com\sun\jersey\jersey-server\1.9\jersey-server-1.9.jar;C:\Work\maven-repos\asm\asm\3.1\asm-3.1.jar;C:\Work\maven-repos\commons-logging\commons-logging\1.1.3\commons-logging-1.1.3.jar;C:\Work\maven-repos\log4j\log4j\1.2.17\log4j-1.2.17.jar;C:\Work\maven-repos\net\java\dev\jets3t\jets3t\0.9.0\jets3t-0.9.0.jar;C:\Work\maven-repos\com\jamesmurty\utils\java-xmlbuilder\0.4\java-xmlbuilder-0.4.jar;C:\Work\maven-repos\commons-lang\commons-lang\2.6\commons-lang-2.6.jar;C:\Work\maven-repos\commons-configuration\commons-configuration\1.6\commons-configuration-1.6.jar;C:\Work\maven-repos\commons-digester\commons-digester\1.8\commons-digester-1.8.jar;C:\Work\maven-repos\commons-beanutils\commons-beanutils\1.7.0\commons-beanutils-1.7.0.jar;C:\Work\maven-repos\commons-beanutils\commons-beanutils-core\1.8.0\commons-beanutils-core-1.8.0.jar;C:\Work\maven-repos\org\apache\commons\commons-lang3\3.4\commons-lang3-3.4.jar;C:\Work\maven-repos\org\slf4j\slf4j-api\1.7.25\slf4j-api-1.7.25.jar;C:\Work\maven-repos\org\slf4j\slf4j-log4j12\1.7.25\slf4j-log4j12-1.7.25.jar;C:\Work\maven-repos\org\codehaus\jackson\jackson-core-asl\1.9.13\jackson-core-asl-1.9.13.jar;C:\Work\maven-repos\org\codehaus\jackson\jackson-mapper-asl\1.9.13\jackson-mapper-asl-1.9.13.jar;C:\Work\maven-repos\org\apache\avro\avro\1.7.7\avro-1.7.7.jar;C:\Work\maven-repos\com\thoughtworks\paranamer\paranamer\2.3\paranamer-2.3.jar;C:\Work\maven-repos\org\xerial\snappy\snappy-java\1.0.5\snappy-java-1.0.5.jar;C:\Work\maven-repos\com\google\protobuf\protobuf-java\2.5.0\protobuf-java-2.5.0.jar;C:\Work\maven-repos\com\google\code\gson\gson\2.2.4\gson-2.2.4.jar;C:\Work\maven-repos\org\apache\hadoop\hadoop-auth\2.9.0\hadoop-auth-2.9.0.jar;C:\Work\maven-repos\com\nimbusds\nimbus-jose-jwt\3.9\nimbus-jose-jwt-3.9.jar;C:\Work\maven-repos\net\jcip\jcip-annotations\1.0\jcip-annotations-1.0.jar;C:\Work\maven-repos\net\minidev\json-smart\1.1.1\json-smart-1.1.1.jar;C:\Work\maven-repos\org\apache\directory\server\apacheds-kerberos-codec\2.0.0-M15\apacheds-kerberos-codec-2.0.0-M15.jar;C:\Work\maven-repos\org\apache\directory\server\apacheds-i18n\2.0.0-M15\apacheds-i18n-2.0.0-M15.jar;C:\Work\maven-repos\org\apache\directory\api\api-asn1-api\1.0.0-M20\api-asn1-api-1.0.0-M20.jar;C:\Work\maven-repos\org\apache\directory\api\api-util\1.0.0-M20\api-util-1.0.0-M20.jar;C:\Work\maven-repos\org\apache\curator\curator-framework\2.7.1\curator-framework-2.7.1.jar;C:\Work\maven-repos\com\jcraft\jsch\0.1.54\jsch-0.1.54.jar;C:\Work\maven-repos\org\apache\curator\curator-client\2.7.1\curator-client-2.7.1.jar;C:\Work\maven-repos\org\apache\curator\curator-recipes\2.7.1\curator-recipes-2.7.1.jar;C:\Work\maven-repos\com\google\code\findbugs\jsr305\3.0.0\jsr305-3.0.0.jar;C:\Work\maven-repos\org\apache\htrace\htrace-core4\4.1.0-incubating\htrace-core4-4.1.0-incubating.jar;C:\Work\maven-repos\org\apache\zookeeper\zookeeper\3.4.6\zookeeper-3.4.6.jar;C:\Work\maven-repos\io\netty\netty\3.7.0.Final\netty-3.7.0.Final.jar;C:\Work\maven-repos\org\apache\commons\commons-compress\1.4.1\commons-compress-1.4.1.jar;C:\Work\maven-repos\org\tukaani\xz\1.0\xz-1.0.jar;C:\Work\maven-repos\org\codehaus\woodstox\stax2-api\3.1.4\stax2-api-3.1.4.jar;C:\Work\maven-repos\com\fasterxml\woodstox\woodstox-core\5.0.3\woodstox-core-5.0.3.jar" hk.uic.hadoop.testweb.PartitionerApp
```

outputPath: hdfs://192.168.19.128:8020/output/fruits exists, but has been deleted.

2018-02-12 15:05:55,365 INFO [main] Configuration.deprecation (Configuration.java:logDeprecation(1297)) - session.id is deprecated. Instead, use dfs.metrics.session-id

2018-02-12 15:05:55,371 INFO [main] jvm.JvmMetrics (JvmMetrics.java:init(79)) - Initializing JVM Metrics with processName=JobTracker, sessionId=

2018-02-12 15:05:55,872 WARN [main] mapreduce.JobResourceUploader (JobResourceUploader.java:uploadResourcesInternal(142)) - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

2018-02-12 15:05:55,913 WARN [main] mapreduce.JobResourceUploader (JobResourceUploader.java:uploadJobJar(470)) - No job jar file set. User classes may not be found. See Job or Job#setJar(String).

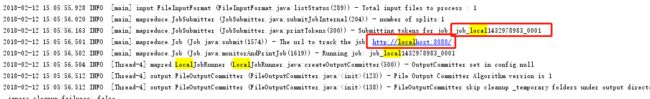

2018-02-12 15:05:55,928 INFO [main] input.FileInputFormat (FileInputFormat.java:listStatus(289)) - Total input files to process : 1

2018-02-12 15:05:56,020 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:submitJobInternal(204)) - number of splits:1

2018-02-12 15:05:56,163 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:printTokens(300)) - Submitting tokens for job: job_local1432978983_0001

2018-02-12 15:05:56,501 INFO [main] mapreduce.Job (Job.java:submit(1574)) - The url to track the job: http://localhost:8080/

2018-02-12 15:05:56,502 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1619)) - Running job: job_local1432978983_0001

2018-02-12 15:05:56,504 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(500)) - OutputCommitter set in config null

2018-02-12 15:05:56,512 INFO [Thread-4] output.FileOutputCommitter (FileOutputCommitter.java:(123)) - File Output Committer Algorithm version is 1

2018-02-12 15:05:56,512 INFO [Thread-4] output.FileOutputCommitter (FileOutputCommitter.java:(138)) - FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2018-02-12 15:05:56,514 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(518)) - OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2018-02-12 15:05:56,568 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(477)) - Waiting for map tasks

2018-02-12 15:05:56,569 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:run(251)) - Starting task: attempt_local1432978983_0001_m_000000_0

2018-02-12 15:05:56,606 INFO [LocalJobRunner Map Task Executor #0] output.FileOutputCommitter (FileOutputCommitter.java:(123)) - File Output Committer Algorithm version is 1

2018-02-12 15:05:56,608 INFO [LocalJobRunner Map Task Executor #0] output.FileOutputCommitter (FileOutputCommitter.java:(138)) - FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2018-02-12 15:05:56,624 INFO [LocalJobRunner Map Task Executor #0] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(168)) - ProcfsBasedProcessTree currently is supported only on Linux.

2018-02-12 15:05:56,970 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:initialize(619)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@1a0bf416

2018-02-12 15:05:56,980 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:runNewMapper(762)) - Processing split: hdfs://192.168.19.128:8020/springhdfs/fruits.txt:0+69

2018-02-12 15:05:57,028 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:setEquator(1212)) - (EQUATOR) 0 kvi 26214396(104857584)

2018-02-12 15:05:57,028 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(1005)) - mapreduce.task.io.sort.mb: 100

2018-02-12 15:05:57,028 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(1006)) - soft limit at 83886080

2018-02-12 15:05:57,028 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(1007)) - bufstart = 0; bufvoid = 104857600

2018-02-12 15:05:57,028 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(1008)) - kvstart = 26214396; length = 6553600

2018-02-12 15:05:57,032 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:createSortingCollector(403)) - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2018-02-12 15:05:57,231 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) -

2018-02-12 15:05:57,234 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1469)) - Starting flush of map output

2018-02-12 15:05:57,234 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1491)) - Spilling map output

2018-02-12 15:05:57,235 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1492)) - bufstart = 0; bufend = 99; bufvoid = 104857600

2018-02-12 15:05:57,235 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1494)) - kvstart = 26214396(104857584); kvend = 26214372(104857488); length = 25/6553600

2018-02-12 15:05:57,352 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:sortAndSpill(1681)) - Finished spill 0

2018-02-12 15:05:57,363 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:done(1099)) - Task:attempt_local1432978983_0001_m_000000_0 is done. And is in the process of committing

2018-02-12 15:05:57,376 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - map

2018-02-12 15:05:57,376 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:sendDone(1219)) - Task 'attempt_local1432978983_0001_m_000000_0' done.

2018-02-12 15:05:57,376 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:run(276)) - Finishing task: attempt_local1432978983_0001_m_000000_0

2018-02-12 15:05:57,377 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(485)) - map task executor complete.

2018-02-12 15:05:57,382 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(477)) - Waiting for reduce tasks

2018-02-12 15:05:57,383 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(329)) - Starting task: attempt_local1432978983_0001_r_000000_0

2018-02-12 15:05:57,393 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(123)) - File Output Committer Algorithm version is 1

2018-02-12 15:05:57,393 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(138)) - FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2018-02-12 15:05:57,394 INFO [pool-7-thread-1] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(168)) - ProcfsBasedProcessTree currently is supported only on Linux.

2018-02-12 15:05:57,509 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1640)) - Job job_local1432978983_0001 running in uber mode : false

2018-02-12 15:05:57,511 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1647)) - map 100% reduce 0%

2018-02-12 15:05:57,562 INFO [pool-7-thread-1] mapred.Task (Task.java:initialize(619)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@685c12a6

2018-02-12 15:05:57,565 INFO [pool-7-thread-1] mapred.ReduceTask (ReduceTask.java:run(362)) - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@6f0dcd1f

2018-02-12 15:05:57,579 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:(207)) - MergerManager: memoryLimit=1321939712, maxSingleShuffleLimit=330484928, mergeThreshold=872480256, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2018-02-12 15:05:57,581 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(61)) - attempt_local1432978983_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2018-02-12 15:05:57,616 INFO [localfetcher#1] reduce.LocalFetcher (LocalFetcher.java:copyMapOutput(145)) - localfetcher#1 about to shuffle output of map attempt_local1432978983_0001_m_000000_0 decomp: 34 len: 38 to MEMORY

2018-02-12 15:05:57,623 INFO [localfetcher#1] reduce.InMemoryMapOutput (InMemoryMapOutput.java:doShuffle(93)) - Read 34 bytes from map-output for attempt_local1432978983_0001_m_000000_0

2018-02-12 15:05:57,625 INFO [localfetcher#1] reduce.MergeManagerImpl (MergeManagerImpl.java:closeInMemoryFile(322)) - closeInMemoryFile -> map-output of size: 34, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->34

2018-02-12 15:05:57,627 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(76)) - EventFetcher is interrupted.. Returning

2018-02-12 15:05:57,628 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:57,628 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(694)) - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2018-02-12 15:05:57,774 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:57,775 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 26 bytes

2018-02-12 15:05:57,779 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(761)) - Merged 1 segments, 34 bytes to disk to satisfy reduce memory limit

2018-02-12 15:05:57,782 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(791)) - Merging 1 files, 38 bytes from disk

2018-02-12 15:05:57,784 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(806)) - Merging 0 segments, 0 bytes from memory into reduce

2018-02-12 15:05:57,784 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:57,785 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 26 bytes

2018-02-12 15:05:57,786 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:57,816 INFO [pool-7-thread-1] Configuration.deprecation (Configuration.java:logDeprecation(1297)) - mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2018-02-12 15:05:57,916 INFO [pool-7-thread-1] mapred.Task (Task.java:done(1099)) - Task:attempt_local1432978983_0001_r_000000_0 is done. And is in the process of committing

2018-02-12 15:05:57,921 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:57,921 INFO [pool-7-thread-1] mapred.Task (Task.java:commit(1260)) - Task attempt_local1432978983_0001_r_000000_0 is allowed to commit now

2018-02-12 15:05:57,937 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(582)) - Saved output of task 'attempt_local1432978983_0001_r_000000_0' to hdfs://192.168.19.128:8020/output/fruits/_temporary/0/task_local1432978983_0001_r_000000

2018-02-12 15:05:57,939 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - reduce > reduce

2018-02-12 15:05:57,939 INFO [pool-7-thread-1] mapred.Task (Task.java:sendDone(1219)) - Task 'attempt_local1432978983_0001_r_000000_0' done.

2018-02-12 15:05:57,940 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(352)) - Finishing task: attempt_local1432978983_0001_r_000000_0

2018-02-12 15:05:57,940 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(329)) - Starting task: attempt_local1432978983_0001_r_000001_0

2018-02-12 15:05:57,942 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(123)) - File Output Committer Algorithm version is 1

2018-02-12 15:05:57,942 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(138)) - FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2018-02-12 15:05:57,943 INFO [pool-7-thread-1] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(168)) - ProcfsBasedProcessTree currently is supported only on Linux.

2018-02-12 15:05:58,169 INFO [pool-7-thread-1] mapred.Task (Task.java:initialize(619)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@1da33b9d

2018-02-12 15:05:58,169 INFO [pool-7-thread-1] mapred.ReduceTask (ReduceTask.java:run(362)) - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@c854bea

2018-02-12 15:05:58,170 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:(207)) - MergerManager: memoryLimit=1321939712, maxSingleShuffleLimit=330484928, mergeThreshold=872480256, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2018-02-12 15:05:58,172 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(61)) - attempt_local1432978983_0001_r_000001_0 Thread started: EventFetcher for fetching Map Completion Events

2018-02-12 15:05:58,276 INFO [localfetcher#2] reduce.LocalFetcher (LocalFetcher.java:copyMapOutput(145)) - localfetcher#2 about to shuffle output of map attempt_local1432978983_0001_m_000000_0 decomp: 36 len: 40 to MEMORY

2018-02-12 15:05:58,278 INFO [localfetcher#2] reduce.InMemoryMapOutput (InMemoryMapOutput.java:doShuffle(93)) - Read 36 bytes from map-output for attempt_local1432978983_0001_m_000000_0

2018-02-12 15:05:58,278 INFO [localfetcher#2] reduce.MergeManagerImpl (MergeManagerImpl.java:closeInMemoryFile(322)) - closeInMemoryFile -> map-output of size: 36, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->36

2018-02-12 15:05:58,279 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(76)) - EventFetcher is interrupted.. Returning

2018-02-12 15:05:58,280 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:58,280 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(694)) - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2018-02-12 15:05:58,379 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:58,380 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 27 bytes

2018-02-12 15:05:58,383 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(761)) - Merged 1 segments, 36 bytes to disk to satisfy reduce memory limit

2018-02-12 15:05:58,386 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(791)) - Merging 1 files, 40 bytes from disk

2018-02-12 15:05:58,386 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(806)) - Merging 0 segments, 0 bytes from memory into reduce

2018-02-12 15:05:58,386 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:58,388 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 27 bytes

2018-02-12 15:05:58,388 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:58,423 INFO [pool-7-thread-1] mapred.Task (Task.java:done(1099)) - Task:attempt_local1432978983_0001_r_000001_0 is done. And is in the process of committing

2018-02-12 15:05:58,429 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:58,429 INFO [pool-7-thread-1] mapred.Task (Task.java:commit(1260)) - Task attempt_local1432978983_0001_r_000001_0 is allowed to commit now

2018-02-12 15:05:58,443 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(582)) - Saved output of task 'attempt_local1432978983_0001_r_000001_0' to hdfs://192.168.19.128:8020/output/fruits/_temporary/0/task_local1432978983_0001_r_000001

2018-02-12 15:05:58,444 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - reduce > reduce

2018-02-12 15:05:58,444 INFO [pool-7-thread-1] mapred.Task (Task.java:sendDone(1219)) - Task 'attempt_local1432978983_0001_r_000001_0' done.

2018-02-12 15:05:58,444 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(352)) - Finishing task: attempt_local1432978983_0001_r_000001_0

2018-02-12 15:05:58,444 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(329)) - Starting task: attempt_local1432978983_0001_r_000002_0

2018-02-12 15:05:58,446 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(123)) - File Output Committer Algorithm version is 1

2018-02-12 15:05:58,447 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(138)) - FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2018-02-12 15:05:58,448 INFO [pool-7-thread-1] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(168)) - ProcfsBasedProcessTree currently is supported only on Linux.

2018-02-12 15:05:58,513 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1647)) - map 100% reduce 100%

2018-02-12 15:05:58,583 INFO [pool-7-thread-1] mapred.Task (Task.java:initialize(619)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@59dc1d65

2018-02-12 15:05:58,583 INFO [pool-7-thread-1] mapred.ReduceTask (ReduceTask.java:run(362)) - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@246cd798

2018-02-12 15:05:58,584 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:(207)) - MergerManager: memoryLimit=1321939712, maxSingleShuffleLimit=330484928, mergeThreshold=872480256, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2018-02-12 15:05:58,585 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(61)) - attempt_local1432978983_0001_r_000002_0 Thread started: EventFetcher for fetching Map Completion Events

2018-02-12 15:05:58,651 INFO [localfetcher#3] reduce.LocalFetcher (LocalFetcher.java:copyMapOutput(145)) - localfetcher#3 about to shuffle output of map attempt_local1432978983_0001_m_000000_0 decomp: 32 len: 36 to MEMORY

2018-02-12 15:05:58,652 INFO [localfetcher#3] reduce.InMemoryMapOutput (InMemoryMapOutput.java:doShuffle(93)) - Read 32 bytes from map-output for attempt_local1432978983_0001_m_000000_0

2018-02-12 15:05:58,653 INFO [localfetcher#3] reduce.MergeManagerImpl (MergeManagerImpl.java:closeInMemoryFile(322)) - closeInMemoryFile -> map-output of size: 32, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->32

2018-02-12 15:05:58,653 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(76)) - EventFetcher is interrupted.. Returning

2018-02-12 15:05:58,654 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:58,654 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(694)) - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2018-02-12 15:05:58,766 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:58,767 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 25 bytes

2018-02-12 15:05:58,770 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(761)) - Merged 1 segments, 32 bytes to disk to satisfy reduce memory limit

2018-02-12 15:05:58,771 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(791)) - Merging 1 files, 36 bytes from disk

2018-02-12 15:05:58,772 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(806)) - Merging 0 segments, 0 bytes from memory into reduce

2018-02-12 15:05:58,772 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:58,773 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 25 bytes

2018-02-12 15:05:58,774 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:58,804 INFO [pool-7-thread-1] mapred.Task (Task.java:done(1099)) - Task:attempt_local1432978983_0001_r_000002_0 is done. And is in the process of committing

2018-02-12 15:05:58,806 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:58,807 INFO [pool-7-thread-1] mapred.Task (Task.java:commit(1260)) - Task attempt_local1432978983_0001_r_000002_0 is allowed to commit now

2018-02-12 15:05:58,816 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(582)) - Saved output of task 'attempt_local1432978983_0001_r_000002_0' to hdfs://192.168.19.128:8020/output/fruits/_temporary/0/task_local1432978983_0001_r_000002

2018-02-12 15:05:58,817 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - reduce > reduce

2018-02-12 15:05:58,817 INFO [pool-7-thread-1] mapred.Task (Task.java:sendDone(1219)) - Task 'attempt_local1432978983_0001_r_000002_0' done.

2018-02-12 15:05:58,817 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(352)) - Finishing task: attempt_local1432978983_0001_r_000002_0

2018-02-12 15:05:58,817 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(329)) - Starting task: attempt_local1432978983_0001_r_000003_0

2018-02-12 15:05:58,819 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(123)) - File Output Committer Algorithm version is 1

2018-02-12 15:05:58,819 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:(138)) - FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2018-02-12 15:05:58,819 INFO [pool-7-thread-1] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(168)) - ProcfsBasedProcessTree currently is supported only on Linux.

2018-02-12 15:05:58,976 INFO [pool-7-thread-1] mapred.Task (Task.java:initialize(619)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@44d9a753

2018-02-12 15:05:58,977 INFO [pool-7-thread-1] mapred.ReduceTask (ReduceTask.java:run(362)) - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@6cb6847

2018-02-12 15:05:58,978 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:(207)) - MergerManager: memoryLimit=1321939712, maxSingleShuffleLimit=330484928, mergeThreshold=872480256, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2018-02-12 15:05:58,979 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(61)) - attempt_local1432978983_0001_r_000003_0 Thread started: EventFetcher for fetching Map Completion Events

2018-02-12 15:05:59,037 INFO [localfetcher#4] reduce.LocalFetcher (LocalFetcher.java:copyMapOutput(145)) - localfetcher#4 about to shuffle output of map attempt_local1432978983_0001_m_000000_0 decomp: 19 len: 23 to MEMORY

2018-02-12 15:05:59,039 INFO [localfetcher#4] reduce.InMemoryMapOutput (InMemoryMapOutput.java:doShuffle(93)) - Read 19 bytes from map-output for attempt_local1432978983_0001_m_000000_0

2018-02-12 15:05:59,039 INFO [localfetcher#4] reduce.MergeManagerImpl (MergeManagerImpl.java:closeInMemoryFile(322)) - closeInMemoryFile -> map-output of size: 19, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->19

2018-02-12 15:05:59,040 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(76)) - EventFetcher is interrupted.. Returning

2018-02-12 15:05:59,041 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:59,041 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(694)) - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2018-02-12 15:05:59,167 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:59,168 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 10 bytes

2018-02-12 15:05:59,171 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(761)) - Merged 1 segments, 19 bytes to disk to satisfy reduce memory limit

2018-02-12 15:05:59,172 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(791)) - Merging 1 files, 23 bytes from disk

2018-02-12 15:05:59,172 INFO [pool-7-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(806)) - Merging 0 segments, 0 bytes from memory into reduce

2018-02-12 15:05:59,173 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(606)) - Merging 1 sorted segments

2018-02-12 15:05:59,174 INFO [pool-7-thread-1] mapred.Merger (Merger.java:merge(705)) - Down to the last merge-pass, with 1 segments left of total size: 10 bytes

2018-02-12 15:05:59,174 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:59,197 INFO [pool-7-thread-1] mapred.Task (Task.java:done(1099)) - Task:attempt_local1432978983_0001_r_000003_0 is done. And is in the process of committing

2018-02-12 15:05:59,200 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - 1 / 1 copied.

2018-02-12 15:05:59,200 INFO [pool-7-thread-1] mapred.Task (Task.java:commit(1260)) - Task attempt_local1432978983_0001_r_000003_0 is allowed to commit now

2018-02-12 15:05:59,207 INFO [pool-7-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(582)) - Saved output of task 'attempt_local1432978983_0001_r_000003_0' to hdfs://192.168.19.128:8020/output/fruits/_temporary/0/task_local1432978983_0001_r_000003

2018-02-12 15:05:59,208 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(620)) - reduce > reduce

2018-02-12 15:05:59,208 INFO [pool-7-thread-1] mapred.Task (Task.java:sendDone(1219)) - Task 'attempt_local1432978983_0001_r_000003_0' done.

2018-02-12 15:05:59,208 INFO [pool-7-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(352)) - Finishing task: attempt_local1432978983_0001_r_000003_0

2018-02-12 15:05:59,209 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(485)) - reduce task executor complete.

2018-02-12 15:05:59,514 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1658)) - Job job_local1432978983_0001 completed successfully

```
2018-02-12 15:05:59,539 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1665)) - Counters: 35
    File System Counters
        FILE: Number of bytes read=3213
        FILE: Number of bytes written=2389922
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=345
        HDFS: Number of bytes written=101
        HDFS: Number of read operations=60
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=30
    Map-Reduce Framework
        Map input records=7
        Map output records=7
        Map output bytes=99
        Map output materialized bytes=137
        Input split bytes=113
        Combine input records=0
        Combine output records=0
        Reduce input groups=4
        Reduce shuffle bytes=137
        Reduce input records=7
        Reduce output records=4
        Spilled Records=14
        Shuffled Maps =4
        Failed Shuffles=0
        Merged Map outputs=4
        GC time elapsed (ms)=8
        Total committed heap usage (bytes)=1192755200
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=69
    File Output Format Counters
        Bytes Written=40

Process finished with exit code 0
```

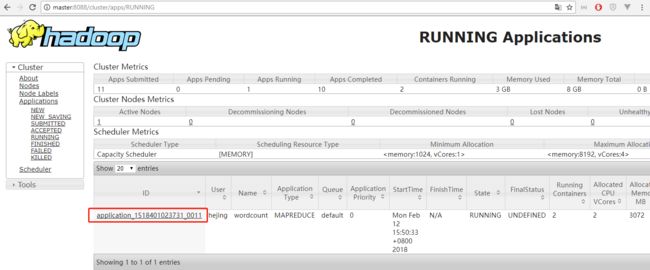

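Attentive readers will notice that this run actually executed locally: the job was never submitted to the cluster.

Every job and task ID carries the word `local` (for example `job_local1432978983_0001` and `attempt_local1432978983_0001_r_000000_0` in the log above).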
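A quick way to tell the two cases apart is the job ID itself. The sketch below is plain Java and assumes the two ID shapes seen in practice: `job_local<number>_<seq>` for the LocalJobRunner (as in the log above) and `job_<clusterTimestamp>_<seq>` for jobs accepted by YARN. The class name and the YARN-style sample ID are made up for illustration.

```java
import java.util.regex.Pattern;

/**
 * Hypothetical helper: classifies a MapReduce job ID as a local run or a cluster run.
 * Assumption: LocalJobRunner IDs look like "job_local<digits>_<seq>",
 * YARN-assigned IDs look like "job_<clusterTimestamp>_<seq>".
 */
public class JobIdCheck {
    private static final Pattern LOCAL = Pattern.compile("job_local\\d+_\\d+");
    private static final Pattern YARN = Pattern.compile("job_\\d+_\\d+");

    /** True if the ID has the LocalJobRunner shape. */
    static boolean isLocalRun(String jobId) {
        return LOCAL.matcher(jobId).matches();
    }

    /** True if the ID has the YARN shape (digits right after "job_"). */
    static boolean isClusterRun(String jobId) {
        return YARN.matcher(jobId).matches();
    }

    public static void main(String[] args) {
        String fromLog = "job_local1432978983_0001";     // ID from the run above
        String hypothetical = "job_1518419500000_0001";  // made-up YARN-style ID
        System.out.println(fromLog + " local? " + isLocalRun(fromLog));
        System.out.println(hypothetical + " cluster? " + isClusterRun(hypothetical));
    }
}
```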

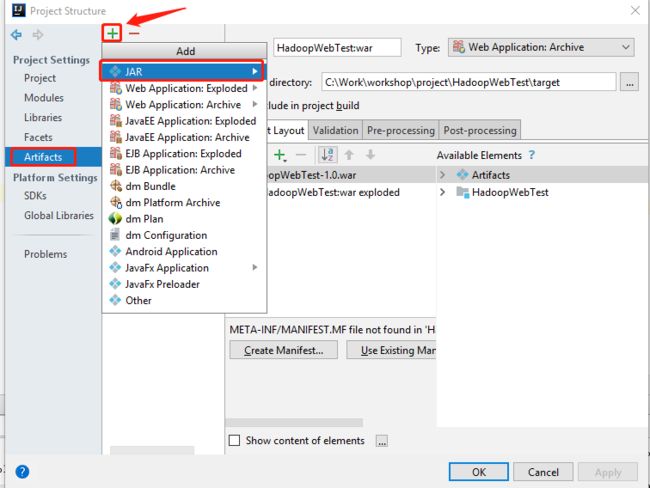

Submitting the job to the cluster

First, the code needs to be packaged into a jar.

Add the following to pom.xml:

org.apache.maven.plugins

maven-assembly-plugin

2.5.5

true

lib/

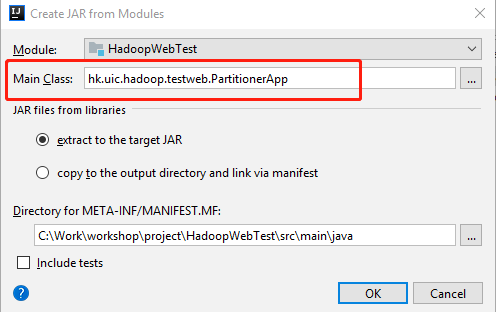

hk.uic.hadoop.testweb.PartitionerApp

jar-with-dependencies

make-assembly

package

single

Remember to change `mainClass` to your own main class.
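For reference, `mainClass` becomes the `Main-Class` entry of the jar's `META-INF/MANIFEST.MF`, and `addClasspath` with `classpathPrefix` adds a `Class-Path` line whose entries start with `lib/`. The sketch below reads those two attributes back with `java.util.jar.Manifest`; the manifest text and the `ManifestCheck` class are hypothetical examples, not output copied from this build.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.jar.Attributes;
import java.util.jar.Manifest;

/** Hypothetical helper: reads Main-Class and Class-Path from manifest text. */
public class ManifestCheck {
    private static Attributes mainAttributes(String manifestText) throws IOException {
        Manifest mf = new Manifest(
                new ByteArrayInputStream(manifestText.getBytes(StandardCharsets.UTF_8)));
        return mf.getMainAttributes();
    }

    /** Value of the Main-Class attribute, or null if absent. */
    static String mainClassOf(String manifestText) throws IOException {
        return mainAttributes(manifestText).getValue(Attributes.Name.MAIN_CLASS);
    }

    /** Value of the Class-Path attribute (space-separated entries), or null if absent. */
    static String classPathOf(String manifestText) throws IOException {
        return mainAttributes(manifestText).getValue(Attributes.Name.CLASS_PATH);
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical manifest; a real build lists its own dependency jars under lib/.
        String text = "Manifest-Version: 1.0\n"
                + "Main-Class: hk.uic.hadoop.testweb.PartitionerApp\n"
                + "Class-Path: lib/hadoop-common-2.9.0.jar\n"
                + "\n";
        System.out.println("Main-Class: " + mainClassOf(text));
        System.out.println("Class-Path: " + classPathOf(text));
    }
}
```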

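Next, build the jar with IDEA: right-click the project and choose Open Module Settings (or press F4) to open the Project Structure dialog.

Under Artifacts, click '+' and choose JAR → From modules with dependencies...

Then choose Build → Build Artifacts from the menu bar.

This generates the jar; in this project it ends up in C:\Work\workshop\Hadoop\HadoopWebTest\out\artifacts\HadoopWebTest_jar\.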
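Before submitting, it can be worth checking that the generated jar really contains the job classes, since the cluster only sees what is inside that jar. A minimal sketch using `java.util.jar.JarFile`; the `JarCheck` name is hypothetical. If the mapper, reducer and partitioner are nested classes of `PartitionerApp`, they are stored under names such as `hk/uic/hadoop/testweb/PartitionerApp$MyMapper.class`, which the same method checks when given `hk.uic.hadoop.testweb.PartitionerApp$MyMapper`.

```java
import java.io.IOException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.jar.JarFile;

/** Hypothetical helper: checks whether a jar contains a given compiled class. */
public class JarCheck {
    /** Maps "a.b.C" (or "a.b.C$Inner") to the entry "a/b/C.class" and looks it up. */
    static boolean containsClass(Path jar, String className) throws IOException {
        String entry = className.replace('.', '/') + ".class";
        try (JarFile jf = new JarFile(jar.toFile())) {
            return jf.getEntry(entry) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        // Pass the jar path as the first argument, e.g. the HadoopWebTest.jar built above
        if (args.length == 0) {
            System.err.println("usage: JarCheck <path-to-jar>");
            return;
        }
        System.out.println(containsClass(Paths.get(args[0]), "hk.uic.hadoop.testweb.PartitionerApp"));
    }
}
```

Running it as `java JarCheck C:\Work\workshop\Hadoop\HadoopWebTest\out\artifacts\HadoopWebTest_jar\HadoopWebTest.jar` should print `true` if the main class was packaged.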

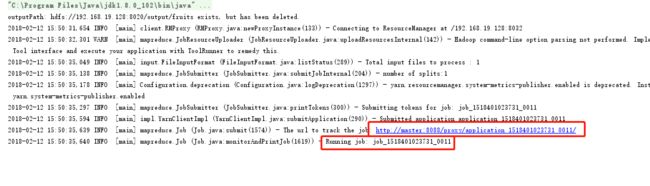

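Add the following settings to the main method:

```java
// Needed when submitting from Windows to a Linux cluster, so the client writes
// platform-neutral classpath and environment references for the containers
configuration.set("mapreduce.app-submission.cross-platform", "true");
// Host of the YARN ResourceManager
configuration.set("yarn.resourcemanager.hostname", "192.168.19.128");
// Submit through YARN instead of the LocalJobRunner used in the run above
configuration.set("mapreduce.framework.name", "yarn");
configuration.set("yarn.resourcemanager.address", "192.168.19.128:8032");
// The jar built in the previous step; when running from IDEA the classes are not inside a jar
configuration.set("mapreduce.job.jar", "C:\\Work\\workshop\\Hadoop\\HadoopWebTest\\out\\artifacts\\HadoopWebTest_jar\\HadoopWebTest.jar");
```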
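The cluster address appears in several of these settings (and again in `fs.defaultFS`). One way to keep them consistent is to derive all of them from a single host string. This is a plain-Java sketch with a hypothetical `ClusterProps` helper; the ports 8020 and 8032 are simply the ones used in this article.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: builds the cluster-submission settings from one host name. */
public class ClusterProps {
    static Map<String, String> forHost(String host, String jobJar) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("fs.defaultFS", "hdfs://" + host + ":8020");          // NameNode RPC port used in this article
        props.put("mapreduce.app-submission.cross-platform", "true");   // Windows client, Linux cluster
        props.put("mapreduce.framework.name", "yarn");                  // run on YARN, not locally
        props.put("yarn.resourcemanager.hostname", host);
        props.put("yarn.resourcemanager.address", host + ":8032");      // ResourceManager port used in this article
        props.put("mapreduce.job.jar", jobJar);                         // jar shipped with the job
        return props;
    }

    public static void main(String[] args) {
        forHost("192.168.19.128",
                "C:\\Work\\workshop\\Hadoop\\HadoopWebTest\\out\\artifacts\\HadoopWebTest_jar\\HadoopWebTest.jar")
                .forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

With the Hadoop jars on the classpath, the map could be applied in one line, `ClusterProps.forHost("192.168.19.128", jarPath).forEach(configuration::set);`, since `Configuration.set(String, String)` fits a `BiConsumer<String, String>`.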

The complete `main` method of PartitionerApp.java (the mapper, reducer and partitioner classes are the ones shown earlier):

```java
public static void main(String[] args) throws Exception {
    System.setProperty("hadoop.home.dir", "C:\\Work\\hadoop-2.9.0");

    // Hardcode the paths instead of configuring program arguments in IDEA
    args = new String[2];
    args[0] = "hdfs://192.168.19.128:8020/springhdfs/fruits.txt";
    args[1] = "hdfs://192.168.19.128:8020/output/fruits";

    // Create the Configuration
    Configuration configuration = new Configuration();
    configuration.set("fs.defaultFS", "hdfs://192.168.19.128:8020");

    // Jar location: C:\Work\workshop\Hadoop\HadoopWebTest\out\artifacts\HadoopWebTest_jar
    configuration.set("mapreduce.app-submission.cross-platform", "true");
    configuration.set("yarn.resourcemanager.hostname", "192.168.19.128");
    configuration.set("mapreduce.framework.name", "yarn");
    configuration.set("yarn.resourcemanager.address", "192.168.19.128:8032");
    configuration.set("mapreduce.job.jar", "C:\\Work\\workshop\\Hadoop\\HadoopWebTest\\out\\artifacts\\HadoopWebTest_jar\\HadoopWebTest.jar");

    // Delete the output directory if it already exists
    // (FileOutputFormat fails the job when the output directory is already present)
    Path outputPath = new Path(args[1]);
    FileSystem fileSystem = FileSystem.get(configuration);
    if (fileSystem.exists(outputPath)) {
        fileSystem.delete(outputPath, true);
        System.out.println("outputPath: " + args[1] + " exists, but has been deleted.");
    }

    // Create the Job
    Job job = Job.getInstance(configuration, "wordcount");
    // Class used to locate the job's code
    job.setJarByClass(PartitionerApp.class);

    // Input path, taken from args
    FileInputFormat.setInputPaths(job, new Path(args[0]));

    // Map settings: mapper class and map output key/value types
    job.setMapperClass(MyMapper.class);
    job.setMapOutputKeyClass(Text.class);
    job.setMapOutputValueClass(LongWritable.class);

    // Reduce settings: reducer class and final output key/value types
    job.setReducerClass(MyReducer.class);
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(LongWritable.class);

    // Use the custom Partitioner
    job.setPartitionerClass(MyPartitioner.class);
    // 4 reducers, one per category
    job.setNumReduceTasks(4);

    // Output path, taken from args
    FileOutputFormat.setOutputPath(job, new Path(args[1]));

    System.exit(job.waitForCompletion(true) ? 0 : 1);
}
```
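The main method above overwrites `args` unconditionally. If you later want to pass the paths on the command line (for example when launching the jar with `hadoop jar`), a small fallback keeps the hardcoded paths as defaults. Plain-Java sketch; `JobArgs` and `resolve` are hypothetical names.

```java
/** Hypothetical helper: prefers program arguments, falls back to the article's HDFS paths. */
public class JobArgs {
    static final String DEFAULT_INPUT = "hdfs://192.168.19.128:8020/springhdfs/fruits.txt";
    static final String DEFAULT_OUTPUT = "hdfs://192.168.19.128:8020/output/fruits";

    /** Returns {input, output}: the first two args if both are present, otherwise the defaults. */
    static String[] resolve(String[] args) {
        if (args != null && args.length >= 2) {
            return new String[] {args[0], args[1]};
        }
        return new String[] {DEFAULT_INPUT, DEFAULT_OUTPUT};
    }

    public static void main(String[] args) {
        String[] paths = resolve(args);
        System.out.println("input=" + paths[0] + ", output=" + paths[1]);
    }
}
```

Inside `main`, `args = JobArgs.resolve(args);` would then replace the hardcoded assignments.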

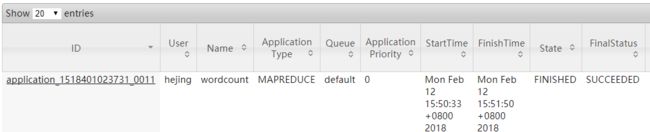

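Run it to test.

At this point, the MapReduce job developed locally has been successfully submitted from the local machine to the cluster.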
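Because the job calls `job.setNumReduceTasks(4)`, each reducer writes its own file. Assuming the default FileOutputFormat naming and a successful commit, `hdfs dfs -ls /output/fruits` should list one `part-r-NNNNN` file per reducer plus an empty `_SUCCESS` marker. The sketch below only generates those expected names in plain Java; `OutputFiles` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: expected file names in a new-API MapReduce output directory. */
public class OutputFiles {
    static List<String> expected(int numReduceTasks) {
        List<String> names = new ArrayList<>();
        for (int i = 0; i < numReduceTasks; i++) {
            // Reducer i writes part-r-<i>, zero-padded to five digits
            names.add(String.format("part-r-%05d", i));
        }
        // Marker file written by FileOutputCommitter after a successful job (default behaviour)
        names.add("_SUCCESS");
        return names;
    }

    public static void main(String[] args) {
        expected(4).forEach(System.out::println);
    }
}
```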