Deep Learning & Art: Neural Style Transfer

1 - Problem Statement

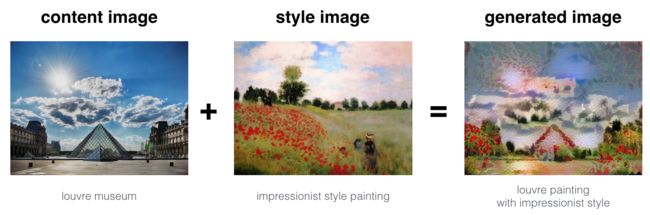

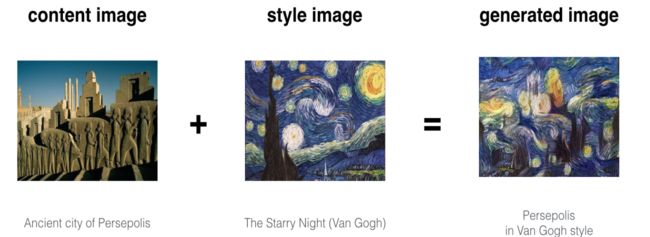

Neural Style Transfer (NST) is one of the most fun techniques in deep learning. As shown in the images above, it merges two images, a "content" image (C) and a "style" image (S), to create a "generated" image (G). The generated image G combines the "content" of image C with the "style" of image S.

2 - Transfer Learning

Neural Style Transfer (NST) builds on top of a previously trained convolutional network. Taking a network trained on one task and applying it to a new task is called transfer learning.

Following the original NST paper (https://arxiv.org/abs/1508.06576), we will use the VGG network. Specifically, we'll use VGG-19, a 19-layer version of the VGG network. This model has already been trained on the very large ImageNet database, and has therefore learned to recognize a variety of low-level features (in the earlier layers) and high-level features (in the deeper layers).

model = load_vgg_model("pretrained-model/imagenet-vgg-verydeep-19.mat")

3 - Neural Style Transfer

We will build the NST algorithm in three steps:

- Build the content cost function $J_{content}(C,G)$

- Build the style cost function $J_{style}(S,G)$

- Put it together to get $J(G) = \alpha J_{content}(C,G) + \beta J_{style}(S,G)$.

3.1 - Computing the content cost

content_image = scipy.misc.imread("images/louvre.jpg")

imshow(content_image)

# GRADED FUNCTION: compute_content_cost

def compute_content_cost(a_C, a_G):
    """
    Computes the content cost

    Arguments:
    a_C -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations representing content of the image C
    a_G -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations representing content of the image G

    Returns:
    J_content -- scalar content cost: the sum of squared differences between a_C and a_G, divided by 4*n_H*n_W*n_C
    """

    # Retrieve dimensions from a_G
    m, n_H, n_W, n_C = a_G.get_shape().as_list()

    # Unroll a_C and a_G into 2D tensors of shape (n_H*n_W, n_C)
    a_C_unrolled = tf.reshape(a_C, [n_H*n_W, n_C])
    a_G_unrolled = tf.reshape(a_G, [n_H*n_W, n_C])

    # Compute the cost with TensorFlow
    J_content = tf.reduce_sum(tf.square(tf.subtract(a_C_unrolled, a_G_unrolled))) / (4*n_H*n_W*n_C)

    return J_content
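To check the normalization by hand, here is a minimal NumPy sketch of the same formula. It is an illustration only, not the graded TensorFlow function: the name `content_cost_np` and the tiny all-zeros and all-ones activations are assumptions made up for this example.

```python
import numpy as np

def content_cost_np(a_C, a_G):
    """NumPy sketch of the content cost (hypothetical helper, for illustration)."""
    _, n_H, n_W, n_C = a_G.shape
    # Sum of squared differences, normalized by 4 * n_H * n_W * n_C
    return float(np.sum((a_C - a_G) ** 2) / (4 * n_H * n_W * n_C))

# Made-up activations: every entry differs by exactly 1
a_C = np.zeros((1, 2, 2, 3))
a_G = np.ones((1, 2, 2, 3))

cost = content_cost_np(a_C, a_G)
print(cost)  # 12 squared differences / (4 * 2 * 2 * 3) = 0.25
```

When a_C and a_G are identical the cost is zero, which is what we expect when the generated image reproduces the content activations exactly.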

3.2 - Computing the style cost

style_image = scipy.misc.imread("images/monet_800600.jpg")

imshow(style_image)

# GRADED FUNCTION: gram_matrix

def gram_matrix(A):
    """
    Argument:
    A -- matrix of shape (n_C, n_H*n_W)

    Returns:
    GA -- Gram matrix of A, of shape (n_C, n_C)
    """

    GA = tf.matmul(A, tf.transpose(A))

    return GA
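To make the Gram matrix concrete, here is a small NumPy sketch of the same product on a hand-sized matrix. NumPy is used instead of TensorFlow purely so the numbers can be inspected directly, and the values in `A` are made up for illustration.

```python
import numpy as np

# Made-up "unrolled activations": n_C = 2 channels, n_H*n_W = 3 positions
A = np.array([[1.0, 0.0, 2.0],
              [0.0, 1.0, 1.0]])

# Entry (i, j) is the dot product of channel i with channel j
GA = A @ A.T
print(GA)
# [[5. 2.]
#  [2. 2.]]
```

The diagonal entries measure how active each channel is, and the off-diagonal entries measure how strongly two channels fire together. The result is always symmetric with shape (n_C, n_C).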

# GRADED FUNCTION: compute_layer_style_cost

def compute_layer_style_cost(a_S, a_G):
    """
    Arguments:
    a_S -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations representing style of the image S
    a_G -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations representing style of the image G

    Returns:
    J_style_layer -- tensor representing a scalar value, the style cost for this layer
    """

    # Retrieve dimensions from a_G
    m, n_H, n_W, n_C = a_G.get_shape().as_list()

    # Unroll the activations to shape (n_H*n_W, n_C); they are transposed to (n_C, n_H*n_W) below
    a_S = tf.reshape(a_S, [n_H*n_W, n_C])
    a_G = tf.reshape(a_G, [n_H*n_W, n_C])

    # Compute the Gram matrices for both images S and G
    GS = gram_matrix(tf.transpose(a_S))
    GG = gram_matrix(tf.transpose(a_G))

    # Compute the loss, normalized by 4 * (n_H*n_W*n_C)^2
    J_style_layer = tf.reduce_sum(tf.square(tf.subtract(GS, GG))) / (4 * tf.square(tf.cast(n_H*n_W*n_C, tf.float32)))

    return J_style_layer
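The same computation can be traced by hand with a NumPy sketch. This is an illustration under stated assumptions, not the graded function: `layer_style_cost_np` is a hypothetical name, and the all-ones and all-zeros activations are made up so the arithmetic is easy to follow.

```python
import numpy as np

def layer_style_cost_np(a_S, a_G):
    """NumPy sketch of the per-layer style cost (hypothetical helper)."""
    _, n_H, n_W, n_C = a_G.shape
    # Unroll to (n_H*n_W, n_C), then transpose to (n_C, n_H*n_W)
    S = a_S.reshape(n_H * n_W, n_C).T
    G = a_G.reshape(n_H * n_W, n_C).T
    # Gram matrices of shape (n_C, n_C)
    GS = S @ S.T
    GG = G @ G.T
    # Squared difference of the Gram matrices, normalized by 4 * (n_H*n_W*n_C)^2
    return float(np.sum((GS - GG) ** 2) / (4.0 * (n_H * n_W * n_C) ** 2))

a_S = np.ones((1, 2, 2, 2))   # made-up style activations
a_G = np.zeros((1, 2, 2, 2))  # made-up generated activations

cost = layer_style_cost_np(a_S, a_G)
print(cost)  # GS is 4 everywhere: 4 entries * 16 / (4 * 8**2) = 0.25
```

Note that the cost compares Gram matrices rather than raw activations, so it is insensitive to where in the image a feature appears.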

def compute_style_cost(model, STYLE_LAYERS):
    """
    Computes the overall style cost from several chosen layers

    Arguments:
    model -- our tensorflow model
    STYLE_LAYERS -- A python list containing:
                        - the names of the layers we would like to extract style from
                        - a coefficient for each of them

    Returns:
    J_style -- tensor representing a scalar value, the weighted sum of the per-layer style costs
    """

    # Initialize the overall style cost
    J_style = 0

    for layer_name, coeff in STYLE_LAYERS:

        # Select the output tensor of the currently selected layer
        out = model[layer_name]

        # Set a_S to be the hidden layer activation from the layer we have selected, by running the session on out
        # (this relies on the global session `sess`, with the style image already assigned as the model input)
        a_S = sess.run(out)

        # Set a_G to be the hidden layer activation from same layer. Here, a_G references model[layer_name]
        # and isn't evaluated yet. Later in the code, we'll assign the image G as the model input, so that
        # when we run the session, this will be the activations drawn from the appropriate layer, with G as input.
        a_G = out

        # Compute style_cost for the current layer
        J_style_layer = compute_layer_style_cost(a_S, a_G)

        # Add coeff * J_style_layer of this layer to overall style cost
        J_style += coeff * J_style_layer

    return J_style
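`STYLE_LAYERS` is used here but not defined in this section. The sketch below shows one plausible shape for it and how the weighted sum is accumulated. The layer names, the equal weights of 0.2, and the per-layer cost values are all assumptions chosen for illustration; in the real pipeline each per-layer cost comes from `compute_layer_style_cost`.

```python
# Hypothetical layer names and weights: (layer_name, coefficient) pairs
STYLE_LAYERS = [
    ("conv1_1", 0.2),
    ("conv2_1", 0.2),
    ("conv3_1", 0.2),
    ("conv4_1", 0.2),
    ("conv5_1", 0.2),
]

# Made-up per-layer style costs standing in for compute_layer_style_cost outputs
layer_costs = {
    "conv1_1": 10.0,
    "conv2_1": 20.0,
    "conv3_1": 30.0,
    "conv4_1": 40.0,
    "conv5_1": 50.0,
}

# Weighted sum over the selected layers, mirroring the loop in compute_style_cost
J_style = 0.0
for layer_name, coeff in STYLE_LAYERS:
    J_style += coeff * layer_costs[layer_name]

print(J_style)
```

Mixing several layers lets the style cost capture both fine textures (earlier layers) and larger patterns (deeper layers). Raising a layer's coefficient makes the generated image follow that layer's style statistics more closely.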

So, the total cost is:

J = alpha * J_content + beta * J_style
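The optimization code later calls `total_cost(J_content, J_style, alpha = 10, beta = 40)`, but the function itself does not appear in this section. A minimal sketch consistent with the formula above might look like the following; the default values are taken from that call, and the body is an assumption rather than the original implementation.

```python
def total_cost(J_content, J_style, alpha=10, beta=40):
    """
    Sketch of the total cost (assumed implementation).

    alpha weights the content cost and beta weights the style cost.
    """
    return alpha * J_content + beta * J_style

# Plain numbers are used here; in the notebook the inputs are TensorFlow tensors
print(total_cost(1.0, 2.0))  # 10 * 1.0 + 40 * 2.0 = 90.0
```

Because the function uses only multiplication and addition, the same code works on plain Python numbers and on TensorFlow tensors. A larger beta relative to alpha pushes the result toward the style image.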

4 - Solving the optimization problem

- Create an Interactive Session

- Load the content image

- Load the style image

- Randomly initialize the image to be generated

- Load the VGG-19 model

- Build the TensorFlow graph:

    - Run the content image through the VGG-19 model and compute the content cost

    - Run the style image through the VGG-19 model and compute the style cost

    - Compute the total cost

    - Define the optimizer and the learning rate

- Initialize the TensorFlow graph and run it for a large number of iterations, updating the generated image at every step.
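The key idea in these steps is that the optimizer updates the pixels of the generated image, not the network weights. The toy NumPy sketch below illustrates this with two hypothetical helpers: `noisy_start` blends uniform noise with the content image (the noise range and the 0.6 ratio are assumptions, not necessarily what `generate_noise_image` does), and `optimize_image` runs plain gradient descent on a simple squared-error cost that stands in for the real NST cost.

```python
import numpy as np

def noisy_start(content, noise_ratio=0.6, seed=0):
    """Blend uniform noise with the content image (assumed initialization)."""
    rng = np.random.default_rng(seed)
    noise = rng.uniform(-20.0, 20.0, size=content.shape)
    return noise_ratio * noise + (1.0 - noise_ratio) * content

def optimize_image(initial, target, learning_rate=0.1, num_iterations=200):
    """Gradient descent on the image itself, with cost = sum((g - target)^2)."""
    g = initial.copy()
    costs = []
    for _ in range(num_iterations):
        diff = g - target
        costs.append(float(np.sum(diff ** 2)))
        # The gradient of the cost with respect to the pixels is 2 * diff
        g -= learning_rate * 2.0 * diff
    return g, costs

content = np.full((1, 4, 4, 3), 100.0)  # made-up "content image"
start = noisy_start(content)
g, costs = optimize_image(start, content)

initial_cost, final_cost = costs[0], costs[-1]
print(initial_cost > final_cost)  # True: the cost decreases as the image is updated
```

In the actual pipeline the cost is the weighted content and style cost computed from VGG-19 activations, and the update rule is Adam rather than plain gradient descent, but the loop has the same structure.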

# Reset the graph

tf.reset_default_graph()

# Start interactive session

sess = tf.InteractiveSession()

content_image = scipy.misc.imread("images/louvre_small.jpg")

content_image = reshape_and_normalize_image(content_image)

style_image = scipy.misc.imread("images/monet.jpg")

style_image = reshape_and_normalize_image(style_image)

generated_image = generate_noise_image(content_image)

imshow(generated_image[0])

model = load_vgg_model("pretrained-model/imagenet-vgg-verydeep-19.mat")

# Assign the content image to be the input of the VGG model.

sess.run(model['input'].assign(content_image))

# Select the output tensor of layer conv4_2

out = model['conv4_2']

# Set a_C to be the hidden layer activation from the layer we have selected

a_C = sess.run(out)

# Set a_G to be the hidden layer activation from same layer. Here, a_G references model['conv4_2']

# and isn't evaluated yet. Later in the code, we'll assign the image G as the model input, so that

# when we run the session, this will be the activations drawn from the appropriate layer, with G as input.

a_G = out

# Compute the content cost

J_content = compute_content_cost(a_C, a_G)

# Assign the input of the model to be the "style" image

sess.run(model['input'].assign(style_image))

# Compute the style cost

J_style = compute_style_cost(model, STYLE_LAYERS)

J = total_cost(J_content, J_style, alpha = 10, beta = 40)

# Define the optimizer (Adam with a learning rate of 2.0)

optimizer = tf.train.AdamOptimizer(2.0)

# Define the training step that minimizes the total cost J

train_step = optimizer.minimize(J)

def model_nn(sess, input_image, num_iterations = 200):

    # Initialize global variables (you need to run the session on the initializer)
    sess.run(tf.global_variables_initializer())

    # Run the noisy input image (initial generated image) through the model. Use assign().
    sess.run(model['input'].assign(input_image))

    for i in range(num_iterations):

        # Run the session on the train_step to minimize the total cost
        sess.run(train_step)

        # Compute the generated image by running the session on the current model['input']
        generated_image = sess.run(model['input'])

        # Print the costs and save the image every 20 iterations
        if i%20 == 0:
            Jt, Jc, Js = sess.run([J, J_content, J_style])
            print("Iteration " + str(i) + " :")
            print("total cost = " + str(Jt))
            print("content cost = " + str(Jc))
            print("style cost = " + str(Js))

            # Save the current generated image in the "output" directory
            save_image("output/" + str(i) + ".png", generated_image)

    # Save the last generated image
    save_image('output/generated_image.jpg', generated_image)

    return generated_image