天涯论坛——python舆情分析汇总(四)

基于天涯论坛的 “新冠疫情”舆情分析

完整数据和代码链接:https://download.csdn.net/download/weixin_43906500/14935218

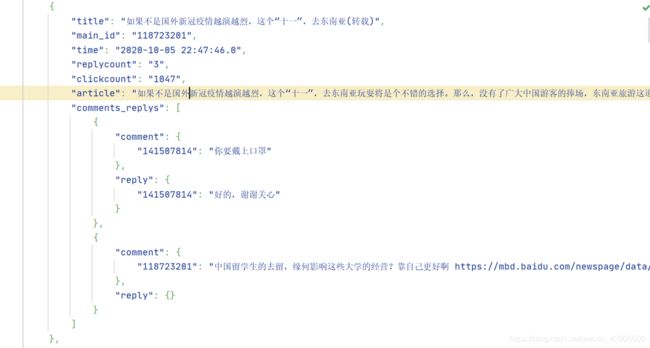

1.天涯论坛数据架构

天涯论坛主页主要分为推荐板块、推荐贴文、搜索板块、其他链接四个部分。对于新冠疫情的相关帖子主要利用搜索板块进行数据获取。

搜索板块实现关键词检索,可检索出75页发帖,每页发帖文章为10篇

根据贴文数据,可以提取发帖人、发帖时间、点击量、回复量以及贴文内容信息

根据帖子下方评论可以提取评论信息,以进行后续的文本分析

用户主页含有用户基本信息,如用户昵称、等级、关注量、粉丝量、天涯分、注册日期等信息

2.舆情分析技术实现

2.1数据获取

使用python爬虫获取数据

from pyquery import PyQuery as pq

import requests

from urllib.parse import quote

from time import sleep

import json

page = 75

key_word = '时政'

def prase_all_page(urls):

"""

解析所有搜索页,获取帖子url,过滤无评论帖子

:param urls:

:return: content_urls

"""

content_urls = []

for url in urls:

sleep(1)

print('正在抓取:', url)

doc = pq(requests.get(url=url, timeout=30).text)

# print(doc)

doc('.searchListOne li:last-child').remove() # 删除最后一个无用li节点

lis = doc('.searchListOne li').items() # 获取content节点生成器

for li in lis:

reverse = li('.source span:last-child').text()

a = li('a:first-child')

content_url = a.attr('href')

# print(content_url)

# print('评论数:', reverse)

content_urls.append(content_url)

return content_urls

def prase_all_content(urls):

"""

获取网页相关信息

:param urls:

:return:

"""

dic = []

i = 0

for url in urls:

print(i)

i = i + 1

try:

dic1 = {}

print('正在解析:', url)

doc = pq(requests.get(url=url, timeout=30).text)

title = doc('.atl-head .atl-title').text()

main_id = doc('.atl-head .atl-menu').attr('_host')

replytime = doc('.atl-head .atl-menu').attr('js_replytime')

loc = replytime.rfind('-')

# print(replytime)

print(replytime[0:4])

if(replytime[0:4]!="2020 "):

continue

print(replytime)

replycount = doc('.atl-head .atl-menu').attr('js_replycount')

clickcount = doc('.atl-head .atl-menu').attr('js_clickcount')

article = next(doc('.bbs-content').items()).text()

dic1["title"] = str(title)

dic1["main_id"] = main_id

dic1["time"] = replytime

dic1["replycount"] = replycount

dic1["clickcount"] = clickcount

dic1["article"] = article

comments_replys = []

comments = doc('.atl-main div:gt(1)').items() # 通栏广告后的评论列表

for comment in comments: # 处理评论

dic3 = {}

dic4 = {}

dic5 = {}

host_id = comment.attr('_hostid')

# user_name = comment.attr('_host')

comment_text = comment('.bbs-content').text()

replys = comment('.item-reply-view li').items() # 评论回复

if replys != None:

for reply in replys:

rid = reply.attr('_rid')

rtext = reply('.ir-content').text()

if rid:

if rid != main_id and rid != host_id:

dic5[host_id] = rtext

if host_id:

k = comment_text.rfind("----------------------------")

if (k != -1):

comment_text = comment_text[k + 29:]

dic4[host_id] = comment_text

dic3['comment'] = dic4

dic3['reply'] = dic5

comments_replys.append(dic3)

dic1["comments_replys"] = comments_replys

dic.append(dic1)

except:

continue

string = json.dumps(dic, ensure_ascii=False, indent=4)

print(string)

file_name = key_word + ".json"

f = open(file_name,'w',encoding='utf-8')

json.dump(dic,f,ensure_ascii=False, indent=4)

def run(key, page):

"""

:param key:

:param page:

:return:

"""

start_urls = []

for p in range(1, page+1):

url = 'http://search.tianya.cn/bbs?q={}&pn={}'.format(quote(key), p)

start_urls.append(url)

content_urls = prase_all_page(start_urls)

# print(content_urls)

prase_all_content(content_urls)

if __name__ == '__main__':

run(key_word, page)结果如下:

2.2趋势分析

通过对发帖时间的统计,获取每个月发帖数量;通过对每月发帖文章进行统计,获取每月发帖关键词;而后进行趋势分析

import json

from collections import Counter

from pyecharts.charts import Bar

import jieba

from pyecharts import options as opts

#去除停用词

def get_stopwords():

stopwords = [line.strip() for line in open("stopword.txt", 'r',encoding="utf-8").readlines()]

stopwords_other = ['\n',' ']

stopwords.extend(stopwords_other)

return stopwords

def get_article_count_plus():

with open("data.json",'r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

stopwords = get_stopwords()

list = []

dic_word = {}

for dic in load_dict:

time = dic['time']

loc = time.rfind('-')

list.append(time[0:7])

article = dic['article']

seg_list = jieba.lcut(article)

month = time[0:7]

if month in dic_word.keys():

dic_word[month].extend(seg_list)

else:

dic_word[month] = []

dic = dict(Counter(list))

d = sorted(dic.items(), key=lambda d:d[0])

key_word_used = []

key_word = []

for k in d:

m = k[0]

list = [i for i in dic_word[m] if i not in stopwords]

word_count = Counter(list)

word_list = word_count.most_common(12)

for i in word_list:

if(i[0] not in key_word_used):

key_word.append(i[0])

key_word_used.append(i[0])

break

columns = [i[0] for i in d]

data = [i[1] for i in d]

col = []

for i in range(len(columns)):

c1 = columns[i].find('-')

m = columns[i][c1+1:] + '(' + key_word[i] + ')'

col.append(m)

print(col)

print(data)

return col,data

if __name__ == "__main__":

col,data = get_article_count_plus()

c = (

Bar()

.add_xaxis(col)

.add_yaxis("发帖量", data)

.set_global_opts(title_opts=opts.TitleOpts(title="发帖量及关键词统计", subtitle="柱状图"))

)

c.render("article_conut_plus.html")可视化结果如下

2.3词云绘制

通过对所有文章使用jieba分词、去除停用词并进行词频统计,而后利用python pyecharts库进行词云绘制

import jieba

import json

from wordcloud import WordCloud

from collections import Counter

from pyecharts import options as opts

from pyecharts.charts import Page, WordCloud

from pyecharts.globals import SymbolType

#去除停用词

stopwords = [line.strip() for line in open("stopword.txt", 'r',encoding="utf-8").readlines()]

stopwords_other = ['\n',' ']

stopwords.extend(stopwords_other)

with open("data.json",'r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

list = []

for dic in load_dict:

article = dic['article']

seg_list = jieba.lcut(article)

list.extend(seg_list)

list = [i for i in list if i not in stopwords]

word = Counter(list).most_common(1000)

print(word)

c = (

WordCloud()

.add(series_name="关键词分析", data_pair=word, word_size_range=[16, 66])

.set_global_opts(

title_opts=opts.TitleOpts(title_textstyle_opts=opts.TextStyleOpts(font_size=35)),

tooltip_opts=opts.TooltipOpts(is_show=True),

)

)

# print(c.dump_options_with_quotes())

c.render("wordcloud.html")

结果如下所示

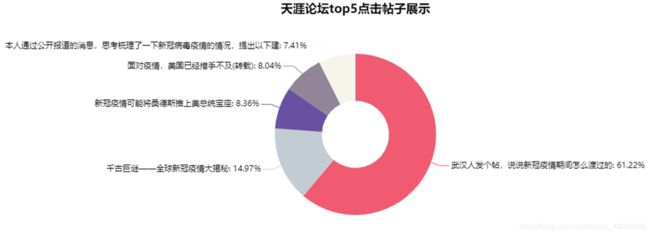

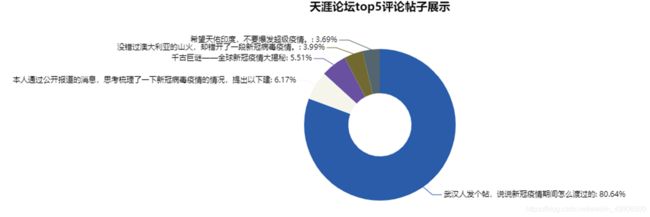

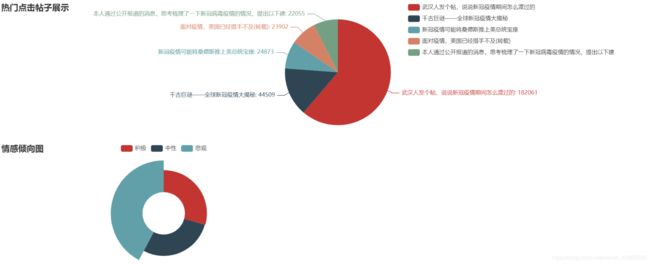

2.4热帖分析

通过统计点击量、回复量排名靠前的热门帖子进行分析发现热点问题

import json

from collections import Counter

from pyecharts import options as opts

from pyecharts.charts import Pie

from pyecharts.faker import Faker

with open("data.json",'r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

dic_reply = {}

dic_click = {}

for dic in load_dict:

title = dic['title']

replycount = dic['replycount']

clickcount = dic['clickcount']

dic_reply[title] = replycount

dic_click[title] = clickcount

d_reply = sorted(dic_reply.items(), key=lambda d:int(d[1]), reverse=True)

d_click = sorted(dic_click.items(), key=lambda d:int(d[1]), reverse=True)

columns_reply = [i[0] for i in d_reply[0:5]]

columns_click = [i[0] for i in d_click[0:5]]

data_reply = [i[1] for i in d_reply[0:5]]

data_click = [i[1] for i in d_click[0:5]]

print(columns_reply)

print(data_reply)

print(columns_click)

print(data_click)

c = (

Pie(init_opts=opts.InitOpts(width="1440px", height="300px"))

.add(

"",

[

list(z)

for z in zip(

columns_reply,

data_reply,

)

],

center=["40%", "50%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="top5回复帖子饼图"),

legend_opts=opts.LegendOpts(type_="scroll", pos_left="60%", orient="vertical"),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

.render("top5_reply.html")

)

d = (

Pie(init_opts=opts.InitOpts(width="1440px", height="300px"))

.add(

"",

[

list(z)

for z in zip(

columns_click,

data_click,

)

],

center=["40%", "50%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="top5点击帖子饼图"),

legend_opts=opts.LegendOpts(type_="scroll", pos_left="60%", orient="vertical"),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

.render("top5_click.html")

)

结果如下:

2.5热门用户分析

对于发帖数和回帖数排名靠前的用户进行统计分析,以发现关键用户。

在实现过程中可以对关键用户个人信息使用爬虫提取,在爬取过程中使用cookies进行绕过。

热门博主代码如下

import requests

from bs4 import BeautifulSoup

import json

from collections import Counter

from pyecharts import options as opts

from pyecharts.charts import Pie

cookie = 'time=ct=1610555536.729; Hm_lvt_80579b57bf1b16bdf88364b13221a8bd=1609228044,1610554556,1610555141,1610555254; __guid=1434027248; __ptime=1610555531697; ADVC=392bceef4d2e93; ASL=18641,0oiia,3d9e983d3d9e98c13d9e942a3d9ed050; __auc=55701b92176ad3bce02e5e424be; __guid2=1434027248; Hm_lvt_bc5755e0609123f78d0e816bf7dee255=1609228049,1610326081,1610497121,1610551336; tianya1=126713,1610555147,3,28373; Hm_lpvt_bc5755e0609123f78d0e816bf7dee255=1610555532; ADVS=3935d4945646c6; __cid=CN; __asc=726f17c2176fc883ee50ffe5d05; Hm_lpvt_80579b57bf1b16bdf88364b13221a8bd=1610555254; __u_a=v2.2.0; sso=r=130844634&sid=&wsid=AADC8CA26685270609A71D68B0C3B5D3; deid=aa235e182e6a467e38046812fb4bbf69; user=w=ty%u005f%u6768%u67f3296&id=143507379&f=1; temp=k=286001744&s=&t=1610555522&b=4e19d5da79254fd0c322681054220b5f&ct=1610555522&et=1613147522; temp4=rm=3d4dbe37ab69472242e3bf28296f30b0; u_tip=; bbs_msg=1610555532971_143507379_0_0_0_0; ty_msg=1610555533265_143507379_3_0_0_0_0_0_3_0_0_0_0_0'

cookieDict = {}

cookies = cookie.split("; ")

for co in cookies:

co = co.strip()

p = co.split('=')

value = co.replace(p[0] + '=', '').replace('"', '')

cookieDict[p[0]] = value

with open("data.json",'r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

list_1 = []

for dic in load_dict:

main_id = dic['main_id']

list_1.append(main_id)

dic = dict(Counter(list_1))

d = sorted(dic.items(), key=lambda d:d[1],reverse=True)

# print(d[0:10])

columns = [i[0] for i in d[0:10]]

data = [i[1] for i in d[0:10]]

name = []

for id in columns:

r = requests.get("http://www.tianya.cn/"+id,cookies=cookieDict)

soup = BeautifulSoup(r.text,'html.parser')

name.append(soup.find('h2').a.text)

c = (

Pie()

.add(

"",

[list(z) for z in zip(name, data)],

radius=["40%", "75%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="Pie-Radius"),

legend_opts=opts.LegendOpts(orient="vertical", pos_top="15%", pos_left="2%",is_show=False),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

.render("top10_active_blogger.html")

)

热门答主代码如下

import json

from collections import Counter

from pyecharts.charts import Pie

import requests

from bs4 import BeautifulSoup

from pyecharts import options as opts

with open('data.json','r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

list_answer = []

for dic in load_dict:

comments_replys = dic['comments_replys']

if comments_replys is not None:

for d in comments_replys:

try:

if d['comment']:

for k,v in d['comment'].items():

list_answer.append(k)

print(k)

if d['reply']:

for k,v in d['reply'].items():

list_answer.append(k)

print(k)

except:

continue

dic_answer = Counter(list_answer).most_common(10)

columns_answer = [i[0] for i in dic_answer]

data_answer = [i[1] for i in dic_answer]

print(columns_answer)

name_answer = []

for id in columns_answer:

r = requests.get("http://www.tianya.cn/"+id)

soup = BeautifulSoup(r.text,'html.parser')

name_answer.append(soup.find('h2').a.text)

c = (

Pie()

.add(

"",

[list(z) for z in zip(name_answer, data_answer)],

radius=["40%", "75%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="活跃用户展示"),

legend_opts=opts.LegendOpts(orient="vertical", pos_top="15%", pos_left="2%",is_show=False),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

)

c.render("top10_active_answer.html")

结果展示如下

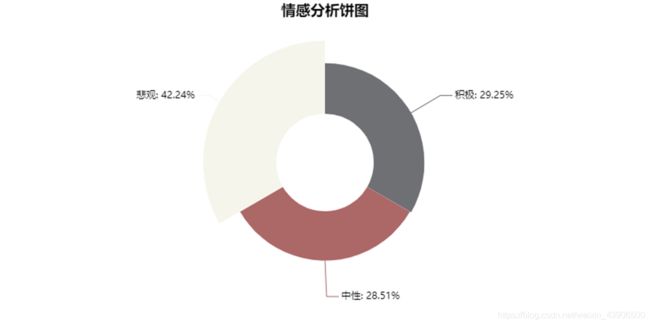

2.6情感分析

情感分析主要分为两个方向,第一个是由情感词典和句法结构来做的。第二个是根据机器学习中深度学习的方法来做的(例如LSTM、CNN、LSTM+CNN、BERT+CNN等)。由于算力和时间限制,我们组采用情感词典方法。首先使用了基于BosonNLP情感词典的情感分析,效果不是很好。而后采用github开源的多分类情感词典hellonlp/sentiment_analysis_dict,分类效果较好

import json

import pandas as pd

import jieba

from sentiment_analysis_dict.networks import SentimentAnalysis

from pyecharts import options as opts

from pyecharts.charts import Pie

SA = SentimentAnalysis()

def predict(sent):

result = 0

score1,score0 = SA.normalization_score(sent)

if score1 == score0:

result = 0

elif score1 > score0:

result = 1

elif score1 < score0:

result = -1

return result

def getscore(text):

df = pd.read_table(r"BosonNLP.txt", sep=" ", names=['key', 'score'])

key = df['key'].values.tolist()

score = df['score'].values.tolist()

# jieba分词

segs = jieba.lcut(text, cut_all=False) # 返回list

# 计算得分

score_list = [score[key.index(x)] for x in segs if (x in key)]

return sum(score_list)

with open('data.json','r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

positive = 0

negative = 0

neutral = 0

for dic in load_dict:

comments_replys = dic['comments_replys']

if comments_replys is not None:

for d in comments_replys:

try:

if d['comment']:

for k,v in d['comment'].items():

n = predict(v)

if(n > 0):

positive = positive + 1

elif(n==0):

neutral = neutral + 1

else:

negative = negative + 1

if d['reply']:

for k,v in d['reply'].items():

n = predict(v)

if (n > 0):

positive = positive + 1

elif (n == 0):

neutral = neutral + 1

else:

negative = negative + 1

except:

continue

attr = ["积极", "中性", "悲观"]

v = [positive,neutral,negative]

c = (

Pie()

.add(

"",

[list(z) for z in zip(attr, v)],

radius=["30%", "75%"],

center=["25%", "50%"],

rosetype="radius",

label_opts=opts.LabelOpts(is_show=False),

)

.set_global_opts(title_opts=opts.TitleOpts(title="情感分析饼图"))

.render("emotion_analysis.html")

)结果如下:

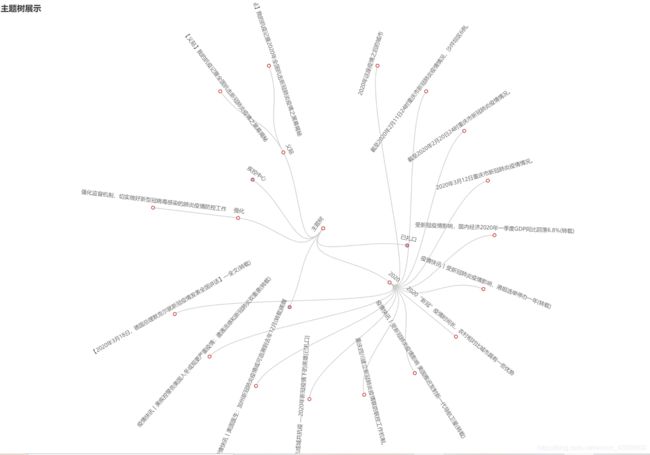

2.7主题发现

首先,使用jieba分词;而后,去除停用词;构建词向量空间;将单词出现的次数转化为权值(TF-IDF);用K-means算法进行聚类

import json

import jieba

import numpy as np

from sklearn.cluster import KMeans

from collections import Counter

from pyecharts import options as opts

from pyecharts.charts import Tree

stopwords = [line.strip() for line in open("stopword.txt", 'r',encoding="utf-8").readlines()]

stopwords_other = ['\n',' ']

stopwords.extend(stopwords_other)

with open("data.json",'r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

title_list = []

title_word_list = set()

for dic in load_dict:

title = dic['title']

if title in title_list:

continue

word_cut = jieba.lcut(title)

word_cut = [i for i in word_cut if i not in stopwords]

title_word_list |= set(word_cut)

title_list.append(title)

title_list = list(set(title_list))

title_word_list = list(title_word_list)

vsm_list = []

for title in title_list:

temp_vector = []

# print(title)

for word in title_word_list:

temp_vector.append(title.count(word)*1.0)

vsm_list.append(temp_vector)

docs_matrix = np.array(vsm_list)

column_sum = [float(len(np.nonzero(docs_matrix[:,i])[0]))

for i in range(docs_matrix.shape[1])]

column_sum = np.array(column_sum)

column_sum = docs_matrix.shape[0] / column_sum

idf = np.log(column_sum)

idf = np.diag(idf)

tfidf = np.dot(docs_matrix,idf)

clf = KMeans(n_clusters=20)

clf.fit(tfidf)

centers = clf.cluster_centers_

labels = clf.labels_

# print(labels)

list_divide = {}

for i in range(len(labels)):

id = str(labels[i])

if id in list_divide.keys():

list_divide[id].append(title_list[i])

else:

list_divide[id] = []

list_divide_plus = {}

word_used = []

key_word = []

for k,v in list_divide.items():

word = []

for i in v:

word.extend(jieba.lcut(i))

word = [i for i in word if i not in stopwords]

word_count = Counter(word)

word = word_count.most_common(20)

for i in word:

if(i[0] not in word_used):

key_word.append(i[0])

list_divide_plus[i[0]] = v

word_used.append(i[0])

break

dic_data = []

dic_tree = {}

dic_tree["name"] = "主题树"

tree_list = []

for k,v in list_divide_plus.items():

dic = {}

dic["name"] = k

list = []

for v1 in v:

dic1 = {}

dic1["name"] = v1

list.append(dic1)

dic["children"] = list

tree_list.append(dic)

dic_tree["children"] = tree_list

dic_data.append(dic_tree)

# print(dic_data)

c = (

Tree()

.add("", dic_data)

.set_global_opts(title_opts=opts.TitleOpts(title="主题树展示"))

.render("subject_classification.html")

)

结果如下

3.高级汇总

主要代码分为三个部分:

1是爬虫爬取部分;

2是数据提取部分;

3是web flask可视化展示部分

后续可对三部分进行完整整合,增加爬取模块界面和数据上传界面,从而实现完全自动化对天涯论坛的特定板块实施舆情分析

3.1数据提取部分完整代码

import requests

from bs4 import BeautifulSoup

import json

import jieba

from sentiment_analysis_dict.networks import SentimentAnalysis

import numpy as np

from sklearn.cluster import KMeans

from collections import Counter

def predict(sent,SA):

"""

1: positif

0: neutral

-1: negatif

"""

result = 0

score1,score0 = SA.normalization_score(sent)

if score1 == score0:

result = 0

elif score1 > score0:

result = 1

elif score1 < score0:

result = -1

return result

#去除停用词

def get_stopwords():

stopwords = [line.strip() for line in open("stopword.txt", 'r',encoding="utf-8").readlines()]

stopwords_other = ['\n',' ']

stopwords.extend(stopwords_other)

return stopwords

def get_stopwords1():

stopwords = [line.strip() for line in open("stopword.txt", 'r',encoding="utf-8").readlines()]

stopwords_other = ['\n',' ','疫情','新冠','肺炎','转载','美国','抗击','防控','中国']

stopwords.extend(stopwords_other)

return stopwords

def get_all_data():

SA = SentimentAnalysis()

cookie = 'time=ct=1610555536.729; Hm_lvt_80579b57bf1b16bdf88364b13221a8bd=1609228044,1610554556,1610555141,1610555254; __guid=1434027248; __ptime=1610555531697; ADVC=392bceef4d2e93; ASL=18641,0oiia,3d9e983d3d9e98c13d9e942a3d9ed050; __auc=55701b92176ad3bce02e5e424be; __guid2=1434027248; Hm_lvt_bc5755e0609123f78d0e816bf7dee255=1609228049,1610326081,1610497121,1610551336; tianya1=126713,1610555147,3,28373; Hm_lpvt_bc5755e0609123f78d0e816bf7dee255=1610555532; ADVS=3935d4945646c6; __cid=CN; __asc=726f17c2176fc883ee50ffe5d05; Hm_lpvt_80579b57bf1b16bdf88364b13221a8bd=1610555254; __u_a=v2.2.0; sso=r=130844634&sid=&wsid=AADC8CA26685270609A71D68B0C3B5D3; deid=aa235e182e6a467e38046812fb4bbf69; user=w=ty%u005f%u6768%u67f3296&id=143507379&f=1; temp=k=286001744&s=&t=1610555522&b=4e19d5da79254fd0c322681054220b5f&ct=1610555522&et=1613147522; temp4=rm=3d4dbe37ab69472242e3bf28296f30b0; u_tip=; bbs_msg=1610555532971_143507379_0_0_0_0; ty_msg=1610555533265_143507379_3_0_0_0_0_0_3_0_0_0_0_0'

cookieDict = {}

cookies = cookie.split("; ")

for co in cookies:

co = co.strip()

p = co.split('=')

value = co.replace(p[0] + '=', '').replace('"', '')

cookieDict[p[0]] = value

with open("明星.json",'r',encoding='utf-8') as load_f:

load_dict = json.load(load_f)

stopwords = get_stopwords()

stopwords1 = get_stopwords1()

print(type(stopwords))

print(type(stopwords1))

print(stopwords)

print(stopwords1)

dic_reply = {}

dic_click = {}

list_time = []

list_word = []

dic_word = {}

list_id = []

list_answer = []

positive = 0

negative = 0

neutral = 0

title_list = []

title_word_list = set()

for dic in load_dict:

# show 5 top10帖主展示

main_id = dic['main_id']

list_id.append(main_id)

# show 5 top10贴主展示

dic_main_id = Counter(list_id).most_common(10)

columns_main_id = [i[0] for i in dic_main_id]

data_main_id = [i[1] for i in dic_main_id]

name_main_id = []

for id in columns_main_id:

l_main_id = []

r = requests.get("http://www.tianya.cn/" + id,cookies=cookieDict)

soup = BeautifulSoup(r.text, 'html.parser')

# name_main_id.append(soup.find('h2').a.text)

grade = soup.find_all("div",class_='ds')[0].a.text

data = soup.find_all("div", class_='link-box')

userinfo = soup.find_all("div", class_='userinfo')

follow = data[0].p.a.text

fun = data[1].p.a.text

date = soup.select('#home_wrapper > div.left-area > div:nth-child(1) > div.userinfo > p:nth-child(2)')

time = date[0].text.strip("注册日期")

l_main_id.append(soup.find('h2').a.text)

l_main_id.append(grade)

l_main_id.append(follow)

l_main_id.append(fun)

l_main_id.append(time)

name_main_id.append(l_main_id)

print("5.top10贴主展示", name_main_id, data_main_id)

for dic in load_dict:

# show 1 获取发帖时间,计算随时间变化发帖数量及关键字走势

time_1 = dic['time']

loc = time_1.rfind('-')

list_time.append(time_1[0:7])

article = dic['article']

seg_list = jieba.lcut(article)

month = time_1[0:7]

if month in dic_word.keys():

dic_word[month].extend(seg_list)

else:

dic_word[month] = []

# show 2 获取所有帖子的词云图

list_word.extend(seg_list)

# show 3_4 top5评论点击帖子展示

title = dic['title']

replycount = dic['replycount']

clickcount = dic['clickcount']

dic_reply[title] = replycount

dic_click[title] = clickcount

# show 8 文章主题分类展示

# stopwords1= stopwords.extend(["疫情","防控"])

word_cut = jieba.lcut(title)

word_cut = [i for i in word_cut if i not in stopwords1]

title_word_list |= set(word_cut)

title_list.append(title)

# show 6 top10答主展示

comments_replys = dic['comments_replys']

if comments_replys is not None:

for d in comments_replys:

try:

if d['comment']:

for k, v in d['comment'].items():

list_answer.append(k)

# show 7 用户评论情感分析展示

n = predict(v,SA)

if (n > 0):

positive = positive + 1

elif (n == 0):

neutral = neutral + 1

else:

negative = negative + 1

if d['reply']:

for k, v in d['reply'].items():

list_answer.append(k)

# show 7 用户评论情感分析展示

n = predict(v,SA)

if (n > 0):

positive = positive + 1

elif (n == 0):

neutral = neutral + 1

else:

negative = negative + 1

except:

continue

# show 2 获取所有帖子的词云图

list_1 = [i for i in list_word if i not in stopwords]

cloud_word = Counter(list_1).most_common(100)

print("2.词云数据",cloud_word)

# show 3 top5评论点击帖子展示

d_reply = sorted(dic_reply.items(), key=lambda d: int(d[1]), reverse=True)

d_click = sorted(dic_click.items(), key=lambda d: int(d[1]), reverse=True)

columns_reply = [i[0] for i in d_reply[0:5]]

columns_click = [i[0] for i in d_click[0:5]]

data_reply = [i[1] for i in d_reply[0:5]]

data_click = [i[1] for i in d_click[0:5]]

print("3_4.top5评论点击",columns_reply,data_reply,columns_click,data_click)

# show 7 用户评论情感分析展示

emotion_column = ["积极", "中性", "悲观"]

emotion_data = [positive, neutral, negative]

print("7.用户评论情感分析展示", emotion_column, emotion_data)

# show 1 获取发帖时间,计算随时间变化发帖数量及关键字走势

dic = dict(Counter(list_time))

d = sorted(dic.items(), key=lambda d:d[0])

key_word_used = []

key_word = []

le = len(d)

# print(list_time)

# print(d)

# print(le)

for k in d:

m = k[0]

list_1 = [i for i in dic_word[m] if i not in stopwords]

word_count = Counter(list_1)

word_list = word_count.most_common(le)

# print(word_list)

for i in word_list:

if(i[0] not in key_word_used):

key_word.append(i[0])

key_word_used.append(i[0])

break

columns_article = [i[0] for i in d]

data_article = [i[1] for i in d]

columns_article_plus = []

print(columns_article)

print(key_word)

for i in range(len(columns_article)):

c1 = columns_article[i].find('-')

# print(c1)

# print(columns_article[i][c1+1:])

# print(key_word[i])

# m = columns_article[i][c1+1:] + '(' + key_word[i] + ')'

m = columns_article[i] + '(' + key_word[i] + ')'

columns_article_plus.append(m)

print("1.发帖数量变化",columns_article_plus,data_article)

# show 8 文章主题分类展示

title_list = list(set(title_list))

title_word_list = list(title_word_list)

vsm_list = []

for title in title_list:

temp_vector = []

# print(title)

for word in title_word_list:

temp_vector.append(title.count(word) * 1.0)

vsm_list.append(temp_vector)

docs_matrix = np.array(vsm_list)

column_sum = [float(len(np.nonzero(docs_matrix[:, i])[0])) for i in range(docs_matrix.shape[1])]

column_sum = np.array(column_sum)

column_sum = docs_matrix.shape[0] / column_sum

idf = np.log(column_sum)

idf = np.diag(idf)

tfidf = np.dot(docs_matrix, idf)

clf = KMeans(n_clusters=20)

clf.fit(tfidf)

centers = clf.cluster_centers_

labels = clf.labels_

# print(labels)

list_divide = {}

for i in range(len(labels)):

id = str(labels[i])

if id in list_divide.keys():

list_divide[id].append(title_list[i])

else:

list_divide[id] = []

# print(list_divide)

list_divide_plus = {}

word_used = []

key_word = []

for k, v in list_divide.items():

word = []

for i in v:

word.extend(jieba.lcut(i))

word = [i for i in word if i not in stopwords1]

word_count = Counter(word)

word = word_count.most_common(20)

for i in word:

if (i[0] not in word_used):

key_word.append(i[0])

list_divide_plus[i[0]] = v

word_used.append(i[0])

break

dic_data = []

dic_tree = {}

dic_tree["name"] = "主题树"

tree_list = []

for k, v in list_divide_plus.items():

dic = {}

dic["name"] = k

list_1 = []

for v1 in v:

dic1 = {}

dic1["name"] = v1

list_1.append(dic1)

dic["children"] = list_1

tree_list.append(dic)

dic_tree["children"] = tree_list

dic_data.append(dic_tree)

print("8.文章主题分类展示",dic_data)

# show 6 top10答主展示

dic_answer = Counter(list_answer).most_common(10)

columns_answer = [i[0] for i in dic_answer]

data_answer = [i[1] for i in dic_answer]

name_answer = []

for id in columns_answer:

l_main_id = []

r = requests.get("http://www.tianya.cn/" + id,cookies=cookieDict)

soup = BeautifulSoup(r.text, 'html.parser')

# name_main_id.append(soup.find('h2').a.text)

grade = soup.find_all("div",class_='ds')[0].a.text

data = soup.find_all("div", class_='link-box')

userinfo = soup.find_all("div", class_='userinfo')

follow = data[0].p.a.text

fun = data[1].p.a.text

date = soup.select('#home_wrapper > div.left-area > div:nth-child(1) > div.userinfo > p:nth-child(2)')

time = date[0].text.strip("注册日期")

l_main_id.append(soup.find('h2').a.text)

l_main_id.append(grade)

l_main_id.append(follow)

l_main_id.append(fun)

l_main_id.append(time)

name_answer.append(l_main_id)

print("6.top10答主展示", name_answer, data_answer)

return columns_article_plus,data_article,\

cloud_word,\

columns_reply,data_reply,\

columns_click,data_click,\

name_main_id, data_main_id,\

name_answer, data_answer,\

emotion_column, emotion_data,\

dic_data

if __name__ == "__main__":

print(get_all_data())

3.2flask web界面展示部分

from jinja2 import Markup, Environment, FileSystemLoader

from pyecharts.globals import CurrentConfig

from flask import Flask, render_template

import all_data

from pyecharts.charts import Bar,Pie

from pyecharts.components import Table

from wordcloud import WordCloud

from pyecharts import options as opts

from pyecharts.charts import Tree

from pyecharts.options import ComponentTitleOpts

import json

from pyecharts.charts import WordCloud

CurrentConfig.GLOBAL_ENV = Environment(loader=FileSystemLoader("./templates"))

columns_article_plus, data_article, \

word_cloud, \

columns_reply, data_reply, \

columns_click, data_click, \

name_main_id, data_main_id, \

name_answer, data_answer, \

emotion_column, emotion_data, \

dic_data = all_data.get_all_data()

name_blogger = [i[0] for i in name_main_id]

name_answer_1 = [i[0] for i in name_answer]

app = Flask(__name__, template_folder=".")

@app.route("/")

def index():

pos = emotion_data[0]

neu = emotion_data[1]

neg = emotion_data[2]

pos_0 = pos / (pos + neu + neg)

pos_1 = neu / (pos + neu + neg)

pos_2 = neg / (pos + neu + neg)

return render_template('templates/index.html', pos=pos, neu=neu, neg=neg, pos_0=pos_0, pos_1=pos_1, pos_2=pos_2)

@app.route("/get_article_count_plus")

def get_article_count_plus():

c = (

Bar()

.add_xaxis(columns_article_plus)

.add_yaxis("发帖量", data_article)

.set_global_opts(title_opts=opts.TitleOpts(title="发帖量及关键词统计", subtitle="柱状图"))

)

return c.dump_options_with_quotes()

@app.route("/get_word_cloud")

def get_word_cloud():

d = (

WordCloud()

.add(series_name="关键词分析", data_pair=word_cloud, word_size_range=[16, 66])

.set_global_opts(

title_opts=opts.TitleOpts(title="词云图"),

)

)

return d.dump_options_with_quotes()

@app.route("/get_top_reply")

def get_top_reply():

e = (

Pie(init_opts=opts.InitOpts(width="1440px", height="300px"))

.add(

"",

[

list(z)

for z in zip(

columns_reply,

data_reply,

)

],

center=["50%", "50%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="热门回复帖子展示"),

legend_opts=opts.LegendOpts(type_="scroll", pos_left="60%", orient="vertical"),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

)

return e.dump_options_with_quotes()

@app.route("/get_top_click")

def get_top_click():

f = (

Pie(init_opts=opts.InitOpts(width="1440px", height="300px"))

.add(

"",

[

list(z)

for z in zip(

columns_click,

data_click,

)

],

center=["50%", "50%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="热门点击帖子展示"),

legend_opts=opts.LegendOpts(type_="scroll", pos_left="60%", orient="vertical"),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

)

return f.dump_options_with_quotes()

@app.route("/get_active_blogger")

def get_active_blogger():

c = (

Pie()

.add(

"",

[list(z) for z in zip(name_blogger, data_main_id)],

radius=["40%", "75%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="活跃博主展示"),

legend_opts=opts.LegendOpts(orient="vertical", pos_top="15%", pos_left="2%",is_show=False),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

# .render("5.top10_active_blogger.html")

)

return c.dump_options_with_quotes()

@app.route("/get_active_answer")

def get_active_answer():

c = (

Pie()

.add(

"",

[list(z) for z in zip(name_answer_1, data_answer)],

radius=["40%", "75%"],

)

.set_global_opts(

title_opts=opts.TitleOpts(title="活跃用户展示"),

legend_opts=opts.LegendOpts(orient="vertical", pos_top="15%", pos_left="2%",is_show=False),

)

.set_series_opts(label_opts=opts.LabelOpts(formatter="{b}: {c}"))

)

return c.dump_options_with_quotes()\

@app.route("/get_emotion")

def get_emotion():

c = (

Pie()

.add(

"",

[list(z) for z in zip(emotion_column, emotion_data)],

radius=["30%", "75%"],

center=["50%", "50%"],

rosetype="radius",

label_opts=opts.LabelOpts(is_show=False),

)

.set_global_opts(title_opts=opts.TitleOpts(title="情感倾向图"))

)

return c.dump_options_with_quotes()

@app.route("/get_subject")

def get_subject():

c = (

Tree()

.add("", dic_data, collapse_interval=2, layout="radial")

.set_global_opts(title_opts=opts.TitleOpts(title="主题树展示"))

)

return c.dump_options_with_quotes()

@app.route("/get_blogger_data")

def get_blogger_data():

dic = {}

for i in range(0,len(name_main_id)):

value = [data_main_id[i]]

value.extend(name_main_id[i][1:])

dic[name_main_id[i][0]] = value

return dic

@app.route("/get_answer_data")

def get_answer_data():

dic = {}

for i in range(0, len(name_answer)):

value = [data_answer[i]]

value.extend(name_answer[i][1:])

dic[name_answer[i][0]] = value

return dic

if __name__ == "__main__":

app.run(debug=False)3.3最终展示效果

1.新冠疫情关键词舆情展示结果

2.明星关键词舆情展示结果