My C# Chinese word segmentation for Lucene.Net

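After a day of research I finally finished a Chinese word segmentation method that works with Lucene.Net. It was a bit complicated, but I got it done and I feel quite pleased with it, so I'm posting it here to share with everyone!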

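With the basic Chinese segmentation method in place, I wrapped it in a class that derives from Lucene's `Analyzer`.

The `ChineseAnalyzer` code needs little explanation: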

```csharp
using System;
using Lucene.Net.Analysis;
using Lucene.Net.Analysis.Standard;

namespace Lucene.Fanswo
{
    /// <summary>
    /// Analyzer for mixed Chinese/English text, built on ChineseTokenizer.
    /// </summary>
    public class ChineseAnalyzer : Analyzer
    {
        //private System.Collections.Hashtable stopSet;

        // English stop words plus a few Chinese ones; tokens are lowercased before this list is applied
        public static readonly System.String[] CHINESE_ENGLISH_STOP_WORDS = new System.String[] { "a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in", "into", "is", "it", "no", "not", "of", "on", "or", "s", "such", "t", "that", "the", "their", "then", "there", "these", "they", "this", "to", "was", "will", "with", "我", "我们" };

        /// <summary>Constructs a <see cref="ChineseTokenizer"/> filtered by a
        /// <see cref="StandardFilter"/>, a <see cref="LowerCaseFilter"/> and a <see cref="StopFilter"/>.
        /// </summary>
        public override TokenStream TokenStream(System.String fieldName, System.IO.TextReader reader)
        {
            TokenStream result = new ChineseTokenizer(reader);
            result = new StandardFilter(result);
            result = new LowerCaseFilter(result);
            result = new StopFilter(result, CHINESE_ENGLISH_STOP_WORDS);
            return result;
        }
    }
}
```
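The analyzer chain above lowercases every token and then drops stop words. The order matters: lowercasing first is what lets `"The"` match the stop word `"the"`. The following is a self-contained sketch of that behaviour over plain strings instead of Lucene `Token` objects; the `FilterChainSketch` class and its `Apply` method are hypothetical names for illustration, not part of Lucene.Net. `StandardFilter` is left out because it only rewrites tokens that `StandardTokenizer` types as acronyms or possessives, and tokens from `ChineseTokenizer` keep the default type.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: mimics LowerCaseFilter followed by StopFilter on plain strings.
public static class FilterChainSketch
{
    // A subset of ChineseAnalyzer's stop words, for illustration only.
    static readonly HashSet<string> StopWords = new HashSet<string>(
        new[] { "a", "an", "and", "the", "of", "is", "我", "我们" },
        StringComparer.Ordinal);

    public static List<string> Apply(IEnumerable<string> tokens)
    {
        return tokens
            .Select(t => t.ToLowerInvariant())   // LowerCaseFilter step
            .Where(t => !StopWords.Contains(t))  // StopFilter step (exact, case-sensitive match)
            .ToList();
    }
}
```

For example, `FilterChainSketch.Apply(new[] { "我们", "The", "Lucene", "索引" })` keeps only `lucene` and `索引`.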

Implementation of the `ChineseTokenizer` class:

It matches characters forward (left to right) against a dictionary and returns a Lucene token stream.
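`WordTree` and its `LoadDict` method are not shown in this post. Judging from how `Next()` uses `WordTree.chartable` (it looks up a one-character string, then casts the value to `Hashtable` to descend one level), the dictionary appears to be a character tree of nested hashtables. A minimal sketch of building such a tree, assuming that layout; `DictTreeSketch`, `Build`, and the `EndMarker` key are hypothetical and not the author's code:

```csharp
using System.Collections;
using System.Collections.Generic;

// Hypothetical sketch of a nested-Hashtable dictionary tree; not the author's WordTree.
public static class DictTreeSketch
{
    // Hypothetical sentinel key meaning "a dictionary word ends at this node".
    // An object key cannot collide with the one-character string keys.
    public static readonly object EndMarker = new object();

    public static Hashtable Build(IEnumerable<string> words)
    {
        Hashtable root = new Hashtable();
        foreach (string word in words)
        {
            Hashtable node = root;
            foreach (char c in word)
            {
                string key = c.ToString();       // one-character string keys, as used by Next()
                if (!node.Contains(key))
                    node[key] = new Hashtable(); // create the next level on demand
                node = (Hashtable)node[key];     // descend one level
            }
            node[EndMarker] = true;              // mark that a complete word ends here
        }
        return root;
    }
}
```

With the words `长春` and `长春市`, the root holds only `长`; the `长 → 春` node carries the end marker and also has a child `市`.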

```csharp
using System;
using System.Collections;
using System.IO;
using Lucene.Net.Analysis;

namespace Lucene.Fanswo
{
    class ChineseTokenizer : Tokenizer
    {
        private int offset = 0, bufferIndex = 0, dataLen = 0; // where the next token starts, start of the current token, text length
        private int start; // current scan position

        /// <summary>
        /// The full text being tokenized
        /// </summary>
        private string text;

        /// <summary>
        /// Dictionary tree; loaded once in the constructor instead of on every call to Next()
        /// </summary>
        private readonly WordTree tree = new WordTree();

        /// <summary>
        /// Time spent on segmentation (not updated yet)
        /// </summary>
        public double TextSeg_Span = 0;

        /// <summary>Constructs a tokenizer for this Reader. </summary>
        public ChineseTokenizer(TextReader reader)
        {
            this.input = reader;
            text = input.ReadToEnd();
            dataLen = text.Length;
            // Load the dictionary and build it as a tree (WordTree is not shown in this post)
            tree.LoadDict();
        }

        /// <summary>Segments the text and returns the next token in the stream,
        /// or null when the stream is exhausted.
        /// </summary>
        public override Token Next()
        {
            Hashtable t_chartable = WordTree.chartable; // root of the dictionary tree
            string ReWord = "";
            string char_s;

            // Skip whitespace so the token's start offset points at its first character
            start = offset;
            while (start < dataLen && char.IsWhiteSpace(text[start]))
                start++;
            bufferIndex = start;

            while (true)
            {
                // End of text: emit what has been matched so far, or null if nothing is left
                if (start >= dataLen)
                {
                    offset = dataLen;
                    return ReWord == "" ? null : new Token(ReWord, bufferIndex, bufferIndex + ReWord.Length);
                }

                // Take one character
                char_s = text.Substring(start, 1);

                // Whitespace ends the current word (ReWord is never empty here)
                if (char.IsWhiteSpace(text[start]))
                {
                    offset = start;
                    return new Token(ReWord, bufferIndex, bufferIndex + ReWord.Length);
                }

                // Character is not in the dictionary at the current tree level
                if (t_chartable == null || !t_chartable.Contains(char_s))
                {
                    if (ReWord == "")
                    {
                        int j = start + 1;
                        switch (tree.GetCharType(char_s))
                        {
                            case 0: // Chinese character: emit it on its own
                                ReWord += char_s;
                                break;
                            case 1: // English word: consume consecutive letters
                                while (j < dataLen && tree.GetCharType(text.Substring(j, 1)) == 1)
                                    j++;
                                ReWord += text.Substring(start, j - start);
                                break;
                            case 2: // Number: consume consecutive digits
                                while (j < dataLen && tree.GetCharType(text.Substring(j, 1)) == 2)
                                    j++;
                                ReWord += text.Substring(start, j - start);
                                break;
                            default: // Any other character: emit it on its own
                                ReWord += char_s;
                                break;
                        }
                        offset = j; // the next token starts after this one
                    }
                    else
                    {
                        offset = start; // the next token starts at the unmatched character
                    }
                    // Lucene end offsets are exclusive: start + length
                    return new Token(ReWord, bufferIndex, bufferIndex + ReWord.Length);
                }

                // Character is in the dictionary: extend the current word
                ReWord += char_s;
                // Descend into the subtree for this character (null if it has no children)
                t_chartable = t_chartable[char_s] as Hashtable;
                // Move on to the next character
                start++;
            }
        }
    }
}
```
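`Next()` follows the tree as far as the text allows and emits whatever it has walked, without checking that the walked prefix is itself a dictionary word. If the dictionary contained only `中华人民共和国`, the text `中华人民很好` would yield the non-word `中华人民`. A common formulation of forward maximum matching instead takes, at each position, the longest substring that really is a dictionary word, and falls back to a single character. The sketch below assumes a plain `HashSet<string>` dictionary and a maximum word length in place of the author's `WordTree`, and ignores whitespace, letters, and digits for brevity; `ForwardMaxMatch` and `Segment` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of forward maximum matching; not the author's WordTree-based code.
public static class ForwardMaxMatch
{
    public static List<string> Segment(string text, ISet<string> dict, int maxLen)
    {
        var result = new List<string>();
        int i = 0;
        while (i < text.Length)
        {
            // Start with the longest window allowed at this position
            int len = Math.Min(maxLen, text.Length - i);
            // Shrink it until it is a dictionary word or a single character
            while (len > 1 && !dict.Contains(text.Substring(i, len)))
                len--;
            result.Add(text.Substring(i, len));
            i += len;
        }
        return result;
    }
}
```

With the dictionary `{长春, 长春市, 市长, 春节, 致词}` and `maxLen = 3`, the test phrase `长春市长春节致词` comes out as `长春市/长春/节/致词` rather than the intended `长春/市长/春节/致词`. Pure forward matching cannot resolve this kind of overlap ambiguity, which is why the phrase is a popular segmentation test.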

Test code:

```csharp
using System;
using Analyzer = Lucene.Net.Analysis.Analyzer;
using SimpleAnalyzer = Lucene.Net.Analysis.SimpleAnalyzer;
using StandardAnalyzer = Lucene.Net.Analysis.Standard.StandardAnalyzer;
using Token = Lucene.Net.Analysis.Token;
using TokenStream = Lucene.Net.Analysis.TokenStream;

namespace MyLuceneTest
{
    class Program
    {
        [STAThread]
        public static void Main(System.String[] args)
        {
            try
            {
                Test("中华人民共和国在1949年建立,从此开始了新中国的伟大篇章。长春市长春节致词", true);
            }
            catch (System.Exception e)
            {
                System.Console.Out.WriteLine(" caught a " + e.GetType() + "\n with message: " + e.Message + e.ToString());
            }
        }

        internal static void Test(System.String text, bool verbose)
        {
            System.Console.Out.WriteLine(" Tokenizing string: " + text);
            Test(new System.IO.StringReader(text), verbose, text.Length);
        }

        internal static void Test(System.IO.TextReader reader, bool verbose, long bytes)
        {
            //Analyzer analyzer = new StandardAnalyzer();
            Analyzer analyzer = new Lucene.Fanswo.ChineseAnalyzer();
            TokenStream stream = analyzer.TokenStream(null, reader);

            // Stopwatch has much finer resolution than DateTime.Now
            System.Diagnostics.Stopwatch watch = System.Diagnostics.Stopwatch.StartNew();
            int count = 0;
            // Pull tokens until Next() returns null
            for (Token t = stream.Next(); t != null; t = stream.Next())
            {
                if (verbose)
                {
                    System.Console.Out.WriteLine("Token=" + t.ToString());
                }
                count++;
            }
            watch.Stop();

            // Elapsed time in milliseconds (a raw DateTime.Ticks difference would be in 100 ns units)
            double time = watch.Elapsed.TotalMilliseconds;
            System.Console.Out.WriteLine(time + " milliseconds to extract " + count + " tokens");
            System.Console.Out.WriteLine((time * 1000.0) / count + " microseconds/token");
            // "bytes" is the character count of the input, not its encoded size
            System.Console.Out.WriteLine((bytes * 1000.0 * 60.0 * 60.0) / (time * 1000000.0) + " megabytes/hour");
        }
    }
}
```
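The original timing subtracts two `DateTime.Ticks` values and prints the result as milliseconds, but a tick is 100 nanoseconds, so the figures are inflated by a factor of 10,000. A small sketch of the unit conversion and of the two throughput formulas used in the test, with the elapsed time already in milliseconds; `ThroughputSketch` and its method names are hypothetical.

```csharp
using System;

// Hypothetical helpers reproducing the test's arithmetic with correct units.
public static class ThroughputSketch
{
    // DateTime/TimeSpan ticks are 100 ns; TimeSpan.TicksPerMillisecond is 10,000.
    public static double TicksToMilliseconds(long ticks)
    {
        return (double)ticks / TimeSpan.TicksPerMillisecond;
    }

    // elapsedMs * 1000 converts to microseconds, then divide by the token count.
    public static double MicrosecondsPerToken(double elapsedMs, int tokenCount)
    {
        return elapsedMs * 1000.0 / tokenCount;
    }

    // Characters per millisecond, scaled to one hour (3,600,000 ms) and divided by 10^6.
    public static double MegabytesPerHour(long bytes, double elapsedMs)
    {
        return (bytes * 1000.0 * 60.0 * 60.0) / (elapsedMs * 1000000.0);
    }
}
```

For example, a tick difference of 25,000 is 2.5 ms, and processing 1,000,000 characters in 3,600,000 ms works out to 1 MB/hour.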

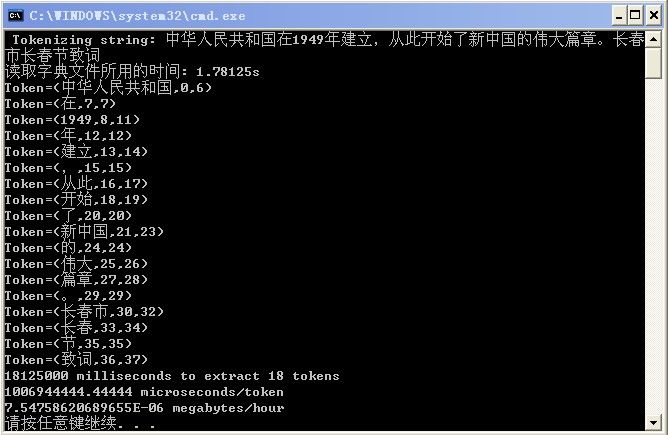

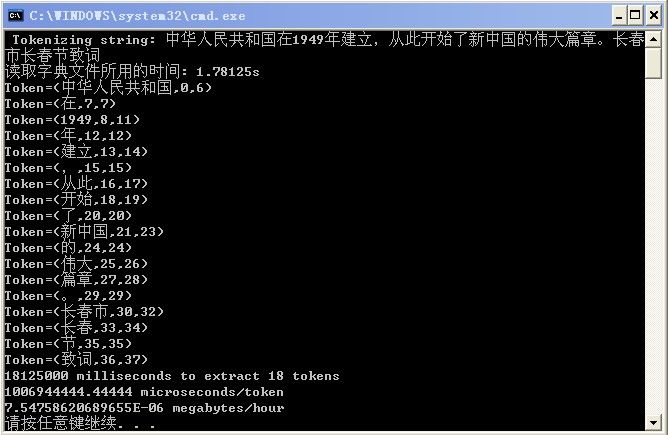

测试结果:

Done!

The segmentation speed still needs algorithmic improvement, and Chinese punctuation is not handled yet. I will keep improving it.
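`WordTree.GetCharType` is not shown either; `Next()` only relies on it returning 0 for a Chinese character, 1 for an English letter, 2 for a digit, and anything else for other characters, which are emitted as single-character tokens. One way to give punctuation its own category, so it could be recognized and skipped, is sketched below. The `CharTypeSketch` class, the value 3 for punctuation, and the use of the basic CJK Unified Ideographs block (U+4E00 to U+9FFF) as the test for "Chinese" are all assumptions, not the author's implementation.

```csharp
// Hypothetical character classifier; not the author's WordTree.GetCharType.
public static class CharTypeSketch
{
    public const int Chinese = 0, Letter = 1, Digit = 2, Punctuation = 3, Other = 4;

    public static int GetCharType(char c)
    {
        // Basic CJK Unified Ideographs only; extension blocks are not covered
        if (c >= '\u4E00' && c <= '\u9FFF') return Chinese;
        if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z')) return Letter;
        // ASCII digits and full-width digits (U+FF10..U+FF19)
        if ((c >= '0' && c <= '9') || (c >= '\uFF10' && c <= '\uFF19')) return Digit;
        // Unicode punctuation/symbol categories cover ，。、！？《》“” as well as ASCII punctuation
        if (char.IsPunctuation(c) || char.IsSymbol(c)) return Punctuation;
        return Other;
    }
}
```

The tokenizer's `switch` could then add a case for `Punctuation` that advances past the character instead of returning it as a token.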

I'm not much of a writer, so I'll let the code speak for me. Everyone, please give me your feedback. Thanks!

Source code download: http://files.cnblogs.com/harryguo/LucuneSearch.rar
