Summary

Notes on setting up Hadoop and HBase on a single machine.

References:

1. Hadoop 2.7.4 single-node installation

2. HBase environment setup

Downloads:

hadoop-2.7.7 : https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

hbase-2.1.7 : https://hbase.apache.org/downloads.html

Installing Hadoop

(Setting up Java is not covered here; configure it yourself.)

1. Extract

tar -zxvf hadoop-2.7.7.tar.gz

mkdir /usr/local/hadoop

# Move the contents, so that bin/, etc/ and share/ sit directly under /usr/local/hadoop

mv hadoop-2.7.7/* /usr/local/hadoop/

# Symlink

ln -s /usr/local/hadoop/bin/hdfs /usr/bin/hdfs

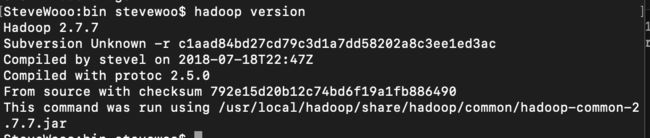

Verify:

hadoop version

2. Configuration files

- hadoop-env.sh

# Use the JAVA_HOME path of your own machine

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_202.jdk/Contents/Home

- yarn-env.sh

# Use the JAVA_HOME path of your own machine

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_202.jdk/Contents/Home

- core-site.xml

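<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
    <description>URI of HDFS</description>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
    <description>Local Hadoop temporary directory</description>
  </property>
</configuration>

The /usr/local/hadoop/tmp directory must be created beforehand.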
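The name/value pairs above follow the usual `<configuration>` / `<property>` layout of Hadoop's `*-site.xml` files. As a minimal sketch, the same content could be generated from a dictionary with Python's standard library. The helper name `render_site_xml` is hypothetical and not part of Hadoop.

```python
import xml.etree.ElementTree as ET


def render_site_xml(properties):
    """Render a {name: value} dict as Hadoop-style <configuration> XML."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    # encoding="unicode" returns a str instead of bytes
    return ET.tostring(root, encoding="unicode")


core_site = render_site_xml({
    "fs.default.name": "hdfs://localhost:9000",
    "hadoop.tmp.dir": "/usr/local/hadoop/tmp",
})
print(core_site)
```

The result is a single unindented line; it would still have to be written to `core-site.xml` in Hadoop's configuration directory by hand.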

- hdfs-site.xml

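<configuration>
  <property>
    <name>dfs.name.dir</name>
    <value>/usr/local/hadoop/data0/hadoop/hdfs/name</value>
    <description>Where the NameNode stores the HDFS namespace metadata</description>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>/usr/local/hadoop/data0/hadoop/hdfs/data</value>
    <description>Physical storage location of the data blocks on the DataNode</description>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
    <description>Number of replicas; the default is 3, and it should not exceed the number of DataNode machines</description>
  </property>
</configuration>

The /usr/local/hadoop/data0/hadoop directory must be created beforehand.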
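Since the storage directories have to exist before HDFS starts, one option is to read them back from the configuration and create them in a single step. The sketch below assumes the file uses the standard `<configuration>` / `<property>` layout; `read_site_xml` and `ensure_local_dirs` are hypothetical helper names, not Hadoop tools.

```python
import os
import xml.etree.ElementTree as ET


def read_site_xml(text):
    """Parse Hadoop-style <configuration> XML into a {name: value} dict."""
    root = ET.fromstring(text.strip())
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.findall("property")
    }


def ensure_local_dirs(config, keys):
    """Create the directories named by `keys` if they do not exist yet."""
    created = []
    for key in keys:
        path = config[key]
        os.makedirs(path, exist_ok=True)
        created.append(path)
    return created


HDFS_SITE = """
<configuration>
  <property><name>dfs.name.dir</name><value>/usr/local/hadoop/data0/hadoop/hdfs/name</value></property>
  <property><name>dfs.data.dir</name><value>/usr/local/hadoop/data0/hadoop/hdfs/data</value></property>
  <property><name>dfs.replication</name><value>1</value></property>
</configuration>
"""

config = read_site_xml(HDFS_SITE)
print(config["dfs.name.dir"])
# Uncomment to create the directories (needs write access to /usr/local):
# ensure_local_dirs(config, ["dfs.name.dir", "dfs.data.dir"])
```

All values come back as strings, so `dfs.replication` would need an explicit `int()` before any numeric comparison.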

- mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

- yarn-site.xml

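<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>192.168.31.250:8099</value>
  </property>
</configuration>

Set the webapp address to the address of your own machine.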

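3. Start Hadoop

# Format the NameNode (only needed before the first start)

bin/hdfs namenode -format

# Start the services (in Hadoop 2.x the start scripts live in sbin/, not bin/)

$HADOOP_HOME/sbin/start-all.sh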

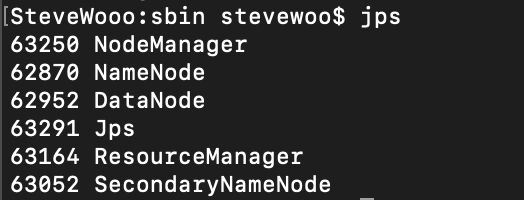

Check whether startup succeeded:
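One common way to check is the JDK's `jps` tool, which lists running Java processes as `<pid> <name>` lines. The sketch below assumes that `start-all.sh` on a single node should leave the five daemons named in `EXPECTED_DAEMONS` running; the function names are hypothetical.

```python
import subprocess

# Daemons expected after start-all.sh on a single-node Hadoop 2.x setup.
EXPECTED_DAEMONS = {
    "NameNode",
    "DataNode",
    "SecondaryNameNode",
    "ResourceManager",
    "NodeManager",
}


def running_java_processes(jps_output):
    """Return the process names found in `jps` output ('<pid> <name>' lines)."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output):
    """Return the expected daemons that do not appear in the jps output."""
    return EXPECTED_DAEMONS - running_java_processes(jps_output)


def check_with_jps():
    """Run jps (must be on the PATH) and report any missing daemon."""
    result = subprocess.run(["jps"], capture_output=True, text=True, check=True)
    missing = missing_daemons(result.stdout)
    if missing:
        print("missing: %s" % ", ".join(sorted(missing)))
    else:
        print("all Hadoop daemons are running")
    return not missing
```

Calling `check_with_jps()` after `start-all.sh` reports which daemon, if any, failed to come up; the log files under Hadoop's `logs/` directory are then the next place to look.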

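4. Using HDFS commands:

# Create a directory and list the root

hadoop fs -mkdir /xxx

hadoop fs -ls /

# Upload files

hadoop fs -put ./data/* /data/

# Delete files (quoted so that HDFS, not the local shell, expands the glob)

hadoop fs -rm -r '/data/*'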

5. Some practice scripts

mapper.py

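#!/usr/bin/env python
# Streaming mapper: emit "<word>\t1" for every word read from stdin.
import sys

for line in sys.stdin:
    line = line.strip()
    words = line.split()
    for word in words:
        # print() with a single argument runs on both Python 2 and Python 3
        print('%s\t%s' % (word, 1))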

reducer.py

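#!/usr/bin/env python
# Streaming reducer: sum the counts per word. Hadoop sorts the mapper output
# by key, so all lines for one word arrive consecutively.
import sys

current_word = None
current_count = 0

for line in sys.stdin:
    line = line.strip()
    if not line:
        continue  # skip blank lines
    word, count = line.split('\t', 1)
    count = int(count)
    if current_word == word:
        current_count += count
    else:
        # A new word starts: flush the total of the previous one
        if current_word is not None:
            print('%s\t%s' % (current_word, current_count))
        current_count = count
        current_word = word

# Flush the last word (nothing is printed for empty input)
if current_word is not None:
    print('%s\t%s' % (current_word, current_count))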

run.sh

STREAM=/usr/local/hadoop/share/hadoop/tools/lib/hadoop-streaming-2.7.7.jar
WORKPLACE=/usr/local/hadoop/workplace

# Remove the previous output; the job fails if the output directory already exists
hadoop fs -rm -r -f /data/output

# -files ships both scripts with the job; the input glob is quoted so HDFS expands it
hadoop jar $STREAM \
    -files $WORKPLACE/script/mapper.py,$WORKPLACE/script/reducer.py \
    -mapper $WORKPLACE/script/mapper.py \
    -reducer $WORKPLACE/script/reducer.py \
    -input '/data/*.json' \
    -output /data/output

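Of course, the dictionary files (*.json) must first be uploaded to the /data directory in HDFS.

Despite the extension, the *.json files are plain whitespace-separated words, like this: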

test1 test1 test1 test1 test1 test2 test1 test1 test2 test2 test2 test2 test3 test3 test3 test3 test3 test3 test3 test3
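Before submitting the job, the map, sort, and reduce steps can be tried locally on this sample line. The sketch below is a stand-in for the streaming pipeline, not the scripts themselves: it uses `itertools.groupby` in place of the reducer's manual loop, and `sorted()` in place of Hadoop's shuffle-and-sort phase.

```python
from itertools import groupby


def map_words(lines):
    """Mapper step: emit (word, 1) for every whitespace-separated token."""
    for line in lines:
        for word in line.split():
            yield word, 1


def reduce_counts(sorted_pairs):
    """Reducer step: sum the counts of consecutive pairs sharing a word."""
    for word, group in groupby(sorted_pairs, key=lambda pair: pair[0]):
        yield word, sum(count for _, count in group)


def word_count(lines):
    # Hadoop Streaming sorts mapper output by key before the reducer sees it;
    # sorted() plays that role here.
    return list(reduce_counts(sorted(map_words(lines))))


sample = [
    "test1 test1 test1 test1 test1 test2 test1 test1 test2 test2 "
    "test2 test2 test3 test3 test3 test3 test3 test3 test3 test3"
]
for word, count in word_count(sample):
    print("%s\t%s" % (word, count))
```

For the sample line this prints `test1 7`, `test2 5`, and `test3 8` (tab-separated), which is what the part files under /data/output should contain for the same input.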

Installing HBase

1. Extract

I placed the extracted folder at

$HADOOP_HOME/softs/hbase

2. Configuration

- hbase-site.xml

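<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>/usr/local/hadoop/softs/hbase</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/usr/local/hadoop/softs/hbase/zookeeper</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.master.info.port</name>
    <value>60010</value>
  </property>
</configuration>

Note that all paths here must be changed to wherever you extracted HBase.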

3. Start (run from HBase's bin directory)

bash hbase-daemon.sh start zookeeper

bash hbase-daemon.sh start master

bash hbase-daemon.sh start regionserver

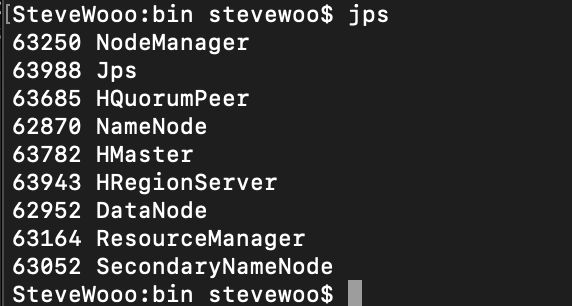

Verify that startup completed:

And check the local web console:

http://127.0.0.1:60010/master-status

4. Some data operations

- Enter the HBase shell

hbase shell

- Create the user table:

create 'user', 'info'

- Drop the table

disable 'user'

drop 'user'

- Insert, delete, update, and query data

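# Insert a user with row key 'id001' and a 'name' attribute set to 'SteveWooo'

put 'user','id001','info:name','SteveWooo'

# Read the data of user 'id001'

get 'user','id001'

# Add an 'age' attribute to user 'id001'

put 'user','id001','info:age','18'

# Update the age of user 'id001' (an update is just another put on the same cell)

put 'user','id001','info:age','19'

# Delete all data of user 'id001'

deleteall 'user','id001'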

deleteall 'user','id001'
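The shell commands above can be pictured as operations on a nested map of row key, then `family:qualifier` column, then value. The toy model below is only an in-memory illustration of that idea, not an HBase client: `ToyTable` is a hypothetical class, and it ignores the timestamps and cell versions that real HBase keeps.

```python
from collections import defaultdict


class ToyTable:
    """In-memory stand-in for one HBase table: row key -> column -> value."""

    def __init__(self, *families):
        self.families = set(families)
        self.rows = defaultdict(dict)

    def put(self, row, column, value):
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError("unknown column family: %s" % family)
        # Insert and update are the same call: a later put replaces the cell.
        self.rows[row][column] = value

    def get(self, row):
        # Return a copy so callers cannot modify the stored row.
        return dict(self.rows.get(row, {}))

    def deleteall(self, row):
        self.rows.pop(row, None)


user = ToyTable("info")                       # create 'user', 'info'
user.put("id001", "info:name", "SteveWooo")
user.put("id001", "info:age", "18")
user.put("id001", "info:age", "19")           # overwrites the previous age
print(user.get("id001"))
user.deleteall("id001")
print(user.get("id001"))
```

The model shows why the shell has no separate update command, and why a column family has to exist before data is written to it, while qualifiers such as `name` and `age` can be added freely.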