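Conventional CNN-based image classification networks such as LeNet, AlexNet and VGGNet are single-label classifiers. This post records how to adapt such a single-label model on Ubuntu 16.04 so that it performs multi-label classification. Here that specifically means recognizing fixed-length character strings; the same principle applies to CAPTCHA recognition and license plate recognition.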

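Disclaimer: the code in this post comes mainly from the following two blog posts:

- Deep Learning with Caffe in Practice (Part 1): CAPTCHA Recognition (深度学习caffe实战(一)验证码识别)

- End-to-End Recognition Without Character Segmentation in License Plate Recognition (车牌识别中的不分割字符的端到端(End-to-End)识别)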

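Below is the complete workflow for training and testing a multi-label classification model with Caffe. It consists of four parts:

- How to create a multi-label classification dataset;

- Modifying the Caffe source code to convert and read multi-label datasets;

- Modifying the AlexNet classification model for multi-label classification;

- Training and testing the model.

1. How to create a multi-label classification dataset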

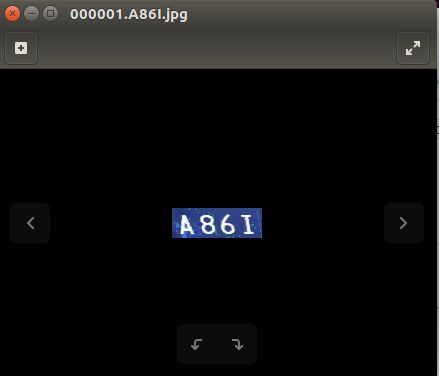

制作的数据集图片类似于:

这里的每张图片中包含4个字符(0-9或者A-Z),通过对代码的简单修改,可以扩展成任意长度。

为了简单,将车牌识别中的不分割字符的端到端(End-to-End)识别中的源代码修改简化。

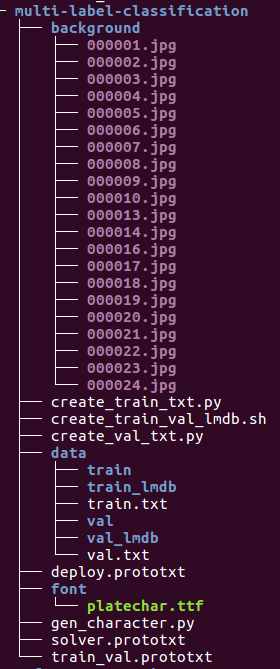

首先建立一个名为multi-label-classification的文件夹,下面的子目录/子文件如下:

其中蓝色的是文件夹,其他颜色的是文件。

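The general approach to generating multi-label character images is:

- First decide the string length, i.e. how many labels each image should contain.
- Based on the string length, decide the image size. For example, for 4-character images I took into account the width and aspect ratio of a single character, the spacing between characters and the font size, and chose an image size of 90x30 (width x height).
- Find a font in .ttf format, choosing whichever font suits your use case.
- Next, decide what backgrounds the images should use. For example, I collected a dozen or so background images in different colors (placed in the background/ folder), each 90x30; some of them are shown below.
- Finally, decide what processing to apply to the string images, such as random rotation, distortion, added noise or blur, to improve the model's ability to generalize.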
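The layout arithmetic in the second step can be checked with a few lines of Python. This is a minimal sketch that assumes the 22-pixel character cells and the 2-pixel left offset hard-coded in GenCharacter.draw(). The helper names char_slots and required_width are hypothetical and are not part of the original scripts.

```python
def char_slots(num_chars, char_width=22, offset=2):
    """Return the [start, end) column range occupied by each character cell."""
    return [(offset + i * char_width, offset + (i + 1) * char_width)
            for i in range(num_chars)]


def required_width(num_chars, char_width=22, offset=2):
    # The last cell must end inside the canvas, so width >= offset + n * char_width.
    return offset + num_chars * char_width


print(char_slots(4))       # [(2, 24), (24, 46), (46, 68), (68, 90)]
print(required_width(6))   # 134
```

Four cells end exactly at column 90, which is why the canvas is 90 pixels wide. If you extend the string length, the canvas, the background images and the loop in draw() would all need to grow to at least required_width(n) pixels.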

Here is the code of gen_character.py:

```python
# coding=utf-8
import os

import cv2
import numpy as np
from PIL import Image, ImageDraw, ImageFont

chars = ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9",
         "A", "B", "C", "D", "E", "F", "G", "H", "I", "J", "K", "L", "M",
         "N", "O", "P", "Q", "R", "S", "T", "U", "V", "W", "X", "Y", "Z"]


# Apply a random distortion that slants the characters to the left or right
# by a random offset of 4-10 pixels
def distortionRandom(img):
    w = img.shape[1]
    h = img.shape[0]
    pts1 = np.float32([[0, 0], [0, h], [w, 0], [w, h]])
    pos_or_neg = np.random.randint(0, 2)         # 0 or 1
    distortion_value = np.random.randint(4, 11)  # 4..10 pixels inclusive
    if pos_or_neg == 0:
        pts2 = np.float32([[0, 0], [distortion_value, h], [w - distortion_value, 0], [w, h]])
    else:
        pts2 = np.float32([[distortion_value, 0], [0, h], [w, 0], [w - distortion_value, h]])
    M = cv2.getPerspectiveTransform(pts1, pts2)
    dst = cv2.warpPerspective(img, M, (w, h))
    return dst


# Render one character (black on white) and resize it to a 22x28 (width x height) patch
def GenCh(f, val):
    img = Image.new("RGB", (16, 28), (255, 255, 255))
    draw = ImageDraw.Draw(img)
    draw.text((2, 0), val, (0, 0, 0), font=f, align="center")
    A = np.array(img)
    A = cv2.resize(A, (22, 28))
    return A


# GenCharacter generates fixed-length multi-label images
class GenCharacter:
    def __init__(self, font):
        # Font used to render the characters
        self.fontE = ImageFont.truetype(font, 28, 0)
        # Canvas for one multi-label image: 90x30 (width x height)
        self.img = np.array(Image.new("RGB", (90, 30), (255, 255, 255)))
        # Load every background in ./background/ (a dozen or so 90x30 images) into a list;
        # one of them is picked at random for each generated image
        self.bgs = []
        for file in os.listdir("./background/"):
            bg = cv2.resize(cv2.imread("./background/" + file), (90, 30))
            self.bgs.append(bg)

    # Write a 4-character string into the 90x30 canvas
    def draw(self, val):
        offset = 2
        for i in range(4):
            base = offset + i * 22
            self.img[0:28, base:base + 22] = GenCh(self.fontE, val[i])
        return self.img

    # Render a string on a randomly chosen background
    def generate(self, text):
        if len(text) == 4:
            fg = self.draw(text)
            fg = cv2.bitwise_not(fg)
            k = np.random.randint(0, len(self.bgs))
            com = cv2.bitwise_or(fg, self.bgs[k])
            com = distortionRandom(com)
            com = cv2.bitwise_or(com, self.bgs[k])
            return com

    # Generate a random string of length 4
    def genCharacterString(self):
        return "".join(chars[np.random.randint(0, 36)] for _ in range(4))

    # Generate batchSize images and save them to outputPath
    def genBatch(self, batchSize, outputPath):
        if not os.path.exists(outputPath):
            os.makedirs(outputPath)
        for i in range(batchSize):
            CharacterStr = self.genCharacterString()
            img = self.generate(CharacterStr)
            # File name pattern: <6-digit index>.<label string>.jpg, e.g. 000001.5GSB.jpg
            filename = os.path.join(outputPath, str(i).zfill(6) + '.' + CharacterStr + ".jpg")
            cv2.imwrite(filename, img)


if __name__ == '__main__':
    G = GenCharacter('./font/platechar.ttf')
    G.genBatch(30000, "./data/train")
    G.genBatch(10000, "./data/val")
```

Open a terminal directly in /multi-label-classification/ and run

```shell
python ./gen_character.py
```

to generate 30000 training images and 10000 validation images.
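The distortion applied by distortionRandom() is a four-point perspective warp. As an illustration (not the original code), the sketch below uses only NumPy to solve for the 3x3 matrix with bottom-right entry fixed to 1 that maps four source corners to four target corners. To our understanding, this is the mapping cv2.getPerspectiveTransform computes. The example uses the pos_or_neg == 0 case with w=90, h=30 and an assumed distortion value of 6 pixels. The names perspective_matrix and apply_homography are made up for this example.

```python
import numpy as np


def perspective_matrix(src, dst):
    """Solve the 8 linear equations for H (with H[2, 2] = 1) mapping 4 src points to 4 dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h6*x + h7*y + 1) = h0*x + h1*y + h2, rearranged to be linear in h
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        # v * (h6*x + h7*y + 1) = h3*x + h4*y + h5
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pt):
    """Map one (x, y) point through H, including the perspective divide."""
    x, y, w = H.dot(np.array([pt[0], pt[1], 1.0]))
    return x / w, y / w


w, h, d = 90, 30, 6  # d is an assumed distortion_value
src = [(0, 0), (0, h), (w, 0), (w, h)]
dst = [(0, 0), (d, h), (w - d, 0), (w, h)]  # the pos_or_neg == 0 branch
H = perspective_matrix(src, dst)
print(apply_homography(H, (0, h)))  # the bottom-left corner moves right by d
```

For these corners the solved matrix is an affine shear: u = (14/15)·x + 0.2·y and v = y. The bottom edge shifts right by 6 pixels while the top edge is squeezed to 84 pixels, which is why the characters look slanted rather than rotated.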

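How to generate the train.txt and val.txt text files

If you have used Caffe classification models, you know that besides the image files you also need train.txt and val.txt text files that list each image name with its ground-truth label(s). I wrote a simple Python script to produce them:

create_train_txt.py: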

```python
# coding=utf-8
# Generate the ground-truth label file from image names such as 000001.5GSB.jpg
import os

train_src_path = "data/train/"
train_dst_file = "data/train.txt"

if __name__ == '__main__':
    k = 0
    with open(train_dst_file, 'w') as train_file:
        for file in os.listdir(train_src_path):
            line = file
            strs = file.split('.')
            for i in range(4):
                cha = strs[1][i]
                # The ASCII codes of '0'-'9' are 48-57 and those of 'A'-'Z' are 65-90.
                # For convenience, map '0'-'9' to 0-9 (subtract 48) and 'A'-'Z' to 10-35 (subtract 55).
                if ord(cha) >= 65:
                    num = ord(cha) - 55
                else:
                    num = ord(cha) - 48
                line += ' ' + str(num)
            line += '\n'
            train_file.write(line)
            k += 1
    print('there are %d images in total' % k)
    print('done')
```

Put create_train_txt.py in /multi-label-classification/, open a terminal in that folder and run

```shell
python ./create_train_txt.py
```

This generates train.txt under /multi-label-classification/data/.

Change train to val in the paths in the code above and run the script the same way to generate val.txt.
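The character-to-class mapping in create_train_txt.py ('0'-'9' → 0-9, 'A'-'Z' → 10-35) is the same as looking each character up in the 36-character alphabet used by gen_character.py. The following is a minimal sketch of that mapping and its inverse. encode, decode and train_txt_line are hypothetical helper names, and the sketch assumes file names follow the index.LABEL.jpg pattern that gen_character.py produces.

```python
CHARS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"  # same order as the chars list in gen_character.py


def encode(text):
    """Map each character to its class index: '0'-'9' -> 0-9, 'A'-'Z' -> 10-35."""
    return [CHARS.index(c) for c in text]


def decode(labels):
    """Map class indices back to the character string."""
    return "".join(CHARS[int(i)] for i in labels)


def train_txt_line(filename):
    """Build one train.txt line (without the newline) from a name like '000001.5GSB.jpg'."""
    text = filename.split('.')[1]
    return filename + ' ' + ' '.join(str(i) for i in encode(text))


print(train_txt_line("000001.5GSB.jpg"))  # 000001.5GSB.jpg 5 16 28 11
```

Using a single alphabet string for both directions keeps generation, label files and prediction decoding consistent if the character set ever changes.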

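For example, part of train.txt looks like this:

Next, the multi-label training and validation sets need to be converted to LMDB format. This step requires modifying /caffe/tools/convert_imageset.cpp, so it is deferred until later.

2. Modifying the Caffe source code to convert and read multi-label datasets

The Caffe source code includes /caffe/tools/convert_imageset.cpp, which converts a dataset of image files into LMDB format, but it can only handle single-label datasets. To handle multi-label datasets, convert_imageset.cpp must be modified. Since its implementation relies on functions in io.hpp and io.cpp, those two files must be modified as well.

Likewise, Caffe's Data layer can only read single-label datasets, so data_layer.cpp must be modified to handle multi-label datasets.

In addition, a field must be added to caffe.proto.

In total, the following files need to be modified:

- /caffe/tools/convert_imageset.cpp

- /caffe/include/caffe/util/io.hpp

- /caffe/src/caffe/util/io.cpp

- /caffe/src/caffe/proto/caffe.proto

- /caffe/src/caffe/layers/data_layer.cpp

The original code is commented out with /* ... */, and new code is enclosed between ////////////// ...... ////////////////// lines.

Modify /caffe/tools/convert_imageset.cpp at around line 74: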

```cpp
/*
std::ifstream infile(argv[2]);
std::vector<std::pair<std::string, int> > lines;
std::string line;
size_t pos;
int label;
while (std::getline(infile, line)) {
  pos = line.find_last_of(' ');
  label = atoi(line.substr(pos + 1).c_str());
  lines.push_back(std::make_pair(line.substr(0, pos), label));
}
*/
////////////////////////////
// Each entry of train.txt/val.txt is: filename label_1 label_2 label_3 label_4
std::ifstream infile(argv[2]);
std::vector<std::pair<std::string, std::vector<float> > > lines;
std::string filename;
std::vector<float> labels(4);
while (infile >> filename >> labels[0] >> labels[1] >> labels[2] >> labels[3]) {
  lines.push_back(std::make_pair(filename, labels));
}
///////////////////////////
```
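The new C++ loop reads whitespace-separated tokens in groups of five (a file name, then four labels) and ignores line boundaries. A line with the wrong number of labels can therefore silently stop the loop or shift every following entry. The Python sketch below mirrors that parsing so a label file can be checked before conversion. It is a hypothetical helper, not part of Caffe. Unlike the C++ loop, it raises an error on a malformed file, and it stores labels as floats to match the repeated float labels field added to caffe.proto.

```python
def parse_label_file(text, num_labels=4):
    """Split label-file text into (filename, [label, ...]) pairs, five tokens per entry."""
    tokens = text.split()
    group = 1 + num_labels
    if len(tokens) % group != 0:
        raise ValueError("token count %d is not a multiple of %d" % (len(tokens), group))
    entries = []
    for start in range(0, len(tokens), group):
        filename = tokens[start]
        labels = [float(t) for t in tokens[start + 1:start + group]]
        entries.append((filename, labels))
    return entries


print(parse_label_file("000001.5GSB.jpg 5 16 28 11\n"))
```

Checking the token count up front catches the most likely mistake, such as a file generated for a different string length.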

Modify /caffe/include/caffe/util/io.hpp.

Add the two function declarations enclosed in /////// ..... ///////, without deleting any existing code. The first two declarations below already exist in the file. Their label parameter is const int, so they can only handle a single label. The two new functions are modeled on them, replacing the const int label parameter with a std::vector<float> so that they accept multiple labels.

```cpp
bool ReadImageToDatum(const string& filename, const int label,
    const int height, const int width, const bool is_color,
    const std::string & encoding, Datum* datum);

bool ReadFileToDatum(const string& filename, const int label, Datum* datum);

//////////////////////////////////////////
bool ReadImageToDatum(const string& filename, std::vector<float> labels,
    const int height, const int width, const bool is_color,
    const std::string & encoding, Datum* datum);

bool ReadFileLabelsToDatum(const string& filename, std::vector<float> labels,
    Datum* datum);
///////////////////////////////////
```

Modify /caffe/src/caffe/util/io.cpp.

Add the following implementation below the existing ReadImageToDatum() implementation, at around line 143:

```cpp
//////////////////////////////////////////////////////////////////////////
bool ReadImageToDatum(const string& filename, std::vector<float> labels,
    const int height, const int width, const bool is_color,
    const std::string & encoding, Datum* datum) {
  // Print each file and its labels so the conversion can be checked
  std::cout << filename << " " << labels[0] << " " << labels[1] << " "
            << labels[2] << " " << labels[3] << std::endl;
  cv::Mat cv_img = ReadImageToCVMat(filename, height, width, is_color);
  if (cv_img.data) {
    if (encoding.size()) {
      if ( (cv_img.channels() == 3) == is_color && !height && !width &&
          matchExt(filename, encoding) )
        // return ReadFileToDatum(filename, label, datum);
        // ReadFileToDatum -> ReadFileLabelsToDatum
        return ReadFileLabelsToDatum(filename, labels, datum);
      std::vector<uchar> buf;
      cv::imencode("." + encoding, cv_img, buf);
      datum->set_data(std::string(reinterpret_cast<char*>(&buf[0]),
                                  buf.size()));
      // datum->set_label(label);
      datum->clear_labels();
      datum->add_labels(labels[0]);
      datum->add_labels(labels[1]);
      datum->add_labels(labels[2]);
      datum->add_labels(labels[3]);
      //////////////////
      datum->set_encoded(true);
      return true;
    }
    CVMatToDatum(cv_img, datum);
    // datum->set_label(label);
    datum->clear_labels();
    datum->add_labels(labels[0]);
    datum->add_labels(labels[1]);
    datum->add_labels(labels[2]);
    datum->add_labels(labels[3]);
    //////////////////
    return true;
  } else {
    return false;
  }
}
/////////////////////////////////////////////////////////////////////
```

Add the following implementation below the existing ReadFileToDatum() implementation, at around line 209:

```cpp
//////////////////////////////////////////////////////////////////////
bool ReadFileLabelsToDatum(const string& filename, std::vector<float> labels,
    Datum* datum) {
  std::streampos size;
  std::fstream file(filename.c_str(), std::ios::in | std::ios::binary | std::ios::ate);
  if (file.is_open()) {
    size = file.tellg();
    std::string buffer(size, ' ');
    file.seekg(0, std::ios::beg);
    file.read(&buffer[0], size);
    file.close();
    datum->set_data(buffer);
    // datum->set_label(label);
    datum->clear_labels();
    datum->add_labels(labels[0]);
    datum->add_labels(labels[1]);
    datum->add_labels(labels[2]);
    datum->add_labels(labels[3]);
    //////////////////
    datum->set_encoded(true);
    return true;
  } else {
    return false;
  }
}
///////////////////////////////////////////////////////
```

Modify /caffe/src/caffe/proto/caffe.proto.

Add one line to the message below: a repeated field named labels, so that a Datum can hold multiple labels.

```protobuf
message Datum {
  optional int32 channels = 1;
  optional int32 height = 2;
  optional int32 width = 3;
  // the actual image data, in bytes
  optional bytes data = 4;
  optional int32 label = 5;
  // Optionally, the datum could also hold float data.
  repeated float float_data = 6;
  // If true data contains an encoded image that need to be decoded
  optional bool encoded = 7 [default = false];
  //////////////////////////////////
  repeated float labels = 8;
  //////////////////////////////////
}
```

Modify /caffe/src/caffe/layers/data_layer.cpp.

At around line 49:

```cpp
// label
/*
if (this->output_labels_) {
  vector<int> label_shape(1, batch_size);
  top[1]->Reshape(label_shape);
  for (int i = 0; i < this->prefetch_.size(); ++i) {
    this->prefetch_[i]->label_.Reshape(label_shape);
  }
}
*/
/////////////////////////////////////////////////
// The label blob now holds 4 labels per image: shape (batch_size, 4, 1, 1)
if (this->output_labels_) {
  top[1]->Reshape(batch_size, 4, 1, 1);
  for (int i = 0; i < this->prefetch_.size(); ++i) {
    this->prefetch_[i]->label_.Reshape(batch_size, 4, 1, 1);
  }
}
//////////////////////////////////////////////////
```

At around line 128:

```cpp
// Copy label.
/*
if (this->output_labels_) {
  Dtype* top_label = batch->label_.mutable_cpu_data();
  top_label[item_id] = datum.label();
}
*/
///////////////////////////////////////////////
if (this->output_labels_) {
  Dtype* top_label = batch->label_.mutable_cpu_data();
  for (int i = 0; i < 4; i++) {
    top_label[item_id * 4 + i] = datum.labels(i);
  }
}
///////////////////////////////////////////////
```
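Together, the two data_layer.cpp changes give the label blob a shape of (batch_size, 4, 1, 1) and write the i-th label of item item_id to flat offset item_id * 4 + i. The small NumPy sketch below shows that this flat indexing is row-major (C-order) storage of an N x 4 label matrix. The function name fill_label_blob is hypothetical, and the sketch assumes 4 labels per image.

```python
import numpy as np


def fill_label_blob(datum_labels, num_labels=4):
    """Fill a flat buffer the way the modified load_batch does, then view it as (N, 4, 1, 1)."""
    batch_size = len(datum_labels)
    flat = np.zeros(batch_size * num_labels, dtype=np.float32)
    for item_id, labels in enumerate(datum_labels):
        for i in range(num_labels):
            flat[item_id * num_labels + i] = labels[i]
    return flat.reshape(batch_size, num_labels, 1, 1)


blob = fill_label_blob([[5, 16, 28, 11], [0, 1, 2, 3]])
print(blob.shape)         # (2, 4, 1, 1)
print(blob[:, :, 0, 0])   # one row of 4 labels per image
```

Because this layout matches a C-order (N, 4, 1, 1) array, slicing along axis 1 later recovers one label per character position.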

Once the changes are complete, rebuild the modified Caffe by running the following in the Caffe root directory:

```shell
make clean
make all -j8
```

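Converting the raw dataset to LMDB format

After rebuilding Caffe, the convert_imageset tool can convert the raw dataset to LMDB format.

Run the script create_train_val_lmdb.sh to perform the conversion.

Contents of create_train_val_lmdb.sh: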

```shell
echo "create train lmdb..."
/home/ys/caffe/build/tools/convert_imageset \
  --resize_height=227 \
  --resize_width=227 \
  --backend="lmdb" \
  --shuffle \
  /home/ys/caffe/models/multi-label-classification/data/train/ \
  /home/ys/caffe/models/multi-label-classification/data/train.txt \
  /home/ys/caffe/models/multi-label-classification/data/train_lmdb
echo "done"

echo "create val lmdb..."
/home/ys/caffe/build/tools/convert_imageset \
  --resize_height=227 \
  --resize_width=227 \
  --backend="lmdb" \
  --shuffle \
  /home/ys/caffe/models/multi-label-classification/data/val/ \
  /home/ys/caffe/models/multi-label-classification/data/val.txt \
  /home/ys/caffe/models/multi-label-classification/data/val_lmdb
echo "done"
```

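Adjust the file paths to match your own setup.

3. Modifying the AlexNet classification model for multi-label classification

Caffe provides the classic AlexNet model in /caffe/models/bvlc_alexnet/. Its train_val.prototxt structure is as follows:

After modification, the structure of train_val.prototxt is as follows:

The Data layer is unchanged. A Slice layer is added after it to split the multi-label blob read by the Data layer into four single labels: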

```
layer {
  name: "slicers"
  type: "Slice"
  bottom: "label"
  top: "label_1"
  top: "label_2"
  top: "label_3"
  top: "label_4"
  slice_param {
    axis: 1
    slice_point: 1
    slice_point: 2
    slice_point: 3
  }
}
```
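What the Slice layer does to the label blob can be mimicked with NumPy. This sketch assumes a batch of 2 images with the (N, 4, 1, 1) label shape set up in data_layer.cpp. Here np.split with split indices [1, 2, 3] along axis 1 plays the role of the three slice_point values.

```python
import numpy as np

# A fake label blob for 2 images: row 0 holds labels 0..3, row 1 holds labels 4..7
label = np.arange(8, dtype=np.float32).reshape(2, 4, 1, 1)

# Cut axis 1 before indices 1, 2 and 3, giving four (N, 1, 1, 1) blobs
label_1, label_2, label_3, label_4 = np.split(label, [1, 2, 3], axis=1)

print(label_3.shape)          # (2, 1, 1, 1)
print(label_3[:, 0, 0, 0])    # the third character's label for each image
```

Each of the four outputs carries exactly one class index per image, which is the label shape a SoftmaxWithLoss or Accuracy layer expects alongside an (N, 36) score blob.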

The layers from conv1 through the Dropout layer after fc6 stay the same. Everything from fc7 onward is replaced with the following:

```
layer {
  name: "fc7_1"
  type: "InnerProduct"
  bottom: "fc6"
  top: "fc7_1"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 4096
    weight_filler {
      type: "gaussian"
      std: 0.005
    }
    bias_filler {
      type: "constant"
      value: 0.1
    }
  }
}
layer {
  name: "relu7_1"
  type: "ReLU"
  bottom: "fc7_1"
  top: "fc7_1"
}
layer {
  name: "drop7_1"
  type: "Dropout"
  bottom: "fc7_1"
  top: "fc7_1"
  dropout_param {
    dropout_ratio: 0.5
  }
}
layer {
  name: "fc7_2"
  type: "InnerProduct"
  bottom: "fc6"
  top: "fc7_2"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 4096
    weight_filler {
      type: "gaussian"
      std: 0.005
    }
    bias_filler {
      type: "constant"
      value: 0.1
    }
  }
}
layer {
  name: "relu7_2"
  type: "ReLU"
  bottom: "fc7_2"
  top: "fc7_2"
}
layer {
  name: "drop7_2"
  type: "Dropout"
  bottom: "fc7_2"
  top: "fc7_2"
  dropout_param {
    dropout_ratio: 0.5
  }
}
layer {
  name: "fc7_3"
  type: "InnerProduct"
  bottom: "fc6"
  top: "fc7_3"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 4096
    weight_filler {
      type: "gaussian"
      std: 0.005
    }
    bias_filler {
      type: "constant"
      value: 0.1
    }
  }
}
layer {
  name: "relu7_3"
  type: "ReLU"
  bottom: "fc7_3"
  top: "fc7_3"
}
layer {
  name: "drop7_3"
  type: "Dropout"
  bottom: "fc7_3"
  top: "fc7_3"
  dropout_param {
    dropout_ratio: 0.5
  }
}
layer {
  name: "fc7_4"
  type: "InnerProduct"
  bottom: "fc6"
  top: "fc7_4"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 4096
    weight_filler {
      type: "gaussian"
      std: 0.005
    }
    bias_filler {
      type: "constant"
      value: 0.1
    }
  }
}
layer {
  name: "relu7_4"
  type: "ReLU"
  bottom: "fc7_4"
  top: "fc7_4"
}
layer {
  name: "drop7_4"
  type: "Dropout"
  bottom: "fc7_4"
  top: "fc7_4"
  dropout_param {
    dropout_ratio: 0.5
  }
}
layer {
  name: "fc8_1"
  type: "InnerProduct"
  bottom: "fc7_1"
  top: "fc8_1"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 36 #1000->36
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "fc8_2"
  type: "InnerProduct"
  bottom: "fc7_2"
  top: "fc8_2"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 36 #1000->36
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "fc8_3"
  type: "InnerProduct"
  bottom: "fc7_3"
  top: "fc8_3"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 36 #1000->36
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "fc8_4"
  type: "InnerProduct"
  bottom: "fc7_4"
  top: "fc8_4"
  param {
    lr_mult: 1
    decay_mult: 1
  }
  param {
    lr_mult: 2
    decay_mult: 0
  }
  inner_product_param {
    num_output: 36 #1000->36
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "accuracy_1"
  type: "Accuracy"
  bottom: "fc8_1"
  bottom: "label_1"
  top: "accuracy_1"
  include {
    phase: TEST
  }
}
layer {
  name: "accuracy_2"
  type: "Accuracy"
  bottom: "fc8_2"
  bottom: "label_2"
  top: "accuracy_2"
  include {
    phase: TEST
  }
}
layer {
  name: "accuracy_3"
  type: "Accuracy"
  bottom: "fc8_3"
  bottom: "label_3"
  top: "accuracy_3"
  include {
    phase: TEST
  }
}
layer {
  name: "accuracy_4"
  type: "Accuracy"
  bottom: "fc8_4"
  bottom: "label_4"
  top: "accuracy_4"
  include {
    phase: TEST
  }
}
layer {
  name: "loss_1"
  type: "SoftmaxWithLoss"
  bottom: "fc8_1"
  bottom: "label_1"
  top: "loss_1"
  loss_weight: 0.25
}
layer {
  name: "loss_2"
  type: "SoftmaxWithLoss"
  bottom: "fc8_2"
  bottom: "label_2"
  top: "loss_2"
  loss_weight: 0.25
}
layer {
  name: "loss_3"
  type: "SoftmaxWithLoss"
  bottom: "fc8_3"
  bottom: "label_3"
  top: "loss_3"
  loss_weight: 0.25
}
layer {
  name: "loss_4"
  type: "SoftmaxWithLoss"
  bottom: "fc8_4"
  bottom: "label_4"
  top: "loss_4"
  loss_weight: 0.25
}
```

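In other words, the single classification branch from fc7 onward has been replaced by four parallel branches, one per character position, and each branch computes its own loss and accuracy.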
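With loss_weight: 0.25 on each of the four SoftmaxWithLoss layers, the total training loss is the average of the four per-position cross-entropy losses. Below is a NumPy sketch of that objective under stated assumptions: each branch produces (N, 36) scores, and the per-branch loss is averaged over the batch. As far as we understand, that is what Caffe's default normalization amounts to for these N x 1 x 1 x 1 labels. The functions softmax_loss and total_loss are illustrative, not Caffe code.

```python
import numpy as np


def softmax_loss(logits, labels):
    """Mean softmax cross-entropy over the batch; logits: (N, C), labels: (N,) class indices."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract the max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def total_loss(branch_logits, label, weight=0.25):
    """Weighted sum of the per-position losses; label: (N, 4) integer class indices."""
    return sum(weight * softmax_loss(logits, label[:, k])
               for k, logits in enumerate(branch_logits))


# With all-zero scores every class gets probability 1/36, so each branch loss is log(36)
print(total_loss([np.zeros((2, 36))] * 4, np.zeros((2, 4), dtype=int)))
```

Weighting each branch by 1/4 keeps the overall loss on the same scale as a single 36-way classifier, so the AlexNet learning rate settings remain reasonable.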

solver.prototxt:

```
net: "train_val.prototxt"
test_iter: 100
test_interval: 500
base_lr: 0.01
lr_policy: "step"
gamma: 0.1
stepsize: 6000
display: 10
max_iter: 10000
momentum: 0.9
weight_decay: 0.0005
snapshot: 10000
snapshot_prefix: "multi-label-classification"
solver_mode: GPU
```
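With lr_policy: "step", Caffe computes the learning rate as base_lr × gamma^floor(iter / stepsize). The short sketch below plugs in the values from this solver. The function name step_lr is hypothetical.

```python
def step_lr(iteration, base_lr=0.01, gamma=0.1, stepsize=6000):
    """Learning rate under Caffe's "step" policy: base_lr * gamma ** floor(iter / stepsize)."""
    return base_lr * gamma ** (iteration // stepsize)


for it in (0, 5999, 6000, 9999):
    print(it, step_lr(it))
```

Because max_iter is 10000, the rate drops only once, from 0.01 to 0.001 at iteration 6000.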

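The model modifications are complete.

4. Training and testing the model

Training the model

The /multi-label-classification/ folder now contains the following:

Open a terminal in /multi-label-classification/ and run

```shell
/home/ys/caffe/build/tools/caffe train --solver solver.prototxt --gpu 0
```

to start training. The trained model files are saved in /multi-label-classification/.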

Testing the model

Open a terminal in /multi-label-classification/ and run

```shell
/home/ys/caffe/build/tools/caffe test \
  -model train_val.prototxt \
  -weights multi-label-classification_iter_10000.caffemodel \
  -iterations 100
```

to see how the trained model performs on the test set.
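caffe test reports accuracy_1 to accuracy_4 separately, one per character position. For recognizing whole strings, the more telling number is the fraction of images where all four characters are correct. This whole-string accuracy can never exceed the smallest per-position accuracy. The sketch below computes both from predicted and ground-truth label matrices. It assumes you have collected predictions yourself (for example with pycaffe), and the function name is hypothetical.

```python
import numpy as np


def per_char_and_string_accuracy(pred, gt):
    """pred, gt: (N, 4) integer class indices. Returns (per-position accuracy, whole-string accuracy)."""
    correct = (pred == gt)
    return correct.mean(axis=0), correct.all(axis=1).mean()


pred = np.array([[1, 2, 3, 4], [1, 2, 3, 5]])
gt = np.array([[1, 2, 3, 4], [1, 2, 3, 4]])
print(per_char_and_string_accuracy(pred, gt))
```

In this toy example every position except the last is always right, yet only half of the strings are fully correct.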

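Visualizing test results with pycaffe

Following this article, use Caffe's Python interface to test a single image.

There is now a test image in /multi-label-classification/data/test_images/:

The Python script pycaffe_test.py loads the trained Caffe model and predicts the characters in this image. It reads the output blobs prob_1 to prob_4, so the deploy.prototxt it loads (not shown in this post) must produce four blobs with those names, for example from Softmax layers on top of fc8_1 to fc8_4.

pycaffe_test.py: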

```python
# encoding: utf-8
from __future__ import print_function

import os
import sys
import time

import numpy as np

# Caffe root directory; change this to match your own setup
caffe_root = '/home/ys/caffe/'
# Add caffe/python to the module search path (this must happen before importing caffe)
sys.path.insert(0, caffe_root + 'python')
import caffe

caffe.set_device(0)
caffe.set_mode_gpu()
time_begin = time.time()
os.chdir(caffe_root)  # change the working directory

# Network definition
net_file = caffe_root + 'models/multi-label-classification/deploy.prototxt'
# Trained weights
caffe_model = caffe_root + 'models/multi-label-classification/multi-label-classification_iter_10000.caffemodel'
# Mean file
mean_file = caffe_root + 'python/caffe/imagenet/ilsvrc_2012_mean.npy'

# Build the network from the definition and the trained weights
net = caffe.Net(net_file, caffe_model, caffe.TEST)

# The transformer preprocesses input images to match the shape of the 'data' blob
transformer = caffe.io.Transformer({'data': net.blobs['data'].data.shape})
# caffe.io.load_image returns an RGB image in [h, w, c] layout with pixel values in [0, 1];
# Caffe expects [c, h, w] layout, BGR channel order and pixel values in [0, 255]
# Move the channel axis to the front
transformer.set_transpose('data', (2, 0, 1))
transformer.set_mean('data', np.load(mean_file).mean(1).mean(1))
# Scale pixel values to [0, 255]
transformer.set_raw_scale('data', 255)
# Swap RGB -> BGR
transformer.set_channel_swap('data', (2, 1, 0))

# Load a test image
image_file = caffe_root + 'models/multi-label-classification/data/test_images/000001.A86I.jpg'
im = caffe.io.load_image(image_file)
# Preprocess the image with the transformer and copy it into the input blob
net.blobs['data'].data[...] = transformer.preprocess('data', im)
# Run the forward pass
output = net.forward()

# Class probabilities for each of the four character positions
output_prob1 = output['prob_1'][0]
output_prob2 = output['prob_2'][0]
output_prob3 = output['prob_3'][0]
output_prob4 = output['prob_4'][0]

# The predicted character at each position is the class with the highest probability
chars = ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9",
         "A", "B", "C", "D", "E", "F", "G", "H", "I", "J", "K", "L", "M",
         "N", "O", "P", "Q", "R", "S", "T", "U", "V", "W", "X", "Y", "Z"]
print('test image: ', image_file)
print('the predicted result is:', chars[output_prob1.argmax()], ' ', chars[output_prob2.argmax()],
      ' ', chars[output_prob3.argmax()], ' ', chars[output_prob4.argmax()])
print('time used: ', round(time.time() - time_begin, 4), 's')
```
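The last step of the script (argmax per position, then index into the character list) can be factored into a small helper that works for any string length. This sketch assumes the output dictionary returned by net.forward() maps 'prob_1' … 'prob_n' to arrays of shape (batch, 36), as the script above reads them. decode_outputs is a hypothetical name, and the test feeds it fake one-hot probabilities instead of a real network.

```python
import numpy as np

CHARS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"  # same order as the chars list


def decode_outputs(output, num_chars=4, index=0):
    """Turn per-position probability blobs into the predicted string for one image in the batch."""
    result = []
    for k in range(1, num_chars + 1):
        probs = output["prob_%d" % k][index]
        result.append(CHARS[int(np.argmax(probs))])
    return "".join(result)
```

For the script above, print(decode_outputs(output)) would print the four predicted characters as one string instead of separated by spaces.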

Open a terminal in /multi-label-classification/ and run

```shell
python ./pycaffe_test.py
```

Output:

The code used in this post is available here.