Big Data - Zookeeper: Centralized Configuration and Coordination for the Modules of a Big Data Platform [Principles and Setup]


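I. Zookeeper Overview

1.1 Introduction to Zookeeper

- Distributed system: a distributed system is a group of many computers that act as a single whole. The whole presents one consistent face to the outside, and its members cooperate to serve each request. Its internal structure is visible to the member machines but hidden from outside callers. The computers inside can all communicate with one another, for example over RPC, REST, or web services. A single client request may pass through several computers between being sent and receiving its response.

- Zookeeper is an open-source, distributed storage middleware written in Java that provides coordination services for distributed applications.

- From a design-pattern point of view, Zookeeper is a distributed service-management framework built on the observer pattern. It stores and manages the data that the processes and modules of a distributed system all care about (for example the URL of the HDFS storage system, hdfs://hadoop101:9000) and accepts registrations from observers. When the state of that data changes, Zookeeper notifies the observers registered with it so that they can react, which makes a Master/Slave-style management model possible within a cluster.

- Because of this observer design, a client is allowed to set a watcher when it reads data. When the watched node triggers the specified event, the server notifies the client thread, and the client runs a callback method to perform the corresponding action.

- Zookeeper = file system + notification mechanism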

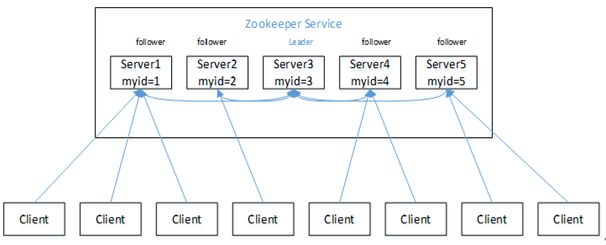

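- A Zookeeper cluster consists of one leader (Leader) and multiple followers (Follower).

- The cluster serves requests normally as long as more than half of its nodes are alive; otherwise it cannot serve. For this reason a Zookeeper cluster usually has an odd number of nodes.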
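The majority rule can be checked with a little arithmetic. The following is a minimal sketch, not Zookeeper code: the class `QuorumMath` and its method names are made up for illustration. It shows why a cluster with an even number of nodes tolerates no more failures than the odd-sized cluster one node smaller.

```java
// Illustrative sketch only: QuorumMath is a hypothetical helper, not a Zookeeper class.
class QuorumMath {
    // Smallest number of live nodes that is strictly more than half of the ensemble.
    static int quorumSize(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    // How many nodes may fail while a majority is still alive.
    static int toleratedFailures(int ensembleSize) {
        return ensembleSize - quorumSize(ensembleSize);
    }

    public static void main(String[] args) {
        for (int n = 3; n <= 6; n++) {
            System.out.println(n + " nodes: quorum=" + quorumSize(n)
                    + ", tolerated failures=" + toleratedFailures(n));
        }
    }
}
```

Under this arithmetic, 3 and 4 nodes both tolerate one failure, and 5 and 6 nodes both tolerate two, so the extra even node adds cost without adding fault tolerance.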

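1.2 Zookeeper Features

- Global data consistency: every Server keeps an identical copy of the data, so a Client sees consistent data whichever Server it connects to. Updates are applied in order, in batches, and the replicas are eventually consistent.

- Atomic updates: a data update either succeeds or fails as a whole.

- Single system view: a Client sees the same data regardless of which Zookeeper node it connects to.

- Reliability: the result of every operation on Zookeeper is persisted on the servers, and every server keeps an identical copy of the data.

- Sequential updates: update requests from the same Client are executed in the order in which they were sent.

- Timeliness: within a bounded time window, a Client can read the latest data.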


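- There is exactly one Leader, elected automatically when the cluster starts. The Leader initiates votes, decides their outcome, and updates the system state. During an election, only Followers whose data is in sync with the Leader are entitled to stand as candidates, and among the candidates the Server with the larger serverid has the advantage.

- A Follower accepts client requests and returns results to the client, and it votes during Leader elections.

- ZK's consensus design is Paxos-like (its protocol is ZAB, ZooKeeper Atomic Broadcast). The protocol requires a majority: as long as more than half of the nodes in the cluster are alive, the Zookeeper cluster can serve normally.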

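1.3 Zookeeper Data Structure

- The ZooKeeper data model closely resembles a Unix file system: as a whole it can be viewed as a tree, and each node is called a ZNode.

- The Zookeeper cluster maintains this tree itself. Each node in it is called a "znode". By default a znode can store up to 1 MB of data, and every znode is uniquely identified by its path.

- ZooKeeper stores and manages the data that every module in the system cares about (core configuration). Such data is not large, so 1 MB is more than enough.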
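To make the "tree of znodes identified by path" idea concrete, here is a small in-memory model. It is only a sketch under simplifying assumptions: `ZNodeTreeSketch` and its methods are hypothetical names, the tree is a sorted map keyed by full path, and the only rules enforced are the 1 MB data limit and "the parent must exist before a child is created".

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.TreeMap;

// Illustrative in-memory model of the znode tree; not Zookeeper's real implementation.
class ZNodeTreeSketch {
    static final int MAX_DATA_BYTES = 1024 * 1024; // the default 1 MB limit per znode

    // Each znode is keyed by its full path, which identifies it uniquely.
    private final Map<String, byte[]> nodes = new TreeMap<>();

    ZNodeTreeSketch() {
        nodes.put("/", new byte[0]); // the root always exists
    }

    // "/sanguo/shuguo" -> "/sanguo", "/sanguo" -> "/"
    static String parentOf(String path) {
        int idx = path.lastIndexOf('/');
        return idx == 0 ? "/" : path.substring(0, idx);
    }

    void create(String path, String data) {
        byte[] bytes = data.getBytes(StandardCharsets.UTF_8);
        if (bytes.length > MAX_DATA_BYTES) {
            throw new IllegalArgumentException("data exceeds 1 MB");
        }
        if (nodes.containsKey(path)) {
            throw new IllegalStateException("node already exists: " + path);
        }
        if (!nodes.containsKey(parentOf(path))) {
            throw new IllegalStateException("parent does not exist for " + path);
        }
        nodes.put(path, bytes);
    }

    // Returns the node's data, or null when the path does not exist.
    String get(String path) {
        byte[] bytes = nodes.get(path);
        return bytes == null ? null : new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        ZNodeTreeSketch tree = new ZNodeTreeSketch();
        tree.create("/sanguo", "jinlian");
        tree.create("/sanguo/shuguo", "liubei");
        System.out.println(tree.get("/sanguo/shuguo"));
    }
}
```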

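1.4 Zookeeper Use Cases

The services it provides include:

- Unified naming service

- Unified configuration management (push + pull)

- Unified cluster management

- Distributed message synchronization and coordination

- Dynamic server online/offline detection

- Soft load balancing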

1.4.1 Unified Naming Service

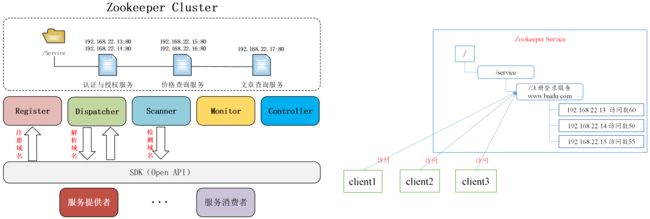

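In a distributed environment, applications and services often need uniform names so that they are easy to identify. For example, an IP address is hard to remember, while a domain name is easy.

1.4.2 Unified Configuration Management (Data Publish/Subscribe)

- Synchronizing configuration files is very common in distributed environments.

- Usually all nodes of a cluster are required to have the same configuration, as in a Kafka cluster.

- After a configuration file is modified, the change should reach every node quickly.

- Configuration management can be delegated to ZooKeeper. A centralized configuration center (push + pull) suits configuration that is shared by many machines and changes dynamically.

- The configuration can be written to a Znode on ZooKeeper.

- On startup, each application actively fetches the configuration from Zookeeper and registers a Watcher on it.

- A configuration administrator then changes the content of the configuration znode.

- Zookeeper pushes a change notification to every client server, triggering the Watcher callback process().

- The application, following its own logic, actively fetches the new configuration and adjusts its behavior.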
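The push + pull cycle can be sketched without a running cluster. The simulation below is an assumption-laden stand-in, not the Zookeeper client API: `ConfigNode` plays the role of the configuration znode, `App` plays the application, and watchers are modeled as one-shot callbacks, which is why the application registers a new one every time it reads.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative simulation of the push + pull flow; ConfigNode and App are hypothetical stand-ins.
class ConfigWatchSketch {
    // A single configuration znode whose watchers fire at most once.
    static class ConfigNode {
        private String data;
        private List<Runnable> watchers = new ArrayList<>();

        ConfigNode(String data) {
            this.data = data;
        }

        // Pull: read the value and register a watcher for the next change.
        String getData(Runnable watcher) {
            watchers.add(watcher);
            return data;
        }

        // Push: store the new value, then notify and discard the registered watchers.
        void setData(String newData) {
            data = newData;
            List<Runnable> toNotify = watchers;
            watchers = new ArrayList<>(); // a fired watcher is consumed
            for (Runnable w : toNotify) {
                w.run();
            }
        }
    }

    // An application that keeps its local copy of the configuration current.
    static class App {
        private final ConfigNode node;
        String currentConfig;
        int reloads = 0;

        App(ConfigNode node) {
            this.node = node;
            load(); // fetch the configuration at startup
        }

        private void load() {
            // Register the watcher again on every read because it only fires once.
            currentConfig = node.getData(this::onChange);
        }

        // Plays the role of the Watcher callback process().
        private void onChange() {
            reloads++;
            load();
        }
    }

    public static void main(String[] args) {
        ConfigNode node = new ConfigNode("batch.size=100");
        App app = new App(node);
        node.setData("batch.size=200");
        node.setData("batch.size=300");
        System.out.println(app.currentConfig + " after " + app.reloads + " reloads");
    }
}
```

If `load()` did not register a new watcher, the second `setData` call would go unnoticed, which is the most common mistake with one-shot watches.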

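1.4.3 Unified Cluster Management

- In a distributed environment it is necessary to know the state of every node in real time, so that adjustments can be made based on that state.

- ZooKeeper can monitor node state changes in real time.

- Server A can write its information to an ephemeral ZNode on ZooKeeper (say, ZNodeA).

- If server A goes down, the ephemeral node ZNodeA disappears from Zookeeper.

- By watching the ephemeral node ZNodeA, a listener learns of its state changes in real time, and in the same way can follow node changes across the whole cluster.

- How many machines are working?

- Collecting the running state of each machine

- Taking devices in the cluster online or offline

- Status reporting for distributed tasks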

…

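1.4.4 Dynamic Server Online/Offline Detection

1.4.5 Soft Load Balancing

- Zookeeper records the number of accesses handled by each server, and the server with the fewest accesses handles the next client request.

- Register handles domain-name registration: after a service starts, it registers its domain information through Register under the corresponding domain service in Zookeeper.

- Dispatcher handles domain-name resolution, which is where soft load balancing can be implemented.

- Scanner periodically checks service status and dynamically updates node address information.

- Monitor collects service information and monitors status.

- Controller provides a back-office Console with configuration management functions.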
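The "fewest accesses wins" rule from the first bullet can be sketched as follows. This is a hedged illustration: `LeastAccessBalancer` is a hypothetical class, the access counts live in a local map rather than in znodes, and ties are broken by server name only to keep the result deterministic.

```java
import java.util.Map;
import java.util.TreeMap;

// Illustrative sketch: in a real deployment the counts would be stored in Zookeeper znodes.
class LeastAccessBalancer {
    // Sorted by server name so that ties resolve to the alphabetically first server.
    private final Map<String, Integer> accessCounts = new TreeMap<>();

    // A server coming online registers itself with a count of zero.
    void register(String server) {
        accessCounts.putIfAbsent(server, 0);
    }

    // A server going offline is removed from the candidates.
    void unregister(String server) {
        accessCounts.remove(server);
    }

    // Pick the server with the fewest recorded accesses and count this request against it.
    String pick() {
        String best = null;
        for (Map.Entry<String, Integer> e : accessCounts.entrySet()) {
            if (best == null || e.getValue() < accessCounts.get(best)) {
                best = e.getKey();
            }
        }
        if (best == null) {
            throw new IllegalStateException("no servers registered");
        }
        accessCounts.merge(best, 1, Integer::sum);
        return best;
    }

    public static void main(String[] args) {
        LeastAccessBalancer lb = new LeastAccessBalancer();
        lb.register("hadoop101");
        lb.register("hadoop102");
        lb.register("hadoop103");
        for (int i = 0; i < 4; i++) {
            System.out.println("request " + i + " -> " + lb.pick());
        }
    }
}
```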

II. Installing Zookeeper

1. Standalone Installation

1.1 Prerequisites

- Install the JDK

- Copy the Zookeeper package to the Linux system

- Extract it to the target directory

[whx@hadoop102 software]$ tar -zxvf zookeeper-3.4.10.tar.gz -C /opt/module/

1.2 Configuration Changes

- In /opt/module/zookeeper-3.4.10/conf, rename zoo_sample.cfg to zoo.cfg:

[whx@hadoop102 conf]$ mv zoo_sample.cfg zoo.cfg

- Open zoo.cfg and change the dataDir path:

[whx@hadoop102 conf]$ vim zoo.cfg

Change: dataDir=/opt/module/zookeeper-3.4.10/datas

- Create the datas directory under /opt/module/zookeeper-3.4.10/:

[whx@hadoop102 zookeeper-3.4.10]$ mkdir datas

1.3 Configure the Zookeeper Environment Variables

Add the Zookeeper environment variables to the system profile /etc/profile:

JAVA_HOME=/opt/module/jdk1.8.0_121

HADOOP_HOME=/opt/module/hadoop-2.7.2

ZOOKEEPER_HOME=/opt/module/zookeeper-3.4.10

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin

export PATH JAVA_HOME HADOOP_HOME ZOOKEEPER_HOME

1.4 Operating Zookeeper

- Start Zookeeper

[whx@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh start

- Check that the process has started

[whx@hadoop102 zookeeper-3.4.10]$ jps

4020 Jps

4001 QuorumPeerMain

- Check the status

[whx@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: standalone

- Start the client

[whx@hadoop102 zookeeper-3.4.10]$ bin/zkCli.sh

- Quit the client

[zk: localhost:2181(CONNECTED) 0] quit

- Stop Zookeeper

[whx@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh stop

2.1 Distributed Installation and Deployment

2.1.1 Cluster Plan

Deploy Zookeeper on three nodes: hadoop101, hadoop102, and hadoop103.

2.1.2 Extract and Install

- Extract the Zookeeper package into /opt/module/

[whx@hadoop102 soft]$ tar -zxvf zookeeper-3.4.10.tar.gz -C /opt/module/

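2.1.3 Configure the Server ID

- Create the datas directory under /opt/module/zookeeper-3.4.10/

[whx@hadoop102 zookeeper-3.4.10]$ mkdir -p datas

- In /opt/module/zookeeper-3.4.10/datas, create a file named myid. Be sure to create it on Linux itself; a file created in Notepad++ can easily end up garbled.

[whx@hadoop102 datas]$ touch myid

- Edit myid and add the number that corresponds to this server: 102

[whx@hadoop102 datas]$ vi myid

102

2.1.4 Configure zoo.cfg

- In /opt/module/zookeeper-3.4.10/conf, rename zoo_sample.cfg to zoo.cfg

[whx@hadoop102 conf]$ mv zoo_sample.cfg zoo.cfg

- Open zoo.cfg

[whx@hadoop102 conf]$ vim zoo.cfg

- Change the data storage path

dataDir=/opt/module/zookeeper-3.4.10/datas

- Add the following configuration

#######################cluster##########################

server.101=hadoop101:2888:3888

server.102=hadoop102:2888:3888

server.103=hadoop103:2888:3888

Explanation of the parameters, which take the form server.A=B:C:D:

- A is a number that identifies the server. In cluster mode each server has a file named myid in its datas directory, and that file contains the value of A. On startup Zookeeper reads the file and compares its content with the entries in zoo.cfg to determine which server it is.

- B is the address of the server (an IP address or, as here, a host name);

- C is the port this server uses to exchange information with the Leader of the cluster;

- D is the election port: if the Leader goes down, the servers communicate with one another over this port to elect a new Leader.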
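The server.A=B:C:D format and the myid comparison can be illustrated with a small parser. This is a sketch under assumptions, not Zookeeper's own configuration parser: `ServerEntry` is a hypothetical class, and it only handles the plain three-part form used in this article.

```java
// Illustrative sketch: parses one "server.A=B:C:D" line; ServerEntry is a hypothetical class.
class ServerEntry {
    final int id;            // A: the server number, also stored in the myid file
    final String host;       // B: the server address
    final int quorumPort;    // C: port for exchanging information with the Leader
    final int electionPort;  // D: port used during leader election

    ServerEntry(int id, String host, int quorumPort, int electionPort) {
        this.id = id;
        this.host = host;
        this.quorumPort = quorumPort;
        this.electionPort = electionPort;
    }

    static ServerEntry parse(String line) {
        String[] kv = line.trim().split("=", 2);
        if (kv.length != 2 || !kv[0].startsWith("server.")) {
            throw new IllegalArgumentException("not a server entry: " + line);
        }
        int id = Integer.parseInt(kv[0].substring("server.".length()));
        String[] parts = kv[1].split(":");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected host:port:port in: " + line);
        }
        return new ServerEntry(id, parts[0],
                Integer.parseInt(parts[1]), Integer.parseInt(parts[2]));
    }

    // True when the content of a myid file names this entry.
    boolean matchesMyId(String myIdFileContent) {
        return Integer.parseInt(myIdFileContent.trim()) == id;
    }

    public static void main(String[] args) {
        ServerEntry e = ServerEntry.parse("server.102=hadoop102:2888:3888");
        System.out.println(e.id + " " + e.host + " " + e.quorumPort + " " + e.electionPort);
        System.out.println("myid 102 matches: " + e.matchesMyId("102\n"));
    }
}
```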

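2.1.5 Distribute the zookeeper-3.4.10 Directory to hadoop101 and hadoop103

Distribute the configured /opt/module/zookeeper-3.4.10 directory to the other machines, then change the content of the myid file to 101 on hadoop101 and to 103 on hadoop103.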

[whx@hadoop102 module]$ xsync.sh zookeeper-3.4.10/

2.1.6 Configure the Zookeeper Environment Variables

On hadoop102, add the Zookeeper environment variables to the system profile /etc/profile:

JAVA_HOME=/opt/module/jdk1.8.0_121

HADOOP_HOME=/opt/module/hadoop-2.7.2

ZOOKEEPER_HOME=/opt/module/zookeeper-3.4.10

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin

export PATH JAVA_HOME HADOOP_HOME ZOOKEEPER_HOME

Distribute /etc/profile from hadoop102 to hadoop101 and hadoop103.

2.1.7 Cluster Operations

- Start Zookeeper on each node

[whx@hadoop101 zookeeper-3.4.10]$ bin/zkServer.sh start

[whx@hadoop102 zookeeper-3.4.10]$ bin/zkServer.sh start

[whx@hadoop103 zookeeper-3.4.10]$ bin/zkServer.sh start

- Check the status

[whx@hadoop102 zookeeper-3.4.10]# bin/zkServer.sh status

JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

[whx@hadoop103 zookeeper-3.4.10]# bin/zkServer.sh status

JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: leader

[whx@hadoop104 zookeeper-3.4.5]# bin/zkServer.sh status

JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

- Start all nodes at once with the xcall.sh script

[whx@hadoop102 ~]$ xcall.sh /opt/module/zookeeper-3.4.10/bin/zkServer.sh start

要执行的命令是/opt/module/zookeeper-3.4.10/bin/zkServer.sh start

----------------------------hadoop101----------------------------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

----------------------------hadoop102----------------------------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

----------------------------hadoop103----------------------------------

ZooKeeper JMX enabled by default

Starting zookeeper ... Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

STARTED

[whx@hadoop102 ~]$

- Check the status of all nodes at once with the xcall.sh script

[whx@hadoop102 ~]$ xcall.sh /opt/module/zookeeper-3.4.10/bin/zkServer.sh status

要执行的命令是/opt/module/zookeeper-3.4.10/bin/zkServer.sh status

----------------------------hadoop101----------------------------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

----------------------------hadoop102----------------------------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: leader

----------------------------hadoop103----------------------------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

[whx@hadoop102 ~]$

III. Configuration Parameters

# The number of milliseconds of each tick (heartbeat interval)

tickTime=2000

# The number of ticks that the initial synchronization phase can take (initialization limit: 10 ticks; an error is raised if synchronization has not finished within 20 s)

initLimit=10

# The number of ticks that can pass between sending a request and getting an acknowledgement (each data sync is limited to 5 ticks)

syncLimit=5

# the directory where the snapshot is stored (snapshot directory).

# do not use /tmp for storage, /tmp here is just example sakes (do not use the tmp directory in real deployments).

#dataDir=/tmp/zookeeper

dataDir=/opt/module/zookeeper-3.4.10/datas

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

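The parameters in Zookeeper's configuration file zoo.cfg have the following meanings:

1. tickTime: heartbeat interval

tickTime = 2000: the heartbeat interval between Zookeeper servers and clients, in milliseconds

- The basic time unit used by Zookeeper: the interval at which heartbeats are exchanged between servers, or between a client and a server. One heartbeat is sent every tickTime milliseconds.

- It drives the heartbeat mechanism and also determines the minimum session timeout, which is twice the tick time (2 * tickTime).

2. initLimit: Leader-Follower (LF) initial connection limit

initLimit = 10: LF initial connection limit

- The maximum number of ticks (multiples of tickTime) tolerated while a follower (F) establishes its initial connection to the leader (L). It bounds the time a Zookeeper server in the cluster may take to connect to the Leader.

- It also serves as the initialization time when voting for a new leader.

- While starting up, a Follower synchronizes all the latest data from the Leader and then determines the state from which it can start serving.

- The Leader allows the Follower initLimit ticks (initLimit * tickTime) to finish this work.

3. syncLimit: Leader-Follower (LF) sync limit

syncLimit = 5: LF sync limit

- The maximum response time between the Leader and a Follower, in ticks. If a response takes longer than syncLimit * tickTime, the Leader considers the Follower dead and removes it from the server list.

- At runtime the Leader communicates with every machine in the ZK cluster, for example through heartbeats, to detect whether each machine is alive.

- If the Leader sends a heartbeat and has still not received a response from a Follower after syncLimit ticks, it considers that Follower offline.

4. dataDir: data file directory and persistence path

dataDir: data file directory and persistence path

- The location where snapshots of the in-memory database are stored. Unless configured otherwise, the transaction log of updates is also written to this directory.

5. clientPort: client connection port

clientPort = 2181: client connection port

- The port on which the server listens for client connections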
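The tick-based limits are easier to reason about once converted to wall-clock time. A minimal sketch (the class `ZooTimings` and its method names are hypothetical) that applies the formulas above to the values in this zoo.cfg:

```java
// Illustrative sketch: converts the tick-based settings of zoo.cfg into milliseconds.
class ZooTimings {
    // Time a Follower is given to connect to and sync with the Leader at startup.
    static long initLimitMillis(int tickTime, int initLimit) {
        return (long) tickTime * initLimit;
    }

    // Time after which an unresponsive Follower is considered dead.
    static long syncLimitMillis(int tickTime, int syncLimit) {
        return (long) tickTime * syncLimit;
    }

    // Minimum session timeout: twice the tick time.
    static long minSessionTimeoutMillis(int tickTime) {
        return 2L * tickTime;
    }

    public static void main(String[] args) {
        int tickTime = 2000, initLimit = 10, syncLimit = 5;
        System.out.println("initLimit: " + initLimitMillis(tickTime, initLimit) + " ms");
        System.out.println("syncLimit: " + syncLimitMillis(tickTime, syncLimit) + " ms");
        System.out.println("min session timeout: " + minSessionTimeoutMillis(tickTime) + " ms");
    }
}
```

With tickTime=2000, initLimit=10 and syncLimit=5 this gives 20 s, 10 s and 4 s respectively.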

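IV. Zookeeper Internals

1. Election Mechanism

- Majority rule: the cluster is available as long as more than half of its machines are alive. This is why Zookeeper is best installed on an odd number of servers.

- The configuration file does not designate a Master and Slaves. At runtime, however, one node acts as Leader and the others as Followers; the Leader is produced on the fly by an internal election.

- A simple example illustrates the whole election. Suppose a Zookeeper cluster of five servers with ids 1 to 5, all freshly started, so none has historical data and all hold the same amount of data. Suppose the servers start one after another in id order. The following happens.

- Server 1 starts. It is the only server running and the messages it sends get no response, so its election state stays LOOKING.

- Server 2 starts. It communicates with server 1 and the two exchange their election results. Neither has historical data, so server 2, with the larger id, wins the comparison. However, it has not yet been chosen by more than half of the servers (more than half means 3 in this example), so servers 1 and 2 both remain in the LOOKING state.

- Server 3 starts. By the same reasoning, server 3 comes out on top among servers 1, 2 and 3. The difference is that three servers have now voted for it, so it becomes the Leader of this election.

- Server 4 starts. By the reasoning above, server 4 would be the largest among servers 1, 2, 3 and 4, but more than half of the servers have already elected server 3, so it has to accept the role of follower.

- Server 5 starts and, like server 4, becomes a follower.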
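The walkthrough above can be replayed in code. The sketch below is a deliberately simplified model, not Zookeeper's real election algorithm: it assumes no server has history, that every running server votes for the largest id it knows of, and that a leader is fixed as soon as a candidate holds a majority. The class name `StartupElectionSketch` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of the startup election described above; not Zookeeper's real election code.
class StartupElectionSketch {
    // Servers start in the given order; returns the elected leader's id, or -1 if no quorum formed.
    static int elect(int[] startOrder, int ensembleSize) {
        int quorum = ensembleSize / 2 + 1;
        List<Integer> started = new ArrayList<>();
        int leader = -1;
        for (int id : startOrder) {
            started.add(id);
            if (leader != -1) {
                // A leader already exists, so a late starter simply follows it.
                System.out.println("server " + id + " starts: FOLLOWING " + leader);
                continue;
            }
            // No server has history, so every running server votes for the largest id it knows of.
            int candidate = 0;
            for (int s : started) {
                candidate = Math.max(candidate, s);
            }
            int votes = started.size();
            if (votes >= quorum) {
                leader = candidate;
                System.out.println("server " + id + " starts: " + votes
                        + " votes for " + candidate + ", leader elected");
            } else {
                System.out.println("server " + id + " starts: " + votes
                        + " votes for " + candidate + ", still LOOKING");
            }
        }
        return leader;
    }

    public static void main(String[] args) {
        System.out.println("leader = " + elect(new int[] {1, 2, 3, 4, 5}, 5));
    }
}
```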

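2. Node Types

2.1 A Znode is one of two kinds:

- Ephemeral: after the client disconnects from the server, the nodes it created are deleted automatically

- Persistent: after the client disconnects from the server, the nodes it created are not deleted

2.2 Znodes come in four forms of directory node (the default is persistent)

- When a znode is created with the sequential flag, a value is appended to its name. The sequence number is a monotonically increasing counter maintained by the parent node.

- Note: in a distributed system the sequence number can be used to impose a global order on all events, so clients can infer the order of events from it.

- Persistent directory node (PERSISTENT): the node still exists after the client disconnects from zookeeper

- Persistent sequential directory node (PERSISTENT_SEQUENTIAL): the node still exists after the client disconnects from zookeeper, and Zookeeper appends a sequence number to its name

- Ephemeral directory node (EPHEMERAL): the node is deleted after the client disconnects from zookeeper

- Ephemeral sequential directory node (EPHEMERAL_SEQUENTIAL): the node is deleted after the client disconnects from zookeeper, and Zookeeper appends a sequence number to its name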
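How a sequential name is formed can be sketched in a few lines. The example is a simplification with hypothetical names (`SequentialNameSketch`, `create`): it keeps one counter per parent path, advances it for every child created under that parent, and appends it as a 10-digit zero-padded suffix, the format that zkCli prints for sequential nodes.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative sketch of sequential znode naming; the per-parent counter is a simplification.
class SequentialNameSketch {
    // One counter per parent path, mirroring "maintained by the parent node".
    private final Map<String, Integer> counters = new HashMap<>();

    // Returns the full path of the new child; sequential children get a 10-digit suffix.
    String create(String parentPath, String name, boolean sequential) {
        // Every new child advances the parent's counter, whether or not it uses the number.
        int seq = counters.merge(parentPath, 1, Integer::sum) - 1;
        String childName = sequential ? name + String.format("%010d", seq) : name;
        return parentPath + "/" + childName;
    }

    public static void main(String[] args) {
        SequentialNameSketch zk = new SequentialNameSketch();
        System.out.println(zk.create("/sanguo/weiguo", "xiaoqiao", true));
        System.out.println(zk.create("/sanguo/weiguo", "daqiao", true));
        System.out.println(zk.create("/sanguo/weiguo", "diaocan", true));
    }
}
```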

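3. The Stat Structure

- czxid - the zxid of the transaction that created the node

Every change to the ZooKeeper state receives a stamp in the form of a zxid, that is, a ZooKeeper transaction ID.

The transaction IDs define the total order of all changes in ZooKeeper. Every change has a unique zxid, and if zxid1 is smaller than zxid2, then zxid1 happened before zxid2.

- ctime - the time the znode was created, in milliseconds (since 1970)

- mzxid - the zxid of the transaction that last updated the znode

- mtime - the time the znode was last modified, in milliseconds (since 1970)

- pZxid - the zxid of the last update to the znode's children

- cversion - the child version number: the number of changes to the znode's children

- dataVersion - the data version number of the znode

- aclVersion - the version number of the znode's access control list

- ephemeralOwner - for an ephemeral node, the session id of the znode's owner; 0 if the node is not ephemeral

- dataLength - the length of the znode's data

- numChildren - the number of children of the znode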
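The ordering property of zxids can be explored with plain 64-bit arithmetic. The article does not describe the internal layout of a zxid; the sketch below assumes the commonly documented one (high 32 bits are the leader epoch, low 32 bits are a counter within that epoch), and `ZxidSketch` is a hypothetical helper class.

```java
// Illustrative sketch: assumes a zxid is 64 bits, high 32 = epoch, low 32 = counter.
class ZxidSketch {
    // The leader epoch during which the transaction was issued.
    static long epoch(long zxid) {
        return zxid >>> 32;
    }

    // The position of the transaction within its epoch.
    static long counter(long zxid) {
        return zxid & 0xFFFFFFFFL;
    }

    // A smaller zxid means the change happened earlier.
    static boolean happenedBefore(long zxid1, long zxid2) {
        return zxid1 < zxid2;
    }

    public static void main(String[] args) {
        long cZxid = 0x100000003L; // values taken from the stat output of /sanguo
        long mZxid = 0x100000011L;
        System.out.println("cZxid: epoch=" + epoch(cZxid) + " counter=" + counter(cZxid));
        System.out.println("mZxid: epoch=" + epoch(mZxid) + " counter=" + counter(mZxid));
        System.out.println("created before modified: " + happenedBefore(cZxid, mZxid));
    }
}
```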

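4. How Watchers Work

- First there must be a main() thread.

- Creating the Zookeeper client in the main thread starts two threads: one handles network communication (connect) and the other handles listening (listener).

- The registered watch events are sent to Zookeeper through the connect thread.

- Zookeeper adds the registered watch events to its list of registered watchers.

- When Zookeeper detects a change in data or in a path, it sends a message to the listener thread.

- The listener thread internally calls the process() method.

- Common watches

- Watch for changes to a node's data: get path [watch]

- Watch for children being added or removed: ls path [watch]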

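5. Write Flow

- A client can connect to any zkserver instance and send it a write request. For example, the Client sends a write request to Server1 of the ZooKeeper cluster.

- If Server1 is not the Leader, it forwards the request to the Leader, since exactly one of the ZooKeeper Servers is the Leader. The Leader broadcasts the write request to all Servers, such as Server1 and Server2, and each Server notifies the Leader once it has written the data successfully.

- When the Leader has heard from more than half of the Servers that the data was written, the write is considered successful. With three nodes, for example, the write counts as successful once two nodes have written the data. The Leader then tells Server1 that the write succeeded.

- Server1 in turn notifies the Client that the data was written; at this point the whole write operation is considered successful.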
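The commit decision in the third step reduces to counting acknowledgements. A minimal sketch, with the hypothetical class `WriteQuorumSketch`, assuming one boolean per server (the Leader included) that records whether the server has reported a successful write:

```java
// Illustrative sketch of the "more than half have written" check made by the Leader.
class WriteQuorumSketch {
    // acks[i] is true once server i (Leader included) has reported a successful write.
    static boolean isCommitted(boolean[] acks) {
        int count = 0;
        for (boolean ack : acks) {
            if (ack) {
                count++;
            }
        }
        // Strictly more than half of all servers must have acknowledged.
        return count > acks.length / 2;
    }

    public static void main(String[] args) {
        System.out.println(isCommitted(new boolean[] {true, true, false}));  // two of three
        System.out.println(isCommitted(new boolean[] {true, false, false})); // one of three
    }
}
```

The slow or failed third server does not block the write; it catches up with the Leader later.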

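V. Client Command-Line Operations

| Command syntax | Description |
|---|---|
| help | Show all available commands |
| ls path [watch] | List the children of the given znode |
| ls2 path [watch] | Show the node's children together with its stat data, such as update counts |
| create | Create a node; -s adds a sequence number, -e makes it ephemeral (it disappears on restart or timeout) |
| get path [watch] | Get the value of a node |
| set | Set the value of a node |
| stat | Show the status of a node |
| delete | Delete a node |
| rmr | Delete a node recursively |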

1. Start the Client

[atguigu@hadoop103 zookeeper-3.4.10]$ bin/zkCli.sh

2. Show All Commands

[zk: localhost:2181(CONNECTED) 0] help

ZooKeeper -server host:port cmd args

stat path [watch]

set path data [version]

ls path [watch]

delquota [-n|-b] path

ls2 path [watch]

setAcl path acl

setquota -n|-b val path

history

redo cmdno

printwatches on|off

delete path [version]

sync path

listquota path

rmr path

get path [watch]

create [-s] [-e] path data acl

addauth scheme auth

quit

getAcl path

close

connect host:port

[zk: localhost:2181(CONNECTED) 1] quit

Quitting...

2021-01-29 06:34:49,403 [myid:] - INFO [main:ZooKeeper@684] - Session: 0x1774b1ab1180001 closed

2021-01-29 06:34:49,404 [myid:] - INFO [main-EventThread:ClientCnxn$EventThread@519] - EventThread shut down for session: 0x1774b1ab1180001

[whx@hadoop101 zookeeper-3.4.10]$

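The commands that can set a watcher (watch) are:

- stat path [watch]

- ls path [watch]: watches the children of the given path; adding or deleting a child node triggers an event

- ls2 path [watch]

- get path [watch]: watches the data of the specified node for changes

Note: a watcher is one-shot. Once set, it is valid for a single notification only.

3. -server host:port cmd args

Runs the command and then exits, without entering the interactive ZooKeeper client.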

[whx@hadoop101 zookeeper-3.4.10]$ bin/zkCli.sh -server hadoop101:2181 ls /

Connecting to hadoop101:2181

2021-01-29 11:19:17,742 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.10-39d3a4f269333c922ed3db283be479f9deacaa0f, built on 03/23/2017 10:13 GMT

2021-01-29 11:19:17,744 [myid:] - INFO [main:Environment@100] - Client environment:host.name=hadoop101

2021-01-29 11:19:17,744 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_121

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/opt/module/jdk1.8.0_121/jre

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/opt/module/zookeeper-3.4.10/bin/../build/classes:/opt/module/zookeeper-3.4.10/bin/../build/lib/*.jar:/opt/module/zookeeper-3.4.10/bin/../lib/slf4j-log4j12-1.6.1.jar:/opt/module/zookeeper-3.4.10/bin/../lib/slf4j-api-1.6.1.jar:/opt/module/zookeeper-3.4.10/bin/../lib/netty-3.10.5.Final.jar:/opt/module/zookeeper-3.4.10/bin/../lib/log4j-1.2.16.jar:/opt/module/zookeeper-3.4.10/bin/../lib/jline-0.9.94.jar:/opt/module/zookeeper-3.4.10/bin/../zookeeper-3.4.10.jar:/opt/module/zookeeper-3.4.10/bin/../src/java/lib/*.jar:/opt/module/zookeeper-3.4.10/bin/../conf:

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-642.el6.x86_64

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:user.name=whx

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/home/whx

2021-01-29 11:19:17,746 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/opt/module/zookeeper-3.4.10

2021-01-29 11:19:17,747 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=hadoop101:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@506c589e

2021-01-29 11:19:17,763 [myid:] - INFO [main-SendThread(hadoop101:2181):ClientCnxn$SendThread@1032] - Opening socket connection to server hadoop101/192.168.1.101:2181. Will not attempt to authenticate using SASL (unknown error)

2021-01-29 11:19:17,816 [myid:] - INFO [main-SendThread(hadoop101:2181):ClientCnxn$SendThread@876] - Socket connection established to hadoop101/192.168.1.101:2181, initiating session

2021-01-29 11:19:17,823 [myid:] - INFO [main-SendThread(hadoop101:2181):ClientCnxn$SendThread@1299] - Session establishment complete on server hadoop101/192.168.1.101:2181, sessionid = 0x1774b393e190001, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zookeeper]

[whx@hadoop101 zookeeper-3.4.10]$

4. Viewing

4.1 List the Contents of a znode

- ls path [watch]: lists the children of a node

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

4.2 View Node Status

- stat path [watch]: queries the status of a node

[zk: localhost:2181(CONNECTED) 17] stat /sanguo

cZxid = 0x100000003

ctime = Wed Aug 29 00:03:23 CST 2018

mZxid = 0x100000011

mtime = Wed Aug 29 00:21:23 CST 2018

pZxid = 0x100000014

cversion = 9

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 4

numChildren = 1

4.3 View Detailed Data for a Node

- ls2 path [watch]: combines stat and ls

[zk: localhost:2181(CONNECTED) 1] ls2 /

[zookeeper]

cZxid = 0x0

ctime = Thu Jan 01 08:00:00 CST 1970

mZxid = 0x0

mtime = Thu Jan 01 08:00:00 CST 1970

pZxid = 0x0

cversion = -1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 1

4.4 Get the Value of a Node

- get path [watch]

[zk: localhost:2181(CONNECTED) 5] get /sanguo

jinlian

cZxid = 0x100000003

ctime = Wed Aug 29 00:03:23 CST 2018

mZxid = 0x100000003

mtime = Wed Aug 29 00:03:23 CST 2018

pZxid = 0x100000004

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 7

numChildren = 1

[zk: localhost:2181(CONNECTED) 6]

[zk: localhost:2181(CONNECTED) 6] get /sanguo/shuguo

liubei

cZxid = 0x100000004

ctime = Wed Aug 29 00:04:35 CST 2018

mZxid = 0x100000004

mtime = Wed Aug 29 00:04:35 CST 2018

pZxid = 0x100000004

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 6

numChildren = 0

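4.5 Status Fields

- cZxid = 0x0

- c: stands for create

- Z: stands for Zookeeper

- x: stands for transaction (a zxid is a ZooKeeper transaction ID)

- id: the ID of the transaction that created this node

- ctime = Thu Jan 01 08:00:00 CST 1970

- node creation time

- mZxid = 0x6

- the transaction ID of the last modification

- mtime = Fri Jan 29 07:09:36 CST 2021

- last modification time

- pZxid = 0x0

- the zxid of the most recent change to this node's children

- cversion = -1

- dataVersion = 1

- aclVersion = 0

- ephemeralOwner = 0x0 means a persistent node rather than an ephemeral one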

- dataLength = 5

- numChildren = 1

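5. Create Nodes

create [-s] [-e] path data acl: creates a node together with its content

- [-s]: a node with a sequence number

- [-e]: an ephemeral node

- Without [-s] and [-e], a persistent node without a sequence number is created

- Combining [-s] and [-e] yields four different types of node

Create two ordinary nodes: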

[zk: localhost:2181(CONNECTED) 3] create /sanguo "jinlian"

Created /sanguo

[zk: localhost:2181(CONNECTED) 4] create /sanguo/shuguo "liubei"

Created /sanguo/shuguo

6. Create an Ephemeral Node

[zk: localhost:2181(CONNECTED) 7] create -e /sanguo/wuguo "zhouyu"

Created /sanguo/wuguo

- The node is visible from the current client

[zk: localhost:2181(CONNECTED) 3] ls /sanguo

[wuguo, shuguo]

- Quit the current client and then start the client again

[zk: localhost:2181(CONNECTED) 12] quit

[atguigu@hadoop104 zookeeper-3.4.10]$ bin/zkCli.sh

- List /sanguo again: the ephemeral node has been deleted

[zk: localhost:2181(CONNECTED) 0] ls /sanguo

[shuguo]

7. Create Sequential Nodes

- First create an ordinary parent node /sanguo/weiguo

[zk: localhost:2181(CONNECTED) 1] create /sanguo/weiguo "caocao"

Created /sanguo/weiguo

- Create nodes with sequence numbers

[zk: localhost:2181(CONNECTED) 2] create -s /sanguo/weiguo/xiaoqiao "jinlian"

Created /sanguo/weiguo/xiaoqiao0000000000

[zk: localhost:2181(CONNECTED) 3] create -s /sanguo/weiguo/daqiao "jinlian"

Created /sanguo/weiguo/daqiao0000000001

[zk: localhost:2181(CONNECTED) 4] create -s /sanguo/weiguo/diaocan "jinlian"

Created /sanguo/weiguo/diaocan0000000002

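- If there are no sequential nodes yet, numbering starts at 0 and increases by one each time. If the parent node already has 2 children, numbering starts from 2, and so on.

8. Modify a Node's Value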

[zk: localhost:2181(CONNECTED) 6] set /sanguo/weiguo "simayi"

9. Watch a Node's Value for Changes

- On host hadoop101, register a watch for data changes on the /sanguo node

[zk: localhost:2181(CONNECTED) 8] get /sanguo watch

- On host hadoop103, modify the data of the /sanguo node

[zk: localhost:2181(CONNECTED) 1] set /sanguo "xisi"

- Observe that host hadoop101 receives the data-change notification

WATCHER::

WatchedEvent state:SyncConnected type:NodeDataChanged path:/sanguo

10. Watch a Node's Children for Changes (Path Changes)

- On host hadoop101, register a watch for changes to the children of the /sanguo node

[zk: localhost:2181(CONNECTED) 1] ls /sanguo watch

[aa0000000001, server101]

- On host hadoop103, create a child node under /sanguo

[zk: localhost:2181(CONNECTED) 2] create /sanguo/jin "simayi"

Created /sanguo/jin

- Observe that host hadoop101 receives the child-change notification

WATCHER::

WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/sanguo

11. Delete Nodes

11.1 Delete a Single Node

- delete path [version]: deletes a single node

[zk: localhost:2181(CONNECTED) 4] delete /sanguo/jin

11.2 Delete Recursively: Remove a Node and Its Children

- rmr path: deletes a node together with all of its children

[zk: localhost:2181(CONNECTED) 21] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 22] create /whx helloworld

Created /whx

[zk: localhost:2181(CONNECTED) 23] ls /

[zookeeper, whx]

[zk: localhost:2181(CONNECTED) 24] create /whx/hadoop 123

Created /whx/hadoop

[zk: localhost:2181(CONNECTED) 25] ls /

[zookeeper, whx]

[zk: localhost:2181(CONNECTED) 26] delete /whx

Node not empty: /whx

[zk: localhost:2181(CONNECTED) 27] rmr /whx

[zk: localhost:2181(CONNECTED) 28] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 29]

12. Update

set path data [version]

- Modifies the value of a node

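VI. Four-Letter Words

- zookeeper supports a set of four-letter commands (The Four Letter Words) for interacting with it. Most of them are queries that report the current state of the zookeeper service and related information. They can be submitted to zookeeper from a client machine through telnet or nc (netcat).

- The stat, srvr, and cons commands are similar: "stat" gives server statistics plus general information about client connections; "srvr" gives only the server statistics; "cons" gives more detailed information about client connections.

- Usage: in a shell, run: echo conf | nc hadoop101 2181

- Before using the four-letter words, install nc (CentOS) or netcat (Ubuntu):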

[whx@hadoop101 bin]$ echo conf | nc hadoop101 2181

clientPort=2181

dataDir=/opt/module/zookeeper-3.4.10/datas/version-2

dataLogDir=/opt/module/zookeeper-3.4.10/datas/version-2

tickTime=2000

maxClientCnxns=60

minSessionTimeout=4000

maxSessionTimeout=40000

serverId=101

initLimit=10

syncLimit=5

electionAlg=3

electionPort=3888

quorumPort=2888

peerType=0

[whx@hadoop101 bin]$

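| Command | Example | Description |
|---|---|---|
| conf | echo conf \| nc hadoop101 2181 | (New in 3.3.0) Prints details of the serving configuration, such as ports, zk data and log directories, maximum connections, session timeouts, and serverId. |
| cons | echo cons \| nc hadoop101 2181 | (New in 3.3.0) Lists full connection/session details for all clients connected to this server, including the number of packets received/sent, session id, operation latencies, and the last operation performed. |
| crst | echo crst \| nc hadoop101 2181 | (New in 3.3.0) Resets the statistics of all connections/sessions on this server. |
| dump | echo dump \| nc hadoop101 2181 | Lists the outstanding sessions and ephemeral nodes (only works on the leader). |
| envi | echo envi \| nc hadoop101 2181 | Prints details about the server environment (as opposed to the conf command), such as host.name, java.version, java.home, and user.dir=/data/zookeeper-3.4.6/bin. |
| ruok | echo ruok \| nc hadoop101 2181 | Tests whether the server is running correctly. Returns "imok" if it is; otherwise returns nothing. |
| srst | echo srst \| nc hadoop101 2181 | Resets the server statistics. |
| srvr | echo srvr \| nc hadoop101 2181 | (New in 3.3.0) Prints full details for the server: zk version, packets received/sent, connection count, mode (leader/follower), and node count. |
| stat | echo stat \| nc hadoop101 2181 | Prints server details: packets received/sent, connection count, mode (leader/follower), node count, latency, and the list of all clients. |
| wchs | echo wchs \| nc hadoop101 2181 | (New in 3.3.0) Lists brief information on the server's watches: total connections, total watched nodes, and total watches. |
| wchc | echo wchc \| nc hadoop101 2181 | (New in 3.3.0) Lists all watched nodes grouped by session; the output is a list of sessions with their watched nodes. With a large number of watches this is expensive and can hurt performance, so use it with care. |
| wchp | echo wchp \| nc hadoop101 2181 | (New in 3.3.0) Lists the session ids of all watches grouped by path; the output is a list of paths with their associated sessions. With a large number of watches this is expensive and can hurt performance, so use it with care. |
| mntr | echo mntr \| nc hadoop101 2181 | (New in 3.4.0) Lists the health of the cluster, including packets received/sent, operation latency, current server mode (leader/follower), node count, watch count, and ephemeral node count. |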
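The same exchange that `echo ruok | nc hadoop101 2181` performs can be done from Java with a plain socket: write the four letters, close the sending side, and read until the server closes the connection. This is a sketch under assumptions: the class name `FourLetterWordClient`, the 3-second timeouts, and the default host hadoop101 are illustrative choices, not part of Zookeeper.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Illustrative sketch: sends one four-letter word over TCP, the way "echo cmd | nc host port" does.
class FourLetterWordClient {
    static String send(String host, int port, String command) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 3000); // 3 s connect timeout (arbitrary)
            socket.setSoTimeout(3000);                                // 3 s read timeout (arbitrary)

            OutputStream out = socket.getOutputStream();
            out.write(command.getBytes(StandardCharsets.US_ASCII));
            out.flush();
            socket.shutdownOutput(); // like nc: stop sending before reading the reply

            // The server answers and then closes the connection, so read until end of stream.
            InputStream in = socket.getInputStream();
            ByteArrayOutputStream reply = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) {
                reply.write(chunk, 0, n);
            }
            return new String(reply.toByteArray(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        String host = args.length > 0 ? args[0] : "hadoop101";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 2181;
        System.out.println(send(host, port, "ruok"));
    }
}
```

Against a healthy server, `send(host, 2181, "ruok")` is expected to return `imok`, matching the table above.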

conf:

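clientPort: client port number

dataDir: data file directory

dataLogDir: log file directory

tickTime: basic time unit (tick interval)

maxClientCnxns: maximum number of connections

minSessionTimeout: minimum session timeout

maxSessionTimeout: maximum session timeout

serverId: server id

initLimit: initialization limit, in ticks

syncLimit: Leader-Follower sync limit, in ticks

electionAlg: election algorithm, default 3

electionPort: election port

quorumPort: quorum port

peerType: not confirmed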

cons:

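ip=IP address

port=port

queued=number of queued requests

received=packets received

sent=packets sent

sid=session id

lop=last operation

est=connection established timestamp

to=timeout

lcxid=last id (exact id not confirmed)

lzxid=last zxid (state-change id)

lresp=last response timestamp

llat=last/latest latency

minlat=minimum latency

maxlat=maximum latency

avglat=average latency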

crst:

Resets the statistics of all connections

dump:

session id : znode path (one-to-many; the sessions waiting in the queue and their ephemeral nodes)

envi:

zookeeper.version=version

host.name=host information

java.version=Java version

java.vendor=vendor

java.home=JDK directory

java.class.path=classpath

java.library.path=lib path

java.io.tmpdir=temp directory

java.compiler=

os.name=Linux

os.arch=amd64

os.version=2.6.32-358.el6.x86_64

user.name=hhz

user.home=/home/hhz

user.dir=/export/servers/zookeeper-3.4.6

ruok:

Checks whether the server is healthy

imok=healthy

srst:

Resets the server statistics

srvr:

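Zookeeper version: version

Latency min/avg/max: latency

Received: packets received

Sent: packets sent

Connections: number of connections

Outstanding: number of outstanding (queued) requests

Zxid: transaction id

Mode: leader/follower

Node count: number of nodes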

stat:

Zookeeper version: 3.4.6-1569965, built on 02/20/2014 09:09 GMT

Clients:

/192.168.147.102:56168[1](queued=0,recved=41,sent=41)

/192.168.144.102:34378[1](queued=0,recved=54,sent=54)

/192.168.162.16:43108[1](queued=0,recved=40,sent=40)

/192.168.144.107:39948[1](queued=0,recved=1421,sent=1421)

/192.168.162.16:43112[1](queued=0,recved=54,sent=54)

/192.168.162.16:43107[1](queued=0,recved=54,sent=54)

/192.168.162.16:43110[1](queued=0,recved=53,sent=53)

/192.168.144.98:34702[1](queued=0,recved=41,sent=41)

/192.168.144.98:34135[1](queued=0,recved=61,sent=65)

/192.168.162.16:43109[1](queued=0,recved=54,sent=54)

/192.168.147.102:56038[1](queued=0,recved=165313,sent=165314)

/192.168.147.102:56039[1](queued=0,recved=165526,sent=165527)

/192.168.147.101:44124[1](queued=0,recved=162811,sent=162812)

/192.168.147.102:39271[1](queued=0,recved=41,sent=41)

/192.168.144.107:45476[1](queued=0,recved=166422,sent=166423)

/192.168.144.103:45100[1](queued=0,recved=54,sent=54)

/192.168.162.16:43133[0](queued=0,recved=1,sent=0)

/192.168.144.107:39945[1](queued=0,recved=1825,sent=1825)

/192.168.144.107:39919[1](queued=0,recved=325,sent=325)

/192.168.144.106:47163[1](queued=0,recved=17891,sent=17891)

/192.168.144.107:45488[1](queued=0,recved=166554,sent=166555)

/172.17.36.11:32728[1](queued=0,recved=54,sent=54)

/192.168.162.16:43115[1](queued=0,recved=54,sent=54)

Latency min/avg/max: 0/0/599

Received: 224869

Sent: 224817

Connections: 23

Outstanding: 0

Zxid: 0x68000af707

Mode: follower

Node count: 101081

(combines the information of the commands above)

wchs:

connections=number of connections

watch-paths=number of watched nodes

watchers=number of watchers

wchc:

session id mapped to its paths

wchp:

path mapped to its session ids

mntr:

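zk_version=version

zk_avg_latency=average latency

zk_max_latency=maximum latency

zk_min_latency=minimum latency

zk_packets_received=packets received

zk_packets_sent=packets sent

zk_num_alive_connections=number of connections

zk_outstanding_requests=number of outstanding (queued) requests

zk_server_state=leader/follower state

zk_znode_count=number of znodes

zk_watch_count=number of watches

zk_ephemerals_count=number of ephemeral znodes

zk_approximate_data_size=approximate data size

zk_open_file_descriptor_count=number of open file descriptors

zk_max_file_descriptor_count=maximum number of file descriptors

zk_followers=number of followers

zk_synced_followers=number of synced followers

zk_pending_syncs=number of pending syncs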

VII. Adjusting Log Settings

1. Change Where Server Logs Are Stored

By default the server log is written to zookeeper.out in the zookeeper-3.4.10 home directory.

drwxr-xr-x. 2 whx whx 4096 Jan 29 06:43 bin

-rw-rw-r--. 1 whx whx 84725 Mar 23 2017 build.xml

drwxr-xr-x. 2 whx whx 4096 Jan 29 06:40 conf

drwxr-xr-x. 10 whx whx 4096 Mar 23 2017 contrib

drwxrwxr-x. 3 whx whx 4096 Jan 29 06:26 datas

drwxr-xr-x. 2 whx whx 4096 Mar 23 2017 dist-maven

drwxr-xr-x. 6 whx whx 4096 Mar 23 2017 docs

-rw-rw-r--. 1 whx whx 1709 Mar 23 2017 ivysettings.xml

-rw-rw-r--. 1 whx whx 5691 Mar 23 2017 ivy.xml

drwxr-xr-x. 4 whx whx 4096 Mar 23 2017 lib

-rw-rw-r--. 1 whx whx 11938 Mar 23 2017 LICENSE.txt

-rw-rw-r--. 1 whx whx 3132 Mar 23 2017 NOTICE.txt

-rw-rw-r--. 1 whx whx 1770 Mar 23 2017 README_packaging.txt

-rw-rw-r--. 1 whx whx 1585 Mar 23 2017 README.txt

drwxr-xr-x. 5 whx whx 4096 Mar 23 2017 recipes

drwxr-xr-x. 8 whx whx 4096 Mar 23 2017 src

-rw-rw-r--. 1 whx whx 1456729 Mar 23 2017 zookeeper-3.4.10.jar

-rw-rw-r--. 1 whx whx 819 Mar 23 2017 zookeeper-3.4.10.jar.asc

-rw-rw-r--. 1 whx whx 33 Mar 23 2017 zookeeper-3.4.10.jar.md5

-rw-rw-r--. 1 whx whx 41 Mar 23 2017 zookeeper-3.4.10.jar.sha1

-rw-rw-r--. 1 whx whx 7463 Jan 29 06:34 zookeeper.out

[whx@hadoop101 zookeeper-3.4.10]$

To change this, set ZOO_LOG_DIR in /zookeeper-3.4.10/bin/zkEnv.sh by adding the following at the top of the file:

ZOO_LOG_DIR="/opt/module/zookeeper-3.4.10/logs"

if [ "x${ZOO_LOG_DIR}" = "x" ]

then

ZOO_LOG_DIR="."

fi

2. Change the Server Log Level

[whx@hadoop101 zookeeper-3.4.10]$ cd conf

[whx@hadoop101 conf]$ ll

total 16

-rw-rw-r--. 1 whx whx 535 Mar 23 2017 configuration.xsl

-rw-rw-r--. 1 whx whx 2161 Mar 23 2017 log4j.properties

-rw-rw-r--. 1 whx whx 1147 Jan 29 06:16 zoo.cfg

-rw-rw-r--. 1 whx whx 922 Mar 23 2017 zoo_sample.cfg

[whx@hadoop101 conf]$ vim log4j.properties

[whx@hadoop101 conf]$

# Define some default values that can be overridden by system properties

zookeeper.root.logger=INFO, CONSOLE

zookeeper.console.threshold=INFO

zookeeper.log.dir=.

zookeeper.log.file=zookeeper.log

zookeeper.log.threshold=DEBUG

zookeeper.tracelog.dir=.

zookeeper.tracelog.file=zookeeper_trace.log

#

# ZooKeeper Logging Configuration

#

# Format is "<default threshold> (, <appender>)+

# DEFAULT: console appender only

log4j.rootLogger=${zookeeper.root.logger}

# Example with rolling log file

#log4j.rootLogger=DEBUG, CONSOLE, ROLLINGFILE

# Example with rolling log file and tracing

#log4j.rootLogger=TRACE, CONSOLE, ROLLINGFILE, TRACEFILE

#

# Log INFO level and above messages to the console

#

log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender

log4j.appender.CONSOLE.Threshold=${zookeeper.console.threshold}

log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout

log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add ROLLINGFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.ROLLINGFILE=org.apache.log4j.RollingFileAppender

log4j.appender.ROLLINGFILE.Threshold=${zookeeper.log.threshold}

log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/${zookeeper.log.file}

# Max log file size of 10MB

log4j.appender.ROLLINGFILE.MaxFileSize=10MB

# uncomment the next line to limit number of backup files

#log4j.appender.ROLLINGFILE.MaxBackupIndex=10

log4j.appender.ROLLINGFILE.layout=org.apache.log4j.PatternLayout

log4j.appender.ROLLINGFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add TRACEFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.TRACEFILE=org.apache.log4j.FileAppender

log4j.appender.TRACEFILE.Threshold=TRACE

log4j.appender.TRACEFILE.File=${zookeeper.tracelog.dir}/${zookeeper.tracelog.file}

log4j.appender.TRACEFILE.layout=org.apache.log4j.PatternLayout

### Notice we are including log4j's NDC here (%x)

log4j.appender.TRACEFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L][%x] - %m%n

3. Change the Client Log Level

In bin/zkEnv.sh, at line 60, set ZOO_LOG4J_PROP="ERROR,CONSOLE"

[whx@hadoop101 bin]$ ll

total 36

-rwxr-xr-x. 1 whx whx 232 Mar 23 2017 README.txt

-rwxr-xr-x. 1 whx whx 1937 Mar 23 2017 zkCleanup.sh

-rwxr-xr-x. 1 whx whx 1056 Mar 23 2017 zkCli.cmd

-rwxr-xr-x. 1 whx whx 1534 Mar 23 2017 zkCli.sh

-rwxr-xr-x. 1 whx whx 1628 Mar 23 2017 zkEnv.cmd

-rwxr-xr-x. 1 whx whx 2696 Mar 23 2017 zkEnv.sh

-rwxr-xr-x. 1 whx whx 1089 Mar 23 2017 zkServer.cmd

-rwxr-xr-x. 1 whx whx 6773 Mar 23 2017 zkServer.sh

[whx@hadoop101 bin]$ vim zkEnv.sh

if [ "x${ZOO_LOG4J_PROP}" = "x" ]

then

ZOO_LOG4J_PROP="INFO,CONSOLE"

fi

VIII. Using the API

1. Setting Up the IntelliJ Environment

- Create a Maven project

- Add the dependencies to the pom file

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>RELEASE</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.8.2</version>

</dependency>

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.4.10</version>

</dependency>

</dependencies>

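- Add a log4j.properties file to the project's resources root.

Under the project's src/main/resources directory, create a new file named "log4j.properties" and fill it with the following content.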

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

2. Creating a ZooKeeper client

package test.com.whx;

import java.util.List;

import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooDefs.Ids;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.data.Stat;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class TestZK {

    private String connectString = "hadoop101:2181,hadoop102:2181";
    private int sessionTimeout = 6000;
    private ZooKeeper zooKeeper;

    // Equivalent to: zkCli.sh -server xxx:2181
    @Before
    public void init() throws Exception {
        // Create a ZooKeeper client object
        zooKeeper = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            // Callback: once a watched path triggers the specified event, the server
            // notifies the client, and the client then calls process() automatically
            @Override
            public void process(WatchedEvent event) {
            }
        });
        System.out.println(zooKeeper);
    }

    @After
    public void close() throws InterruptedException {
        if (zooKeeper != null) {
            zooKeeper.close();
        }
    }

    // ls
    @Test
    public void ls() throws Exception {
        Stat stat = new Stat();
        // A null watcher sets no watch; stat is filled with the metadata of "/"
        List<String> children = zooKeeper.getChildren("/", null, stat);
        System.out.println(children);
        System.out.println(stat);
    }

    // create [-s] [-e] path data
    @Test
    public void create() throws Exception {
        // The parent node /eclipse must already exist, otherwise a NoNodeException is thrown
        zooKeeper.create("/eclipse/child03", "333".getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
    }

    // get path
    @Test
    public void get() throws Exception {
        byte[] data = zooKeeper.getData("/eclipse", null, null);
        System.out.println(new String(data));
    }

    // set path data
    @Test
    public void set() throws Exception {
        zooKeeper.setData("/eclipse", "hi".getBytes(), -1); // -1 skips the version check
    }

    // delete path
    @Test
    public void delete() throws Exception {
        // Only works on a node without children
        zooKeeper.delete("/eclipse", -1);
    }

    // rmr path
    @Test
    public void rmr() throws Exception {
        deleteRecursively("/data");
    }

    // A node with children cannot be deleted, so remove the whole subtree
    // depth-first: children first, then the node itself
    private void deleteRecursively(String path) throws Exception {
        List<String> children = zooKeeper.getChildren(path, false);
        for (String child : children) {
            deleteRecursively(path + "/" + child);
        }
        zooKeeper.delete(path, -1);
    }

    // Check whether a node exists
    @Test
    public void ifNodeExists() throws Exception {
        // exists() returns null when the node is absent
        Stat stat = zooKeeper.exists("/data2", false);
        System.out.println(stat == null ? "does not exist" : "exists");
    }
}
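The `-1` passed to `setData` and `delete` above skips the version check. What the check does when a real version is supplied can be sketched with a small in-memory stand-in. `VersionedNode` and its methods are hypothetical names used only for illustration, not ZooKeeper classes; the real client reports a mismatch by throwing `KeeperException.BadVersionException` rather than returning `false`.

```java
import java.nio.charset.StandardCharsets;

// In-memory sketch of a znode's data-version check (illustration only, not ZooKeeper code).
final class VersionedNode {
    private byte[] data;
    private int version = 0; // a freshly created node starts at version 0

    VersionedNode(byte[] initialData) {
        this.data = initialData.clone();
    }

    /**
     * Applies the update only if expectedVersion equals the current version,
     * or if expectedVersion is -1 (skip the check). Returns true when applied.
     */
    synchronized boolean setData(byte[] newData, int expectedVersion) {
        if (expectedVersion != -1 && expectedVersion != version) {
            return false; // the node was modified since the caller last read it
        }
        data = newData.clone();
        version++; // every successful update increments the version by 1
        return true;
    }

    synchronized int getVersion() {
        return version;
    }

    synchronized String getDataAsString() {
        return new String(data, StandardCharsets.UTF_8);
    }
}
```

With the real client, the version to pass would typically come from the `Stat` filled in by a preceding `getData` call, so that an update made by another client in between is detected instead of silently overwritten.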

3. Creating a ZooKeeper client (with watchers)

package test.com.whx;

import java.util.List;
import java.util.concurrent.CountDownLatch;

import org.apache.zookeeper.KeeperException;
import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooKeeper;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class TestZKWatch {

    private String connectString = "hadoop101:2181,hadoop102:2181";
    private int sessionTimeout = 6000;
    private ZooKeeper zooKeeper;

    // Equivalent to: zkCli.sh -server xxx:2181
    @Before
    public void init() throws Exception {
        // Create a ZooKeeper client object
        zooKeeper = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            // Callback of the default watcher: once a watched path triggers the specified event,
            // the server notifies the client, and the client then calls process() automatically
            @Override
            public void process(WatchedEvent event) {
                // System.out.println(event.getPath() + " triggered event: " + event.getType());
            }
        });
        System.out.println(zooKeeper);
    }

    @After
    public void close() throws InterruptedException {
        if (zooKeeper != null) {
            zooKeeper.close();
        }
    }

    //====================================================================================================================
    // A watch is one-shot: it fires only once
    // ls path watch
    @Test
    public void lsAndWatch() throws Exception {
        // Passing true instead of a Watcher would use the client's default watcher (the one from init())
        zooKeeper.getChildren("/eclipse", new Watcher() {
            // Callback of the watcher registered by this call
            @Override
            public void process(WatchedEvent event) {
                System.out.println(event.getPath() + " triggered event: " + event.getType());
                try {
                    // Read the children again, this time without setting a new watch
                    List<String> children = zooKeeper.getChildren("/eclipse", false);
                    System.out.println("Current children of " + event.getPath() + ": " + children);
                } catch (KeeperException e) {
                    e.printStackTrace();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        });
        while (true) {
            // Keep the client process alive so that the callback can still run
            Thread.sleep(5000);
            System.out.println("Still alive......");
        }
    }

    //====================================================================================================================
    private CountDownLatch cdl = new CountDownLatch(1);

    // A watch is one-shot: it fires only once
    // get path watch
    @Test
    public void getAndWatch() throws Exception {
        // zooKeeper.getData("/eclipse", true, null) would instead use the default watcher from init().
        // The request itself is sent by the client's connect (send) thread.
        byte[] data = zooKeeper.getData("/eclipse", new Watcher() {
            @Override
            public void process(WatchedEvent event) {
                // Invoked on the client's listener (event) thread
                System.out.println(event.getPath() + " triggered event: " + event.getType());
                cdl.countDown(); // decrement the latch by 1
            }
        }, null);
        System.out.println("Data read: " + new String(data));
        cdl.await(); // block the current thread until the latch count reaches 0
    }

    //====================================================================================================================
    // Continuous watch, first attempt: this does NOT work, because the listener thread gets blocked
    @Test
    public void lsAndAlwaysWatch() throws Exception {
        // Passing true instead of a Watcher would use the client's default watcher
        zooKeeper.getChildren("/eclipse", new Watcher() {
            @Override
            public void process(WatchedEvent event) {
                // process() is called by the listener thread. That thread must not block,
                // otherwise it can never call process() again for later events
                System.out.println(event.getPath() + " triggered event: " + event.getType());
                System.out.println(Thread.currentThread().getName() + "---->process()---->still alive......");
                try {
                    lsAndAlwaysWatch(); // calls the method again, including its while(true) loop
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });
        while (true) {
            // Keeps the process running lsAndAlwaysWatch() alive. When reached from process(),
            // the listener thread is stuck here and never returns to deliver further events
            Thread.sleep(5000);
            System.out.println(Thread.currentThread().getName() + "---->while(true)---->still alive......");
        }
    }

    //====================================================================================================================
    // Continuous watch: this approach works
    @Test
    public void testLsAndAlwaysWatchCurrent() throws Exception {
        lsAndAlwaysWatchCurrent();
        while (true) {
            // Keep the client process alive; the loop runs on the test thread, not the listener thread
            Thread.sleep(5000);
            System.out.println(Thread.currentThread().getName() + "---->still alive......");
        }
    }

    // Helper (not a test itself): registers the watch and returns immediately
    public void lsAndAlwaysWatchCurrent() throws Exception {
        // Passing true instead of a Watcher would use the client's default watcher
        zooKeeper.getChildren("/eclipse", new Watcher() {
            @Override
            public void process(WatchedEvent event) {
                // process() is called by the listener thread, which must not block
                System.out.println(event.getPath() + " triggered event: " + event.getType());
                System.out.println(Thread.currentThread().getName() + "---->still alive......");
                try {
                    lsAndAlwaysWatchCurrent(); // register a fresh watch, then return right away
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });
    }
}
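The difference between a watch that fires once and one that is re-registered inside the callback can be modeled without a running cluster. The following is only an in-memory sketch of the one-shot rule; `OneShotWatchDemo` and its methods are hypothetical names, not ZooKeeper classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// In-memory sketch of one-shot watch semantics (illustration only, not ZooKeeper code).
final class OneShotWatchDemo {
    private final List<Consumer<String>> watchers = new ArrayList<>();

    /** Registers a watcher that is notified about the next change only. */
    void watch(Consumer<String> watcher) {
        watchers.add(watcher);
    }

    /** Simulates a change: every registered watcher fires once and is then dropped. */
    void change(String event) {
        List<Consumer<String>> toNotify = new ArrayList<>(watchers);
        watchers.clear(); // clear first, so a callback may register a new watch
        for (Consumer<String> watcher : toNotify) {
            watcher.accept(event);
        }
    }

    /** Number of notifications over three changes when the watch is set once. */
    static int countWithoutReRegistering() {
        OneShotWatchDemo node = new OneShotWatchDemo();
        int[] count = {0};
        node.watch(event -> count[0]++);
        for (int i = 0; i < 3; i++) {
            node.change("change-" + i);
        }
        return count[0];
    }

    /** Number of notifications over three changes when the callback re-registers. */
    static int countWithReRegistering() {
        OneShotWatchDemo node = new OneShotWatchDemo();
        int[] count = {0};
        registerForever(node, count);
        for (int i = 0; i < 3; i++) {
            node.change("change-" + i);
        }
        return count[0];
    }

    // Same idea as lsAndAlwaysWatchCurrent(): the callback registers the next watch and returns.
    private static void registerForever(OneShotWatchDemo node, int[] count) {
        node.watch(event -> {
            count[0]++;
            registerForever(node, count);
        });
    }
}
```

In this model the first variant sees only the first of the three changes, while the second sees all three, which is the reason the working test above re-registers the watch from inside `process()`.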

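IX. Case Study: Monitoring Servers Coming Online and Going Offline

In a distributed system there can be several master nodes, and they may come online or go offline at any time. Every client should notice these changes in near real time.

Routor.java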

package main.com.whx;

import java.util.ArrayList;
import java.util.List;

import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.KeeperException;
import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooDefs.Ids;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.data.Stat;

/**
 * 1. Fetch from the ZK cluster which Server processes are currently running, and read their information.
 * 2. Keep watching the Server processes; whenever something changes, fetch the information again.
 */
public class Routor {

    private String connectString = "hadoop101:2181,hadoop102:2181";
    private int sessionTimeout = 6000;
    private ZooKeeper zooKeeper;
    private String basePath = "/Servers";

    // Initialize the client object
    public void init() throws Exception {
        zooKeeper = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            @Override
            public void process(WatchedEvent event) {
            }
        });
    }

    // Check whether /Servers exists; create it if it does not
    public void check() throws KeeperException, InterruptedException {
        Stat stat = zooKeeper.exists(basePath, false);
        if (stat == null) {
            // Not there yet: create the root node. /Servers must be persistent,
            // because ephemeral nodes cannot have children
            zooKeeper.create(basePath, "".getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
        }
    }

    // Read the information of all currently running Server processes.
    // The watcher calls this method again, so the watch is kept alive.
    public List<String> getData() throws KeeperException, InterruptedException {
        List<String> result = new ArrayList<>();
        List<String> children = zooKeeper.getChildren(basePath, new Watcher() {
            @Override
            public void process(WatchedEvent event) {
                System.out.println(event.getPath() + " triggered event: " + event.getType());
                // Connection-state events (type None) also reach this watcher without
                // consuming the watch, so only re-read on an actual child-list change
                if (event.getType() != Watcher.Event.EventType.NodeChildrenChanged) {
                    return;
                }
                try {
                    getData(); // re-read the children and register a fresh one-shot watch
                } catch (KeeperException | InterruptedException e) {
                    e.printStackTrace();
                }
            }
        });
        // Read the Server information stored in each child node
        for (String child : children) {
            byte[] info = zooKeeper.getData(basePath + "/" + child, null, null);
            result.add(new String(info));
        }
        System.out.println("Latest information read: " + result);
        return result;
    }

    // Other business logic
    public void doOtherBusiness() throws InterruptedException {
        System.out.println("working......");
        while (true) {
            // Keep working
            Thread.sleep(5000);
            System.out.println("working......");
        }
    }

    public static void main(String[] args) throws Exception {
        Routor routor = new Routor();
        // Initialize the client
        routor.init();
        // Make sure the root node exists
        routor.check();
        // Read the data and start watching
        routor.getData();
        // Carry on with other work
        routor.doOtherBusiness();
    }
}

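// Whoever creates an ephemeral node owns it: once that client is gone or has crashed, the node disappears. (The author's own test conclusion.)

// To keep listening, the EventThread must stay available: call the registering method again from inside process(), and never trap the EventThread in a while(true) loop.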
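Each time the watch fires, the Routor above re-reads the whole child list. To tell which servers actually came online or went offline, the previous and current lists can be compared. This is a minimal sketch that is not part of the original code; `ServerListDiff` and the sample node names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Compares two snapshots of the children of /Servers (illustration only).
final class ServerListDiff {

    /** Entries present in current but not in previous: servers that came online. */
    static Set<String> added(List<String> previous, List<String> current) {
        Set<String> result = new TreeSet<>(current);
        result.removeAll(previous);
        return result;
    }

    /** Entries present in previous but not in current: servers that went offline. */
    static Set<String> removed(List<String> previous, List<String> current) {
        Set<String> result = new TreeSet<>(previous);
        result.removeAll(current);
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical child names, as two successive getChildren results might return them
        List<String> before = Arrays.asList("server0000000001", "server0000000002");
        List<String> after = Arrays.asList("server0000000002", "server0000000003");
        System.out.println("online:  " + added(before, after));   // [server0000000003]
        System.out.println("offline: " + removed(before, after)); // [server0000000001]
    }
}
```

In the Routor, the list returned by the previous `getChildren` call would have to be kept in a field so that the next callback has something to compare against.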

Server.java

package main.com.whx;

import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.KeeperException;
import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooDefs.Ids;
import org.apache.zookeeper.ZooKeeper;

/**
 * 1. On every start, before running its core business logic, the server registers an
 *    ephemeral node with the ZK cluster and stores some key information in that node.
 */
public class Server {

    private String connectString = "hadoop101:2181,hadoop102:2181";
    private int sessionTimeout = 6000;
    private ZooKeeper zooKeeper;
    private String basePath = "/Servers"; // parent of the ephemeral nodes

    // Initialize the client object
    public void init() throws Exception {
        zooKeeper = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            @Override
            public void process(WatchedEvent event) {
            }
        });
    }

    // Register an ephemeral node through the ZK client
    public void regist(String info) throws KeeperException, InterruptedException {
        // The node must be ephemeral and sequential. The parent /Servers must already
        // exist; Routor.check() creates it
        zooKeeper.create(basePath + "/server", info.getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);
    }

    // Other business logic
    public void doOtherBusiness() throws InterruptedException {
        System.out.println("working......");
        // Keep working
        while (true) {
            Thread.sleep(5000);
            System.out.println("working......");
        }
    }

    public static void main(String[] args) throws Exception {
        // The information to store in the node is taken from the first argument
        if (args.length < 1) {
            System.err.println("Usage: Server <info>");
            System.exit(1);
        }
        Server server = new Server();
        // Initialize the ZK client object
        server.init();
        // Register the node
        server.regist(args[0]);
        // Run the server's own business logic
        server.doOtherBusiness();
    }
}
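With `CreateMode.EPHEMERAL_SEQUENTIAL`, ZooKeeper appends a 10-digit, zero-padded counter to the requested path, so every Server ends up with its own node name under `/Servers`. The helper below only imitates that naming locally to show what the resulting paths look like. `SequentialName` is a hypothetical name, and the real counter is assigned by the ZooKeeper server, never by the client.

```java
import java.util.Locale;

// Imitates how sequential znode names are formed (illustration only, not ZooKeeper code).
final class SequentialName {

    /** Returns prefix followed by the counter as a 10-digit, zero-padded number. */
    static String of(String prefix, int counter) {
        return String.format(Locale.ROOT, "%s%010d", prefix, counter);
    }

    public static void main(String[] args) {
        System.out.println(of("/Servers/server", 0));  // /Servers/server0000000000
        System.out.println(of("/Servers/server", 12)); // /Servers/server0000000012
    }
}
```

Because the padding is fixed-width, sorting the child names as plain strings also sorts them by creation order.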