Python Examples of Convolutional Neural Network (CNN) Models (LeNet-5, Inception_v3)

I. Convolutional Neural Networks (CNN)

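A convolutional neural network (CNN) is a neural network architecture that uses convolution layers (convolutions) and pooling layers (pooling) to turn multi-channel input into feature maps, and then passes those feature maps through fully connected layers (full connections) to produce the final output.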

A CNN is typically structured as follows:

$$\text{Input} \rightarrow (\text{Convolution} \times N + \text{Pooling}) \times M \rightarrow \text{Fully connected (FC)} \times K$$

1. Convolution layer (convolution)

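A convolution layer takes a filter (also called a convolution kernel) of depth $d$, holding an $f\times f$ matrix at each depth, and slides it over an input with $d$ channels, each of which is a two-dimensional $m\times n$ feature map, applying the convolution operation at every position. Padding can also be added around the input so that the feature maps do not keep shrinking from layer to layer.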

The operation is illustrated below:

![]()

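A convolution layer can be seen as a process that uses convolution kernels to generate new feature matrices from the existing ones.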
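As a rough illustration of the scanning operation described above, the following plain-Python sketch applies one filter of depth $d$ to a $d$-channel input at stride 1, with optional zero padding. The function name `conv2d_single` and the toy input are assumptions made for this example. Like most deep learning libraries, it computes a cross-correlation, meaning the kernel is not flipped.

```python
def conv2d_single(feature_maps, kernel, padding=0):
    """Slide one d-channel f x f kernel over a d-channel m x n input (stride 1).

    feature_maps: list of d channels, each an m x n list of lists
    kernel:       list of d matrices, each f x f
    padding:      number of zero rows/columns added on every side
    """
    d = len(feature_maps)
    m, n = len(feature_maps[0]), len(feature_maps[0][0])
    f = len(kernel[0])
    width = n + 2 * padding

    # Zero-pad every channel so the output does not have to shrink.
    padded = []
    for channel in feature_maps:
        rows = [[0] * width for _ in range(padding)]
        rows += [[0] * padding + list(row) + [0] * padding for row in channel]
        rows += [[0] * width for _ in range(padding)]
        padded.append(rows)

    out_h = m + 2 * padding - f + 1
    out_w = n + 2 * padding - f + 1
    output = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Sum of element-wise products over all channels and kernel positions.
            output[i][j] = sum(
                padded[c][i + a][j + b] * kernel[c][a][b]
                for c in range(d) for a in range(f) for b in range(f)
            )
    return output


# Toy input: one channel, 4 x 4, holding the values 1..16.
x = [[[4 * r + c + 1 for c in range(4)] for r in range(4)]]
k = [[[1, 1, 1], [1, 1, 1], [1, 1, 1]]]  # one 3 x 3 kernel of ones

valid = conv2d_single(x, k)            # no padding: 4 x 4 shrinks to 2 x 2
same = conv2d_single(x, k, padding=1)  # padding 1: output stays 4 x 4
print(valid)
print(len(same), len(same[0]))
```

Without padding the output side is $m - f + 1$; with one ring of zeros around a $3\times 3$ kernel the output keeps the input size, which is the shrinkage that padding is meant to prevent.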

2. Pooling layer (pooling)

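The main role of a pooling layer is downsampling: it discards the less important samples in a feature map, which further reduces the number of parameters needed in the layers that follow. There are many pooling methods; the most common is max pooling, which simply takes the maximum value within each $n\times n$ window as the sampled value. Max pooling is illustrated below: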

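A pooling layer generally serves the following purposes:

It compresses the feature map, keeping the main features while reducing parameters, which improves the model's ability to generalize.

It increases the translation invariance of the features: when the input matrix is shifted or rotated slightly, the computed result changes little. (For details, see the article "pooling的作用", The Role of Pooling.)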
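The two effects listed above can be seen in a minimal plain-Python sketch of non-overlapping max pooling. The function name `max_pool` and the 4 x 4 feature map are invented for this illustration.

```python
def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value of each size x size window."""
    height, width = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, width - size + 1, size)
        ]
        for i in range(0, height - size + 1, size)
    ]


fm = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 1],
    [3, 4, 0, 8],
]
pooled = max_pool(fm)
print(pooled)  # [[6, 4], [7, 9]]

# Moving the peak value 9 one cell to the right keeps it inside the same window,
# so the pooled output is unchanged.
shifted = [row[:] for row in fm]
shifted[2][2], shifted[2][3] = shifted[2][3], shifted[2][2]
print(max_pool(shifted) == pooled)  # True
```

The 4 x 4 map is compressed to 2 x 2, and a small shift of a strong activation leaves the result untouched. The invariance only holds while the shift stays within one pooling window.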

3. Fully connected layer (Full Connection)

The feature maps are flattened into a one-dimensional vector, which is then fed into the fully connected layers.
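A minimal sketch of this step, in plain Python with made-up numbers: `flatten` unrolls the channels into one vector and `dense` computes one weighted sum plus bias per output neuron. Both helper names are assumptions for this example.

```python
def flatten(feature_maps):
    """Unroll a list of channels (each a 2-D list) into one flat vector."""
    return [value for channel in feature_maps for row in channel for value in row]


def dense(x, weights, bias):
    """Fully connected layer: one weighted sum plus bias per output neuron."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


maps = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]      # 2 channels of 2 x 2
vector = flatten(maps)                           # length 2 * 2 * 2 = 8
weights = [[1] * 8, [0, 1, 0, 0, 0, 0, 0, -1]]   # 2 output neurons
bias = [0.5, 0.0]
outputs = dense(vector, weights, bias)
print(vector)
print(outputs)
```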

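There are many explanations of convolutional neural networks online, so they are not covered in detail here. One very accessible tutorial on neural networks is recommended: 零基础入门深度学习(4) - 卷积神经网络 (Deep Learning from Scratch (4): Convolutional Neural Networks).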

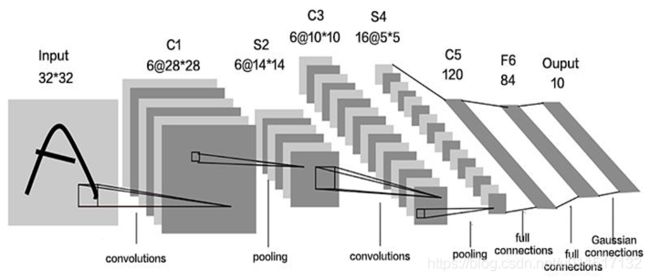

II. Handwritten Digit Recognition with LeNet-5

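LeNet-5 comes from the paper Gradient-Based Learning Applied to Document Recognition and reaches a recognition accuracy of up to 99.2% on the MNIST dataset (MNIST dataset download link). Its network structure is: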

$$\text{Input} \rightarrow (\text{Convolution} + \text{Pooling}) \times 2 \rightarrow \text{Fully connected (FC)} \times 2 \rightarrow \text{softmax}$$
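The TensorFlow code in this section uses 5 x 5 convolutions with 'SAME' padding and stride 1, 2 x 2 max pooling with stride 2, 32 and 64 output channels, and a 1024-unit fully connected layer, which is wider than the original LeNet-5. As a quick check on where numbers such as 7*7*64 come from, the sketch below traces the tensor shapes and counts the trainable parameters. The helper name `same_padding_out` is made up for this illustration.

```python
import math


def same_padding_out(size, stride):
    """Spatial output size under 'SAME' padding: ceil(size / stride)."""
    return math.ceil(size / stride)


side, channels = 28, 1        # MNIST images are 28 x 28 with one channel
params = 0
for out_channels in (32, 64):
    params += 5 * 5 * channels * out_channels + out_channels  # conv weights + biases
    side = same_padding_out(side, 1)   # 5 x 5 convolution, stride 1: size unchanged
    side = same_padding_out(side, 2)   # 2 x 2 max pooling, stride 2: size halved
    channels = out_channels
    print("after conv + pool:", (side, side, channels))

flat = side * side * channels          # input width of the first fully connected layer
params += flat * 1024 + 1024           # fully connected layer with 1024 neurons
params += 1024 * 10 + 10               # output layer with 10 classes
print("flattened size:", flat)
print("trainable parameters:", params)
```

Almost all of the parameters sit in the first fully connected layer, which is why its input size of 7*7*64 = 3136 matters.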

The LeNet-5 structure is implemented with the deep learning library TensorFlow and trained on the MNIST handwritten digit dataset. The Python training code is as follows:

# -*- coding: utf-8 -*-
"""
Created on Tue Aug 20 17:51:50 2019

@author: nbszg
"""

# Import dependencies (this script uses the TensorFlow 1.x graph/session API)
import os
import struct
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt

# Data loading function
def load_mnist(path, kind='train'):
    """Load MNIST data.

    Args:
        path: data path
        kind: 'train' or 't10k'
    Returns:
        images and labels
    """
    labels_path = os.path.join(path, '%s-labels.idx1-ubyte' % kind)  # label file
    images_path = os.path.join(path, '%s-images.idx3-ubyte' % kind)  # image file
    with open(labels_path, 'rb') as lbpath:
        # 8-byte big-endian header: magic number, number of labels
        magic, n = struct.unpack('>II', lbpath.read(8))
        labels = np.fromfile(lbpath, dtype=np.uint8)
    with open(images_path, 'rb') as imgpath:
        # 16-byte big-endian header: magic number, image count, rows, columns
        magic, num, rows, cols = struct.unpack('>IIII', imgpath.read(16))
        images = np.fromfile(imgpath, dtype=np.uint8).reshape(len(labels), 784)
    return images, labels

path = 'D:/data/mnist/'  # directory containing the data
X_train, y_train = load_mnist(path, kind='train')  # training set
X_test, y_test = load_mnist(path, kind='t10k')  # test set

# One-hot encode the labels
def y_onehot(y):
    """One-hot encoding.

    Args:
        y: labels
    Returns:
        one-hot labels
        e.g. 1 -> [0,1,0,0,0,0,0,0,0,0]
    """
    n_class = 10
    y_labels = np.eye(n_class)[y]  # row y of the identity matrix is the one-hot vector for y
    return y_labels

y_train_labels = y_onehot(y_train)
y_test_labels = y_onehot(y_test)

# Network weight variables
def weight_variable(shape):
    """weight_variable

    Args:
        shape: weight's shape with 4 dimensions
    """
    initial = tf.truncated_normal(shape, stddev=0.1)  # initialize from a truncated normal distribution
    return tf.Variable(initial)

# Network bias variables
def bias_variable(shape):
    """bias_variable

    Args:
        shape: bias's shape with 1 dimension
    """
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)

# Convolution layer with padding='SAME'
def conv2d(x, W):
    """conv2d layer

    Args:
        x: input
        W: weights
    Returns:
        conv2d output
    """
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

# Pooling layer, using max pooling
def max_pool_2x2(x):
    """max pooling layer

    Args:
        x: input
    Returns:
        max pooling output
    """
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

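# Hyperparameters
learning_rate = 0.0001  # learning rate
training_steps = 2000  # number of training rounds (each round is one pass over the training set)
batch_size = 200  # mini-batch size
display_step = 100  # report progress every display_step rounds

X = tf.placeholder(tf.float32, [None, 784])  # input images
Y = tf.placeholder(tf.float32, [None, 10])  # one-hot labels

W_conv1 = weight_variable([5, 5, 1, 32])  # first convolution: 5*5 kernel, 1 input channel, 32 output channels
b_conv1 = bias_variable([32])  # first convolution: one bias per output channel (32)
W_conv2 = weight_variable([5, 5, 32, 64])  # second convolution: 5*5 kernel, 32 input channels, 64 output channels
b_conv2 = bias_variable([64])  # second convolution: one bias per output channel (64)
W_fc1 = weight_variable([7 * 7 * 64, 1024])  # fully connected layer: the 64 channels of 7*7 are flattened to 7*7*64; 1024 neurons
b_fc1 = bias_variable([1024])  # the corresponding 1024 biases
keep_prob = tf.placeholder("float")  # dropout keep probability
W_fc2 = weight_variable([1024, 10])  # weights of the output fully connected layer
b_fc2 = bias_variable([10])  # biases of the output fully connected layer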

def conv_net(x, W_conv1, b_conv1, W_conv2, b_conv2, W_fc1, b_fc1, W_fc2, b_fc2, keep_prob):
    """conv_net model

    Args:
        ...
    Returns:
        conv_net model prediction
    """
    x_image = tf.reshape(x, [-1, 28, 28, 1])
    h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)  # first convolution layer with ReLU
    h_pool1 = max_pool_2x2(h_conv1)  # first pooling layer: stride 2 reduces the image to 14*14
    h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)  # second convolution layer with ReLU
    h_pool2 = max_pool_2x2(h_conv2)  # second pooling layer: stride 2 reduces the image to 7*7
    h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])  # flatten the last pooling output to 7*7*64
    h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)  # fully connected layer
    h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)
    y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)  # final fully connected softmax layer
    return y_conv

y_conv = conv_net(X, W_conv1, b_conv1, W_conv2, b_conv2, W_fc1, b_fc1, W_fc2, b_fc2, keep_prob)
# Cross-entropy objective; the softmax output is clipped to avoid log(0).
# reduce_mean averages over every (sample, class) entry, not per sample.
cross_entropy = -tf.reduce_mean(Y*tf.log(tf.clip_by_value(y_conv, 1e-11, 1.0)))
train_step = tf.train.AdamOptimizer(learning_rate).minimize(cross_entropy)  # Adam optimizer
correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(Y, 1))  # whether each prediction is correct
accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))  # accuracy
init = tf.global_variables_initializer()

# Model loading function
def load_model(sess, saver, ckpt_path):
    latest_ckpt = tf.train.latest_checkpoint(ckpt_path)
    if latest_ckpt:  # if a model was trained before, continue training from it
        print('resume from', latest_ckpt)
        saver.restore(sess, latest_ckpt)
        return int(latest_ckpt[latest_ckpt.rindex('-') + 1:])  # step number parsed from the checkpoint name
    else:
        print('building model start')
        sess.run(tf.global_variables_initializer())
        return -1

# Model training
with tf.Session() as sess:
    sess.run(init)
    total_batch = int(len(X_train)/batch_size)
    saver = tf.train.Saver(tf.global_variables())
    # Look for checkpoints in the directory that saver.save() writes to below
    last_epoch = load_model(sess, saver, 'D:/data/mnist/')
    for step in range(1, training_steps+1):
        for i in range(1, total_batch+1):  # +1 so that the last batch is included
            batch_x = X_train[(i-1)*batch_size: i*batch_size]
            batch_y = y_train_labels[(i-1)*batch_size: i*batch_size]
            sess.run(train_step, feed_dict={
                X: batch_x, Y: batch_y, keep_prob: 0.5})
        if step % display_step == 0:
            # Evaluate on the first 1000 training samples with dropout disabled
            loss, acc = sess.run([cross_entropy, accuracy], feed_dict={
                X: X_train[0:1000], Y: y_train_labels[0:1000], keep_prob: 1.0})
            print("Step"+str(step)+", Minibatch Loss=========>"+"{:.4f}".format(loss)+", Training Accuracy=========>"+"{:.3f}".format(acc))
            saver.save(sess, 'D:/data/mnist/cnn_mnist.module', global_step=step)
    loss, acc = sess.run([cross_entropy, accuracy], feed_dict={
        X: X_train[0:1000], Y: y_train_labels[0:1000], keep_prob: 1.0})
    print("Step"+str(step)+", Minibatch Loss="+"{:.4f}".format(loss)+", Training Accuracy="+"{:.3f}".format(acc))
    print("Optimization Finished!")
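`load_mnist` relies on the layout of the IDX files: a big-endian header followed by raw unsigned bytes. To make the `struct.unpack('>II', ...)` call concrete, the sketch below builds a tiny label file in memory and parses it the same way. The three labels are invented for this example; 2049 is the magic number of MNIST label files.

```python
import io
import struct

# Build a fake IDX1 label file in memory: magic number, item count, then one byte per label.
fake_labels = bytes([7, 2, 1])
buffer = io.BytesIO(struct.pack('>II', 2049, len(fake_labels)) + fake_labels)

# '>II' reads two big-endian unsigned 32-bit integers (8 bytes in total).
magic, count = struct.unpack('>II', buffer.read(8))
labels = list(buffer.read(count))  # the remaining bytes are the labels themselves

print(magic, count, labels)
```

The image files follow the same pattern with a 16-byte header (`'>IIII'`) that additionally stores the number of rows and columns.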

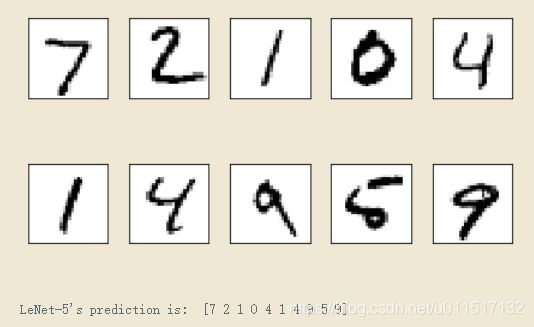
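The loss in the training code clips the softmax output to [1e-11, 1.0] before taking the logarithm, so that a predicted probability of exactly 0 does not produce `log(0)`. Note that `tf.reduce_mean` there averages over every entry of the batch-by-class matrix, so the reported value is the usual per-sample cross-entropy divided by the number of classes. A small plain-Python sketch of both quantities, with made-up probabilities and hypothetical helper names:

```python
import math


def clipped_log(p, low=1e-11, high=1.0):
    """log(p) after clipping p into [low, high], mirroring tf.clip_by_value."""
    return math.log(min(max(p, low), high))


def cross_entropy_per_sample(y_true, y_pred):
    """Mean over samples of -sum_k y_k * log(p_k)."""
    losses = [-sum(t * clipped_log(p) for t, p in zip(ts, ps))
              for ts, ps in zip(y_true, y_pred)]
    return sum(losses) / len(losses)


def cross_entropy_all_entries(y_true, y_pred):
    """Mean over every (sample, class) entry, as in the training script."""
    terms = [-t * clipped_log(p) for ts, ps in zip(y_true, y_pred) for t, p in zip(ts, ps)]
    return sum(terms) / len(terms)


y_true = [[0, 1, 0], [1, 0, 0]]              # one-hot labels (3 classes for brevity)
y_pred = [[0.1, 0.8, 0.1], [0.0, 0.5, 0.5]]  # second sample assigns 0 to the true class

per_sample = cross_entropy_per_sample(y_true, y_pred)
all_entries = cross_entropy_all_entries(y_true, y_pred)
print(per_sample, all_entries)
```

Both values stay finite despite the zero probability, and they differ only by the constant factor 3, the number of classes in this toy example.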

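The trained model is then used to predict samples from the test set. The predictions for 10 images are listed here:

As can be seen, LeNet predicts all 10 handwritten digit images correctly.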

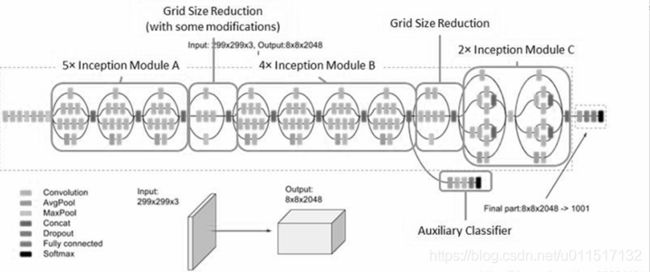
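The prediction code itself is not shown here. Conceptually, the softmax layer turns 10 scores into probabilities, and the predicted digit is the index of the largest one, which is what `tf.argmax(y_conv, 1)` computes in the accuracy calculation. A plain-Python sketch with invented scores and hypothetical helper names:

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_digit(scores):
    """Return the class index with the highest probability."""
    probs = softmax(scores)
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical network outputs for one image (one score per digit 0..9).
scores = [0.1, 0.3, 2.5, 0.0, -1.2, 0.4, 0.2, 4.1, 0.0, 0.9]
probs = softmax(scores)
predicted = predict_digit(scores)
print(predicted)
```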

III. Cat vs. Dog Image Classification with Inception-V3

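The Inception network is an important milestone in the history of CNN classifiers. Earlier CNNs kept chaining convolution layers in series, hoping that ever deeper networks would give better results. Networks in the Inception family are no longer a simple matter of stacking layers to add depth. Inception-V3 is one member of this family. Its network structure is as follows: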

For more on Inception-V3, see the article "Deep Learning Convolutional Neural Networks: Building and Implementing the Classic GoogLeNet (Inception V3) Network".

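Keras is a high-level neural network API written in Python that can run on top of TensorFlow, CNTK, or Theano. With TensorFlow as the backend, Keras can be seen as a wrapper around TensorFlow, and code written with Keras is usually simpler than the equivalent TensorFlow code. The Inception-V3 example here is therefore written with Keras.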

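The Inception-V3 architecture has roughly 25 million parameters, so training it from scratch on an ordinary personal computer is practically impossible. Transfer learning is therefore used here: the parameters of most layers of an already trained Inception-V3 are loaded directly, and only the last few layers of the network are trained.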
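To give a sense of how much smaller the training problem becomes, the sketch below counts the parameters of the new classification head used in the code of this section: global average pooling, a 1024-unit dense layer, and a softmax layer over the classes. It assumes that the last Inception-V3 block outputs 2048 channels, which holds for the standard Keras model with `include_top=False`. The helper name `dense_params` is made up for this example.

```python
def dense_params(inputs, outputs):
    """Weights plus biases of a fully connected layer."""
    return inputs * outputs + outputs


backbone_channels = 2048   # assumed channel count of the frozen Inception-V3 output
hidden_units = 1024
nb_classes = 2             # cat and dog

# Global average pooling has no parameters; it reduces each channel to one number.
head_params = dense_params(backbone_channels, hidden_units) + dense_params(hidden_units, nb_classes)
print(head_params)
```

Under these assumptions only about 2.1 million parameters are trained, a small fraction of the full network.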

The Python training code is as follows:

# -*- coding: utf-8 -*-
"""
Created on Tue Aug 21 13:31:50 2019

@author: nbszg
"""

# Import dependencies
import os
import numpy as np
from keras.applications.inception_v3 import InceptionV3
from keras.layers import Dense, Flatten, GlobalAveragePooling2D
from keras.models import Model, load_model
from keras.optimizers import SGD
from keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt
import warnings
warnings.filterwarnings('ignore')

class PowerTransferMode:
    # Data preparation
    def DataGen(self, dir_path, img_row, img_col, batch_size, is_train):
        """
        Build a data generator (with data augmentation for training).

        Args:
            dir_path: directory containing the data
            img_row: image height
            img_col: image width
            batch_size: training batch size
            is_train: boolean, whether this is the training set
        Returns:
            a directory iterator that yields batches of images and labels
        """
        # Data augmentation enlarges the training data through shifts, rotations, mirroring, added noise, etc.
        if is_train:
            datagen = ImageDataGenerator(rescale=1./255,
                zoom_range=0.25, rotation_range=15.,
                channel_shift_range=25., width_shift_range=0.02, height_shift_range=0.02,
                horizontal_flip=True, fill_mode='constant')
        else:
            datagen = ImageDataGenerator(rescale=1./255)  # outside training, only rescale the pixels
        generator = datagen.flow_from_directory(
            dir_path, target_size=(img_row, img_col),
            batch_size=batch_size,
            #class_mode='binary',
            shuffle=is_train)
        return generator

    # InceptionV3 model
    def InceptionV3_model(self, lr=0.005, decay=1e-6, momentum=0.9, nb_classes=2, img_rows=197, img_cols=197, RGB=True):
        """
        InceptionV3_model

        Args:
            ...
        Returns:
            InceptionV3_model
        """
        color = 3 if RGB else 1
        # Transfer learning: reuse InceptionV3 with ImageNet weights, without its top classifier
        base_model = InceptionV3(weights='imagenet', include_top=False, pooling=None, input_shape=(img_rows, img_cols, color), classes=nb_classes)

        # Freeze all layers of base_model so that the bottleneck features are obtained correctly
        for layer in base_model.layers:
            layer.trainable = False  # the reused InceptionV3 part is not trained again

        x = base_model.output
        # Add our own fully connected classification layers
        x = GlobalAveragePooling2D()(x)  # global average pooling layer
        x = Dense(1024, activation='relu')(x)  # fully connected layer
        predictions = Dense(nb_classes, activation='softmax')(x)  # softmax output with the predictions

        # Build and compile the model
        model = Model(inputs=base_model.input, outputs=predictions)
        sgd = SGD(lr=lr, decay=decay, momentum=momentum, nesterov=True)  # optimizer
        model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
        return model

    # Train the model
    def train_model(self, model, epochs, train_generator, steps_per_epoch, validation_generator,
                    validation_steps, model_url, is_load_model=False):
        """
        train model

        Args:
            ...
        Returns:
            training history
        """
        if is_load_model and os.path.exists(model_url):
            model = load_model(model_url)  # load a previously saved model
        history_ft = model.fit_generator(
            train_generator,
            steps_per_epoch=steps_per_epoch,
            epochs=epochs,
            validation_data=validation_generator,
            validation_steps=validation_steps)  # train the model
        model.save(model_url, overwrite=True)  # save the model
        return history_ft

image_size = 197
batch_size = 32

transfer = PowerTransferMode()
train_generator = transfer.DataGen('D:/data/cat_vs_dog/train_set/', image_size, image_size, batch_size, True)
validation_generator = transfer.DataGen('D:/data/cat_vs_dog/validation_set/', image_size, image_size, batch_size, False)
# InceptionV3_model has no is_plot_model parameter, so it is not passed here
model = transfer.InceptionV3_model(nb_classes=2, img_rows=image_size, img_cols=image_size)
# 'D:/data/cat_vs_dog/inceptionv3_nbs.model' is where the trained model is saved
# (and loaded from when is_load_model=True); the pretrained weights come from weights='imagenet'
history_ft = transfer.train_model(model, 10, train_generator, 600, validation_generator, 60, 'D:/data/cat_vs_dog/inceptionv3_nbs.model', is_load_model=False)
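In `fit_generator`, `steps_per_epoch` is the number of batches drawn from the generator per epoch, so 600 steps at a batch size of 32 corresponds to 19,200 images per epoch. If the dataset size is known, the step count is commonly derived from it. The sample counts below are placeholders, not figures from this article, and `steps_for` is a hypothetical helper.

```python
import math


def steps_for(num_samples, batch_size):
    """Number of batches needed to see every sample once (last batch may be partial)."""
    return math.ceil(num_samples / batch_size)


batch_size = 32
images_per_epoch = 600 * batch_size          # images drawn per epoch with 600 steps
train_steps = steps_for(19200, batch_size)   # hypothetical training-set size
val_steps = steps_for(2000, batch_size)      # hypothetical validation-set size
print(images_per_epoch, train_steps, val_steps)
```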