- Using a one-dimensional convolutional neural network (1D CNN) as an example

1 Building the dataset directly from NumPy arrays and tensors

1.1 Structure of the 1D CNN

```python
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        # Input shape: (batch, 1, 500)
        self.conv1 = nn.Conv1d(in_channels=1, out_channels=10, kernel_size=3, stride=2)  # -> (batch, 10, 249)
        self.max_pool1 = nn.MaxPool1d(kernel_size=3, stride=2)                           # -> (batch, 10, 124)
        self.conv2 = nn.Conv1d(10, 20, 3, 2)                                              # -> (batch, 20, 61)
        self.max_pool2 = nn.MaxPool1d(3, 2)                                               # -> (batch, 20, 30)
        self.conv3 = nn.Conv1d(20, 40, 3, 2)                                              # -> (batch, 40, 14)
        self.liner1 = nn.Linear(40 * 14, 120)
        self.liner2 = nn.Linear(120, 84)
        self.liner3 = nn.Linear(84, 4)  # 4 output classes

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = self.max_pool1(x)
        x = F.relu(self.conv2(x))
        x = self.max_pool2(x)
        x = F.relu(self.conv3(x))
        x = x.view(-1, 40 * 14)  # flatten to (batch, 560)
        x = F.relu(self.liner1(x))
        x = F.relu(self.liner2(x))
        x = self.liner3(x)  # raw logits; nn.CrossEntropyLoss applies log-softmax internally
        return x
```
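As a quick check of the 40 * 14 input size of liner1: with padding 0 and dilation 1 (the defaults used above), nn.Conv1d and nn.MaxPool1d both produce an output length of floor((L_in - kernel_size) / stride) + 1. The sketch below applies that formula layer by layer to a length-500 input; out_len is a hypothetical helper, not a PyTorch function.

```python
def out_len(length, kernel_size, stride):
    """Output length of Conv1d/MaxPool1d with padding=0 and dilation=1."""
    return (length - kernel_size) // stride + 1


# (kernel_size, stride) for conv1, max_pool1, conv2, max_pool2, conv3
layers = [(3, 2), (3, 2), (3, 2), (3, 2), (3, 2)]

length = 500
lengths = [length]
for k, s in layers:
    length = out_len(length, k, s)
    lengths.append(length)

print(lengths)           # [500, 249, 124, 61, 30, 14]
print(40 * lengths[-1])  # 560 = 40 * 14, the in_features of liner1
```

Another way to check this without doing the arithmetic is to pass a dummy input such as torch.zeros(1, 1, 500) through the convolution and pooling layers and print the resulting shape.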

1.2 Shape of the data

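```python
from sklearn.model_selection import train_test_split

# dataSet: feature array of shape (73611, 500); labels: label array of shape (73611,)
x_train, x_test, y_train, y_test = train_test_split(dataSet, labels, test_size=0.3, random_state=42)
print((x_train.shape, y_train.shape, x_test.shape, y_test.shape))
```

out:

```
((51527, 500), (51527,), (22084, 500), (22084,))
```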
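The split sizes above follow from how train_test_split rounds a fractional test_size: scikit-learn takes the ceiling of test_size * n_samples for the test set and, when train_size is not given, assigns the remaining rows to the training set. A quick check, assuming the full dataset has 51527 + 22084 = 73611 rows:

```python
import math

n_samples = 51527 + 22084        # total rows before splitting
test_size = 0.3

n_test = math.ceil(test_size * n_samples)   # the test set size is rounded up
n_train = n_samples - n_test                # the remainder goes to training

print(n_samples, n_test, n_train)  # 73611 22084 51527
```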

1.3 Converting the data for PyTorch

1.3.1 Convert the NumPy dataset to tensors

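```python
import torch

# torch.from_numpy keeps the array's dtype (e.g. float64 stays float64) and shares its memory
x_train = torch.from_numpy(x_train)
y_train = torch.from_numpy(y_train)
x_test = torch.from_numpy(x_test)
y_test = torch.from_numpy(y_test)
```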
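One detail of torch.from_numpy worth knowing: the tensor it returns shares memory with the NumPy array and keeps its dtype. The sketch below shows both effects on a small stand-in array.

```python
import numpy as np
import torch

arr = np.zeros(3)                # NumPy defaults to float64
t = torch.from_numpy(arr)        # no copy: t and arr share the same memory

arr[0] = 5.0                     # modifying the array...
print(t)                         # ...is visible through the tensor
print(t.dtype)                   # torch.float64, inherited from the array
```

Because of this, an in-place change to the original NumPy array after conversion also changes the tensor; the .float() conversion in the next step makes a float32 copy, which breaks that link for the features.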

1.3.2 Convert the feature data to torch.float32

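```python
# The nn.Conv1d and nn.Linear weights are float32, so the inputs must be float32 too.
# .float() avoids calling torch.tensor() on an existing tensor, which PyTorch warns against.
x_train = x_train.float()
x_test = x_test.float()
```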
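The sketch below shows why this conversion is needed, assuming the features arrive as float64 (NumPy's default floating type): a layer with the default float32 weights rejects a float64 input with a RuntimeError, and .float() fixes it. The layer mirrors conv1 from 1.1; the all-zero input is only a stand-in.

```python
import torch
import torch.nn as nn

conv = nn.Conv1d(in_channels=1, out_channels=10, kernel_size=3, stride=2)  # float32 weights by default
x64 = torch.zeros(1, 1, 500, dtype=torch.float64)  # what from_numpy gives for a float64 array

failed = False
try:
    conv(x64)
except RuntimeError as err:    # dtype mismatch between input and weights
    failed = True
    print('float64 input fails:', err)

out = conv(x64.float())        # .float() converts the input to torch.float32
print(out.shape)               # torch.Size([1, 10, 249])
```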

1.3.3 Reshape x_train and x_test

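Each sample fed to the network during training must have shape (1, 1, 500), i.e. (batch size, channels, length), which is the input layout nn.Conv1d expects. Reshaping x_train to (N, 1, 1, 500) means that iterating over it in 1.4 yields exactly one such sample per step; x_test only needs the (N, 1, 500) layout, which can be fed to the network as a single batch.

```python
x_train = x_train.reshape(x_train.shape[0], 1, 1, x_train.shape[1])
# Use x_test's own length here: after the line above, x_train.shape[1] is 1
x_test = x_test.reshape(x_test.shape[0], 1, x_test.shape[1])
print((x_train.shape, x_test.shape))
```

out:

```
(torch.Size([51527, 1, 1, 500]), torch.Size([22084, 1, 500]))
```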
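The two reshapes can be checked on a small stand-in tensor. For x_test, reshape(N, 1, 500) is the same as unsqueeze(1), which inserts the channel dimension; the extra dimension on x_train is what makes iteration over dim 0 yield one (1, 1, 500) batch per step.

```python
import torch

x = torch.zeros(8, 500)                              # stand-in: 8 samples of length 500

x_batch = x.unsqueeze(1)                             # (8, 1, 500): (batch, channels, length)
x_single = x.reshape(x.shape[0], 1, 1, x.shape[1])   # (8, 1, 1, 500), as done for x_train

first = next(iter(x_single))                         # iterating over dim 0 yields one sample
print(x_batch.shape, first.shape)                    # torch.Size([8, 1, 500]) torch.Size([1, 1, 500])
```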

1.3.4 Shape and data type of the labels

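- Section 1.2 shows the label shapes: y_train.shape = (51527,) and y_test.shape = (22084,), with a float dtype. nn.CrossEntropyLoss, however, expects class indices of dtype torch.long, and because the training loop in 1.4 feeds one sample at a time (a batch of size 1), each target y_train[i] must have shape (1,). Reshaping the labels to (51527, 1) and (22084, 1) gives exactly that.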

```python
# (N,) -> (N, 1), so that y_train[i] has shape (1,)
y_train = y_train.reshape(y_train.shape[0], 1)
y_test = y_test.reshape(y_test.shape[0], 1)

# Class indices must be integers (torch.long) for nn.CrossEntropyLoss
y_train = y_train.long()
y_test = y_test.long()
```
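A minimal check of these label shapes, using a random stand-in for one network output and three made-up labels: after the reshape and .long(), each y[i] is a one-element long tensor, which is what nn.CrossEntropyLoss accepts as the target for a batch of one.

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()

y = torch.tensor([0.0, 3.0, 1.0])            # float labels, like y_train before conversion
y = y.reshape(y.shape[0], 1).long()          # (3, 1), dtype torch.long

logits = torch.randn(1, 4)                   # stand-in network output: (batch=1, 4 classes)
label = y[1]                                 # shape (1,), class index 3

loss = criterion(logits, label)              # target shape (1,) matches batch size 1
print(label.shape, label.dtype, loss.shape)  # torch.Size([1]) torch.int64 torch.Size([])
```

With mini-batches, the target shape is simply (batch,), so the labels could stay 1-D in that case.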

1.4 Training

```python
import time

import torch.nn as nn
import torch.optim as optim
from tqdm import tqdm

net = Net()  # the model defined in 1.1

# Define the loss function and the optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

start = time.time()
for epoch in tqdm(range(10)):
    running_loss = 0.0
    # One sample per step: input_data has shape (1, 1, 500)
    for i, input_data in enumerate(x_train, 0):
        label = y_train[i]  # shape (1,), dtype torch.long

        optimizer.zero_grad()
        outputs = net(input_data)  # shape (1, 4)
        loss = criterion(outputs, label)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if i % 2000 == 1999:  # print the average loss every 2000 samples
            print('[%d, %5d] loss: %0.3f' % (epoch + 1, i + 1, running_loss / 2000))
            running_loss = 0.0

elapsed = time.time() - start
print('time = %2dm:%2ds' % (elapsed // 60, elapsed % 60))
```
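Feeding one sample per step works but is slow with 51527 samples per epoch. A common alternative, not used in the original, is to wrap the tensors in TensorDataset and let DataLoader build mini-batches; then x_train only needs the (N, 1, 500) layout and the labels stay 1-D. The sketch below uses random stand-in data and a small stand-in model (both assumptions, so that it runs on its own) and evaluates on the whole test set at once.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Stand-in data (assumption): 64 training and 16 test samples of length 500
x_train = torch.randn(64, 1, 500)          # (N, channels, length)
y_train = torch.randint(0, 4, (64,))       # 1-D long labels, one class index per sample
x_test = torch.randn(16, 1, 500)
y_test = torch.randint(0, 4, (16,))

# Stand-in model (assumption); the Net from 1.1 can be used instead
model = nn.Sequential(
    nn.Conv1d(1, 4, kernel_size=3, stride=2),  # length 500 -> 249
    nn.ReLU(),
    nn.Flatten(),                              # (batch, 4, 249) -> (batch, 996)
    nn.Linear(4 * 249, 4),
)

loader = DataLoader(TensorDataset(x_train, y_train), batch_size=16, shuffle=True)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

model.train()
for epoch in range(2):
    for inputs, labels in loader:          # inputs: (16, 1, 500), labels: (16,)
        optimizer.zero_grad()
        loss = criterion(model(inputs), labels)
        loss.backward()
        optimizer.step()

# Evaluate the whole test set in one batch; a (N, 1, 500) test tensor fits directly
model.eval()
with torch.no_grad():
    predictions = model(x_test).argmax(dim=1)
accuracy = (predictions == y_test).float().mean().item()
print('test accuracy: %.3f' % accuracy)
```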

2 Building a dataset by subclassing torch.utils.data.Dataset

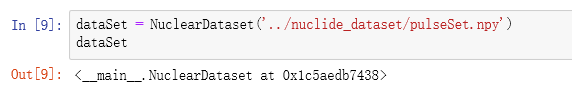

class NuclearDataset(Dataset):

""" Nuclear Dataset."""

def __init__(self, data_file, root_dir=None, transform=None):

"""

Args:

data_file (string): Path to the data file with annotations.

root_dir (string): Directory with all the images.

transform (callable, optional): Optional transform to be applied

on a sample.

"""

# self.landmarks_frame = pd.read_csv(csv_file)

# 加载数据

self.landmarks_frame = np.load(data_file)

self.root_dir = root_dir

self.transform = transform

def __len__(self):

return len(self.landmarks_frame)

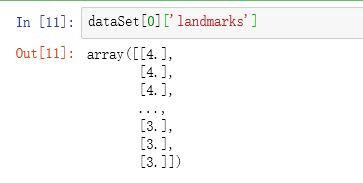

def __getitem__(self, idx):

if torch.is_tensor(idx):

idx = idx.tolist()

# img_name = os.path.join(self.root_dir,self.landmarks_frame.iloc[idx, 0])

# image = io.imread(img_name)

# landmarks = self.landmarks_frame.iloc[idx, 1:]

landmarks = self.landmarks_frame[:, -1]

landmarks = np.array([landmarks])

# landmarks = landmarks.astype('float').reshape(-1, 2)

landmarks = landmarks.astype('float').reshape(-1, 1)

sample = {'landmarks': landmarks}

if self.transform:

sample = self.transform(sample)

return sample
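As written, NuclearDataset returns only the last column of a row. For training, __getitem__ usually returns the input and the label together. The sketch below is a hypothetical variant, NuclearSampleDataset, assuming each row of the .npy file holds 500 features followed by the class label in the last column; it writes a small synthetic file so that it runs on its own, then batches it with DataLoader.

```python
import os
import tempfile

import numpy as np
import torch
from torch.utils.data import DataLoader, Dataset


class NuclearSampleDataset(Dataset):
    """Hypothetical variant: each row is 500 features followed by a class label."""

    def __init__(self, data_file):
        self.data = np.load(data_file)

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        row = self.data[idx]
        features = torch.tensor(row[:-1], dtype=torch.float32).unsqueeze(0)  # (1, 500)
        label = torch.tensor(int(row[-1]))                                   # scalar, int64
        return features, label


# Build a small synthetic file (assumption) so the example is self-contained
rng = np.random.default_rng(0)
rows = np.hstack([rng.standard_normal((10, 500)), rng.integers(0, 4, (10, 1))])
path = os.path.join(tempfile.mkdtemp(), 'nuclear.npy')
np.save(path, rows)

dataset = NuclearSampleDataset(path)
loader = DataLoader(dataset, batch_size=4, shuffle=False)
features, labels = next(iter(loader))
print(len(dataset), features.shape, labels.shape)  # 10 torch.Size([4, 1, 500]) torch.Size([4])
```

DataLoader's default collation stacks the per-sample tensors, so each batch already has the (batch, channels, length) layout Conv1d expects and 1-D labels for nn.CrossEntropyLoss.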

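Alternatively, you can simply define a function that splits the data into a training set and a test set; this is convenient and keeps the code portable. As for the exact shape the data should have at each step, the error messages raised when running the code show which dimension needs adjusting.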
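One possible shape for such a function, assuming the data and labels are NumPy arrays with one row per sample: split_train_test is a hypothetical helper that shuffles the row indices with a seeded generator and slices off the test set.

```python
import numpy as np


def split_train_test(data, labels, test_ratio=0.3, seed=42):
    """Hypothetical helper: shuffle the rows, then split off a test set."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(data))
    n_test = int(np.ceil(test_ratio * len(data)))  # round the test size up, as train_test_split does
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return data[train_idx], data[test_idx], labels[train_idx], labels[test_idx]


data = np.arange(20).reshape(10, 2)   # row i is [2i, 2i + 1]
labels = np.arange(10)                # label i belongs to row i
x_train, x_test, y_train, y_test = split_train_test(data, labels)
print(x_train.shape, x_test.shape)    # (7, 2) (3, 2)
```

Indexing data and labels with the same index arrays keeps every row paired with its label.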