1. Script analysis

# Start all Hadoop daemons

sbin/start-all.sh

--------------

libexec/hadoop-config.sh

start-dfs.sh # starts namenode, datanode, secondarynamenode

start-yarn.sh # starts nodemanager, resourcemanager
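In Hadoop 2.x, start-all.sh is itself marked deprecated and does little more than run the two scripts above, HDFS first and YARN second. A minimal sketch of that behavior, assuming both scripts sit in one directory passed as an argument (the real script locates them through HADOOP_HDFS_HOME and HADOOP_YARN_HOME after sourcing libexec/hadoop-config.sh); start_all_sketch is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical sketch of start-all.sh: run start-dfs.sh, then start-yarn.sh,
# skipping a script that is not present. The sbin directory is passed in as $1
# (an assumption; the real script derives the paths from its environment).

start_all_sketch() {
  local sbin_dir=$1 script
  for script in start-dfs.sh start-yarn.sh; do
    if [ -f "$sbin_dir/$script" ]; then
      bash "$sbin_dir/$script"   # HDFS daemons first, then YARN daemons
    fi
  done
}
```

For example, `start_all_sketch "$HADOOP_PREFIX/sbin"` would run the two real scripts in the same order as start-all.sh.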

# Starts namenode, datanode, secondarynamenode

sbin/start-dfs.sh

--------------

libexec/hadoop-config.sh

sbin/hadoop-daemons.sh --config .. --hostnames .. start namenode ...

sbin/hadoop-daemons.sh --config .. start datanode ... # no --hostnames: the hosts come from the slaves file

sbin/hadoop-daemons.sh --config .. --hostnames .. start secondarynamenode ...

sbin/hadoop-daemons.sh --config .. --hostnames .. start zkfc ... # only if dfs.ha.automatic-failover.enabled is true
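Which hosts each call targets is decided from the configuration. The dry-run sketch below mirrors those decisions. It takes as arguments the values the real script reads with `hdfs getconf -namenodes`, `hdfs getconf -secondarynamenodes` and `hdfs getconf -confKey dfs.ha.automatic-failover.enabled`, and only prints the hadoop-daemons.sh calls; plan_start_dfs is a hypothetical name, and the JournalNode handling of the real script is left out:

```shell
#!/usr/bin/env bash
# Hypothetical dry-run sketch of the host selection in start-dfs.sh (Hadoop 2.x).
#   $1: NameNode hosts            (real script: hdfs getconf -namenodes)
#   $2: SecondaryNameNode hosts   (real script: hdfs getconf -secondarynamenodes)
#   $3: value of dfs.ha.automatic-failover.enabled
# Only prints the hadoop-daemons.sh calls it would make; JournalNodes are omitted.

plan_start_dfs() {
  local namenodes=$1 secondaries=$2 autoha=$3
  # NameNodes: started on an explicit host list.
  echo "hadoop-daemons.sh --hostnames '$namenodes' --script hdfs start namenode"
  # DataNodes: no host list, so hadoop-daemons.sh falls back to the slaves file.
  echo "hadoop-daemons.sh --script hdfs start datanode"
  # SecondaryNameNode: only if getconf reported at least one host.
  if [ -n "$secondaries" ]; then
    echo "hadoop-daemons.sh --hostnames '$secondaries' --script hdfs start secondarynamenode"
  fi
  # ZKFC: only when automatic HA failover is enabled (case-insensitive "true").
  if [ "$(printf '%s' "$autoha" | tr '[:upper:]' '[:lower:]')" = "true" ]; then
    echo "hadoop-daemons.sh --hostnames '$namenodes' --script hdfs start zkfc"
  fi
}
```

On a live cluster it could be fed with `plan_start_dfs "$(hdfs getconf -namenodes)" "$(hdfs getconf -secondarynamenodes 2>/dev/null)" "$(hdfs getconf -confKey dfs.ha.automatic-failover.enabled)"`. When dfs.namenode.secondary.http-address is left at its default 0.0.0.0:50090, the secondary NameNode host comes back as 0.0.0.0, which matches the "Stopping secondary namenodes [0.0.0.0]" line in the stop-dfs.sh output later in these notes.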

# Starts nodemanager, resourcemanager

sbin/start-yarn.sh

--------------

libexec/yarn-config.sh

sbin/yarn-daemon.sh start resourcemanager # on the local host only

sbin/yarn-daemons.sh start nodemanager # on every host in the slaves file

# Mainly calls hadoop-daemon.sh: hadoop-daemons.sh starts a daemon on multiple hosts, hadoop-daemon.sh on a single (local) host; both can start namenode, datanode and secondarynamenode

sbin/hadoop-daemons.sh

----------------------

libexec/hadoop-config.sh

sbin/slaves.sh # runs the command over ssh on every host listed in $HADOOP_CONF_DIR/slaves

hadoop-daemon.sh
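The fan-out itself is simple: slaves.sh reads the host list, runs the given command on every host over ssh in the background, prefixes each output line with the host name (the source of the `s202:` prefixes in the transcripts below) and waits for all of them; yarn-daemons.sh does the same with yarn-daemon.sh. A minimal sketch, assuming passwordless ssh and HADOOP_PREFIX set on every host; fan_out and DRY_RUN are hypothetical names, not Hadoop variables:

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the slaves.sh fan-out behind hadoop-daemons.sh / yarn-daemons.sh.
# Usage: fan_out HOSTS_FILE SCRIPT ACTION DAEMON
# Assumes passwordless ssh and that HADOOP_PREFIX is set on every remote host.
# DRY_RUN=1 (the default here) only prints what would run on each host.

fan_out() {
  local hosts_file=$1; shift          # remaining args: script action daemon
  local hosts host
  # Strip comments, surrounding whitespace and blank lines from the host list.
  mapfile -t hosts < <(sed 's/#.*$//; s/^[[:space:]]*//; s/[[:space:]]*$//; /^$/d' "$hosts_file")
  for host in "${hosts[@]}"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "$host: $*"
    else
      # Run remotely in the background; prefix every output line with the host name.
      ssh -n "$host" "\$HADOOP_PREFIX/sbin/$*" 2>&1 | sed "s/^/$host: /" &
    fi
  done
  wait   # block until every background ssh session has finished
}
```

For example, `DRY_RUN=0 fan_out "$HADOOP_CONF_DIR/slaves" hadoop-daemon.sh start datanode` would roughly correspond to `hadoop-daemons.sh start datanode`.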

# Mainly calls the hdfs command

sbin/hadoop-daemon.sh

-----------------------

libexec/hadoop-config.sh

bin/hdfs ....
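hadoop-daemon.sh starts bin/hdfs in the background with nohup and tracks the process through a log file and a pid file; yarn-daemon.sh does the same with a `yarn-` prefix. The sketch below reproduces that naming and the "already running" check done before a start, assuming the Hadoop 2.x defaults (pid directory /tmp, ident string equal to the user name); the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch of the file naming used by hadoop-daemon.sh / yarn-daemon.sh (Hadoop 2.x):
#   log: <log_dir>/<prefix>-<ident>-<command>-<hostname>.out
#   pid: <pid_dir>/<prefix>-<ident>-<command>.pid   (pid_dir defaults to /tmp)
# <prefix> is "hadoop" or "yarn"; <ident> defaults to the user name.

# Args: prefix ident command host log_dir
daemon_log_file() { echo "$5/$1-$2-$3-$4.out"; }

# Args: prefix ident command [pid_dir]
daemon_pid_file() { echo "${4:-/tmp}/$1-$2-$3.pid"; }

# The daemon counts as running if its pid file exists and `kill -0` succeeds
# on the pid stored in it.
daemon_is_running() {
  local pid_file=$1
  [ -f "$pid_file" ] && kill -0 "$(cat "$pid_file")" 2>/dev/null
}
```

For instance, `daemon_log_file hadoop henry namenode s201 /home/henry/soft/hadoop-2.7.2/logs` yields the same path that `hadoop-daemon.sh start namenode` prints in the transcript further down.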

# Mainly calls the yarn command; both yarn-daemon.sh and yarn-daemons.sh can start nodemanager and resourcemanager

sbin/yarn-daemon.sh

-----------------------

libexec/yarn-config.sh

bin/yarn

Stop all Hadoop daemons with stop-dfs.sh and stop-yarn.sh:

henry@s201:~$ xcall.sh jps

============= s201 jps =============

5828 ResourceManager

5462 NameNode

48363 Jps

5676 SecondaryNameNode

============= s202 jps =============

30546 NodeManager

68245 Jps

30415 DataNode

============= s203 jps =============

68448 Jps

30812 NodeManager

30687 DataNode

============= s204 jps =============

30289 DataNode

68112 Jps

30426 NodeManager

henry@s201:~$


henry@s201:~$ stop-dfs.sh

Stopping namenodes on [s201]

s201: stopping namenode

s204: stopping datanode

s203: stopping datanode

s202: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

henry@s201:~$ xcall.sh jps

============= s201 jps =============

48772 Jps

5828 ResourceManager

============= s202 jps =============

30546 NodeManager

68349 Jps

============= s203 jps =============

68553 Jps

30812 NodeManager

============= s204 jps =============

68217 Jps

30426 NodeManager

henry@s201:~$ stop-yarn.sh

stopping yarn daemons

stopping resourcemanager

s203: stopping nodemanager

s204: stopping nodemanager

s202: stopping nodemanager

no proxyserver to stop

henry@s201:~$ xcall.sh jps

============= s201 jps =============

48928 Jps

============= s202 jps =============

68453 Jps

============= s203 jps =============

68657 Jps

============= s204 jps =============

68315 Jps
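xcall.sh appears to be the author's own helper for running a command on every node; it is not part of Hadoop. Its output can be reduced to the daemons still alive per host, which makes "everything is stopped" easy to check. A hypothetical sketch, assuming the header format shown in the transcript above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn `xcall.sh jps`-style output (a "=== <host> jps ==="
# header followed by "<pid> <name>" lines) into "<host> <daemon>" pairs,
# ignoring the Jps process itself. Reads stdin, writes stdout.

list_daemons() {
  awk '
    /^=+ [^ ]+ jps =+$/ { host = $2; next }                   # header: remember host
    NF == 2 && $1 ~ /^[0-9]+$/ && $2 != "Jps" { print host, $2 }
  '
}
```

Usage: `xcall.sh jps | list_daemons` lists what is still running, and `[ -z "$(xcall.sh jps | list_daemons)" ] && echo "all daemons stopped"` confirms a full shutdown.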

Start all Hadoop daemons one at a time with hadoop-daemons.sh, hadoop-daemon.sh, yarn-daemons.sh and yarn-daemon.sh:

henry@s201:~$ xcall.sh jps

============= s201 jps =============

51938 Jps

============= s202 jps =============

70422 Jps

============= s203 jps =============

70618 Jps

============= s204 jps =============

70279 Jps

henry@s201:~$ hadoop-daemon.sh start namenode

starting namenode, logging to /home/henry/soft/hadoop-2.7.2/logs/hadoop-henry-namenode-s201.out

henry@s201:~$ jps

52048 Jps

51981 NameNode

henry@s201:~$ hadoop-daemon.sh start secondarynamenode

starting secondarynamenode, logging to /home/henry/soft/hadoop-2.7.2/logs/hadoop-henry-secondarynamenode-s201.out

henry@s201:~$ jps

52128 Jps

52081 SecondaryNameNode

51981 NameNode

henry@s201:~$ hadoop-daemons.sh start datanode

s204: starting datanode, logging to /home/henry/soft/hadoop-2.7.2/logs/hadoop-henry-datanode-s204.out

s203: starting datanode, logging to /home/henry/soft/hadoop-2.7.2/logs/hadoop-henry-datanode-s203.out

s202: starting datanode, logging to /home/henry/soft/hadoop-2.7.2/logs/hadoop-henry-datanode-s202.out

henry@s201:~$ xcall.sh jps

============= s201 jps =============

52208 Jps

52081 SecondaryNameNode

51981 NameNode

============= s202 jps =============

70608 Jps

70503 DataNode

============= s203 jps =============

70698 DataNode

70797 Jps

============= s204 jps =============

70359 DataNode

70458 Jps

henry@s201:~$ yarn-daemons.sh start nodemanager

s204: starting nodemanager, logging to /home/henry/soft/hadoop-2.7.2/logs/yarn-henry-nodemanager-s204.out

s203: starting nodemanager, logging to /home/henry/soft/hadoop-2.7.2/logs/yarn-henry-nodemanager-s203.out

s202: starting nodemanager, logging to /home/henry/soft/hadoop-2.7.2/logs/yarn-henry-nodemanager-s202.out

henry@s201:~$ xcall.sh jps

============= s201 jps =============

52081 SecondaryNameNode

52301 Jps

51981 NameNode

============= s202 jps =============

70675 NodeManager

70503 DataNode

70824 Jps

============= s203 jps =============

71013 Jps

70870 NodeManager

70698 DataNode

============= s204 jps =============

70531 NodeManager

70674 Jps

70359 DataNode

henry@s201:~$ yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /home/henry/soft/hadoop-2.7.2/logs/yarn-henry-resourcemanager-s201.out

henry@s201:~$ xcall.sh jps

============= s201 jps =============

52081 SecondaryNameNode

52596 Jps

52348 ResourceManager

51981 NameNode

============= s202 jps =============

70675 NodeManager

70503 DataNode

70861 Jps

============= s203 jps =============

71056 Jps

70870 NodeManager

70698 DataNode

============= s204 jps =============

70531 NodeManager

70359 DataNode

70717 Jps
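The manual sequence above can be collected into one script. A sketch that starts the daemons in the same order as the transcript above and stops them in reverse order (a design choice, not a Hadoop requirement), assuming the sbin scripts are on PATH on the master node; run, cluster_manual and DRY_RUN are hypothetical names:

```shell
#!/usr/bin/env bash
# Sketch: bring the cluster up one daemon type at a time, or take it down in
# reverse order. DRY_RUN=1 (the default here) prints each command instead of running it.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

cluster_manual() {
  local action=$1 i script daemon
  # hadoop-daemon.sh / yarn-daemon.sh act on this host only;
  # hadoop-daemons.sh / yarn-daemons.sh act on every host in the slaves file.
  local steps=(
    "hadoop-daemon.sh namenode"
    "hadoop-daemon.sh secondarynamenode"
    "hadoop-daemons.sh datanode"
    "yarn-daemons.sh nodemanager"
    "yarn-daemon.sh resourcemanager"
  )
  case $action in
    start)
      for ((i = 0; i < ${#steps[@]}; i++)); do
        read -r script daemon <<< "${steps[i]}"
        run "$script" start "$daemon"
      done ;;
    stop)
      for ((i = ${#steps[@]} - 1; i >= 0; i--)); do
        read -r script daemon <<< "${steps[i]}"
        run "$script" stop "$daemon"
      done ;;
    *)
      echo "usage: cluster_manual start|stop" >&2
      return 1 ;;
  esac
}
```

`DRY_RUN=0 cluster_manual start` would then issue the five commands from the transcript in order.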

2. Common commands

bin/hadoop

------------------------

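hadoop version // print the version

hadoop fs // generic file system client

hadoop jar // run a jar file

hadoop classpath // print the class path needed for the Hadoop jar and its libraries

hadoop checknative // check availability of the native Hadoop and compression libraries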

bin/hdfs

------------------------

dfs // same as hadoop fs

classpath

namenode -format

secondarynamenode

namenode

journalnode

zkfc

datanode

dfsadmin

haadmin

fsck

balancer

jmxget

mover

oiv

oiv_legacy

oev

fetchdt

getconf

groups

snapshotDiff

lsSnapshottableDir

portmap

nfs3

cacheadmin

crypto

storagepolicies

version

Common hdfs commands

--------------------

$>hdfs dfs -mkdir /user/centos/hadoop

$>hdfs dfs -ls -R /user/centos/hadoop

$>hdfs dfs -lsr /user/centos/hadoop # deprecated; same as -ls -R

$>hdfs dfs -put index.html /user/centos/hadoop

$>hdfs dfs -get /user/centos/hadoop/index.html a.html

$>hdfs dfs -rm -r -f /user/centos/hadoop
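The commands above form a typical round trip. A sketch that wraps them in one function, with DRY_RUN=1 (the default here) printing the hdfs commands instead of running them; hdfs_roundtrip, run and the `copy-of-` local name are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: create a directory, upload a file, list, download it under a new
# local name, then delete the directory. DRY_RUN=1 prints instead of running.

run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi; }

hdfs_roundtrip() {
  local local_file=$1 hdfs_dir=$2 name
  name=$(basename "$local_file")
  run hdfs dfs -mkdir -p "$hdfs_dir"                    # create dir and missing parents
  run hdfs dfs -put -f "$local_file" "$hdfs_dir"        # -f: overwrite an existing file
  run hdfs dfs -ls -R "$hdfs_dir"                       # recursive listing (replaces -lsr)
  run hdfs dfs -get "$hdfs_dir/$name" "copy-of-$name"   # download under a new local name
  run hdfs dfs -rm -r -f "$hdfs_dir"                    # delete the directory recursively
}
```

When deleting with a wildcard, quote the pattern, e.g. `hdfs dfs -rm -f '/usr/ubuntu/hadoop/*'`, so the local shell does not try to expand it; the HDFS client expands the glob against HDFS itself.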

- The hadoop fs command is equivalent to hdfs dfs

- Use hdfs dfs -mkdir -p /usr/ubuntu/hadoop to create a directory together with any missing parent directories:

henry@s201:~$ hdfs dfs -mkdir -p /usr/ubuntu/hadoop

henry@s201:~$ hdfs dfs -ls -R /

drwxr-xr-x - henry supergroup 0 2018-06-26 08:46 /usr

drwxr-xr-x - henry supergroup 0 2018-06-26 08:46 /usr/ubuntu

drwxr-xr-x - henry supergroup 0 2018-06-26 08:46 /usr/ubuntu/hadoop

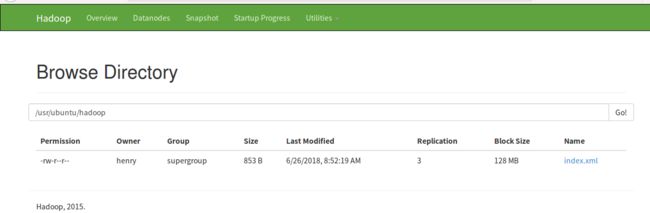

- Use hdfs dfs -put src dst to upload files to the Hadoop file system:

henry@s201:~$ hdfs dfs -help put

-put [-f] [-p] [-l] ... :

Copy files from the local file system into fs. Copying fails if the file already

exists, unless the -f flag is given.

Flags:

-p Preserves access and modification times, ownership and the mode.

-f Overwrites the destination if it already exists.

-l Allow DataNode to lazily persist the file to disk. Forces

replication factor of 1. This flag will result in reduced

durability. Use with care.

henry@s201:~$ hdfs dfs -put index.xml /usr/ubuntu/hadoop

henry@s201:~$ hdfs dfs -ls -R /

drwxr-xr-x - henry supergroup 0 2018-06-26 08:46 /usr

drwxr-xr-x - henry supergroup 0 2018-06-26 08:46 /usr/ubuntu

drwxr-xr-x - henry supergroup 0 2018-06-26 08:52 /usr/ubuntu/hadoop

-rw-r--r-- 3 henry supergroup 853 2018-06-26 08:52 /usr/ubuntu/hadoop/index.xml

- Use hdfs dfs -get to download files from the Hadoop file system to the local file system:

henry@s201:~$ hdfs dfs -help get

-get [-p] [-ignoreCrc] [-crc] ... :

Copy files that match the file pattern to the local name. is kept.

When copying multiple files, the destination must be a directory. Passing -p

preserves access and modification times, ownership and the mode.

henry@s201:~$ hdfs dfs -get /usr/ubuntu/hadoop a.xml

henry@s201:~$ ls -l a.xml

total 4

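-rw-r--r-- 1 henry henry 853 6月 26 08:58 index.xml

Because the source /usr/ubuntu/hadoop is a directory, -get creates a.xml as a local directory holding index.xml, which is why ls -l a.xml lists index.xml.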

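- Use hdfs dfs -rm to delete files. The log line "Deletion interval = 0 minutes" means trash is disabled (fs.trash.interval = 0), so files are removed immediately instead of being moved to .Trash: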

henry@s201:~$ hdfs dfs -rm -f /usr/ubuntu/hadoop/*

18/06/26 09:00:03 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /usr/ubuntu/hadoop/index.xml

henry@s201:~$ hdfs dfs -ls /usr/ubuntu/hadoop

henry@s201:~$