用pytorch实现一个简单的线性回归模型

我们使用pytorch实现一个简单的线性回归模型。(我是在jupyter notebook下做的,建议在这个环境下写代码)

首先我们先定义数据集:

#数据集中的样本值

x = [35.7, 55.9, 58.2, 81.9, 56.3, 48.9, 33.9, 21.8, 48.4, 60.4, 68.4]

#数据集中的标签

y = [0.5, 14.0, 15.0, 28.0, 11.0, 8.0, 3.0, -4.0, 6.0, 13.0, 21.0]

x = torch.tensor(x)

y = torch.tensor(y)创建线性模型与损失函数,在这里我们的损失函数是平方均方误差函数:

def model(x, w, b):

return w * x + b

def loss_fn(y_pre, y):

squared_diffs = (y_pre - y) ** 2

return squared_diffs.mean()做分析(非必须):

w = torch.ones(1)

b = torch.zeros(1)

y_pre = model(x, w, b)

y_pre

loss = loss_fn(y_pre, y)

loss之后我们要做梯度下降,做梯度下降,我就需要对w和b求偏导。

def loss_fn(y_pre, y):

squared_diffs = (y_pre - y) ** 2

return squared_diffs.mean()

def dloss_fn(y_pre, y):

dsq_diffs = 2 * (y_pre - y)

return dsq_diffs

def dmodel_dw(x, w, b):

return x

def dmodel_db(x, w, b):

return 1.0

def grad_fn(x, y, y_pre, w, b):

dloss_dw = dloss_fn(y_pre, y) * dmodel_dw(x, w, b)

dloss_db = dloss_fn(y_pre, y) * dmodel_db(x, w, b)

return torch.stack([dloss_dw.mean(), dloss_db.mean()])我们写最后训练函数:

def training_loop(n_epochs, learning_rate, params, x, y):

for epoch in range(1, n_epochs + 1):

w, b = params

y_pre = model(x, w, b)

loss = loss_fn(y_pre, y)

grad = grad_fn(x, y, y_pre, w, b)

params = params - learning_rate * grad

print('Epoch %d, Loss %f' % (epoch, float(loss)))

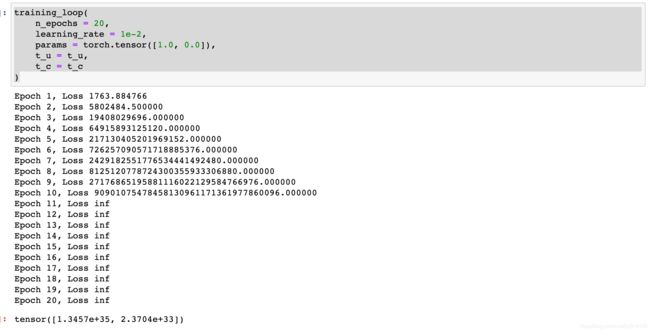

return params最后的训练效果:

全部代码示例:

import torch

#数据集中的样本值

x = [35.7, 55.9, 58.2, 81.9, 56.3, 48.9, 33.9, 21.8, 48.4, 60.4, 68.4]

#数据集中的标签

y = [0.5, 14.0, 15.0, 28.0, 11.0, 8.0, 3.0, -4.0, 6.0, 13.0, 21.0]

x = torch.tensor(x)

y = torch.tensor(y)

def model(x, w, b):

return w * x + b

def loss_fn(y_pre, y):

squared_diffs = (y_pre - y) ** 2

return squared_diffs.mean()

w = torch.ones(1)

b = torch.zeros(1)

y_pre = model(x, w, b)

y_pre

loss = loss_fn(y_pre, y)

loss

def loss_fn(y_pre, y):

squared_diffs = (y_pre - y) ** 2

return squared_diffs.mean()

def dloss_fn(y_pre, y):

dsq_diffs = 2 * (y_pre - y)

return dsq_diffs

def dmodel_dw(x, w, b):

return x

def dmodel_db(x, w, b):

return 1.0

def grad_fn(x, y, y_pre, w, b):

dloss_dw = dloss_fn(y_pre, y) * dmodel_dw(x, w, b)

dloss_db = dloss_fn(y_pre, y) * dmodel_db(x, w, b)

return torch.stack([dloss_dw.mean(), dloss_db.mean()])

def training_loop(n_epochs, learning_rate, params, x, y):

for epoch in range(1, n_epochs + 1):

w, b = params

y_pre = model(x, w, b)

loss = loss_fn(y_pre, y)

grad = grad_fn(x, y, y_pre, w, b)

params = params - learning_rate * grad

print('Epoch %d, Loss %f' % (epoch, float(loss)))

return params

training_loop(

n_epoches = 20,

learning_rate = 1e-4,

params = torch.tensor([1.0, 0.0]),

x = x,

y = y

)

training_loop(

n_epoches = 20,

learning_rate = 1e-2,

params = torch.tensor([1.0, 0.0]),

x = x,

y = y

)

参考资料:

《Deep Learning with PyTorch》Essential Excepts. Eli Stevens, Luca Antiga.