National College Student Big Data Skills Competition

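Contents

- 1.IP address configuration

- 2.Changing the VM hostname

- 3.Editing the hosts file

- 4.Configuring the firewall

- 5.Passwordless SSH login

- 5.JDK installation

- 6.Distributing files across the cluster

- 6.1 scp (secure copy)

- 6.2 rsync remote synchronization tool

- 7.Installing ZooKeeper

- 8.Installing Hadoop

- 9.Installing HBase

- 10 Building the data warehouse

- 10.1 Installing MySQL server on slave2

- Setting the MySQL password to empty

- 10.2 Installing Hive on slave1

- 11.Installing MySQL on CentOS 7

- 12.Deploying Hive

- Starting Hive

- Flume installation tutorial

- 1.1 Example implementation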

1.IP address configuration

- View the IP information

ip addr

ip a

- Edit the interface configuration file, then restart the network service

vi /etc/sysconfig/network-scripts/ifcfg-ens33

service network restart
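The contents of ifcfg-ens33 are not shown above. As a minimal sketch for a static address, the helper below prints the keys such a file usually contains. The helper name `make_ifcfg`, the default gateway 192.168.152.2, the netmask, and using the gateway as the DNS server are all assumptions (typical of a VMware NAT network); only the 192.168.152.x range appears elsewhere in this guide.

```bash
# make_ifcfg IP [GATEWAY] [NETMASK]
# Hypothetical helper: print a static-IP ifcfg file for interface ens33.
# Default gateway/netmask (and DNS = gateway) are assumptions for a VMware NAT network.
make_ifcfg() {
  local ip=$1
  local gateway=${2:-192.168.152.2}
  local netmask=${3:-255.255.255.0}
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=ens33
DEVICE=ens33
ONBOOT=yes
IPADDR=$ip
NETMASK=$netmask
GATEWAY=$gateway
DNS1=$gateway
EOF
}
```

For example, `make_ifcfg 192.168.152.101` prints the file for master. Compare the output with the existing file before overwriting it, because the generated file drops keys such as UUID that the installer may have written.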

2.Changing the VM hostname

- Run the following command on each node, using that node's own name (master, slave1 or slave2)

hostnamectl set-hostname master

- Open the configuration file and set:

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=master

- To make the change take effect immediately (in a new shell), run:

bash

3.Editing the hosts file

- Open the file in an editor and add one line per node, in the form `IP-address hostname`:

vi /etc/hosts
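The hosts entries themselves are not listed above. A minimal sketch, assuming master, slave1 and slave2 use 192.168.152.101 to .103 (only .101 appears in this guide, in the HBase web UI address), prints the three lines and appends only the ones a file is missing. `make_hosts` and `append_missing_hosts` are hypothetical helpers.

```bash
# make_hosts: print /etc/hosts entries for the three-node cluster.
# The IP addresses are assumptions; replace them with your own.
make_hosts() {
  local -a names=(master slave1 slave2)
  local -a ips=(192.168.152.101 192.168.152.102 192.168.152.103)
  local i
  for i in "${!names[@]}"; do
    printf '%s %s\n' "${ips[$i]}" "${names[$i]}"
  done
}

# append_missing_hosts FILE: add only the entries FILE does not already
# contain as exact lines, so running it twice creates no duplicates.
append_missing_hosts() {
  local file=$1 line
  while IFS= read -r line; do
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
  done < <(make_hosts)
}
```

Run `append_missing_hosts /etc/hosts` as root on every node so all three machines resolve each other by name.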

4.Configuring the firewall

- Check the status

systemctl status firewalld

- Stop it temporarily (until the next boot)

systemctl stop firewalld

- Start it temporarily

systemctl start firewalld

- Disable it at boot

systemctl disable firewalld

- Enable it at boot

systemctl enable firewalld


5.Passwordless SSH login

- Basic syntax

ssh <IP address of the other machine>

- Configuration

(1) Generate the public and private keys:

ssh-keygen -t rsa

Press Enter three times to accept the defaults. This creates two files in ~/.ssh: id_rsa (private key) and id_rsa.pub (public key).

(2) Copy the public key to every machine you want to log in to without a password (including the local one):

ssh-copy-id master

ssh-copy-id slave1

ssh-copy-id slave2

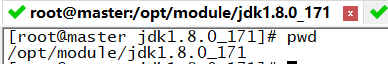

5.JDK installation

- Create two directories under /opt, software and module:

mkdir /opt/software  # holds the compressed archives

mkdir /opt/module  # holds the extracted software

(1) Upload the JDK archive to /opt/software and extract it into /opt/module:

tar -zxvf /opt/software/jdk-8u171-linux-x64.tar.gz -C /opt/module/

(2) Open /etc/profile

vi /etc/profile

(3) Append the JDK path to the end of the profile

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_171

export PATH=$PATH:$JAVA_HOME/bin

(4) Apply the changes

source /etc/profile

(5) Verify the JDK installation

java -version

(6) Distribute the JDK to slave1 (repeat for slave2)

scp -r /opt/module slave1:/opt/module
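Sections 5, 8, 9 and 10 all append export lines to /etc/profile, and re-running a step appends them a second time. A small sketch that adds a line only when the file does not already contain it exactly; `append_once` and `add_java_profile` are hypothetical helper names.

```bash
# append_once FILE LINE: append LINE to FILE unless an identical line exists.
append_once() {
  local file=$1 line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# add_java_profile [FILE]: write the JDK lines from step (3) idempotently.
# Single quotes keep $PATH and $JAVA_HOME literal in the profile.
add_java_profile() {
  local file=${1:-/etc/profile}
  append_once "$file" '#JAVA_HOME'
  append_once "$file" 'export JAVA_HOME=/opt/module/jdk1.8.0_171'
  append_once "$file" 'export PATH=$PATH:$JAVA_HOME/bin'
}
```

Run `add_java_profile` as root on each node, then `source /etc/profile`.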

6.Distributing files across the cluster

6.1 scp (secure copy)

(1) Definition

scp copies data from one server to another (from server1 to server2).

(2) Basic syntax

scp -r $pdir/$fname $user@$host:$pdir/$fname

(command, recursive flag, source path/name, destination user@host:destination path/name)

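6.2 rsync remote synchronization tool

rsync is mainly used for backups and mirroring. It is fast, skips content that is already identical on the destination, and supports symbolic links.

Difference between rsync and scp: copying with rsync is faster than with scp because rsync only transfers the files that differ, whereas scp copies every file each time.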

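(1) Basic syntax

rsync -rvl $pdir/$fname $user@$host:$pdir/$fname

(command, options, source path/name, destination user@host:destination path/name)

-r recursive

-v verbose, show the copy progress

-l copy symbolic links as links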

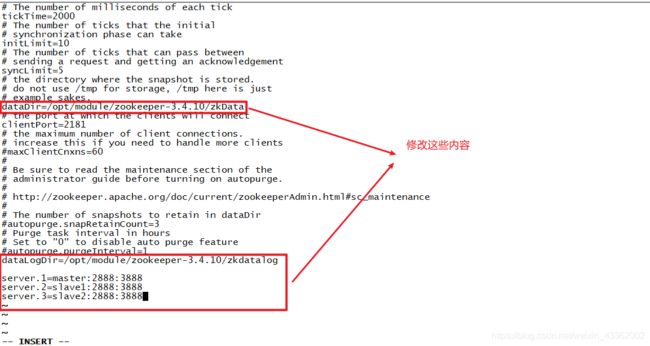
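Following the $pdir/$fname pattern above, a common convenience is a small distribution script that resolves the absolute path and runs rsync once per host. This is a sketch: the name `xsync`, the default host list (slave1, slave2) and the `XSYNC_DRY_RUN` switch, which only prints the commands, are assumptions. It relies on the passwordless SSH from section 5.

```bash
# Hosts to copy to, split on spaces. The default list is an assumption.
XSYNC_HOSTS=${XSYNC_HOSTS:-"slave1 slave2"}

# xsync PATH...: copy each PATH to the same absolute location on every host
# in XSYNC_HOSTS with rsync. If XSYNC_DRY_RUN is non-empty, only print the
# rsync commands instead of running them.
xsync() {
  if [ "$#" -eq 0 ]; then
    echo "usage: xsync PATH..." >&2
    return 1
  fi
  local path pdir fname host
  for path in "$@"; do
    if [ ! -e "$path" ]; then
      echo "xsync: $path does not exist" >&2
      return 1
    fi
    # $pdir: absolute parent directory with symlinks resolved; $fname: last component
    pdir=$(cd -P "$(dirname "$path")" && pwd)
    fname=$(basename "$path")
    for host in $XSYNC_HOSTS; do
      if [ -n "${XSYNC_DRY_RUN:-}" ]; then
        echo "rsync -rvl $pdir/$fname $host:$pdir/"
      else
        # Trailing slash on the target: $fname ends up inside $pdir remotely
        rsync -rvl "$pdir/$fname" "$host:$pdir/"
      fi
    done
  done
}
```

For example, `xsync /opt/module/jdk1.8.0_171` copies the JDK to the same path on slave1 and slave2; the parent directory (/opt/module) must already exist there. Rerunning it only transfers files that changed.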

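7.Installing ZooKeeper

- Install the JDK (see section 5)

- Copy the ZooKeeper archive to /opt/software on the Linux machine

- Extract it to the target directory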

tar -zxvf /opt/software/zookeeper-3.4.10.tar.gz -C /opt/module/

- In /opt/module/zookeeper-3.4.10/conf, rename zoo_sample.cfg to zoo.cfg:

mv zoo_sample.cfg zoo.cfg

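- In /opt/module/zookeeper-3.4.10/, create the zkData and zkdatalog directories, and an empty myid file inside zkData:

mkdir zkData zkdatalog

touch zkData/myid

- Write into myid the number of this host's server.N entry in zoo.cfg (a single number, different on every node):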

- Start the ZooKeeper cluster

On every node of the ZooKeeper cluster, run the start script:

bin/zkServer.sh start

Check the status (one node should report Mode: leader, the others Mode: follower):

bin/zkServer.sh status
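The zoo.cfg edits are not shown above. A sketch of the cluster-specific part, assuming dataDir and dataLogDir point at the zkData and zkdatalog directories created earlier, the usual ports 2888/3888, and the numbering master=1, slave1=2, slave2=3 (all assumptions). `zk_cluster_cfg` and `zk_myid` are hypothetical helpers.

```bash
ZK_HOME=${ZK_HOME:-/opt/module/zookeeper-3.4.10}
ZK_NODES=(master slave1 slave2)   # assumed order: server.1, server.2, server.3

# zk_cluster_cfg: print the cluster lines to set in conf/zoo.cfg.
zk_cluster_cfg() {
  local i
  echo "dataDir=$ZK_HOME/zkData"
  echo "dataLogDir=$ZK_HOME/zkdatalog"
  for i in "${!ZK_NODES[@]}"; do
    echo "server.$((i + 1))=${ZK_NODES[$i]}:2888:3888"
  done
}

# zk_myid HOST: print the server number for HOST (its 1-based position in ZK_NODES).
zk_myid() {
  local i
  for i in "${!ZK_NODES[@]}"; do
    if [ "${ZK_NODES[$i]}" = "$1" ]; then
      echo $((i + 1))
      return 0
    fi
  done
  echo "zk_myid: unknown host $1" >&2
  return 1
}
```

On each node, `zk_myid "$(hostname)" > /opt/module/zookeeper-3.4.10/zkData/myid` writes the matching number. Replace the sample's existing dataDir line rather than adding a second one. Port 2888 carries follower-to-leader traffic and 3888 is used for leader election.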

8.Installing Hadoop

- Extract it to the target directory

tar -zxvf hadoop-2.7.3.tar.gz -C /opt/module/

- Set the environment variables

(1) Get the Hadoop path

Change into the Hadoop installation directory and run pwd.

(2) Edit the profile

vi /etc/profile

Add the following lines to the file:

##HADOOP

export HADOOP_HOME=/opt/module/hadoop-2.7.3

export CLASSPATH=$CLASSPATH:$HADOOP_HOME/lib

export PATH=$PATH:$HADOOP_HOME/bin

(3) Apply the changes

source /etc/profile

(4) Test

hadoop version

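- Set JAVA_HOME explicitly in etc/hadoop/hadoop-env.sh:

export JAVA_HOME=/opt/module/jdk1.8.0_171

- Edit etc/hadoop/core-site.xml and add the following properties inside `<configuration>`:

<!-- Address of the HDFS NameNode -->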

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000</value>

</property>

<!-- Directory where Hadoop stores the files it generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-2.7.3/hdfs/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>fs.checkpoint.period</name>

<value>60</value>

</property>

<property>

<name>fs.checkpoint.size</name>

<value>67108864</value>

</property>

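Explanation: these properties set the address of the HDFS NameNode and the directory for the files Hadoop generates at runtime.

1) fs.default.name is the URI of the NameNode, in the form hdfs://hostname:port/. (Hadoop 2.x treats it as a deprecated alias of fs.defaultFS but still accepts it.)

2) hadoop.tmp.dir: Hadoop's default base directory for temporary files; it is best to set it explicitly. If a DataNode inexplicably fails to start, for example after adding a node, deleting the tmp directory under this path usually fixes it. If you delete this directory on the NameNode machine, however, you must format the NameNode again.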

- Edit etc/hadoop/yarn-site.xml:

<!-- How reducers fetch map output (the shuffle service) -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Host of the YARN ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>slave2</value>

</property>

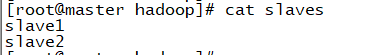

(8) Write the slaves file (etc/hadoop/slaves), listing the DataNode hosts one per line:

vi slaves

- Edit etc/hadoop/hdfs-site.xml:

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Host and port of the Hadoop SecondaryNameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>slave1:9001</value>

</property>

(8) mapred-site.xml

First copy the template to mapred-site.xml, then edit it:

cp mapred-site.xml.template mapred-site.xml

Edit mapred-site.xml:

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

(9) Distribute Hadoop to the slave nodes (repeat for slave2):

scp -r /opt/module/hadoop-2.7.3/ slave1:/opt/module/

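(10) Configure the environment variables on slave1 and slave2 (the same JAVA_HOME and HADOOP_HOME lines as on master, then source /etc/profile):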

vi /etc/profile

Format the NameNode on master (only once, before the first start):

hadoop namenode -format
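Formatting should happen only once: re-running it gives the NameNode a new clusterID, and DataNodes that still hold the old one refuse to register. A guard sketch, assuming the default dfs.namenode.name.dir, which is ${hadoop.tmp.dir}/dfs/name (so /opt/module/hadoop-2.7.3/hdfs/tmp/dfs/name with the core-site.xml above). `needs_format` is a hypothetical helper.

```bash
# needs_format [NAME_DIR]: succeed (status 0) only if the NameNode directory
# has not been formatted yet, i.e. it contains no current/VERSION file.
needs_format() {
  local name_dir=${1:-/opt/module/hadoop-2.7.3/hdfs/tmp/dfs/name}
  [ ! -f "$name_dir/current/VERSION" ]
}
```

Usage on master: `needs_format && hadoop namenode -format`.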

(11) Start the cluster

Start HDFS (on master):

sbin/start-dfs.sh

Start YARN on the ResourceManager node (slave2, as set by yarn.resourcemanager.hostname above):

sbin/start-yarn.sh

Then open the NameNode web UI at http://<master IP address>:50070.
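To confirm which daemons are up, jps on each node lists the running Java processes as `pid ClassName`. A sketch that reads jps output on stdin and prints the expected daemons that are missing; `missing_daemons` is a hypothetical helper, and which daemon belongs on which host follows this guide's configuration (NameNode on master, SecondaryNameNode on slave1, ResourceManager on slave2, DataNode and NodeManager on the hosts in slaves).

```bash
# missing_daemons NAME...: read jps output ("<pid> <ClassName>" per line)
# from stdin and print every NAME that is not among the running class names.
# Exit status is 1 if anything is missing, 0 otherwise.
missing_daemons() {
  local running name status=0
  running=$(awk '{print $2}')
  for name in "$@"; do
    if ! grep -qxF -- "$name" <<< "$running"; then
      echo "$name"
      status=1
    fi
  done
  return "$status"
}
```

A non-interactive ssh command normally does not read /etc/profile, so call jps by its full path, for example `ssh slave2 /opt/module/jdk1.8.0_171/bin/jps | missing_daemons ResourceManager`, or `jps | missing_daemons NameNode` on master. No output means everything expected is running.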

9.Installing HBase

- Extract HBase

tar -zxvf hbase-1.2.4-bin.tar.gz -C /opt/module/

- Edit conf/hbase-site.xml:

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.master</name>

<value>hdfs://master:6000</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,slave1,slave2</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/opt/module/zookeeper-3.4.10</value>

</property>

</configuration>

- Configure conf/regionservers

List every host that should run an HRegionServer, one host per line. The servers listed here start and stop together with the cluster.

- Copy the Hadoop configuration files into HBase's conf directory. Run both commands from inside HBase's conf directory, and note the trailing dot (.):

cp /opt/module/hadoop-2.7.3/etc/hadoop/core-site.xml .

cp /opt/module/hadoop-2.7.3/etc/hadoop/hdfs-site.xml .

- Distribute HBase (repeat for slave2)

scp -r /opt/module/hbase-1.2.4/ slave1:/opt/module

- Configure the environment variables

Edit /etc/profile again and add:

#set hbase environment

export HBASE_HOME=/opt/module/hbase-1.2.4

export PATH=$PATH:$HBASE_HOME/bin

- Apply the environment variables

source /etc/profile

- Start HBase. Run this on master (make sure Hadoop and ZooKeeper are already running):

bin/start-hbase.sh

Then open the HBase web UI:

http://192.168.152.101:16010/master-status
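hbase.rootdir has to point at the same NameNode as fs.default.name (hdfs://master:9000 in this guide), and because core-site.xml was copied into HBase's conf directory, both values can be compared before starting. A sketch with two hypothetical helpers; `get_prop` assumes each `<name>` and `<value>` element sits on its own line, as in the snippets above, and is not a general XML parser.

```bash
# get_prop FILE NAME: print the <value> of the Hadoop/HBase property NAME.
# Assumes one <name>...</name> or <value>...</value> element per line.
get_prop() {
  awk -v want="$2" '
    /<\/property>/ { name = "" }
    /<name>/ {
      name = $0
      sub(/.*<name>[ \t]*/, "", name)
      sub(/[ \t]*<\/name>.*/, "", name)
    }
    /<value>/ && name == want {
      value = $0
      sub(/.*<value>[ \t]*/, "", value)
      sub(/[ \t]*<\/value>.*/, "", value)
      print value
      exit
    }
  ' "$1"
}

# check_rootdir CONF_DIR: verify that hbase.rootdir lives under fs.default.name.
check_rootdir() {
  local conf=$1 fs root
  fs=$(get_prop "$conf/core-site.xml" fs.default.name)
  fs=${fs%/}   # tolerate a trailing slash in hdfs://host:port/
  root=$(get_prop "$conf/hbase-site.xml" hbase.rootdir)
  case "$root" in
    "$fs"/*) echo "ok: $root is on $fs" ;;
    *) echo "mismatch: hbase.rootdir=$root, fs.default.name=$fs" >&2; return 1 ;;
  esac
}
```

Run it as `check_rootdir /opt/module/hbase-1.2.4/conf` after copying the Hadoop files.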

10 Building the data warehouse

master acts as the client

slave1 acts as the Hive server

slave2 runs the MySQL server

10.1 Installing MySQL server on slave2

- Install wget, used to download files

yum -y install wget

- Download the MySQL repository package

wget http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

- Install the mysql-community-release-el7-5.noarch.rpm package

rpm -ivh mysql-community-release-el7-5.noarch.rpm

- Check that the repository files exist

ls -1 /etc/yum.repos.d/mysql-community*

/etc/yum.repos.d/mysql-community.repo

/etc/yum.repos.d/mysql-community-source.repo

- Install MySQL

yum install mysql-server

- Start the service

Reload all modified unit files:

systemctl daemon-reload

Start the service:

systemctl start mysqld

Enable it at boot:

systemctl enable mysqld

Setting the MySQL password to empty

1.Check whether the MySQL service is running; if it is, stop it.

// Check the MySQL service status

[root@mytestlnx02 ~]# ps -ef | grep -i mysql

root 22972 1 0 14:18 pts/0 00:00:00 /bin/sh /usr/bin/mysqld_safe --datadir=/var/lib/mysql --socket=/var/lib/mysql/mysql.sock --pid-file=/var/run/mysqld/mysqld.pid --basedir=/usr --user=mysql

mysql 23166 22972 0 14:18 pts/0 00:00:00 /usr/sbin/mysqld --basedir=/usr --datadir=/var/lib/mysql --plugin-dir=/usr/lib/mysql/plugin --user=mysql --log-error=/var/log/mysqld.log --pid-file=/var/run/mysqld/mysqld.pid --socket=/var/lib/mysql/mysql.sock

root 23237 21825 0 14:22 pts/0 00:00:00 grep -i mysql

// Stop the service

[root@mytestlnx02 ~]# systemctl stop mysqld

[root@mytestlnx02 ~]#

- Edit the MySQL configuration file my.cnf

my.cnf is usually at /etc/my.cnf; in some versions it is at /etc/mysql/my.cnf.

Add these 2 lines to the configuration file:

[mysqld]

skip-grant-tables

This makes MySQL skip password verification at login.

Then start the MySQL service and log in:

[root@mytestlnx02 ~]# systemctl start mysqld

[root@mytestlnx02 ~]#

[root@mytestlnx02 ~]# mysql -u root

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

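- Change the password

Switch to the mysql database and update the user's password (replace root_password with the password you want):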

mysql> use mysql;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> update mysql.user set authentication_string=password('root_password') where user='root';

Query OK, 1 row affected, 1 warning (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 1

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> exit

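- Restart the MySQL service

First comment out or delete the 2 lines you added to the configuration file, then restart the MySQL service. You can then log in with the password you just set.

[root@mytestlnx02 ~]# systemctl restart mysqld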

[root@mytestlnx02 ~]#

[root@mytestlnx02 ~]# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Check the MySQL installation paths:

whereis mysql

/usr/bin/mysql /usr/lib64/mysql /usr/share/mysql /usr/share/man/man1/mysql.1.gz

Allow remote connections (in the mysql client):

grant all privileges on *.* to 'root'@'%' with grant option;

Reload the privileges:

flush privileges;
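The two my.cnf edits above (adding skip-grant-tables under [mysqld], then removing it again) can be scripted with GNU sed. A sketch, assuming the file already has a [mysqld] section and that mysqld is stopped and restarted around the edits as described; `enable_skip_grant` and `disable_skip_grant` are hypothetical helpers.

```bash
# enable_skip_grant FILE: add skip-grant-tables right after the [mysqld]
# header, unless it is already present. Needs GNU sed (-i and "a text").
enable_skip_grant() {
  local cnf=$1
  if grep -qx 'skip-grant-tables' "$cnf"; then
    return 0
  fi
  sed -i '/^\[mysqld\]$/a skip-grant-tables' "$cnf"
  # Fail loudly if there was no [mysqld] section to append to
  grep -qx 'skip-grant-tables' "$cnf" || {
    echo "no [mysqld] section in $cnf" >&2
    return 1
  }
}

# disable_skip_grant FILE: delete the line again once the password is set.
disable_skip_grant() {
  sed -i '/^skip-grant-tables$/d' "$1"
}
```

With mysqld stopped, run `enable_skip_grant /etc/my.cnf`, start mysqld and change the password; then run `disable_skip_grant /etc/my.cnf` and restart mysqld.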

10.2 Installing Hive on slave1

- First extract Hive on master, then copy it to slave1.

tar -zxvf /opt/software/apache-hive-2.1.1-bin.tar.gz -C /opt/module/

scp -r /opt/module/apache-hive-2.1.1-bin/ slave1:/opt/module/

- Edit the profile

vi /etc/profile

#set hive

export HIVE_HOME=/opt/module/apache-hive-2.1.1-bin

export PATH=$PATH:$HIVE_HOME/bin

Apply the changes:

source /etc/profile

- Edit hive-env.sh

Change into the /opt/module/apache-hive-2.1.1-bin/conf directory.

Copy hive-env.sh.template to hive-env.sh, then set HADOOP_HOME in it:

cp hive-env.sh.template hive-env.sh

HADOOP_HOME=/opt/module/hadoop-2.7.3

- Edit hive-site.xml: copy the template, then replace its contents with the configuration below

cp hive-default.xml.template hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://slave2:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value></value>

</property>

</configuration>

Start the Hive metastore on slave1:

bin/hive --service metastore

Start Hive on master:

bin/hive

11.Installing MySQL on CentOS 7

- Download the MySQL repository package

wget http://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

- Install the MySQL repository

yum localinstall mysql57-community-release-el7-8.noarch.rpm

- Check that the MySQL repository was installed

yum repolist enabled | grep "mysql.*-community.*"

- Edit the yum repository file [optional]

vi /etc/yum.repos.d/mysql-community.repo

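This changes which MySQL version is installed. For example, to install 5.6, change enabled=1 to enabled=0 in the 5.7 section, then change enabled=0 to enabled=1 in the 5.6 section.

Note: enabled=1 marks the MySQL version that will be installed. You can also leave this file unchanged, in which case the highest MySQL version is installed by default.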

- Install MySQL

yum install mysql-community-server

- Reload all modified unit files

systemctl daemon-reload

- Start the MySQL service and enable it at boot

systemctl start mysqld  # start the service

systemctl enable mysqld  # start at boot

systemctl daemon-reload  # reload the unit files

- Find the temporary root password

grep 'temporary password' /var/log/mysqld.log

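- MySQL password security policy (after logging in with the temporary password via mysql -uroot -p):

Set the password strength to LOW: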

set global validate_password_policy=0;

Set the minimum password length:

set global validate_password_length=4;

Change the local root password:

alter user 'root'@'localhost' identified by '123456';

Exit with \q

- Grant privileges and apply them

Log in to MySQL with the new password:

mysql -uroot -p123456

Create a remote root user:

create user 'root'@'%' identified by '123456';

Allow remote connections:

grant all privileges on *.* to 'root'@'%' with grant option;

Reload the privileges:

flush privileges;
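The temporary root password is the last field of the matching line in the log. A sketch that extracts it, assuming the MySQL 5.7 log format in which that line ends with `root@localhost: <password>`; `mysql_temp_password` is a hypothetical helper.

```bash
# mysql_temp_password [LOG]: print the most recent temporary root password,
# i.e. the last whitespace-separated field of the last matching log line.
mysql_temp_password() {
  local log=${1:-/var/log/mysqld.log}
  grep 'temporary password' "$log" | tail -n 1 | awk '{print $NF}'
}
```

For the first login: `mysql -uroot -p"$(mysql_temp_password)"`. The quotes matter because generated passwords may contain shell metacharacters such as `;` or `>`.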

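12.Deploying Hive

- Extract Hive into the /opt/module/hive directory (create it first with mkdir -p /opt/module/hive if it does not exist)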

tar -zxvf /opt/software/apache-hive-2.1.1-bin.tar.gz -C /opt/module/hive/

- Distribute it to slave1

scp -r /opt/module/hive/apache-hive-2.1.1-bin/ slave1:/opt/module/hive/

- Edit the profile

vi /etc/profile

#set hive

export HIVE_HOME=/opt/module/hive/apache-hive-2.1.1-bin

export PATH=$PATH:$HIVE_HOME/bin

Apply the changes:

source /etc/profile

- Edit hive-env.sh

- Change into the /opt/module/hive/apache-hive-2.1.1-bin/conf directory

Copy hive-env.sh.template to hive-env.sh, then set HADOOP_HOME in it:

cp hive-env.sh.template hive-env.sh

HADOOP_HOME=/opt/module/hadoop-2.7.3

- Copy the MySQL JDBC driver jar into Hive's lib directory

Note: when transferring it with FX, the transfer type must be binary, not text.

mysql-connector-java-5.1.47-bin.jar

- Configure hive-site.xml on slave1 (the Hive server)

touch hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- HDFS location of the Hive warehouse (table data) -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive_remote/warehouse</value>

</property>

<!-- JDBC URL of the metastore database -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://slave2:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<!-- JDBC driver class, i.e. the MySQL driver -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<!-- MySQL user name -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<!-- MySQL password -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

</configuration>

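- Configuration on master (the client)

8.1 Resolve the jline version conflict by copying Hive's jline jar into Hadoop's YARN lib directory: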

cp /opt/module/hive/apache-hive-2.1.1-bin/lib/jline-2.12.jar /opt/module/hadoop-2.7.3/share/hadoop/yarn/lib/

8.2 Create hive-site.xml (in Hive's conf directory)

touch hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- HDFS location of the Hive warehouse (table data) -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive_remote/warehouse</value>

</property>

<!-- Whether to use a local metastore; the default is true -->

<property>

<name>hive.metastore.local</name>

<value>false</value>

</property>

<!-- Address of the metastore server -->

<property>

<name>hive.metastore.uris</name>

<value>thrift://slave1:9083</value>

</property>

</configuration>

Starting Hive

- Initialize the metastore schema on slave1 (the server)

schematool -dbType mysql -initSchema

- Start the Hive metastore service on slave1 (the server)

hive --service metastore &

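The command seems to hang; this is not an error. Press Enter to get the prompt back (the metastore keeps running in the background).

- On master (the client), start the Hive CLI with hive and check that it works:

show databases;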
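Because the metastore runs in the background, the client on master can be started before the metastore is listening. A sketch that waits until a TCP port accepts connections, using bash's /dev/tcp redirection (so it needs bash, not sh); port 9083 comes from hive.metastore.uris above, and `wait_for_port` is a hypothetical helper.

```bash
# wait_for_port HOST PORT [TRIES]: try once per second until HOST:PORT
# accepts a TCP connection; status 1 if it never does within TRIES attempts.
wait_for_port() {
  local host=$1 port=$2 tries=${3:-30} i
  for ((i = 0; i < tries; i++)); do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connect;
    # the descriptor is closed when the subshell exits.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, on master: `wait_for_port slave1 9083 60 && hive`.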

Flume installation tutorial

- Extract apache-flume-1.7.0-bin.tar.gz into /opt/module/

tar -zxf apache-flume-1.7.0-bin.tar.gz -C /opt/module/

- Rename apache-flume-1.7.0-bin to flume (in /opt/module)

mv apache-flume-1.7.0-bin flume

- In flume/conf, rename flume-env.sh.template to flume-env.sh, then configure flume-env.sh:

mv flume-env.sh.template flume-env.sh

vi flume-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_171

1.1 Example implementation

- Install the netcat tool

sudo yum install -y nc

- Check whether port 44444 is already in use

sudo netstat -tunlp | grep 44444

- Create the Flume agent configuration file flume-netcat-logger.conf

In the `flume` directory, create a job folder and change into it.

mkdir job

cd job/

Inside the job folder, create the Flume agent configuration file flume-netcat-logger.conf.

vim flume-netcat-logger.conf

Add the following content to flume-netcat-logger.conf.

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
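In this properties format every channel that a source or sink refers to must also be declared in `a1.channels`, and a misspelled channel name is easy to overlook. A rough checker sketch, assuming one `key = value` pair per line as in the file above; `check_flume_channels` is a hypothetical helper, not part of Flume.

```bash
# check_flume_channels FILE AGENT: report source/sink channel references
# that are not declared in "<AGENT>.channels". Status 1 on any problem.
check_flume_channels() {
  local file=$1 agent=$2
  awk -v a="$agent" '
    # Split "key = value", dropping comments and surrounding blanks
    {
      line = $0
      sub(/#.*/, "", line)
      eq = index(line, "=")
      if (eq == 0) next
      key = substr(line, 1, eq - 1)
      val = substr(line, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", key)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
    }
    key == (a ".channels") {
      n = split(val, ch, /[ \t]+/)
      for (i = 1; i <= n; i++) declared[ch[i]] = 1
      next
    }
    key ~ ("^" a "\\.sources\\.[^.]+\\.channels$") || key ~ ("^" a "\\.sinks\\.[^.]+\\.channel$") {
      n = split(val, ch, /[ \t]+/)
      for (i = 1; i <= n; i++) used[ch[i]] = key
    }
    END {
      bad = 0
      for (c in used)
        if (!(c in declared)) {
          print used[c] " -> undeclared channel " c
          bad = 1
        }
      exit bad
    }
  ' "$file"
}
```

Run it as `check_flume_channels job/flume-netcat-logger.conf a1`; no output and status 0 mean every reference is declared. To try the example itself, the Flume user guide starts an agent with `bin/flume-ng agent --conf conf --conf-file job/flume-netcat-logger.conf --name a1 -Dflume.root.logger=INFO,console` from the flume directory, after which `nc localhost 44444` in a second terminal sends lines that the logger sink prints.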