Data Warehouse: E-commerce Data Warehouse -- 2. Business Data Collection Platform

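Contents

- 1. E-commerce Business Overview
  - 1.1 E-commerce Business Process
  - 1.2 E-commerce Basics (SKU, SPU)
  - 1.3 E-commerce System Table Structure
    - 1.3.1 Activity Information Table (activity_info)
    - 1.3.2 Activity Rule Table (activity_rule)
    - 1.3.3 Activity-SKU Association Table (activity_sku)
    - 1.3.4 Platform Attribute Table (base_attr_info)
    - 1.3.5 Platform Attribute Value Table (base_attr_value)
    - 1.3.6 Level-1 Category Table (base_category1)
    - 1.3.7 Level-2 Category Table (base_category2)
    - 1.3.8 Level-3 Category Table (base_category3)
    - 1.3.9 Dictionary Table (base_dic)
    - 1.3.10 Province Table (base_province)
    - 1.3.11 Region Table (base_region)
    - 1.3.12 Brand Table (base_trademark)
    - 1.3.13 Shopping Cart Table (cart_info)
    - 1.3.14 Review Table (comment_info)
    - 1.3.15 Coupon Information Table (coupon_info)
    - 1.3.16 Coupon Scope Table (coupon_range)
    - 1.3.17 Coupon Claim Table (coupon_use)
    - 1.3.18 Favorites Table (favor_info)
    - 1.3.19 Order Detail Table (order_detail)
    - 1.3.20 Order Detail-Activity Association Table (order_detail_activity)
    - 1.3.21 Order Detail-Coupon Association Table (order_detail_coupon)
    - 1.3.22 Order Table (order_info)
    - 1.3.23 Order Refund Table (order_refund_info)
    - 1.3.24 Order Status Log Table (order_status_log)
    - 1.3.25 Payment Table (payment_info)
    - 1.3.26 Refund Payment Table (refund_payment)
    - 1.3.27 SKU Platform Attribute Table (sku_attr_value)
    - 1.3.28 SKU Information Table (sku_info)
    - 1.3.29 SKU Sales Attribute Table (sku_sale_attr_value)
    - 1.3.30 SPU Information Table (spu_info)
    - 1.3.31 SPU Sales Attribute Table (spu_sale_attr)
    - 1.3.32 SPU Sales Attribute Value Table (spu_sale_attr_value)
    - 1.3.33 User Address Table (user_address)
    - 1.3.34 User Information Table (user_info)
- 2. Business Data Collection Module
  - 2.1 MySQL Installation
    - 2.1.1 Install MySQL
    - 2.1.2 Configure MySQL
  - 2.2 Business Data Generation
    - 2.2.1 Connect to MySQL
    - 2.2.2 Table Creation Script
    - 2.2.3 Generate Business Data
  - 2.3 Sqoop Installation
    - 2.3.1 Download and Extract
    - 2.3.2 Edit the Configuration File
    - 2.3.3 Copy the JDBC Driver
    - 2.3.4 Verify Sqoop
    - 2.3.5 Test Whether Sqoop Can Connect to the Database
    - 2.3.6 Basic Sqoop Usage
  - 2.4 Synchronization Strategies
    - 2.4.1 Full Synchronization Strategy
    - 2.4.2 Incremental Synchronization Strategy
    - 2.4.3 New-and-Changed Strategy
    - 2.4.4 Special Strategy
  - 2.5 Importing Business Data into HDFS
    - 2.5.1 Analyzing Table Synchronization Strategies
    - 2.5.2 First-Day Business Data Sync Script
    - 2.5.3 Daily Business Data Sync Script
    - 2.5.4 Project Experience
- 3. Data Environment Preparation
  - 3.1 Hive Installation and Deployment
  - 3.2 Configuring the Hive Metastore in MySQL
    - 3.2.1 Copy the Driver
    - 3.2.2 Configure the Metastore to Use MySQL
  - 3.3 Start Hive
    - 3.3.1 Initialize the Metastore Database
    - 3.3.2 Start the Hive Client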

一、电商业务简介

1.1 电商业务流程

电商的业务流程可以以一个普通用户的浏览足迹为例进行说明,用户点开电商首页开始浏览,可能会通过分类查询也可能通过全文搜索寻找自己中意的商品,这些商品无疑都是存储在后台的管理系统中的。

当用户寻找到自己中意的商品,可能会想要购买,将商品添加到购物车后发现需要登录, 登录后对商品进行结算,这时候购物车的管理和商品订单信息的生成都会对业务数据库产生影响,会生成相应的订单数据和支付数据。

订单正式生成之后,还会对订单进行跟踪处理,直到订单全部完成。

电商的主要业务流程包括用户前台浏览商品时的商品详情的管理,用户商品加入购物车进行支付时用户个人中心&支付服务的管理,用户支付完成后订单后台服务的管理,这些流程涉及到了十几个甚至几十个业务数据表,甚至更多。

1.2 电商常识(SKU、SPU)

SKU=StockKeepingUni(t 库存量基本单位) : 现在已经被引申为产品统一编号的简称, 每种产品均对应有唯一的 SKU 号。

SPU(Standard Product Unit): 是商品信息聚合的最小单位,是一组可复用、易检索的 标准化信息集合。

例如:iPhoneX 手机就是 SPU。一台银色、128G 内存的、支持联通网络的 iPhoneX,就 是 SKU。

tips:

简单来说,SKU、SPU都是指一类产品;SPU表示商品名称、型号相同的一类商品;SKU是指商品名称、型号、各个参数属性也相同的一类商品。

SPU 表示一类商品。好处就是:可以共用商品图片,海报、销售属性等。

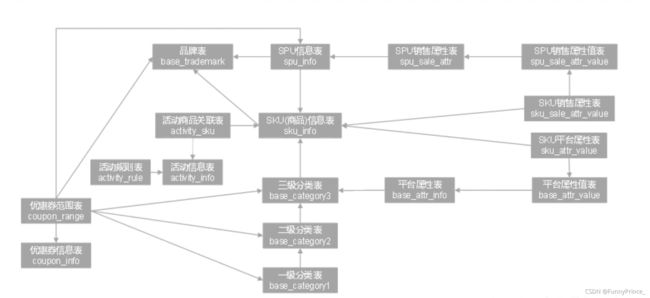

1.3 电商系统表结构

本电商数仓系统涉及到的业务数据表结构关系共 34 个表,以订单表、用户表、SKU 商品表、活动表和优惠券表为中心,延伸出了优惠券领用表、支付流水表、活动订单表、订单 详情表、订单状态表、商品评论表、编码字典表退单表、SPU 商品表等,用户表提供用户的详细信息,支付流水表提供该订单的支付详情,订单详情表提供订单的商品数量等情况,商品表给订单详情表提供商品的详细信息。

1.3.1 活动信息表(activity_info)

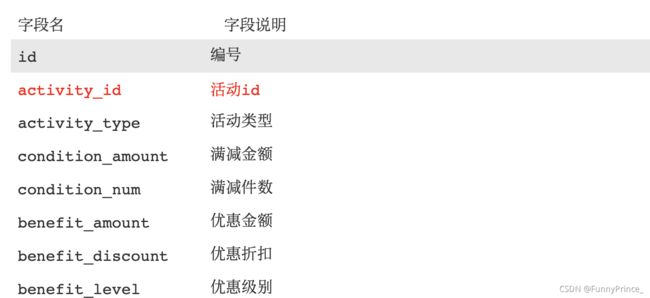

1.3.2 活动规则表(activity_rule)

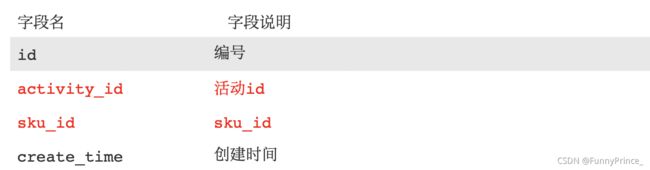

1.3.3 活动商品关联表(activity_sku)

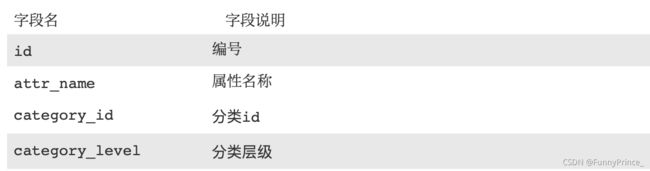

1.3.4 平台属性表(base_attr_info)

1.3.5 平台属性值表(base_attr_value)

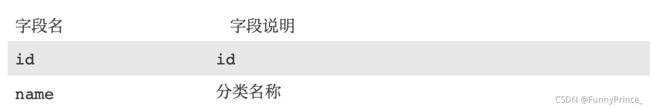

1.3.6 商品一级分类表(base_category1)

1.3.7 二级分类表(base_category2)

1.3.8 三级分类表(base_category3)

1.3.9 字典表(base_dic)

1.3.10 省份表(base_province)

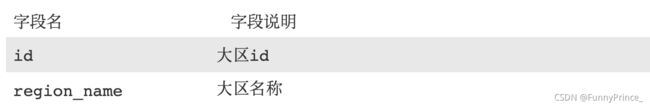

1.3.11 地区表(base_region)

1.3.12 品牌表(base_trademark)

1.3.13 购物车表(cart_info)

1.3.14 评价表(comment_info)

1.3.15 优惠券信息表(coupon_info)

1.3.16 优惠券优惠范围表(coupon_range)

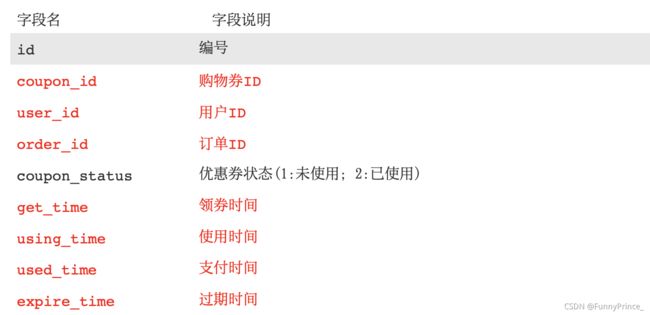

1.3.17 优惠券领用表(coupon_use)

1.3.18 收藏表(favor_info)

1.3.19 订单明细表(order_detail)

1.3.20 订单明细活动关联表(order_detail_activity)

1.3.21 订单明细优惠券关联表(order_detail_coupon)

1.3.22 订单表(order_info)

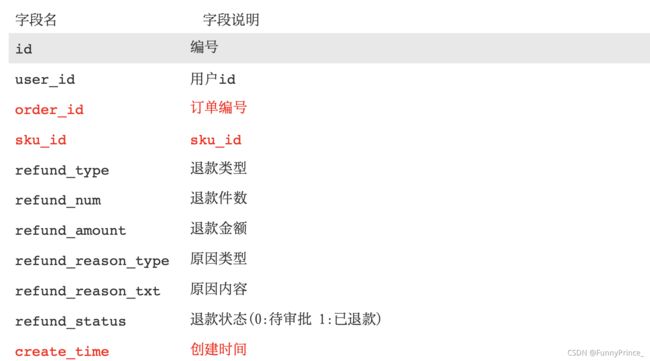

1.3.23 退单表(order_refund_info)

1.3.24 订单状态流水表(order_status_log)

1.3.25 支付表(payment_info)

1.3.26 退款表(refund_payment)

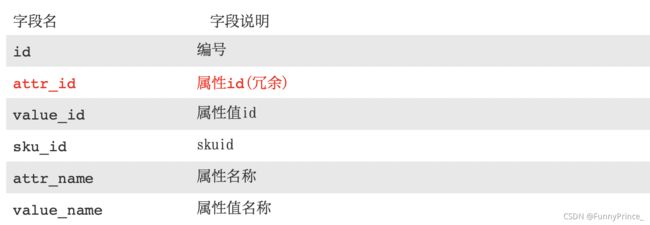

1.3.27 SKU平台属性表(sku_attr_info)

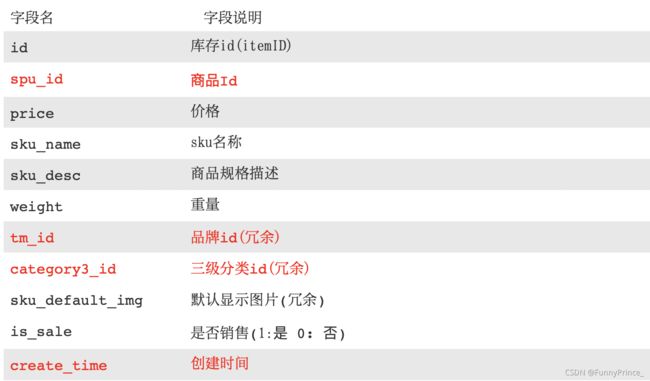

1.3.28 SKU信息表(sku_info)

1.3.29 SKU销售属性表(sku_sale_attr_value)

1.3.30 SPU信息表(spu_info)

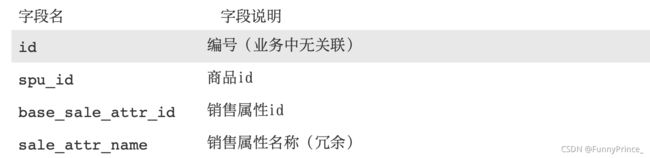

1.3.31 SPU销售属性表(spu_sale_attr)

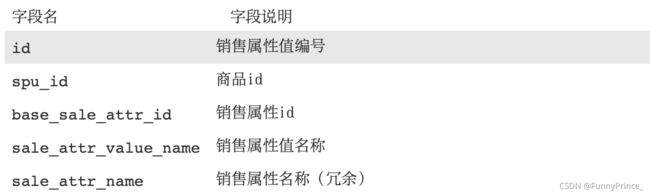

1.3.32 SPU销售属性值表(spu_sale_attr_value)

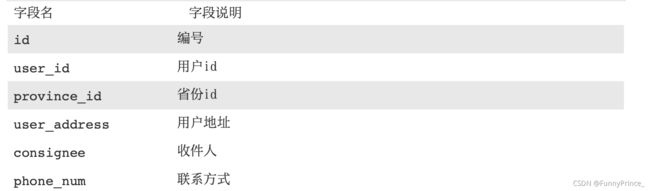

1.3.33 用户地址表(user_address)

1.3.34 用户信息表(user_info)

2. Business Data Collection Module

2.1 MySQL Installation

2.1.1 Install MySQL

- Remove any MySQL/MariaDB packages that come preinstalled with the system:

[xiaobai@hadoop102 mysql]$ rpm -qa | grep -i -E mysql\|mariadb | xargs -n1 sudo rpm -e --nodeps

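Tip: rpm -qa lists the installed rpm packages; grep -i ignores case and -E enables extended regular expressions, so the pattern matches mysql or mariadb; xargs -n1 takes the output of the previous command and passes it, one package name per invocation, as the argument to sudo rpm -e --nodeps.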
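Because rpm -e --nodeps removes packages without any dependency check, it can help to preview what the pipeline will run first. The sketch below is a dry run that swaps the removal for echo; the function name dry_run_uninstall and the package names are made up for illustration, and real input would come from rpm -qa.

```shell
#!/usr/bin/env bash
# Dry run of the uninstall pipeline: print the commands instead of running rpm -e.
# The package names passed in below are made-up sample input, not real rpm -qa output.
dry_run_uninstall() {
  printf '%s\n' "$@" \
    | grep -i -E 'mysql|mariadb' \
    | xargs -n1 echo sudo rpm -e --nodeps
}

# Only the MariaDB/MySQL entries are kept, and xargs -n1 builds one command per package.
dry_run_uninstall mariadb-libs-5.5.68 bash-4.2.46 MySQL-shared-1.0
```

Each printed line is one command that xargs -n1 would execute; removing the echo in the function restores the original behaviour.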


- Install the MySQL dependencies:

[xiaobai@hadoop102 mysql]$ sudo rpm -ivh 01_mysql-community-common-5.7.16-1.el7.x86_64.rpm

[xiaobai@hadoop102 mysql]$ sudo rpm -ivh 02_mysql-community-libs-5.7.16-1.el7.x86_64.rpm

[xiaobai@hadoop102 mysql]$ sudo rpm -ivh 03_mysql-community-libs-compat-5.7.16-1.el7.x86_64.rpm

- Install mysql-client:

[xiaobai@hadoop102 mysql]$ sudo rpm -ivh 04_mysql-community-client-5.7.16-1.el7.x86_64.rpm

- Install mysql-server:

[xiaobai@hadoop102 mysql]$ sudo rpm -ivh 05_mysql-community-server-5.7.16-1.el7.x86_64.rpm

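Note ⚠️:

If the installation fails with a signature (GPG key) error, the cause is old GPG keys installed by yum: since rpm 4.1, package signatures are checked automatically whenever a package is installed or upgraded.

Solution: append --force --nodeps to the install command, for example: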

[xiaobai@hadoop102 mysql]$ sudo rpm -ivh 02_mysql-community-libs-5.7.16-1.el7.x86_64.rpm --force --nodeps

- Start MySQL:

[xiaobai@hadoop102 mysql]$ sudo systemctl start mysqld

- Look up the temporary MySQL root password:

[xiaobai@hadoop102 mysql]$ sudo cat /var/log/mysqld.log | grep password

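2.1.2 Configure MySQL

Note ⚠️:

The goal of this configuration is to let the root user log in to MySQL from any host, as long as the password is correct.

- Log in to MySQL with the password you just looked up:

[xiaobai@hadoop102 mysql]$ mysql -uroot -p

Enter password: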


- First set a complex password (to satisfy the default MySQL password policy):

mysql> set password=password("H232%sd=55");

- Relax the MySQL password policy:

mysql> set global validate_password_length=4;

mysql> set global validate_password_policy=0;

- Set your own password:

mysql> set password=password("******");

- Switch to the mysql database:

mysql> use mysql

- Query the user table:

mysql> select user, host from user;

+-----------+-----------+

| user | host |

+-----------+-----------+

| mysql.sys | localhost |

| root | localhost |

+-----------+-----------+

2 rows in set (0.00 sec)

- Update the user table, setting the host of the root user to %:

mysql> update user set host="%" where user="root";

Query OK, 1 row affected (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> select user,host from user;

+-----------+-----------+

| user | host |

+-----------+-----------+

| root | % |

| mysql.sys | localhost |

+-----------+-----------+

2 rows in set (0.00 sec)

- Flush privileges:

mysql> flush privileges;

- Exit:

mysql> quit

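2.2 Business Data Generation

2.2.1 Connect to MySQL

Connect to the MySQL database with Navicat Premium; you can click "Test Connection" first to check the connection:

2.2.2 Table Creation Script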

-

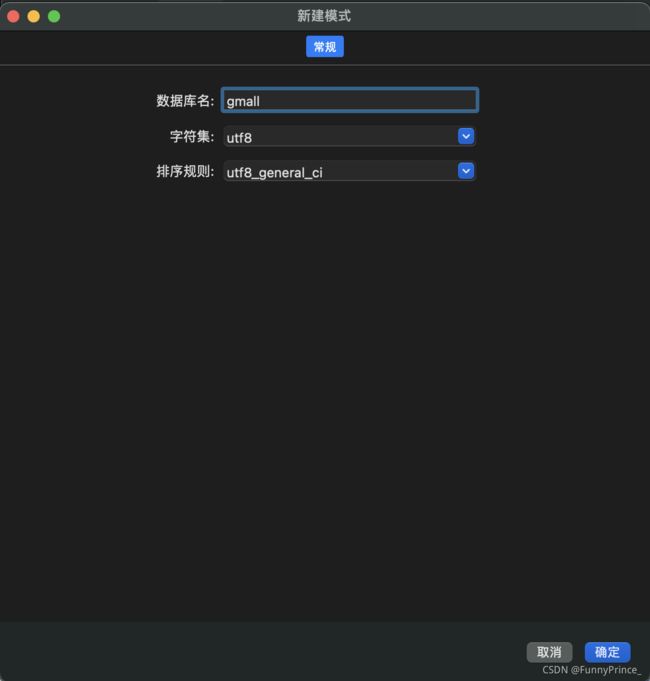

通过 Navicat 创建数据库

gmall; -

导入数据库结构脚本(

gmall.sql):打开数据库–>运行sql文件–>gmall.sql–>开始:

注⚠️:编码选择utf-8;

-

如图,右键点击‘表’ 进行‘刷新’即可看见数据库中的表:

2.2.3 Generate Business Data

- Create a db_log directory under /opt/module/ on hadoop102:

[xiaobai@hadoop102 module]$ mkdir db_log/

- Upload the business data generator gmall2020-mock-db-2021-01-22.jar and its configuration file application.properties to /opt/module/db_log on hadoop102;

- Edit the relevant settings in application.properties as needed:

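logging.level.root=info

spring.datasource.driver-class-name=com.mysql.jdbc.Driver

spring.datasource.url=jdbc:mysql://hadoop102:3306/gmall?characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8

spring.datasource.username=root

#Replace with your own MySQL root password

spring.datasource.password=******

logging.pattern.console=%m%n

mybatis-plus.global-config.db-config.field-strategy=not_null

#Business date

mock.date=2020-06-14

#Whether to reset the business data. Note: must be 1 on the first run; afterwards, set it to 1 only when you want to reset

mock.clear=1

#Whether to reset user data. Note: must be 1 on the first run; afterwards, set it to 1 only when you want to reset

mock.clear.user=1

#Number of new users to generate

mock.user.count=100

#Proportion of male users (%)

mock.user.male-rate=20

#Probability (%) that user data changes

mock.user.update-rate:20

#Proportion of favorites that are cancelled (%)

mock.favor.cancel-rate=10

#Number of favorites

mock.favor.count=100

#Probability (%) that each user adds items to the cart

mock.cart.user-rate=50

#Maximum number of distinct SKUs each user adds to the cart per run

mock.cart.max-sku-count=8

#Maximum quantity of each SKU

mock.cart.max-sku-num=3

#Cart source ratio: user search : product promotion : smart recommendation : promotional activity

mock.cart.source-type-rate=60:20:10:10

#Proportion of users who place orders (%)

mock.order.user-rate=50

#Proportion of cart items that users buy (%)

mock.order.sku-rate=50

#Whether orders take part in activities

mock.order.join-activity=1

#Whether orders use coupons

mock.order.use-coupon=1

#Number of users who claim coupons

mock.coupon.user-count=100

#Proportion of orders that are paid (%)

mock.payment.rate=70

#Payment method ratio: Alipay : WeChat Pay : UnionPay

mock.payment.payment-type=30:60:10

#Review ratio: positive : neutral : negative : automatic

mock.comment.appraise-rate=30:10:10:50

#Refund reason ratio: quality issue : item not as described : out of stock : wrong size : ordered by mistake : no longer wanted : other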
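mock.refund.reason-rate=30:10:20:5:15:5:5

- Run the generator from /opt/module/db_log so that it picks up this application.properties:

[xiaobai@hadoop102 db_log]$ java -jar gmall2020-mock-db-2021-01-22.jar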
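Several settings above, such as mock.payment.payment-type=30:60:10, are colon-separated ratios. The source does not say exactly how the generator interprets them. Assuming each field is a relative weight, the share of a category is its weight divided by the sum. Note that mock.refund.reason-rate adds up to 90, not 100. The hypothetical helper ratio_shares below makes the effective percentages visible:

```shell
#!/usr/bin/env bash
# Convert a colon-separated weight string (e.g. "30:60:10") into percentage shares.
# Assumption: each field is a relative weight; shares are rounded to one decimal.
ratio_shares() {
  echo "$1" | awk -F: '{
    total = 0
    for (i = 1; i <= NF; i++) total += $i
    for (i = 1; i <= NF; i++) {
      sep = (i > 1) ? " " : ""
      printf "%s%.1f", sep, 100 * $i / total
    }
    printf "\n"
  }'
}

ratio_shares 30:60:10            # payment types: 30.0 60.0 10.0
ratio_shares 30:10:20:5:15:5:5   # refund reasons, whose weights sum to 90
```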

2.3 Sqoop Installation

2.3.1 Download and Extract

- Download URL: http://mirrors.hust.edu.cn/apache/sqoop/1.4.6/

- Upload the installation package sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz to /opt/software/sqoop on hadoop102:

[xiaobai@hadoop102 sqoop]$ ll

total 16476

-rw-r--r--. 1 root root 16870735 Oct 3 21:02 sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

- Extract the Sqoop package to /opt/module/:

[xiaobai@hadoop102 sqoop]$ tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz -C /opt/module/

- Rename sqoop-1.4.6.bin__hadoop-2.0.4-alpha in /opt/module/ to sqoop:

[xiaobai@hadoop102 module]$ mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha/ sqoop

2.3.2 Edit the Configuration File

- In the /opt/module/sqoop/conf directory, rename the configuration file sqoop-env-template.sh to sqoop-env.sh:

[xiaobai@hadoop102 conf]$ mv sqoop-env-template.sh sqoop-env.sh

- Edit the configuration file:

[xiaobai@hadoop102 conf]$ vim sqoop-env.sh

Add the following content:

export HADOOP_COMMON_HOME=/opt/module/hadoop-3.2.2

export HADOOP_MAPRED_HOME=/opt/module/hadoop-3.2.2

export HIVE_HOME=/opt/module/hive

export ZOOKEEPER_HOME=/opt/module/zookeeper-3.5.7

export ZOOCFGDIR=/opt/module/zookeeper-3.5.7/conf

2.3.3 拷贝JDBC驱动

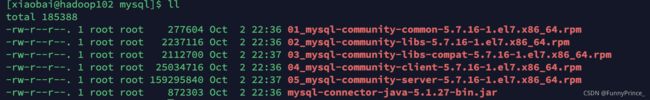

将原先上传到/opt/software/mysql路径下的JDBC驱动mysql-connector-java-5.1.27-bin.jar拷贝到/opt/module/sqoop/lib/路径下:

[xiaobai@hadoop102 conf]$ cp /opt/software/mysql/mysql-connector-java-5.1.27-bin.jar /opt/module/sqoop/lib/

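2.3.4 Verify Sqoop

You can check that Sqoop is configured correctly by running a command such as:

[xiaobai@hadoop102 sqoop]$ bin/sqoop help

The output should contain some warnings followed by the output of the help command.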

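2.3.5 Test Whether Sqoop Can Connect to the Database

From the Sqoop root directory, run the following command to test whether Sqoop can connect to the database: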

[xiaobai@hadoop102 sqoop]$ bin/sqoop list-databases --connect jdbc:mysql://hadoop102:3306/ --username root --password ******

2.3.6 Basic Sqoop Usage

Import the data of the sku_info table in MySQL into a path on HDFS:

[xiaobai@hadoop102 sqoop]$ bin/sqoop import \

> --connect jdbc:mysql://hadoop102:3306/gmall \

> --username root \

> --password mysql123 \

> --table sku_info \

> --columns id,sku_name \

> --where 'id>=1 and id<=20' \

> --target-dir /sku_info \

> --delete-target-dir \

> --fields-terminated-by '\t' \

> --num-mappers 2 \

> --split-by id

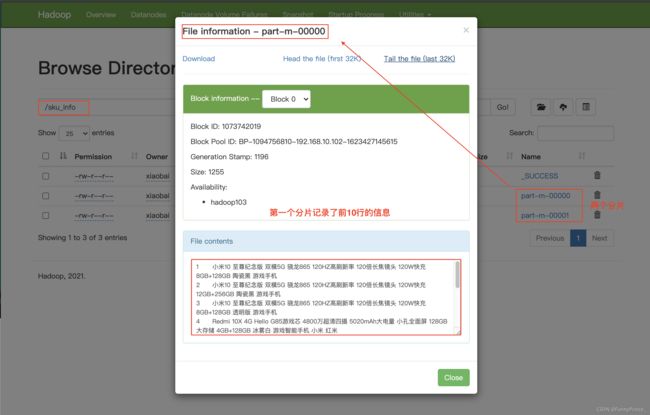

Note ⚠️: A Sqoop import job runs only map tasks and no reduce tasks, so the number of output files equals the number of map tasks.
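With --split-by id and --num-mappers 2, Sqoop first queries the minimum and maximum of the split column, then divides that range among the mappers. Each mapper writes one output file. The sketch below is a simplified illustration of that idea for an integer column, assuming evenly sized ranges. It is not Sqoop's exact splitting code, and the function name split_ranges is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified illustration: divide [min, max] of an integer split column into
# N contiguous ranges, one per mapper. Not Sqoop's exact algorithm.
split_ranges() {
  local min=$1 max=$2 n=$3
  local span=$(( max - min + 1 ))
  local size=$(( (span + n - 1) / n ))   # ceiling division
  local lo=$min hi
  while [ "$lo" -le "$max" ]; do
    hi=$(( lo + size - 1 ))
    [ "$hi" -gt "$max" ] && hi=$max       # the last range may be shorter
    echo "id >= $lo AND id <= $hi"
    lo=$(( hi + 1 ))
  done
}

# ids 1..20 from the example above, 2 mappers -> 2 ranges -> 2 output files
split_ranges 1 20 2
```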

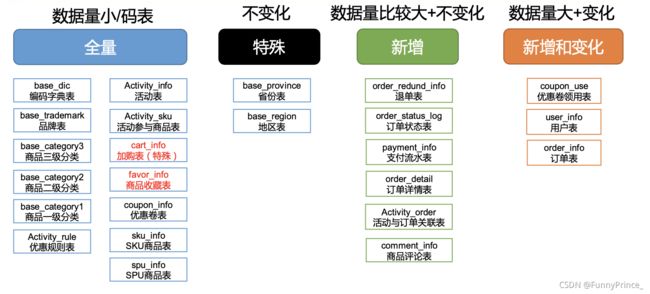

2.4 同步策略

数据同步策略的类型包括: 全量同步、增量同步、新增及变化同步、特殊情况 ;

全量表: 存储完整的数据。

增量表: 存储新增加的数据。

新增及变化表: 存储新增加的数据和变化的数据。

特殊表: 只需要存储一次。

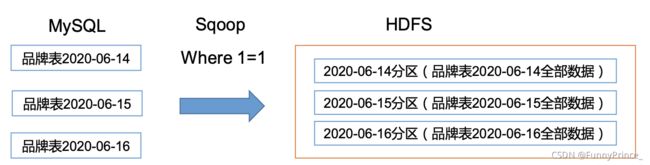

2.4.1 全量同步策略

每日全量:就是每天存储一份完整数据,作为一个分区。

适用场景: 适用于表数据量不大,且每天既会有新数据插入,也会有旧数据的修改的场景。

例如:编码字典表、品牌表、商品三级分类、商品二级分类、商品一级分类、优惠规则表、活动表、活动参与商品表、加购表、商品收藏表、优惠卷表、SKU 商品表、SPU商品表。

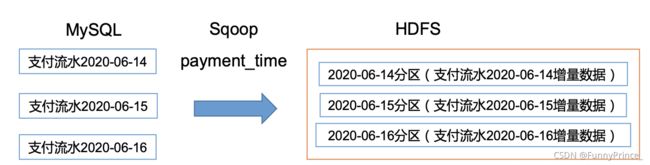

2.4.2 增量同步策略

每日增量: 就是每天存储一份增量数据,作为一个分区。

适用场景: 适用于表数据量大,且每天只会有新数据插入的场景。

例如:退单表、订单状 态表、支付流水表、订单详情表、活动与订单关联表、商品评论表。

2.4.3 新增及变化策略

每日新增及变化: 就是存储创建时间和操作时间都是今天的数据。

适用场景: 表的数据量大,既会有新增,又会有变化。

例如:用户表、订单表、优惠卷领用表。
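The three strategies map directly onto the WHERE clauses used by the daily sync script in 2.5.3. The hypothetical helper sync_filter below builds the filter for a given strategy and business date. The column names create_time and operate_time follow the order_info and user_info queries; other tables may track changes in different columns (payment_info, for example, uses callback_time).

```shell
#!/usr/bin/env bash
# Build the WHERE clause for each sync strategy on a given business date.
# Column names follow the order_info/user_info queries in the daily script.
sync_filter() {
  local strategy=$1 do_date=$2
  case $strategy in
    full)          # whole table every day
      echo "where 1=1" ;;
    incremental)   # rows created on do_date
      echo "where date_format(create_time,'%Y-%m-%d')='$do_date'" ;;
    new_changed)   # rows created or modified on do_date
      echo "where (date_format(create_time,'%Y-%m-%d')='$do_date' or date_format(operate_time,'%Y-%m-%d')='$do_date')" ;;
    *)
      echo "unknown strategy: $strategy" >&2
      return 1 ;;
  esac
}

sync_filter new_changed 2020-06-15
```

This also explains why the full-sync queries in the daily script end with where 1=1: its import_data function appends "and $CONDITIONS" to every query, so each query needs an existing WHERE clause.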

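2.4.4 Special Strategy

Special strategy: some special dimension tables do not have to follow the strategies above.

- Real-world dimensions: dimensions that describe the objective world and do not change (such as gender, region, ethnicity, political affiliation, and shoe size) can be stored once as a fixed set of values.

- Date dimension: the date dimension can be imported one or several years at a time.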
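For the date dimension, a full year of rows can be generated in a single pass. The minimal sketch below relies on GNU date, which supports the same date -d syntax as the sync scripts. The function name date_dim and the column layout (date, year, month, day, ISO weekday, tab-separated) are assumptions for illustration, not a schema from this project.

```shell
#!/usr/bin/env bash
# Print one tab-separated row per date in [start, end]:
# date, year, month, day, ISO weekday (1 = Monday ... 7 = Sunday). Requires GNU date.
date_dim() {
  local d=$1 end=$2
  # Compare as integers (yyyymmdd) so the loop does not depend on locale collation.
  while [ "${d//-/}" -le "${end//-/}" ]; do
    date -u -d "$d" +'%F%t%Y%t%m%t%d%t%u'
    d=$(date -u -d "$d + 1 day" +%F)
  done
}

date_dim 2020-01-01 2020-12-31 | wc -l   # 2020 is a leap year: 366 rows
```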

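2.5 Importing Business Data into HDFS

2.5.1 Analyzing Table Synchronization Strategies

In production, some small companies import every table in full to keep things simple.

Medium and large companies, because of their large data volumes, strictly follow the synchronization strategies when importing data.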

2.5.2 First-Day Business Data Sync Script

- Create mysql_to_hdfs_init.sh in the /home/xiaobai/bin directory:

[xiaobai@hadoop102 bin]$ vim mysql_to_hdfs_init.sh

Add the following content to the script:

#! /bin/bash

APP=gmall

sqoop=/opt/module/sqoop/bin/sqoop

if [ -n "$2" ] ;then

do_date=$2

else

echo "Please pass a date argument"

exit 1

fi

import_data(){

$sqoop import \

--connect jdbc:mysql://hadoop102:3306/$APP \

--username root \

--password ****** \

--target-dir /origin_data/$APP/db/$1/$do_date \

--delete-target-dir \

--query "$2 where \$CONDITIONS" \

--num-mappers 1 \

--fields-terminated-by '\t' \

--compress \

--compression-codec lzop \

--null-string '\\N' \

--null-non-string '\\N'

hadoop jar /opt/module/hadoop-3.2.2/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /origin_data/$APP/db/$1/$do_date

}

import_order_info(){

import_data order_info "select

id,

total_amount,

order_status,

user_id,

payment_way,

delivery_address,

out_trade_no,

create_time,

operate_time,

expire_time,

tracking_no,

province_id,

activity_reduce_amount,

coupon_reduce_amount,

original_total_amount,

feight_fee,

feight_fee_reduce

from order_info"

}

import_coupon_use(){

import_data coupon_use "select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time

from coupon_use"

}

import_order_status_log(){

import_data order_status_log "select

id,

order_id,

order_status,

operate_time

from order_status_log"

}

import_user_info(){

import_data "user_info" "select

id,

login_name,

nick_name,

name,

phone_num,

email,

user_level,

birthday,

gender,

create_time,

operate_time

from user_info"

}

import_order_detail(){

import_data order_detail "select

id,

order_id,

sku_id,

sku_name,

order_price,

sku_num,

create_time,

source_type,

source_id,

split_total_amount,

split_activity_amount,

split_coupon_amount

from order_detail"

}

import_payment_info(){

import_data "payment_info" "select

id,

out_trade_no,

order_id,

user_id,

payment_type,

trade_no,

total_amount,

subject,

payment_status,

create_time,

callback_time

from payment_info"

}

import_comment_info(){

import_data comment_info "select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time

from comment_info"

}

import_order_refund_info(){

import_data order_refund_info "select

id,

user_id,

order_id,

sku_id,

refund_type,

refund_num, refund_amount,

refund_reason_type,

refund_status,

create_time

from order_refund_info"

}

import_sku_info(){

import_data sku_info "select

id,

spu_id,

price,

sku_name,

sku_desc,

weight,

tm_id,

category3_id,

is_sale,

create_time

from sku_info"

}

import_base_category1(){

import_data "base_category1" "select

id,

name

from base_category1"

}

import_base_category2(){

import_data "base_category2" "select

id,

name,

category1_id

from base_category2"

}

import_base_category3(){

import_data "base_category3" "select

id,

name,

category2_id

from base_category3"

}

import_base_province(){

import_data base_province "select

id,

name,

region_id,

area_code,

iso_code,

iso_3166_2

from base_province"

}

import_base_region(){

import_data base_region "select id,

region_name

from base_region"

}

import_base_trademark(){

import_data base_trademark "select

id,

tm_name

from base_trademark"

}

import_spu_info(){

import_data spu_info "select

id,

spu_name,

category3_id,

tm_id

from spu_info"

}

import_favor_info(){

import_data favor_info "select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from favor_info"

}

import_cart_info(){

import_data cart_info "select

id,

user_id,

sku_id,

cart_price,

sku_num,

sku_name,

create_time,

operate_time,

is_ordered,

order_time,

source_type,

source_id

from cart_info"

}

import_coupon_info(){

import_data coupon_info "select

id,

coupon_name,

coupon_type,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

create_time,

range_type,

limit_num,

taken_count,

start_time,

end_time,

operate_time,

expire_time

from coupon_info"

}

import_activity_info(){

import_data activity_info "select

id,

activity_name,

activity_type,

start_time,

end_time,

create_time

from activity_info"

}

import_activity_rule(){

import_data activity_rule "select

id,

activity_id,

activity_type,

condition_amount,

condition_num,

benefit_amount,

benefit_discount,

benefit_level

from activity_rule"

}

import_base_dic(){

import_data base_dic "select

dic_code,

dic_name,

parent_code,

create_time,

operate_time

from base_dic"

}

import_order_detail_activity(){

import_data order_detail_activity "select

id,

order_id,

order_detail_id,

activity_id,activity_rule_id,

sku_id,

create_time

from order_detail_activity"

}

import_order_detail_coupon(){

import_data order_detail_coupon "select

id,

order_id,

order_detail_id,

coupon_id,

coupon_use_id,

sku_id,

create_time

from order_detail_coupon"

}

import_refund_payment(){

import_data refund_payment "select

id,

out_trade_no,

order_id,

sku_id,

payment_type,

trade_no,

total_amount,

subject,

refund_status,

create_time,

callback_time

from refund_payment"

}

import_sku_attr_value(){

import_data sku_attr_value "select

id,

attr_id,

value_id,

sku_id,

attr_name,

value_name

from sku_attr_value"

}

import_sku_sale_attr_value(){

import_data sku_sale_attr_value "select

id,

sku_id,

spu_id,

sale_attr_value_id,

sale_attr_id,

sale_attr_name,

sale_attr_value_name

from sku_sale_attr_value"

}

case $1 in

"order_info")

import_order_info

;;

"base_category1")

import_base_category1

;;

"base_category2")

import_base_category2

;;

"base_category3")

import_base_category3

;;

"order_detail")

import_order_detail

;;

"sku_info")

import_sku_info

;;

"user_info")

import_user_info

;;

"payment_info")

import_payment_info

;;

"base_province")

import_base_province

;;

"base_region")

import_base_region

;;

"base_trademark")

import_base_trademark

;;

"activity_info")

import_activity_info

;;

"cart_info")

import_cart_info

;;

"comment_info")

import_comment_info

;;

"coupon_info")

import_coupon_info

;;

"coupon_use")

import_coupon_use

;;

"favor_info")

import_favor_info

;;

"order_refund_info")

import_order_refund_info

;;

"order_status_log")

import_order_status_log

;;

"spu_info")

import_spu_info

;;

"activity_rule")

import_activity_rule

;;

"base_dic")

import_base_dic

;;

"order_detail_activity")

import_order_detail_activity

;;

"order_detail_coupon")

import_order_detail_coupon

;;

"refund_payment")

import_refund_payment

;;

"sku_attr_value")

import_sku_attr_value

;;

"sku_sale_attr_value")

import_sku_sale_attr_value

;;

"all")

import_base_category1

import_base_category2

import_base_category3

import_order_info

import_order_detail

import_sku_info

import_user_info

import_payment_info

import_base_region

import_base_province

import_base_trademark

import_activity_info

import_cart_info

import_comment_info

import_coupon_use

import_coupon_info

import_favor_info

import_order_refund_info

import_order_status_log

import_spu_info

import_activity_rule

import_base_dic

import_order_detail_activity

import_order_detail_coupon

import_refund_payment

import_sku_attr_value

import_sku_sale_attr_value

;;

esac

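- For adding execute permission to the script, see 2.5.3 ⬇️

2.5.3 Daily Business Data Sync Script

- Create mysql_to_hdfs.sh in the /home/xiaobai/bin directory:

Add the following content to the script: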

#! /bin/bash

APP=gmall

sqoop=/opt/module/sqoop/bin/sqoop

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d '-1 day' +%F`

fi

import_data(){

$sqoop import \

--connect jdbc:mysql://hadoop102:3306/$APP \

--username root \

--password ****** \

--target-dir /origin_data/$APP/db/$1/$do_date \

--delete-target-dir \

--query "$2 and \$CONDITIONS" \

--num-mappers 1 \

--fields-terminated-by '\t' \

--compress \

--compression-codec lzop \

--null-string '\\N' \

--null-non-string '\\N'

hadoop jar /opt/module/hadoop-3.2.2/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /origin_data/$APP/db/$1/$do_date

}

import_order_info(){

import_data order_info "select

id,

total_amount,

order_status,

user_id,

payment_way,

delivery_address,

out_trade_no,

create_time,

operate_time,

expire_time,

tracking_no,

province_id,

activity_reduce_amount,

coupon_reduce_amount,

original_total_amount,

feight_fee,

feight_fee_reduce

from order_info

where (date_format(create_time,'%Y-%m-%d')='$do_date'

or date_format(operate_time,'%Y-%m-%d')='$do_date')"

}

import_coupon_use(){

import_data coupon_use "select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time

from coupon_use

where (date_format(get_time,'%Y-%m-%d')='$do_date'

or date_format(using_time,'%Y-%m-%d')='$do_date'

or date_format(used_time,'%Y-%m-%d')='$do_date'

or date_format(expire_time,'%Y-%m-%d')='$do_date')"

}

import_order_status_log(){

import_data order_status_log "select

id,

order_id,

order_status,

operate_time

from order_status_log

where date_format(operate_time,'%Y-%m-%d')='$do_date'"

}

import_user_info(){

import_data "user_info" "select

id,

login_name,

nick_name,

name,

phone_num,

email,

user_level,

birthday,

gender,

create_time,

operate_time

from user_info

where (DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date'

or DATE_FORMAT(operate_time,'%Y-%m-%d')='$do_date')"

}

import_order_detail(){

import_data order_detail "select

id,

order_id,

sku_id,

sku_name,

order_price,

sku_num,

create_time,

source_type,

source_id,

split_total_amount,

split_activity_amount,

split_coupon_amount

from order_detail

where DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date'"

}

import_payment_info(){

import_data "payment_info" "select

id,

out_trade_no,

order_id,

user_id,

payment_type,

trade_no,

total_amount,

subject,

payment_status,

create_time,

callback_time

from payment_info

where (DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date'

or DATE_FORMAT(callback_time,'%Y-%m-%d')='$do_date')"

}

import_comment_info(){

import_data comment_info "select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time

from comment_info

where date_format(create_time,'%Y-%m-%d')='$do_date'"

}

import_order_refund_info(){

import_data order_refund_info "select

id,

user_id,

order_id,

sku_id,

refund_type,

refund_num,

refund_amount,

refund_reason_type,

refund_status,

create_time

from order_refund_info

where date_format(create_time,'%Y-%m-%d')='$do_date'"

}

import_sku_info(){

import_data sku_info "select

id,

spu_id,

price,

sku_name,

sku_desc,

weight,

tm_id,

category3_id,

is_sale,

create_time

from sku_info where 1=1"

}

import_base_category1(){

import_data "base_category1" "select

id,

name

from base_category1 where 1=1"

}

import_base_category2(){

import_data "base_category2" "select

id,

name,

category1_id

from base_category2 where 1=1"

}

import_base_category3(){

import_data "base_category3" "select

id,

name,

category2_id

from base_category3 where 1=1"

}

import_base_province(){

import_data base_province "select

id,

name,

region_id,

area_code,

iso_code,

iso_3166_2

from base_province

where 1=1"

}

import_base_region(){

import_data base_region "select

id,

region_name

from base_region

where 1=1"

}

import_base_trademark(){

import_data base_trademark "select

id,

tm_name

from base_trademark where 1=1"

}

import_spu_info(){

import_data spu_info "select

id,

spu_name,

category3_id,

tm_id

from spu_info

where 1=1"

}

import_favor_info(){

import_data favor_info "select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from favor_info

where 1=1"

}

import_cart_info(){

import_data cart_info "select

id,

user_id,

sku_id,

cart_price,

sku_num,

sku_name,

create_time,

operate_time,

is_ordered,

order_time,

source_type,

source_id

from cart_info

where 1=1"

}

import_coupon_info(){

import_data coupon_info "select

id,

coupon_name,

coupon_type,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

create_time,

range_type,

limit_num,

taken_count,

start_time,

end_time,

operate_time,

expire_time

from coupon_info

where 1=1"

}

import_activity_info(){

import_data activity_info "select

id,

activity_name,

activity_type,

start_time,

end_time,

create_time

from activity_info

where 1=1"

}

import_activity_rule(){

import_data activity_rule "select

id,

activity_id,

activity_type,

condition_amount,

condition_num,

benefit_amount,

benefit_discount,

benefit_level

from activity_rule

where 1=1"

}

import_base_dic(){

import_data base_dic "select

dic_code,

dic_name,

parent_code,

create_time,

operate_time

from base_dic

where 1=1"

}

import_order_detail_activity(){

import_data order_detail_activity "select

id,

order_id,

order_detail_id,

activity_id,

activity_rule_id,sku_id,

create_time

from order_detail_activity

where date_format(create_time,'%Y-%m-%d')='$do_date'"

}

import_order_detail_coupon(){

import_data order_detail_coupon "select

id,

order_id,

order_detail_id,

coupon_id,

coupon_use_id,

sku_id,

create_time

from order_detail_coupon

where date_format(create_time,'%Y-%m-%d')='$do_date'"

}

import_refund_payment(){

import_data refund_payment "select

id,

out_trade_no,

order_id,

sku_id,

payment_type,

trade_no,

total_amount,

subject,

refund_status,

create_time,

callback_time

from refund_payment

where (DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date'

or DATE_FORMAT(callback_time,'%Y-%m-%d')='$do_date')"

}

import_sku_attr_value(){

import_data sku_attr_value "select

id,

attr_id,

value_id,

sku_id,

attr_name,

value_name

from sku_attr_value

where 1=1"

}

import_sku_sale_attr_value(){

import_data sku_sale_attr_value "select

id,

sku_id,

spu_id,

sale_attr_value_id,

sale_attr_id,

sale_attr_name,

sale_attr_value_name

from sku_sale_attr_value

where 1=1"

}

case $1 in

"order_info")

import_order_info

;;

"base_category1")

import_base_category1

;;

"base_category2")

import_base_category2

;;

"base_category3")

import_base_category3

;;

"order_detail")

import_order_detail

;;

"sku_info")

import_sku_info

;;

"user_info")

import_user_info

;;

"payment_info")

import_payment_info

;;

"base_province")

import_base_province

;;

"base_trademark")

import_base_trademark

;;

"activity_info")

import_activity_info

;;

"cart_info")

import_cart_info

;;

"comment_info")

import_comment_info

;;

"coupon_info")

import_coupon_info

;;

"coupon_use")

import_coupon_use

;;

"favor_info")

import_favor_info

;;

"order_refund_info")

import_order_refund_info

;;

"order_status_log")

import_order_status_log

;;

"spu_info")

import_spu_info

;;

"activity_rule")

import_activity_rule

;;

"base_dic")

import_base_dic

;;

"order_detail_activity")

import_order_detail_activity

;;

"order_detail_coupon")

import_order_detail_coupon

;;

"refund_payment")

import_refund_payment

;;

"sku_attr_value")

import_sku_attr_value

;;

"sku_sale_attr_value")

import_sku_sale_attr_value

;;

"all")

import_base_category1

import_base_category2

import_base_category3

import_order_info

import_order_detail

import_sku_info

import_user_info

import_payment_info

import_base_trademark

import_activity_info

import_cart_info

import_comment_info

import_coupon_use

import_coupon_info

import_favor_info

import_order_refund_info

import_order_status_log

import_spu_info

import_activity_rule

import_base_dic

import_order_detail_activity

import_order_detail_coupon

import_refund_payment

import_sku_attr_value

import_sku_sale_attr_value

;;

esac

[xiaobai@hadoop102 bin]$ vim mysql_to_hdfs.sh

- Add execute permission to both scripts, mysql_to_hdfs_init.sh and mysql_to_hdfs.sh:

[xiaobai@hadoop102 bin]$ chmod +x mysql_to_hdfs*

- Run the first-day import:

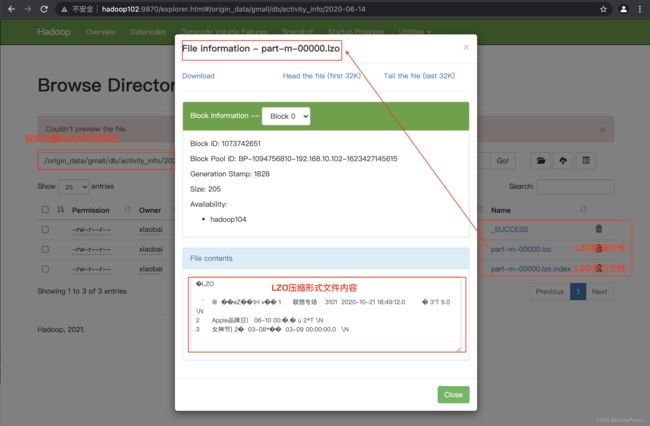

[xiaobai@hadoop102 bin]$ ./mysql_to_hdfs_init.sh all 2020-06-14

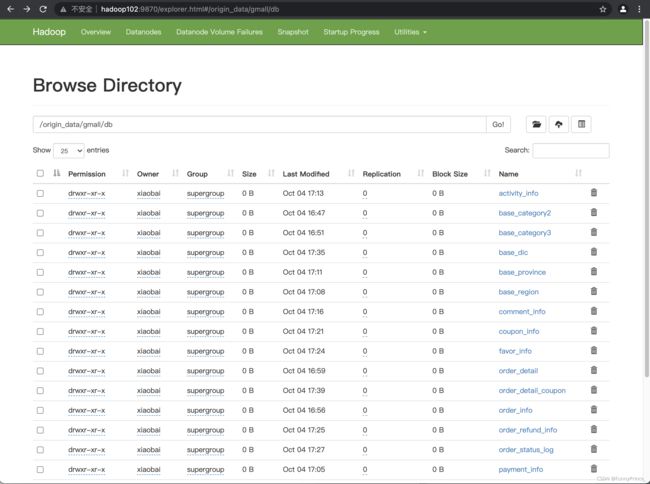

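After the command above finishes, open http://hadoop102:9870/ to browse the data synchronized to HDFS, as shown in the figure.

- Run the daily import (used later, when data has been added or modified):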

[xiaobai@hadoop102 bin]$ ./mysql_to_hdfs.sh all 2020-06-15
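If several consecutive days need to be synchronized, for example after generating mock data for more than one business date, the daily script can be called once per date. The sketch below is a dry run that only prints the commands. The function name backfill and the example dates are hypothetical, and the loop relies on GNU date.

```shell
#!/usr/bin/env bash
# Dry run: print one mysql_to_hdfs.sh call per date in [start, end].
# Drop the leading "echo" to run the imports for real. Requires GNU date.
backfill() {
  local d=$1 end=$2 table=${3:-all}   # table defaults to "all"
  # Compare dates as integers (yyyymmdd) so the check does not depend on locale.
  while [ "${d//-/}" -le "${end//-/}" ]; do
    echo ./mysql_to_hdfs.sh "$table" "$d"
    d=$(date -u -d "$d + 1 day" +%F)
  done
}

backfill 2020-06-15 2020-06-17
```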

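Note ⚠️:

[ -n value ] tests whether a value is non-empty:

- if the value is non-empty, the test returns true;

- if the value is empty, the test returns false.

Quote the variable, as the scripts do with "$2": if an empty variable is left unquoted, the test becomes [ -n ] and always returns true.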
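These cases can be checked directly in a shell. The function name has_date below is hypothetical:

```shell
#!/usr/bin/env bash
# [ -n "$x" ] is true only when $x is non-empty.
has_date() {
  if [ -n "$1" ]; then
    echo "yes"
  else
    echo "no"
  fi
}

has_date 2020-06-14   # yes
has_date ""           # no

# Pitfall: an unquoted empty variable turns the test into [ -n ], which is true.
empty=""
[ -n $empty ] && echo "unquoted empty variable still tests true"
```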

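- To see how the date command is used, run: [xiaobai@hadoop102 ~]$ date --help

do_date=`date -d '-1 day' +%F`

Wrapping the command after = in backticks runs it and assigns its output, the result of date -d '-1 day' +%F (yesterday's date in yyyy-MM-dd format), to the variable do_date. $(date -d '-1 day' +%F) is an equivalent, more modern form.

Without the backticks, the command is not executed. Written as do_date="date -d '-1 day' +%F", the variable would hold the literal text of the command. Written unquoted, the shell would treat do_date=date as a temporary assignment and try to run -d as a command. Neither is what we want.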
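The difference is easy to see by running the forms side by side. To make the result reproducible, the sketch pins the base date to 2020-06-15 instead of using today (an assumption for illustration). It requires GNU date.

```shell
#!/usr/bin/env bash
# Command substitution stores the command's OUTPUT; plain quotes store the TEXT.
as_text="date -d '2020-06-15 -1 day' +%F"
as_output=`date -d '2020-06-15 -1 day' +%F`
as_output2=$(date -d '2020-06-15 -1 day' +%F)   # same result as the backticks

echo "$as_text"     # the literal command string
echo "$as_output"   # 2020-06-14
echo "$as_output2"  # 2020-06-14
```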

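2.5.4 Project Experience

Hive stores NULL in its underlying files as "\N", while MySQL stores NULL as a real NULL. To keep the data consistent on both sides, use the --input-null-string and --input-null-non-string parameters when exporting data (sqoop export), and --null-string and --null-non-string when importing data (sqoop import).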
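The files imported to HDFS are LZO-compressed, tab-separated text in which NULL appears as \N. They can be read with hadoop fs -text, assuming the LZO codec is configured. As a quick consistency check, the hypothetical helper count_nulls below counts \N fields per column in text read from stdin. The sample rows are made up.

```shell
#!/usr/bin/env bash
# Count the \N (NULL) markers per column in tab-separated text read from stdin.
count_nulls() {
  awk -F'\t' '{
    for (i = 1; i <= NF; i++) if ($i == "\\N") nulls[i]++
    if (NF > maxnf) maxnf = NF
  } END {
    for (i = 1; i <= maxnf; i++) printf "col%d=%d\n", i, nulls[i] + 0
  }'
}

# Made-up sample rows: id, name, phone_num (NULL in the second row).
printf '1\tAlice\t13800000000\n2\tBob\t\\N\n' | count_nulls
```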

三、数据环境准备

3.1 Hive安装部署

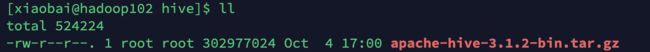

- 如图,把apache-hive-3.1.2-bin.tar.gz上传到Linux的/opt/software目录下:

- 解压apache-hive-3.1.2-bin.tar.gz到/opt/module/目录下面:

[xiaobai@hadoop102 hive]$ tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /opt/module/

- Rename the extracted directory apache-hive-3.1.2-bin to hive:

[xiaobai@hadoop102 module]$ mv apache-hive-3.1.2-bin/ hive

- Edit /etc/profile.d/my_env.sh to add the environment variables:

[xiaobai@hadoop102 module]$ sudo vim /etc/profile.d/my_env.sh

Add the following content:

#HIVE_HOME

export HIVE_HOME=/opt/module/hive

export PATH=$PATH:$HIVE_HOME/bin

- Source /etc/profile.d/my_env.sh so that the environment variables take effect:

[xiaobai@hadoop102 module]$ source /etc/profile.d/my_env.sh

3.2 Configuring the Hive Metastore in MySQL

3.2.1 Copy the Driver

Copy the MySQL JDBC driver to Hive's lib directory:

[xiaobai@hadoop102 module]$ cp /opt/software/mysql/mysql-connector-java-5.1.27-bin.jar /opt/module/hive/lib/

3.2.2 Configure the Metastore to Use MySQL

Create a new hive-site.xml file in /opt/module/hive/conf/:

[xiaobai@hadoop102 conf]$ vim hive-site.xml

Add the following content:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop102:3306/metastore?useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>******</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop102</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

</configuration>

3.3 Start Hive

3.3.1 Initialize the Metastore Database

- Log in to MySQL:

[xiaobai@hadoop102 conf]$ mysql -uroot -p

Enter password:

- Create the Hive metastore database:

mysql> create database metastore;

Query OK, 1 row affected (0.03 sec)

- Initialize the Hive metastore schema:

[xiaobai@hadoop102 conf]$ schematool -initSchema -dbType mysql -verbose

The following output indicates that initialization has completed:

0: jdbc:mysql://hadoop102:3306/metastore> !closeall

Closing: 0: jdbc:mysql://hadoop102:3306/metastore?useSSL=false

beeline>

beeline> Initialization script completed

schemaTool completed

3.3.2 Start the Hive Client

- Start the Hive client:

[xiaobai@hadoop102 conf]$ hive

- List the databases:

hive (default)> show databases;

OK

database_name

default

Time taken: 2.399 seconds, Fetched: 1 row(s)