Dive into Deep Learning (30): Semantic Segmentation Concepts and Data Loading

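Contents

- 1. What Is Semantic Segmentation

- 2. Applications

- 3. The Pascal VOC2012 Semantic Segmentation Dataset

- 3.1 Loading the Data

- 3.2 Data Preprocessing

- 3.3 A Custom Semantic Segmentation Dataset Class

- 3.4 Putting It All Together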

A brief look at what semantic segmentation is, followed by loading a semantic segmentation dataset.

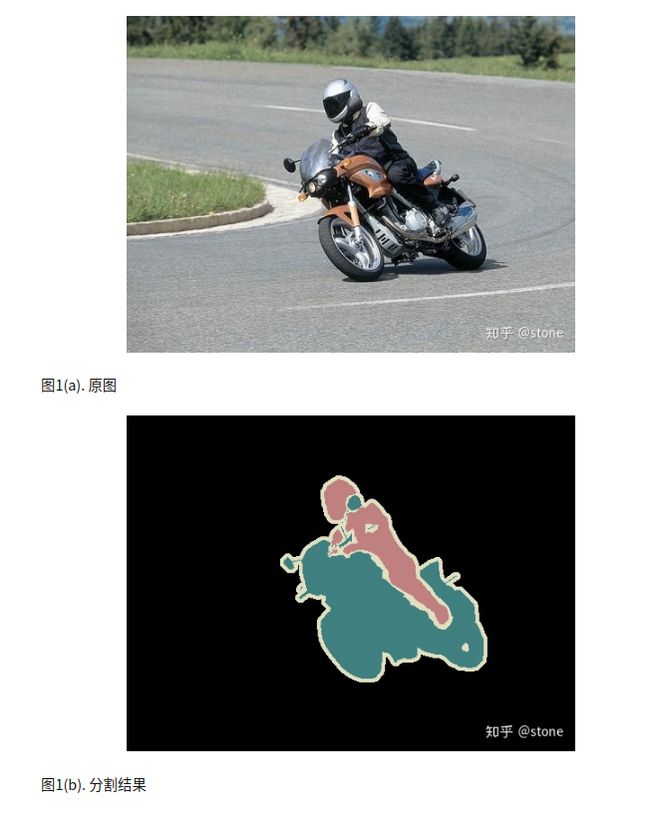

1. What Is Semantic Segmentation

2. Applications

3. The Pascal VOC2012 Semantic Segmentation Dataset

http://host.robots.ox.ac.uk/pascal/VOC/voc2012/

3.1 Loading the Data

%matplotlib inline
import os
import torch
import torchvision
from d2l import torch as d2l

# data download
#@save
d2l.DATA_HUB['voc2012'] = (d2l.DATA_URL + 'VOCtrainval_11-May-2012.tar',
                           '4e443f8a2eca6b1dac8a6c57641b67dd40621a49')

# voc_dir = d2l.download_extract('voc2012', 'VOCdevkit/VOC2012')
voc_dir = os.path.join("../data/VOCdevkit/VOC2012/")

#@save
def read_voc_images(voc_dir, is_train=True):
    """Read all VOC feature images and their label images."""
    txt_fname = os.path.join(voc_dir, 'ImageSets', 'Segmentation',
                             'train.txt' if is_train else 'val.txt')
    mode = torchvision.io.image.ImageReadMode.RGB
    with open(txt_fname, 'r') as f:
        images = f.read().split()
    features, labels = [], []
    for fname in images:
        features.append(
            torchvision.io.read_image(
                os.path.join(voc_dir, 'JPEGImages', f'{fname}.jpg')))
        # Semantic segmentation classifies every pixel, so the labels are
        # stored as losslessly compressed .png files that keep colors exact
        labels.append(
            torchvision.io.read_image(
                os.path.join(voc_dir, 'SegmentationClass', f'{fname}.png'),
                mode))
    return features, labels

train_features, train_labels = read_voc_images(voc_dir, True)
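The path-building step of `read_voc_images` can be tried without the dataset on disk. The following is only a minimal sketch: the helper name `voc_pairs` is hypothetical (it is not part of d2l), and the two image IDs are sample values written in the style of the VOC split files. It builds the (image, label) path pairs that would be handed to `torchvision.io.read_image`.

```python
import os

def voc_pairs(split_text, voc_dir):
    """Hypothetical helper: turn the text of train.txt / val.txt into path pairs."""
    pairs = []
    for fname in split_text.split():  # one image ID per whitespace-separated token
        jpg = os.path.join(voc_dir, 'JPEGImages', f'{fname}.jpg')
        png = os.path.join(voc_dir, 'SegmentationClass', f'{fname}.png')
        pairs.append((jpg, png))
    return pairs

# Sample IDs in the style of the split files (an assumption for illustration).
sample = "2007_000032\n2007_000039\n"
pairs = voc_pairs(sample, 'VOC2012')
for jpg, png in pairs:
    print(jpg, png)
```

Each feature image and its label share the same file stem, which is what keeps the two lists returned by `read_voc_images` aligned index by index.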

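Plot the first five input images and their labels. In the label images, white and black denote object borders and background respectively, while every other color corresponds to a different class.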

n = 5
imgs = train_features[0:n] + train_labels[0:n]
imgs = [img.permute(1, 2, 0) for img in imgs]
d2l.show_images(imgs, 2, n)

List the RGB colors and the class names.

#@save
VOC_COLORMAP = [[0, 0, 0], [128, 0, 0], [0, 128, 0], [128, 128, 0],
                [0, 0, 128], [128, 0, 128], [0, 128, 128], [128, 128, 128],
                [64, 0, 0], [192, 0, 0], [64, 128, 0], [192, 128, 0],
                [64, 0, 128], [192, 0, 128], [64, 128, 128], [192, 128, 128],
                [0, 64, 0], [128, 64, 0], [0, 192, 0], [128, 192, 0],
                [0, 64, 128]]

#@save
VOC_CLASSES = [
    'background', 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus',
    'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',
    'person', 'potted plant', 'sheep', 'sofa', 'train', 'tv/monitor']

# Define functions that map the RGB label colors to class indices
#@save
def voc_colormap2label():
    """Build the mapping from RGB values to VOC class indices."""
    colormap2label = torch.zeros(256**3, dtype=torch.long)
    for i, colormap in enumerate(VOC_COLORMAP):
        colormap2label[(colormap[0] * 256 + colormap[1]) * 256 +
                       colormap[2]] = i
    return colormap2label

#@save
def voc_label_indices(colormap, colormap2label):
    """Map the RGB values in a VOC label image to their class indices."""
    colormap = colormap.permute(1, 2, 0).numpy().astype('int32')
    idx = ((colormap[:, :, 0] * 256 + colormap[:, :, 1]) * 256 +
           colormap[:, :, 2])
    return colormap2label[idx]
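The index arithmetic used by both functions packs an (R, G, B) triple into a single integer, `(R * 256 + G) * 256 + B`, which then serves as a lookup key. Here is a small pure-Python sketch of the same idea. Note the substitutions: a `dict` stands in for the dense `256**3` lookup tensor, only the first three palette entries are used, and the 2x2 label image is made up for illustration.

```python
# First three entries of VOC_COLORMAP: background, aeroplane, bicycle.
PALETTE = [[0, 0, 0], [128, 0, 0], [0, 128, 0]]

def flatten_rgb(rgb):
    """Pack an (R, G, B) triple into one integer in [0, 256**3)."""
    r, g, b = rgb
    return (r * 256 + g) * 256 + b

# A dict replaces the dense lookup tensor here (assumption made for brevity).
color2index = {flatten_rgb(c): i for i, c in enumerate(PALETTE)}

# A hypothetical 2x2 label image stored as rows of RGB pixels.
label_rgb = [[[0, 0, 0], [128, 0, 0]],
             [[128, 0, 0], [0, 128, 0]]]
label_idx = [[color2index[flatten_rgb(p)] for p in row] for row in label_rgb]

print(flatten_rgb([128, 0, 0]))  # 8388608
print(label_idx)                 # [[0, 1], [1, 2]]
```

The dense tensor in `voc_colormap2label` trades memory for speed: a whole label image can be converted with one vectorized indexing operation instead of a per-pixel loop.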

For example, in the first sample image the class index of the front part of the aeroplane is 1, while the background index is 0.

y = voc_label_indices(train_labels[0], voc_colormap2label())
y[105:115, 130:140], VOC_CLASSES[1]

(tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0, 1],
         [0, 0, 0, 0, 0, 0, 0, 1, 1, 1],
         [0, 0, 0, 0, 0, 0, 1, 1, 1, 1],
         [0, 0, 0, 0, 0, 1, 1, 1, 1, 1],
         [0, 0, 0, 0, 0, 1, 1, 1, 1, 1],
         [0, 0, 0, 0, 1, 1, 1, 1, 1, 1],
         [0, 0, 0, 0, 0, 1, 1, 1, 1, 1],
         [0, 0, 0, 0, 0, 1, 1, 1, 1, 1],
         [0, 0, 0, 0, 0, 0, 1, 1, 1, 1],
         [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]]),
 'aeroplane')

3.2 Data Preprocessing

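For earlier models such as AlexNet and GoogLeNet, we rescaled images so that all inputs had the same shape. That approach does not suit semantic segmentation, because rescaling the label image breaks the mapping between pixels and labels: interpolation changes the colors that encode the classes. To avoid this, we usually crop each image to a fixed size instead of rescaling it.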
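The effect of interpolation on a label image can be seen with a little arithmetic. The sketch below is a simplification: `blend` is a hypothetical helper that averages two neighbouring pixels with equal weight, a rough stand-in for what an interpolating resize does at a class boundary.

```python
VOC_COLORMAP = [[0, 0, 0], [128, 0, 0], [0, 128, 0], [128, 128, 0],
                [0, 0, 128], [128, 0, 128], [0, 128, 128], [128, 128, 128],
                [64, 0, 0], [192, 0, 0], [64, 128, 0], [192, 128, 0],
                [64, 0, 128], [192, 0, 128], [64, 128, 128], [192, 128, 128],
                [0, 64, 0], [128, 64, 0], [0, 192, 0], [128, 192, 0],
                [0, 64, 128]]

def blend(p, q):
    """Equal-weight average of two RGB pixels (simplified interpolation)."""
    return [(a + b) // 2 for a, b in zip(p, q)]

background, aeroplane, bicycle = VOC_COLORMAP[0], VOC_COLORMAP[1], VOC_COLORMAP[2]

mixed_a = blend(aeroplane, bicycle)     # [64, 64, 0]
mixed_b = blend(background, aeroplane)  # [64, 0, 0]

print(mixed_a in VOC_COLORMAP)      # False: not a valid class color
print(VOC_COLORMAP.index(mixed_b))  # 8: the color assigned to 'cat'
```

In the first case the blended color belongs to no class at all; in the second it happens to coincide with the color of a different class. Cropping copies pixels unchanged, so neither problem arises.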

#@save
def voc_rand_crop(feature, label, height, width):
    """Randomly crop the feature image and the label image at the same location."""
    rect = torchvision.transforms.RandomCrop.get_params(
        feature, (height, width))
    feature = torchvision.transforms.functional.crop(feature, *rect)
    label = torchvision.transforms.functional.crop(label, *rect)
    return feature, label

imgs = []
for _ in range(n):
    imgs += voc_rand_crop(train_features[0], train_labels[0], 200, 300)

imgs = [img.permute(1, 2, 0) for img in imgs]
d2l.show_images(imgs[::2] + imgs[1::2], 2, n)
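The key point of the crop is that one random window is sampled and then applied to both the image and its label. Here is a minimal pure-Python sketch of that idea on nested lists. The helper `rand_crop_pair` and the 4x6 toy grids are hypothetical and do not use torchvision.

```python
import random

def rand_crop_pair(feature, label, height, width, rng=random):
    """Cut the same randomly placed window out of two equally sized 2-D grids."""
    h, w = len(feature), len(feature[0])
    top = rng.randint(0, h - height)   # randint bounds are inclusive
    left = rng.randint(0, w - width)

    def crop(grid):
        return [row[left:left + width] for row in grid[top:top + height]]

    return crop(feature), crop(label)

# Toy data: each feature value encodes its column in the last digit,
# and the label is 1 exactly where the column index is >= 3.
feature = [[10 * r + c for c in range(6)] for r in range(4)]
label = [[1 if c >= 3 else 0 for c in range(6)] for r in range(4)]

f, l = rand_crop_pair(feature, label, 2, 3, random.Random(0))

# After cropping, every pixel still lines up with its own label.
aligned = all((fv % 10 >= 3) == bool(lv)
              for f_row, l_row in zip(f, l)
              for fv, lv in zip(f_row, l_row))
print(aligned)  # True
```

Sampling two independent windows, one for the image and one for the label, would break this alignment, which is why `voc_rand_crop` reuses `rect` for both calls.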

3.3 A Custom Semantic Segmentation Dataset Class

The class inherits from torch.utils.data.Dataset and overrides three methods: __init__, __getitem__, and __len__.

#@save
class VOCSegDataset(torch.utils.data.Dataset):
    """A custom dataset for loading the VOC dataset."""

    def __init__(self, is_train, crop_size, voc_dir):
        self.transform = torchvision.transforms.Normalize(
            mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
        self.crop_size = crop_size
        features, labels = read_voc_images(voc_dir, is_train=is_train)
        self.features = [
            self.normalize_image(feature)
            for feature in self.filter(features)]
        self.labels = self.filter(labels)
        self.colormap2label = voc_colormap2label()
        print('read ' + str(len(self.features)) + ' examples')

    def normalize_image(self, img):
        # Scale uint8 pixels to [0, 1] first; the mean/std above assume that range
        return self.transform(img.float() / 255)

    def filter(self, imgs):
        # Drop images that are smaller than the crop size
        return [
            img for img in imgs if (img.shape[1] >= self.crop_size[0] and
                                    img.shape[2] >= self.crop_size[1])]

    def __getitem__(self, idx):
        feature, label = voc_rand_crop(self.features[idx], self.labels[idx],
                                       *self.crop_size)
        return (feature, voc_label_indices(label, self.colormap2label))

    def __len__(self):
        return len(self.features)

# Assuming a crop size of (320, 480), check how many examples each split reads

crop_size = (320, 480)
voc_train = VOCSegDataset(True, crop_size, voc_dir)
voc_test = VOCSegDataset(False, crop_size, voc_dir)

read 1114 examples
read 1078 examples
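The counts printed above are smaller than the number of listed images because images smaller than the crop size are filtered out. The interplay of that filter with `__getitem__` and `__len__` can be sketched without torch. `TinySegDataset` is a hypothetical stand-in that stores only (height, width) pairs, and the shapes are made up for illustration.

```python
class TinySegDataset:
    """Hypothetical stand-in for VOCSegDataset: plain Python, shapes only."""

    def __init__(self, shapes, crop_size):
        self.crop_size = crop_size
        # Keep only images at least as large as the crop window.
        self.shapes = [s for s in shapes
                       if s[0] >= crop_size[0] and s[1] >= crop_size[1]]

    def __getitem__(self, idx):
        return self.shapes[idx]

    def __len__(self):
        return len(self.shapes)

shapes = [(281, 500), (375, 500), (333, 500), (500, 334)]  # made-up (height, width)
ds = TinySegDataset(shapes, (320, 480))
print(len(ds), ds[0])  # 2 (375, 500)
```

In the real class the tensors have shape (channels, height, width), which is why its filter compares `img.shape[1]` and `img.shape[2]` rather than the first two entries.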

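Set the batch size to 64 and define an iterator over the training set. Print the shapes of the first minibatch: the label batch is a three-dimensional tensor (batch, height, width), with no channel dimension.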

batch_size = 64
train_iter = torch.utils.data.DataLoader(
    voc_train, batch_size, shuffle=True, drop_last=True,
    num_workers=d2l.get_dataloader_workers())

for X, Y in train_iter:
    print("input data:{}".format(X.shape))
    print("the corresponding label:{}".format(Y.shape))
    break

input data:torch.Size([64, 3, 320, 480])
the corresponding label:torch.Size([64, 320, 480])
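Because the loader is built with `drop_last=True`, the final incomplete batch is discarded. A quick check of the arithmetic, taking the 1114 training examples reported earlier as given (the helper `num_batches` is hypothetical):

```python
def num_batches(n_examples, batch_size, drop_last=True):
    """Number of minibatches per epoch for a given dataset size."""
    full, rest = divmod(n_examples, batch_size)
    return full if drop_last or rest == 0 else full + 1

print(num_batches(1114, 64))         # 17
print(num_batches(1114, 64, False))  # 18
print(1114 - 17 * 64)                # 26 examples skipped per epoch
```

With shuffling enabled, a different set of 26 examples is left out in each epoch, so every example is still seen over the course of training.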

3.4 Putting It All Together

- Download and read the Pascal VOC2012 data

- Load it through the custom dataset class

#@save
def load_data_voc(batch_size, crop_size):
    """Load the VOC semantic segmentation dataset."""
    # voc_dir = d2l.download_extract('voc2012',
    #                                os.path.join('VOCdevkit', 'VOC2012'))
    voc_dir = os.path.join("../data/VOCdevkit/VOC2012/")
    num_workers = d2l.get_dataloader_workers()
    train_iter = torch.utils.data.DataLoader(
        VOCSegDataset(True, crop_size, voc_dir), batch_size, shuffle=True,
        drop_last=True, num_workers=num_workers)
    test_iter = torch.utils.data.DataLoader(
        VOCSegDataset(False, crop_size, voc_dir), batch_size, drop_last=True,
        num_workers=num_workers)
    return train_iter, test_iter

References:

[1] Mu Li, Dive into Deep Learning (《动手学深度学习》), https://zh-v2.d2l.ai/