Visualizing with tensorboardX in PyTorch (1): loss and histograms

1. Install PyTorch and tensorboardX

Installing both packages through PyCharm's Settings is enough.

2. Build a simple network

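LeNet5 is used here.

Every statement related to tensorboardX is marked in the code. The main job is to pass along the loss of each training step; the parameters come with the model itself.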

import torch.nn as nn
import torch

# Define LeNet5
class LeNet5(nn.Module):
    def __init__(self, num_classes=10):
        super(LeNet5, self).__init__()
        # 1x28x28 -> 6x28x28 (padding=2 keeps the size) -> 6x14x14 after pooling
        self.c1 = nn.Sequential(
            nn.Conv2d(1, 6, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(6),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        # 6x14x14 -> 16x10x10 -> 16x5x5 after pooling
        self.c2 = nn.Sequential(
            nn.Conv2d(6, 16, kernel_size=5),
            nn.BatchNorm2d(16),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        # 16x5x5 -> 120x1x1
        self.c3 = nn.Sequential(
            nn.Conv2d(16, 120, kernel_size=5),
            nn.BatchNorm2d(120),
            nn.ReLU()
        )
        self.fc1 = nn.Sequential(
            nn.Linear(120, 84),
            nn.ReLU()
        )
        self.fc2 = nn.Sequential(
            nn.Linear(84, num_classes),
            # dim=1 normalizes over the class dimension. CrossEntropyLoss applies
            # log-softmax itself, so this layer is redundant with that loss
            # (NLLLoss is the usual pairing), but it does not change the result.
            nn.LogSoftmax(dim=1)
        )

    def forward(self, x):
        out = self.c1(x)
        out = self.c2(out)
        out = self.c3(out)
        out = out.reshape(out.size(0), -1)  # flatten to (batch, 120)
        out = self.fc1(out)
        out = self.fc2(out)
        return out
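The feature-map sizes explain why the first fully connected layer takes 120 inputs. As a rough check, the sketch below redoes that arithmetic in plain Python, assuming the 28x28 FashionMNIST input used later in this post. The helper names `window_out` and `lenet5_feature_sizes` are made up for illustration and are not part of PyTorch.

```python
def window_out(size, kernel, stride=1, padding=0):
    """Side length after a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet5_feature_sizes(size=28):
    """Side length of the feature map after each conv/pool layer of the LeNet5 above."""
    sizes = [size]
    # (kernel, stride, padding) for: c1 conv, c1 pool, c2 conv, c2 pool, c3 conv
    layers = [(5, 1, 2), (2, 2, 0), (5, 1, 0), (2, 2, 0), (5, 1, 0)]
    for kernel, stride, padding in layers:
        size = window_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


sizes = lenet5_feature_sizes()
print(sizes)  # [28, 28, 14, 10, 5, 1]

# c3 has 120 output channels and a 1x1 feature map, so flattening gives 120 values
print(120 * sizes[-1] * sizes[-1])  # 120
```

With a different input size the last value would no longer be 1, and `nn.Linear(120, 84)` would have to change accordingly.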

# Prepare the data
import torchvision
import torchvision.transforms as transforms
import torch.optim as optim

mnist_train = torchvision.datasets.FashionMNIST(root='~/Datasets/FashionMNIST',
                                                train=True, download=True, transform=transforms.ToTensor())
mnist_iter = torch.utils.data.DataLoader(mnist_train, batch_size=64, shuffle=True)
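Each training step consumes one batch, so the number of steps in an epoch is the number of batches, not the number of samples. A small sketch of that count, assuming the 60000 images of the FashionMNIST training split and the DataLoader default of keeping the last partial batch; `batches_per_epoch` is a hypothetical helper, not a PyTorch function:

```python
import math


def batches_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches a loader yields per epoch for the given sizes."""
    if drop_last:
        # the incomplete final batch is discarded
        return num_samples // batch_size
    # the incomplete final batch is kept
    return math.ceil(num_samples / batch_size)


print(batches_per_epoch(60000, 64))                  # 938
print(batches_per_epoch(60000, 64, drop_last=True))  # 937
```

This is the same number that `len(mnist_iter)` reports, whereas `len(mnist_train)` reports the sample count.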

# Train the network
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
total_step = len(mnist_iter)  # number of batches per epoch
curr_lr = 0.1
model = LeNet5(10).to(device)  # the model must live on the same device as the data
optimizer = optim.SGD(model.parameters(), lr=curr_lr)
num_epoches = 1
loss_ = torch.nn.CrossEntropyLoss()

#--------------------- tensorboard ---------------#
loss_show = []
#--------------------- tensorboard ---------------#

for epoch in range(num_epoches):
    for i, (images, labels) in enumerate(mnist_iter):
        images = images.to(device)
        labels = labels.to(device)

        # Forward pass
        outputs = model(images)
        loss = loss_(outputs, labels)

        # --------------------- tensorboard ---------------#
        loss_show.append(loss.item())  # keep a plain float, not the tensor and its graph
        # --------------------- tensorboard ---------------#

        # Backward pass
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if (i + 1) % 100 == 0:
            print(f'Epoch {epoch + 1}/{num_epoches}, Step {i + 1}/{total_step}, {loss.item()}')  # don't forget item()

        if i == 300:  # stop early; a few hundred steps are enough for the demo
            break

#--------------------- tensorboard ---------------#
import tensorboardutil as tb
tb.show(model, loss_show)
#--------------------- tensorboard ---------------#

torch.save(model.state_dict(), 'ResnetCifar10.pt')

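3. Write the tensorboard code

This code goes in tensorboardutil.py, the module that the training script imports as tb.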

from tensorboardX import SummaryWriter

# 定义Summary_Writer

writer = SummaryWriter('./Result') # 数据存放在这个文件夹

def show(model,loss):

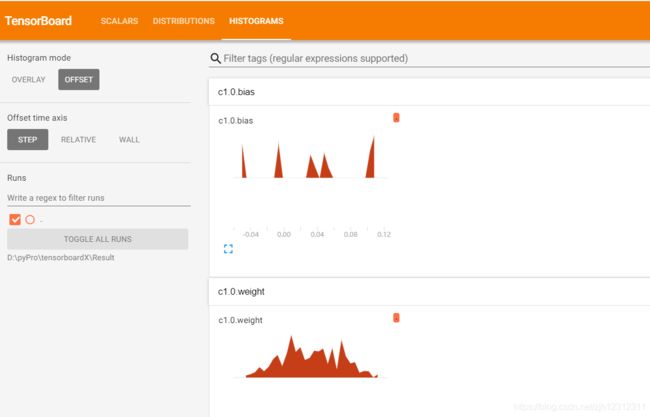

# 显示每个layer的权重

print(model)

for i, (name, param) in enumerate(model.named_parameters()):

if 'bn' not in name:

writer.add_histogram(name, param, 0)

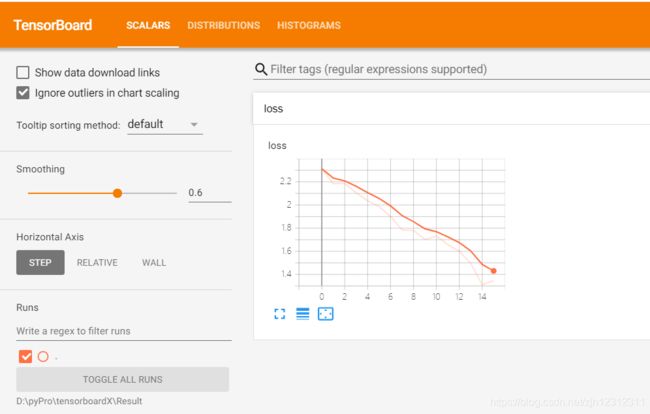

writer.add_scalar('loss', loss[i], i)四、运行

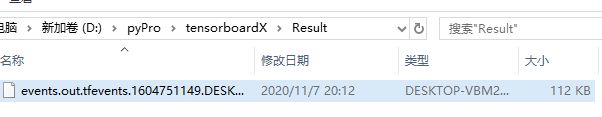

After the run, a new file appears under Result.
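The same check can be done from Python. The sketch below looks for event files in the log directory, assuming the `./Result` folder used above and the `events.out.tfevents.*` file-name prefix that tensorboardX gives its event files. `find_event_files` is a hypothetical helper written for this post.

```python
from pathlib import Path


def find_event_files(logdir):
    """Return the event files found under logdir, sorted by path."""
    root = Path(logdir)
    if not root.is_dir():
        # nothing has been written yet, or the path is wrong
        return []
    # rglob also searches subfolders, in case runs are stored one level down
    return sorted(p for p in root.rglob("events.out.tfevents.*") if p.is_file())


for path in find_event_files("./Result"):
    print(path, path.stat().st_size, "bytes")
```

An empty result usually means the script wrote to a different working directory than expected.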

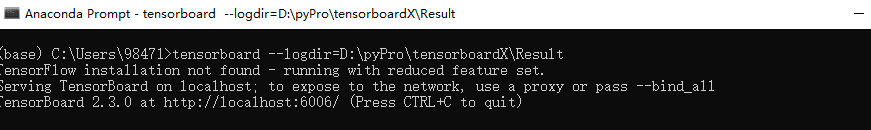

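Open the Anaconda command prompt and enter tensorboard --logdir=D:\pyPro\tensorboardX\Result

(the part after the equals sign is the folder where the data is stored)

Copy the address http://localhost:6006/ into a browser and open it to see the results.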
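If it is more convenient to start TensorBoard from a script than from the prompt, the command can be assembled in Python. This is only a sketch: `tensorboard_command` is a hypothetical helper, the relative `./Result` path assumes the script runs from the project folder, and the actual launch is left commented out because it blocks until TensorBoard is stopped.

```python
import subprocess  # only needed if the launch line below is uncommented


def tensorboard_command(logdir, port=6006):
    """Build the argument list for the tensorboard command-line tool."""
    return ["tensorboard", f"--logdir={logdir}", f"--port={port}"]


cmd = tensorboard_command("./Result")
print(" ".join(cmd))  # tensorboard --logdir=./Result --port=6006

# Uncomment to start the server; the page is then served at http://localhost:6006/
# subprocess.run(cmd, check=True)
```

Passing a different `port` is useful when 6006 is already taken by another TensorBoard instance.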