[Python] Web Scraping 02: The requests Module

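Contents

- I. Overview of requests

- II. Sending GET Requests

  - 1. Sending a GET request with requests

  - 2. The response object

    - 2.1 Fixing garbled Chinese text

    - 2.2 Attributes and methods of the response object

  - 3. Sending a request with custom headers

- III. Cookies

  - 1. Sending a request with headers and cookies

  - 2. The CookieJar object

- IV. Parameters

  - 1. timeout

  - 2. proxy

  - 3. Protocols

  - 4. verify

- V. Sending POST Requests

  - 1. Sending a POST request to implement Kingsoft (iciba) word translation

    - 1.1 Capture traffic to determine the request URL

    - 1.2 Determine the request parameters

    - 1.3 Locate the returned data

    - 1.4 Simulate a browser to fetch the data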

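I. Overview of requests

- An HTTP library for Python, billed in its own tagline as "non-GMO"

- Sends HTTP requests and retrieves the response data

- Install with pip install requests (or pip3 install requests)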

II. Sending GET Requests

1. Sending a GET request with requests

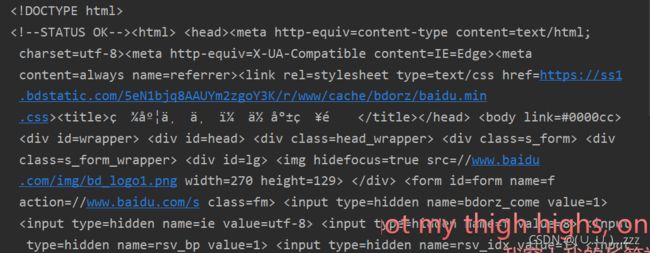

import requests

url = "https://www.baidu.com"

# Send a GET request

response = requests.get(url)

print(response.text)

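2. The response object

- response.text

Type: str

Decoding: requests makes an educated guess at the response encoding based on the HTTP headers and decodes the body with that guessed encoding

- response.content

Type: bytes

Decoding: none applied

2.1 Fixing garbled Chinese text

Decode response.content yourself to fix garbled Chinese text.

response.content.decode() defaults to UTF-8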

import requests

url = "https://www.baidu.com"

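# Send a GET request

response = requests.get(url)

# Setting encoding only changes how response.text is decoded

response.encoding = 'utf8'

# Raw bytes

print(response.content)

# Bytes decoded as UTF-8, the default for decode()

print(response.content.decode())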
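The garbling that section 2.1 works around can be reproduced without any network access. The sketch below is only an illustration: the sample string is an assumption, and ISO-8859-1 stands in for the wrong guess, since that is the encoding requests falls back to for text responses whose Content-Type header names no charset.

```python
# Bytes as they would arrive in response.content (the sample text is an assumption)
raw = "百度一下".encode("utf-8")

# Decoding with the wrong codec does not fail for ISO-8859-1, it just garbles the text
garbled = raw.decode("iso-8859-1")

# Decoding with the codec the server actually used restores the text
correct = raw.decode("utf-8")

print(repr(garbled))
print(correct)

# The damage is reversible as long as the original bytes can be recovered
repaired = garbled.encode("iso-8859-1").decode("utf-8")
print(repaired == correct)
```

This is why decoding response.content explicitly is the more predictable route: it does not depend on what requests inferred from the headers.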

2.2 Attributes and methods of the response object

import requests

url = "https://www.baidu.com"

# Send a GET request

response = requests.get(url)

response.encoding = 'utf8'

# Response URL

print(response.url)

# https://www.baidu.com/

# Status code

print(response.status_code) # 200

# Request headers

print(response.request.headers)

# {'User-Agent': 'python-requests/2.25.1',

# 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*',

# 'Connection': 'keep-alive'}

# Response headers

print(response.headers)

# {'Cache-Control': 'private, no-cache, no-store,

# proxy-revalidate, no-transform', 'Connection': 'keep-alive',

# 'Content-Encoding': 'gzip', 'Content-Type': 'text/html',

# 'Date': 'Mon, 01 Nov 2021 13:51:59 GMT',

# 'Last-Modified': 'Mon, 23 Jan 2017 13:24:18 GMT',

# 'Pragma': 'no-cache', 'Server': 'bfe/1.0.8.18',

# 'Set-Cookie': 'BDORZ=27315; max-age=86400;

# domain=.baidu.com; path=/', 'Transfer-Encoding': 'chunked'}

# Print the cookies set by the response

print(response.cookies)

3. Sending a request with custom headers

requests.get(url,headers=headers)

- The headers parameter accepts the request headers as a dictionary

- Each key is a header field name and each value is that field's value

import requests

url = 'https://www.baidu.com'

# Custom request headers dictionary

headers = {

    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.40'

}

# Send the request as a wolf in sheep's clothing, disguised as a browser

response = requests.get(url, headers=headers)

print(response.request.headers)
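To see how a custom User-Agent replaces the default one without actually sending anything, a request can be prepared through a Session and inspected. This is a minimal sketch: the shortened User-Agent string is a made-up stand-in, and preparing a request by hand is just one way to look at the final headers.

```python
import requests

# Hypothetical, shortened User-Agent string used only for this illustration
custom_headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"}

session = requests.Session()
default_ua = session.headers["User-Agent"]  # looks like "python-requests/<version>"

# Build the request without sending it, so no network access is needed
request = requests.Request("GET", "https://www.baidu.com", headers=custom_headers)
prepared = session.prepare_request(request)

print("default:", default_ua)
print("sent:   ", prepared.headers["User-Agent"])
print("kept:   ", prepared.headers["Accept-Encoding"])
```

Headers passed explicitly override the session defaults with the same name, while the remaining defaults such as Accept-Encoding are kept.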

三、cookie

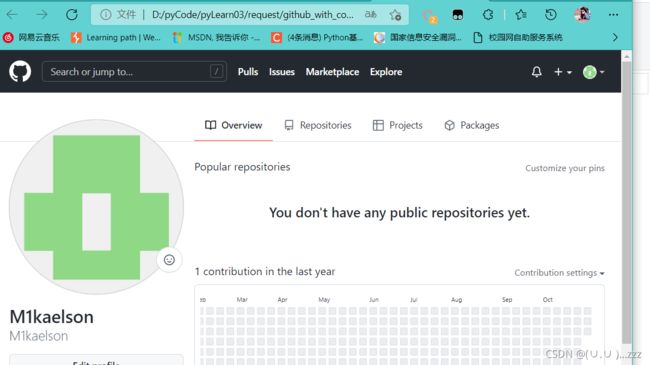

1. requests发送header请求携带cookie

import requests

url = 'https://github.com/M1kaelson'

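headers = {

    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'

}

# Paste the Cookie header string copied from the browser here

temp = ''

# HTTP header values are sent as Latin-1, so re-encode any non-ASCII characters

temp = temp.encode("utf-8").decode("latin-1")

cookie_list = temp.split(';')

cookies = {}

for cookie in cookie_list:

    # cookies is a dictionary mapping each cookie name to its value;

    # partition splits on the first '=' only, so values containing '=' stay intact

    name, _, value = cookie.strip().partition('=')

    if name:

        cookies[name] = value

print(cookies)

response = requests.get(url, headers=headers, cookies=cookies, timeout=60)

with open("github_with_cookies_.html", "wb") as f:

    f.write(response.content)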
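The cookie-string handling above can be isolated into a small helper and checked offline. The sketch below is an illustration under stated assumptions: parse_cookie_header is a hypothetical name, and the sample cookie string is invented. It shows why splitting on only the first '=' matters when a value itself contains '='.

```python
def parse_cookie_header(raw: str) -> dict:
    """Turn a 'name=value; name2=value2' string into a dict."""
    cookies = {}
    for pair in raw.split(";"):
        pair = pair.strip()
        if not pair:
            continue  # skip empty fragments, e.g. from an empty string
        # partition splits on the first '=' only
        name, _, value = pair.partition("=")
        cookies[name] = value
    return cookies


# Invented sample; the 'token' value deliberately contains '=' characters
sample = "user_session=abc123; token=a=b=c; theme=dark"
print(parse_cookie_header(sample))
```

Taking the last piece of pair.split('=') instead would reduce the token value to "c", which is the kind of silent truncation that makes a logged-in request fail.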

2. The CookieJar object

- Build a cookies dictionary

cookies = {"name": "value"}

import requests

url = ''

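headers = {

    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'

}

# Paste the Cookie header string copied from the browser here

temp = ''

cookie_list = temp.split(';')

cookies = {}

for cookie in cookie_list:

    # cookies is a dictionary mapping each cookie name to its value;

    # partition splits on the first '=' only, so values containing '=' stay intact

    name, _, value = cookie.strip().partition('=')

    if name:

        cookies[name] = value

print(cookies)

response = requests.get(url, headers=headers, cookies=cookies)

with open("github_with_cookies_.html", "wb") as f:

    f.write(response.content)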
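Since response.cookies is a CookieJar rather than a plain dictionary, it can help to convert between the two. The following is a minimal sketch using the conversion helpers in requests.utils; the cookie names and values are invented for illustration.

```python
import requests

# Hypothetical cookie names and values, for illustration only
cookie_dict = {"name": "value", "theme": "dark"}

# dict -> RequestsCookieJar
jar = requests.utils.cookiejar_from_dict(cookie_dict)

# RequestsCookieJar -> dict (the same call works on response.cookies)
round_trip = requests.utils.dict_from_cookiejar(jar)

print(type(jar).__name__)
print(round_trip)
```

In a real script, requests.utils.dict_from_cookiejar(response.cookies) would give the cookies set by the server as an ordinary dictionary.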

IV. Parameters

1. timeout

response = requests.get(url, timeout=3)  # wait at most 3 seconds for the server to respond
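When the timeout expires, requests raises an exception rather than returning a response, so a scraper usually wraps the call. The sketch below is one possible shape, not a prescribed pattern: fetch_text is a hypothetical helper, and its get parameter exists only so the function can be exercised without a network connection.

```python
import requests


def fetch_text(url, timeout=3, get=requests.get):
    """Return the response body as text, or None if the request fails.

    `get` is injectable only so the function can be tested offline.
    """
    try:
        response = get(url, timeout=timeout)
    except requests.exceptions.Timeout:
        print(f"No response from {url} within {timeout} seconds")
        return None
    except requests.exceptions.RequestException as exc:
        print(f"Request to {url} failed: {exc}")
        return None
    return response.text
```

A call such as fetch_text("https://www.baidu.com", timeout=3) then yields either the page text or None. The timeout argument may also be a (connect, read) tuple when the two phases need different limits.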

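2. proxy

- Forward proxy

- Reverse proxy (for example nginx): the browser does not know the server's real address

3. Protocols

- http, https

- socks

A SOCKS proxy simply relays data packets and does not care about the application-layer protocol

A SOCKS proxy typically takes less time than an HTTP or HTTPS proxy

A SOCKS proxy can forward HTTP and HTTPS requests

response = requests.get(url, proxies=proxies)

# proxies takes the form of a dictionary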

import requests

url = 'http://www.google.com'

proxies = {

    'http': 'http://ip:port'

}

response = requests.get(url,proxies=proxies)

print(response.text)
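The keys of the proxies dictionary are matched against the scheme of the requested URL, so a dictionary with only an 'http' entry leaves https URLs unproxied. The sketch below illustrates this with requests.utils.select_proxy, a helper the library uses for this lookup; the proxy address is a placeholder assumption, and proxy settings picked up from environment variables are ignored here.

```python
import requests

# Placeholder proxy address; replace with a real proxy's host and port
proxies = {"http": "http://127.0.0.1:8080"}

for url in ("http://www.google.com", "https://www.google.com"):
    chosen = requests.utils.select_proxy(url, proxies)
    print(url, "->", chosen)

# Covering both schemes sends HTTPS traffic through the proxy as well
proxies_both = {
    "http": "http://127.0.0.1:8080",
    "https": "http://127.0.0.1:8080",
}
print(requests.utils.select_proxy("https://www.google.com", proxies_both))
```

A SOCKS proxy is written the same way with a socks5:// URL as the value, which as far as I know requires installing the optional extra with pip install requests[socks].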

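4. verify

- Use verify to skip CA certificate verification

- To make the request succeed from code when certificate verification would fail, pass verify=False; requests then sends the request without verifying the CA certificate

response = requests.get(url, verify=False)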

V. Sending POST Requests

response = requests.post(url, data=data)

- The data parameter accepts a dictionary

- The other parameters are the same as for get
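What a data dictionary turns into on the wire can be inspected by preparing a POST request without sending it. This is a minimal sketch; the endpoint URL and the form values are placeholders, not the real translation service.

```python
import requests

# Placeholder endpoint and form fields, for illustration only
url = "http://example.com/translate"
data = {"from": "zh", "to": "en", "q": "hello"}

# Build the request without sending it, so no network access is needed
prepared = requests.Request("POST", url, data=data).prepare()

print(prepared.method)
print(prepared.headers["Content-Type"])
print(prepared.body)
```

The dictionary is form-encoded into the request body, which is the same format a browser uses when submitting an HTML form.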

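1. Sending a POST request to implement Kingsoft (iciba) word translation

# url

# headers

# data dictionary

# Send the request and get the response

# Parse the data

1.1 Capture traffic to determine the request URL

1.2 Determine the request parameters

1.3 Locate the returned data

1.4 Simulate a browser to fetch the data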

# coding: utf-8

import requests

import json

class King(object):

    def __init__(self, word):

        # url

        self.url = "http://ifanyi.iciba.com/index.php?c=trans&m=fy&client=6&auth_user=key_ciba&sign=37218aa29f55fdcc"

        # headers

        self.headers = {

            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'

        }

        # data

        self.data = {

            "from": "zh",

            "to": "en",

            "q": word

        }

    def get_data(self):

        # Send the POST request and decode the \uXXXX escapes in the body

        response = requests.post(self.url, data=self.data, headers=self.headers)

        return response.content.decode('unicode-escape')

    def run(self):

        response = self.get_data()

        print(response)

if __name__ == '__main__':

    # Use a lowercase name so the instance does not shadow the King class

    king = King('字典')

    king.run()

# with open("fanyi.html", "wb")as f:

# f.write(Youdao.run())
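The script imports json but decodes the body with 'unicode-escape'. As an alternative for the data-parsing step, the body can be parsed as JSON, which resolves the \uXXXX escapes by itself. The sketch below is an illustration only: the payload and its "out" field are hypothetical, and the real service's JSON layout may differ.

```python
import json

# Hypothetical response body; the real service's JSON layout may differ
raw = b'{"out": "\\u5b57\\u5178"}'

# json.loads accepts bytes and resolves \uXXXX escapes on its own
parsed = json.loads(raw)
print(parsed["out"])

# unicode-escape treats every non-escape byte as Latin-1, so any real
# UTF-8 text in a body gets garbled by it
utf8_body = "café".encode("utf-8")
print(utf8_body.decode("unicode-escape"))
print(utf8_body.decode("utf-8"))
```

With a live response, response.json() performs the same parsing and returns a dictionary whose fields can then be read directly.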