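Face Detection on Android Is Not Complicated

1. Introduction

Last week we tried out libfacedetection, the open-source library Shiqi Yu shared with developers, in CLion on Windows 11. It was fast and detected faces well.

With no overtime today, and in the spirit of learning by doing, we port it to the Android platform.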

2. Environment

- Android Studio 2020.3.1
- OpenCV 4.5.4
- CMake 3.10.2
- libfacedetection source code (https://github.com/ShiqiYu/libfacedetection)

3. Hands-on

Goal: detect faces in a still image on Android, building directly from the libfacedetection source.

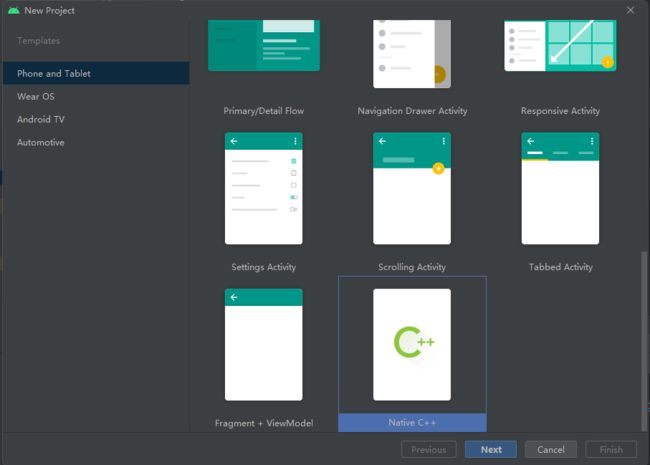

3.1 Create the project

File -> New -> New Project -> Native C++

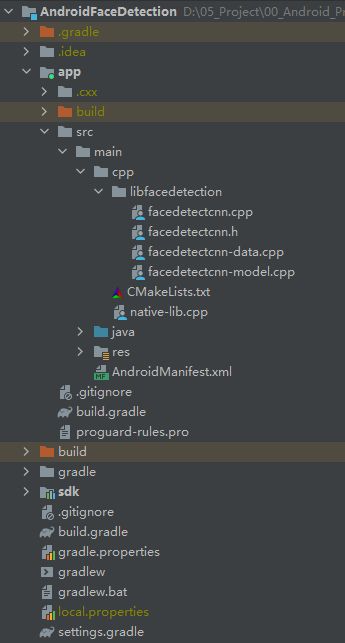

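3.2 Import the libfacedetection source into the cpp directory

Create a folder named libfacedetection under the cpp directory and copy the four files from the library's src directory into it.

To avoid compile errors and improve runtime performance, make the following adjustments: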

| File | Change |
|---|---|
| facedetectcnn.h | Comment out #include "facedetection_export.h" |
| facedetectcnn.h | Remove FACEDETECTION_EXPORT |
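As an alternative to deleting FACEDETECTION_EXPORT from each declaration, the macro can be defined as empty so the declarations compile unchanged. The sketch below is only an illustration of that idea: `demo_detect` is a hypothetical function, not part of libfacedetection, and the assumption is that nothing else in the build defines the macro.

```cpp
// Stand-in for the missing facedetection_export.h:
// the export macro expands to nothing, so declarations that use it still compile.
#ifndef FACEDETECTION_EXPORT
#define FACEDETECTION_EXPORT
#endif

// Hypothetical declaration for illustration only; not the library's API.
FACEDETECTION_EXPORT int demo_detect(int width, int height);

// Trivial body so the sketch links: reports 1 for a non-empty image, 0 otherwise.
int demo_detect(int width, int height) {
    return (width > 0 && height > 0) ? 1 : 0;
}
```

Either approach has the same effect for this project, because the library sources are compiled straight into the app's own shared library and no separate export annotation is needed.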

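3.3 Import OpenCV-android-sdk

Download: https://sourceforge.net/projects/opencvlibrary/files/4.5.4/opencv-4.5.4-android-sdk.zip/download

After unzipping, import the folder OpenCV-android-sdk\sdk into the project we just created as a Module. An earlier article covered an Android Studio bug that affects this step; if the import fails, follow the workaround described there. Once the import is done, the directory structure should look roughly as follows: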

3.3 Modify CMakeLists.txt

3.3.1 Add build options

option(ENABLE_OPENMP "use openmp" ON)

option(ENABLE_INT8 "use int8" ON)

option(ENABLE_AVX2 "use avx2" OFF)

option(ENABLE_AVX512 "use avx512" OFF)

option(ENABLE_NEON "whether use neon, if use arm please set it on" ON)

option(DEMO "build the demo" OFF)

add_definitions("-O3")

if(ENABLE_OPENMP)

message("using openmp")

add_definitions(-D_OPENMP)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${OpenMP_CXX_FLAGS}")

set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} ${OpenMP_EXE_LINKER_FLAGS}")

endif()

if(ENABLE_INT8)

message("using int8")

add_definitions(-D_ENABLE_INT8)

endif()

IF(CMAKE_CXX_COMPILER_ID STREQUAL "GNU"

OR CMAKE_CXX_COMPILER_ID STREQUAL "Clang")

message("use -O3 to speedup")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -O3")

ENDIF()

if(ENABLE_AVX512)

add_definitions(-D_ENABLE_AVX512)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -mavx512bw")

endif()

if(ENABLE_AVX2)

add_definitions(-D_ENABLE_AVX2)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -mavx2 -mfma")

endif()

if(ENABLE_NEON)

message("Using NEON")

add_definitions(-D_ENABLE_NEON)

add_definitions(-DANDROID_ARM_NEON=ON)

endif()
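Each `add_definitions(-D...)` call above turns a CMake option into a preprocessor macro that the C++ sources can test with `#if defined(...)`. The following is a minimal sketch, not code from the library: `enabledFeatures` is a hypothetical helper that reports which of these macros were set for the current build, which can be handy for confirming that the options took effect.

```cpp
#include <string>
#include <vector>

// Returns the names of the feature macros that were defined at compile time.
// The macro names are the ones passed via add_definitions in the CMake options above.
std::vector<std::string> enabledFeatures() {
    std::vector<std::string> features;
#if defined(_OPENMP)
    features.push_back("_OPENMP");
#endif
#if defined(_ENABLE_INT8)
    features.push_back("_ENABLE_INT8");
#endif
#if defined(_ENABLE_NEON)
    features.push_back("_ENABLE_NEON");
#endif
#if defined(_ENABLE_AVX2)
    features.push_back("_ENABLE_AVX2");
#endif
#if defined(_ENABLE_AVX512)
    features.push_back("_ENABLE_AVX512");
#endif
    return features;
}
```

Logging the returned list once at startup would show, for example, whether the NEON and INT8 paths were actually compiled in for the ABI being built.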

3.3.2 Configure headers, source files, and OpenCV

set(opencv_libs ${PROJECT_SOURCE_DIR}/../../../../sdk/native/libs)

set(opencv_includes ${PROJECT_SOURCE_DIR}/../../../../sdk/native/jni/include)

include_directories(${PROJECT_SOURCE_DIR}/include)

include_directories(${opencv_includes})

add_library(libopencv_java4 SHARED IMPORTED)

set_target_properties(libopencv_java4 PROPERTIES

IMPORTED_LOCATION "${opencv_libs}/${ANDROID_ABI}/libopencv_java4.so")

file(GLOB_RECURSE face_source_files ${PROJECT_SOURCE_DIR}/libfacedetection/*.cpp)

message("${face_source_files}")

3.3.3 Build target

add_library( # Sets the name of the library.

detection

# Sets the library as a shared library.

SHARED

# Provides a relative path to your source file(s).

native-lib.cpp

${face_source_files})

3.3.4 Linking

target_link_libraries( # Specifies the target library.

detection

# Links the target library to the log library

# included in the NDK.

${log-lib}

libopencv_java4)

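3.4 Write the example

The code below is adapted, with minor changes, from the sample in the mobile/Android directory of the source tree, and is provided for reference.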

3.4.1 native-lib.cpp

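// Headers inferred from the APIs used below (JNI, fprintf/malloc, Android logging, OpenCV).

#include <jni.h>

#include <cstdio>

#include <cstdlib>

#include <android/log.h>

#include "libfacedetection/facedetectcnn.h"

#include <opencv2/opencv.hpp>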

//define the buffer size. Do not change the size!

#define DETECT_BUFFER_SIZE 0x20000

using namespace cv;

static const char *JNITag = "facedetection-jni";

extern "C" JNIEXPORT jobjectArray JNICALL

Java_tech_kicky_face_detection_MainActivity_facedetect(JNIEnv *env,jobject /* this */,jlong matAddr)

{

jobjectArray faceArgs = nullptr;

Mat& img = *(Mat*)matAddr;

Mat bgr = img.clone();

cvtColor(img, bgr, COLOR_RGBA2BGR);

__android_log_print(ANDROID_LOG_ERROR, JNITag,"convert RGBA to BGR");

//bail out if the RGBA-to-BGR conversion produced no image

if(bgr.empty())

{

__android_log_print(ANDROID_LOG_ERROR, JNITag, "Can not convert image");

return faceArgs;

}

int * pResults = NULL;

//pBuffer is used in the detection functions.

//If you call functions in multiple threads, please create one buffer for each thread!

unsigned char * pBuffer = (unsigned char *)malloc(DETECT_BUFFER_SIZE);

if(!pBuffer)

{

fprintf(stderr, "Can not alloc buffer.\n");

return faceArgs;

}

///

// CNN face detection

// Best detection rate

//

//!!! The input image must be a BGR one (three-channel)

//!!! DO NOT RELEASE pResults !!!

pResults = facedetect_cnn(pBuffer, (unsigned char*)(bgr.ptr(0)), bgr.cols, bgr.rows, (int)bgr.step);

int numOfFaces = pResults ? *pResults : 0;

__android_log_print(ANDROID_LOG_ERROR, JNITag,"%d faces detected.\n", numOfFaces);

/**

* Look up the Face class, its constructor, and its field IDs

*/

jclass faceClass = env->FindClass("tech/kicky/face/detection/Face");//Face class

jmethodID faceClassInitID = (env)->GetMethodID(faceClass, "<init>", "()V");//no-arg constructor

jfieldID faceConfidence = env->GetFieldID(faceClass, "faceConfidence", "I");//int field faceConfidence

jfieldID faceLandmarks = env->GetFieldID(faceClass, "faceLandmarks", "[Lorg/opencv/core/Point;");//Point[] field faceLandmarks

jfieldID faceRect = env->GetFieldID(faceClass, "faceRect","Lorg/opencv/core/Rect;");//Rect field faceRect

/**

* Look up the Rect class, its constructor, and its field IDs

*/

jclass rectClass = env->FindClass("org/opencv/core/Rect");//Rect class

jmethodID rectClassInitID = (env)->GetMethodID(rectClass, "<init>", "()V");//no-arg constructor

jfieldID rect_x = env->GetFieldID(rectClass, "x", "I");//int field x

jfieldID rect_y = env->GetFieldID(rectClass, "y", "I");//int field y

jfieldID rect_width = env->GetFieldID(rectClass, "width", "I");//int field width

jfieldID rect_height = env->GetFieldID(rectClass, "height", "I");//int field height

/**

* Look up the Point class, its constructor, and its field IDs

*/

jclass pointClass = env->FindClass("org/opencv/core/Point");//Point class

jmethodID pointClassInitID = (env)->GetMethodID(pointClass, "<init>", "()V");//no-arg constructor

jfieldID point_x = env->GetFieldID(pointClass, "x", "D");//double field x

jfieldID point_y = env->GetFieldID(pointClass, "y", "D");//double field y

faceArgs = (env)->NewObjectArray(numOfFaces, faceClass, 0);

//print the detection results

for(int i = 0; i < (pResults ? *pResults : 0); i++)

{

short * p = ((short*)(pResults+1))+142*i;

int confidence = p[0];

int x = p[1];

int y = p[2];

int w = p[3];

int h = p[4];

__android_log_print(ANDROID_LOG_ERROR, JNITag,"face %d rect=[%d, %d, %d, %d], confidence=%d\n",i,x,y,w,h,confidence);

jobject newFace = (env)->NewObject(faceClass, faceClassInitID);

jobject newRect = (env)->NewObject(rectClass, rectClassInitID);

(env)->SetIntField(newRect, rect_x, x);

(env)->SetIntField(newRect, rect_y, y);

(env)->SetIntField(newRect, rect_width, w);

(env)->SetIntField(newRect, rect_height, h);

(env)->SetObjectField(newFace,faceRect,newRect);

env->DeleteLocalRef(newRect);

jobjectArray newPoints = (env)->NewObjectArray(5, pointClass, 0);

for (int j = 5; j < 14; j += 2){

int p_x = p[j];

int p_y = p[j+1];

jobject newPoint = (env)->NewObject(pointClass, pointClassInitID);

(env)->SetDoubleField(newPoint, point_x, (double)p_x);

(env)->SetDoubleField(newPoint, point_y, (double)p_y);

int index = (j-5)/2;

(env)->SetObjectArrayElement(newPoints, index, newPoint);

env->DeleteLocalRef(newPoint);

__android_log_print(ANDROID_LOG_ERROR, JNITag,"landmark %d =[%f, %f]\n",index,(double)p_x,(double)p_y);

}

(env)->SetObjectField(newFace,faceLandmarks,newPoints);

env->DeleteLocalRef(newPoints);

(env)->SetIntField(newFace,faceConfidence,confidence);

(env)->SetObjectArrayElement(faceArgs, i, newFace);

env->DeleteLocalRef(newFace);

}

//release the buffer

free(pBuffer);

return faceArgs;

}
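The result layout that the loop above walks through can be isolated from the JNI calls. The sketch below decodes the same buffer into plain structs, assuming the layout used in this article's code: an `int` face count followed by 142 `short` values per face, of which the first 15 are confidence, x, y, width, height, and five landmark pairs. `DetectedFace`, `Landmark`, and `decodeFaces` are hypothetical names introduced for illustration; the stride may differ in other versions of the library.

```cpp
#include <array>
#include <cstddef>
#include <cstring>
#include <vector>

struct Landmark { int x; int y; };

struct DetectedFace {
    int confidence;
    int x, y, width, height;
    std::array<Landmark, 5> landmarks;
};

// Stride taken from this article's native-lib.cpp (142 shorts per face).
constexpr std::size_t kShortsPerFace = 142;
// Only the first 15 shorts of each record are read: 5 box values + 5 (x, y) pairs.
constexpr std::size_t kShortsUsed = 15;

// Decodes the buffer returned by the detector into a vector of faces.
// Returns an empty vector when results is null or reports no faces.
std::vector<DetectedFace> decodeFaces(const int* results) {
    std::vector<DetectedFace> faces;
    if (results == nullptr) return faces;

    const int count = results[0];
    const unsigned char* bytes = reinterpret_cast<const unsigned char*>(results + 1);

    for (int i = 0; i < count; ++i) {
        short p[kShortsUsed];
        // memcpy avoids reading the int buffer through a short pointer.
        std::memcpy(p, bytes + static_cast<std::size_t>(i) * kShortsPerFace * sizeof(short), sizeof(p));

        DetectedFace face{};
        face.confidence = p[0];
        face.x = p[1];
        face.y = p[2];
        face.width = p[3];
        face.height = p[4];
        for (std::size_t k = 0; k < face.landmarks.size(); ++k) {
            face.landmarks[k] = Landmark{p[5 + 2 * k], p[6 + 2 * k]};
        }
        faces.push_back(face);
    }
    return faces;
}
```

Separating the decoding from the JNI object construction keeps the pointer arithmetic in one place and makes it possible to exercise it with a synthetic buffer on a desktop machine.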

3.4.2 MainActivity.kt

package tech.kicky.face.detection

import android.graphics.BitmapFactory

import androidx.appcompat.app.AppCompatActivity

import android.os.Bundle

import android.widget.TextView

import org.opencv.android.Utils

import org.opencv.core.MatOfRect

import org.opencv.core.Point

import org.opencv.core.Scalar

import org.opencv.imgproc.Imgproc

import org.opencv.imgproc.Imgproc.FONT_HERSHEY_SIMPLEX

import tech.kicky.face.detection.databinding.ActivityMainBinding

class MainActivity : AppCompatActivity() {

private lateinit var binding: ActivityMainBinding

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

binding = ActivityMainBinding.inflate(layoutInflater)

setContentView(binding.root)

testFaceDetect()

}

private fun testFaceDetect() {

val bmp = BitmapFactory.decodeResource(resources, R.drawable.basketball) ?: return

var str = "image size = ${bmp.width}x${bmp.height}\n"

binding.imageView.setImageBitmap(bmp)

val mat = MatOfRect()

val bmp2 = bmp.copy(bmp.config, true)

Utils.bitmapToMat(bmp, mat)

val FACE_RECT_COLOR = Scalar(255.0, 0.0, 0.0, 255.0)

val FACE_RECT_THICKNESS = 3

val TEXT_SIZE = 2.0

val startTime = System.currentTimeMillis()

val facesArray = facedetect(mat.nativeObjAddr)

str += "detectTime = ${System.currentTimeMillis() - startTime}ms\n"

for (face in facesArray) {

val text_pos = Point(

face.faceRect.x.toDouble() - FACE_RECT_THICKNESS,

face.faceRect.y - FACE_RECT_THICKNESS.toDouble()

)

Imgproc.putText(

mat,

face.faceConfidence.toString(),

text_pos,

FONT_HERSHEY_SIMPLEX,

TEXT_SIZE,

FACE_RECT_COLOR

)

Imgproc.rectangle(mat, face.faceRect, FACE_RECT_COLOR, FACE_RECT_THICKNESS)

for (landmark in face.faceLandmarks) {

Imgproc.circle(

mat,

landmark,

FACE_RECT_THICKNESS,

FACE_RECT_COLOR,

-1,

Imgproc.LINE_AA

)

}

}

str += "face num = ${facesArray.size}\n"

Utils.matToBitmap(mat, bmp2)

binding.imageView.setImageBitmap(bmp2)

binding.textView.text = str

}

/**

* A native method that is implemented by the 'detection' native library,

* which is packaged with this application.

*/

external fun facedetect(matAddr: Long): Array<Face>

companion object {

// Used to load the 'detection' library on application startup.

init {

System.loadLibrary("detection")

}

}

}
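The `external fun facedetect` declaration is bound to the C++ function `Java_tech_kicky_face_detection_MainActivity_facedetect` purely by name. The sketch below shows how that name is derived for plain ASCII identifiers under the JNI naming rules; `jniMangle` and `jniFunctionName` are hypothetical helpers, and the less common escapes (for `;`, `[`, and non-ASCII characters) are deliberately left out.

```cpp
#include <string>

// Escapes one name for use in a JNI function name (ASCII subset only):
// '.' and '/' become '_', and a literal '_' becomes "_1".
std::string jniMangle(const std::string& name) {
    std::string out;
    for (char c : name) {
        if (c == '.' || c == '/') {
            out += '_';
        } else if (c == '_') {
            out += "_1";
        } else {
            out += c;
        }
    }
    return out;
}

// Builds the native symbol name for a method of a fully qualified class.
std::string jniFunctionName(const std::string& className, const std::string& method) {
    return "Java_" + jniMangle(className) + "_" + jniMangle(method);
}
```

Calling `jniFunctionName("tech.kicky.face.detection.MainActivity", "facedetect")` yields the symbol used in native-lib.cpp, so renaming the package, the activity, or the method on the Kotlin side requires renaming the C++ function to match.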

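3.5 Result

4. Summary

On a single image, both speed and detection quality are broadly in line with the official figures: detection is fast and the results are good.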

| Method | Time (single-thread) | FPS (single-thread) | Time (multi-thread) | FPS (multi-thread) |
|---|---|---|---|---|
| cnn (CPU, 640x480) | 492.99ms | 2.03 | 149.66ms | 6.68 |
| cnn (CPU, 320x240) | 116.43ms | 8.59 | 34.19ms | 29.25 |
| cnn (CPU, 160x120) | 27.91ms | 35.83 | 8.43ms | 118.64 |
| cnn (CPU, 128x96) | 17.94ms | 55.74 | 5.24ms | 190.82 |