ELK Log Collection and Analysis System: rsyslog+filebeat+logstash+es+kibana

rsyslog+filebeat+logstash+es+kibana

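The rsyslog server collects system logs from the clients; filebeat reads the logs of all machines gathered on the rsyslog server and ships them to logstash; logstash stores the events in the es cluster; and kibana visualizes the data held in es.

The lab uses four machines: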

192.168.200.130 es cluster + kibana

192.168.200.131 rsyslog server + filebeat

192.168.200.132 logstash

192.168.200.133 rsyslog client

Configuring rsyslog

First, configure the rsyslog server

[root@localhost ~]# hostnamectl set-hostname rsyslog-beat

[root@rsyslog-beat ~]# vim /etc/rsyslog.conf

# Uncomment these two lines

$ModLoad imudp

$UDPServerRun 514

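# Add these two lines under the MODULES section so that every entry collected by the rsyslog server is tagged with the IP of the machine it came from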

#### MODULES ####

$template myFormat,"%timestamp% %fromhost-ip% %syslogtag% %msg%\n"

$ActionFileDefaultTemplate myFormat

# Restart the service after editing

[root@rsyslog-beat ~]# systemctl restart rsyslog

Configuring the rsyslog client

[root@localhost ~]# hostnamectl set-hostname rsyslog-client

[root@rsyslog-client ~]# vim /etc/rsyslog.conf

# Uncomment these two lines

$ModLoad imudp

$UDPServerRun 514

# Add this line (the IP after @ is the rsyslog server's IP; a single @ forwards over UDP)

*.info;mail.none;authpriv.none;cron.none @192.168.200.131
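
The forwarding rule follows a fixed pattern: a selector, then `@host` for UDP or `@@host` for TCP, optionally followed by `:port`. A minimal sketch that prints such a rule; the function name `forward_rule` is made up for illustration and the selector is the one used above:

```shell
#!/bin/sh
# Print an rsyslog forwarding rule for the selector used in this walkthrough.
# "@" forwards over UDP, "@@" forwards over TCP.
forward_rule() {
    host=$1
    port=$2
    proto=$3
    case "$proto" in
        tcp) prefix='@@' ;;
        *)   prefix='@' ;;
    esac
    printf '*.info;mail.none;authpriv.none;cron.none %s%s:%s\n' "$prefix" "$host" "$port"
}

# Example: forward_rule 192.168.200.131 514 udp
```

Writing the port explicitly makes it easier to move away from 514 later if the receiver cannot bind a privileged port.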

# Restart the service after editing

[root@rsyslog-client ~]# systemctl restart rsyslog

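Testing

On the rsyslog client, install Apache (httpd), then start and stop it a few times and check whether the rsyslog server receives the entries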

[root@rsyslog-client ~]# systemctl start httpd

[root@rsyslog-client ~]# systemctl stop httpd

[root@rsyslog-client ~]# systemctl start httpd

[root@rsyslog-client ~]# systemctl stop httpd

On the rsyslog server, look at /var/log/messages

[root@rsyslog-beat ~]# vim /var/log/messages

Jul 23 20:37:18 192.168.200.133 systemd: Starting The Apache HTTP Server...

Jul 23 20:37:27 192.168.200.133 httpd: AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using 220.250.64.225. Set the 'ServerName' directive globally to suppress this message

Jul 23 20:37:29 192.168.200.133 systemd: Started The Apache HTTP Server.

Jul 23 20:37:33 192.168.200.133 systemd: Stopping The Apache HTTP Server...

Jul 23 20:37:34 192.168.200.133 systemd: Stopped The Apache HTTP Server.

Jul 23 20:37:35 192.168.200.133 systemd: Starting The Apache HTTP Server...

Jul 23 20:37:35 192.168.200.133 httpd: AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using fe80::75f9:2d8:9831:f135. Set the 'ServerName' directive globally to suppress this message

Jul 23 20:37:39 192.168.200.133 systemd: Started The Apache HTTP Server.

Jul 23 20:37:41 192.168.200.133 systemd: Stopping The Apache HTTP Server...

Jul 23 20:37:42 192.168.200.133 systemd: Stopped The Apache HTTP Server.

The system logs generated by the client have been collected by the server, and each entry is tagged with the client's IP
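
Because the template places `%fromhost-ip%` right after the three-token timestamp, the sender address is the fourth whitespace-separated field of every line. A small sketch, assuming that layout, that counts entries per source IP; the function name `count_by_source_ip` is hypothetical:

```shell
#!/bin/sh
# Count log lines per source IP.
# Assumes the myFormat layout "Mon DD HH:MM:SS <ip> <tag> <msg>",
# so the IP is the fourth whitespace-separated field.
count_by_source_ip() {
    awk '{ count[$4]++ } END { for (ip in count) print ip, count[ip] }' | sort
}

# Example: count_by_source_ip < /var/log/messages
```

This is a quick way to confirm that every expected client is actually reporting before wiring up filebeat.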

Installing filebeat

[root@rsyslog-beat ~]# tar -xf filebeat-7.8.0-linux-x86_64.tar.gz -C /usr/local/

[root@rsyslog-beat ~]# cd /usr/local

[root@rsyslog-beat local]# ln -s filebeat-7.8.0-linux-x86_64 filebeat

Set up the systemd service

[root@rsyslog-beat ~]# vim /etc/systemd/system/filebeat.service

[Unit]

Description=filebeat server daemon

Documentation= /usr/local/filebeat/filebeat -help

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Environment="BEAT_CONFIG_OPTS=-c /usr/local/filebeat/filebeat.yml"

ExecStart= /usr/local/filebeat/filebeat $BEAT_CONFIG_OPTS

Restart=always

[Install]

WantedBy=multi-user.target

[root@rsyslog-beat ~]# systemctl daemon-reload

Editing the filebeat configuration

Collect the data in /var/log/messages and send it to logstash

[root@rsyslog-beat ~]# vim /usr/local/filebeat/filebeat.yml

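# Excerpt: only the changed keys are shown; the parent keys come from the stock filebeat.yml

filebeat.inputs:

- type: log

  enabled: true

  paths:

    - /var/log/messages

filebeat.config.modules:

  reload.enabled: true

# ------------------------------ Logstash Output -------------------------------

# Comment out the default output.elasticsearch section; Filebeat accepts only one enabled output

output.logstash:

  # The Logstash hosts

  hosts: ["192.168.200.132:5044"]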

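Configuring an ES cluster with multiple instances on a single host

First disable the firewall and SELinux and set the hostname (do this on both the es and logstash machines)

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# setenforce 0

[root@localhost ~]# hostnamectl set-hostname es        # on the es machine

[root@localhost ~]# hostnamectl set-hostname logstash  # on the logstash machine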

Setting up the Java environment

[root@es ~]# tar xf jdk-13.0.2_linux-x64_bin.tar.gz -C /usr/local/

[root@es ~]# cd /usr/local/

[root@es local]# ln -s /usr/local/jdk-13.0.2/ /usr/local/java

[root@es local]# vim /etc/profile

export JAVA_HOME=/usr/local/java

export PATH=$JAVA_HOME/bin:$PATH

[root@es local]# source /etc/profile

Raising the file and process limits

[root@es ~]# cat >>/etc/security/limits.conf <<-EOF

# Add the following

* soft nofile 65536

* hard nofile 65536

* soft nproc 32000

* hard nproc 32000

elk soft memlock unlimited

elk hard memlock unlimited

EOF

Configure resource limits for systemd services

[root@es ~]# cat >>/etc/systemd/system.conf<<-EOF

DefaultLimitNOFILE=65536

DefaultLimitNPROC=32000

DefaultLimitMEMLOCK=infinity

EOF

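# nproc: the OS-level limit on the number of processes each user may create

# nofile: the limit on the number of files each process may have open

Tuning virtual memory and the system-wide open file limit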

[root@es ~]# cat >>/etc/sysctl.conf<<-EOF

# Added settings

vm.max_map_count=655360

fs.file-max=655360

vm.swappiness=0

EOF
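
After the reboot it is worth confirming that the kernel setting took effect. Elasticsearch's bootstrap checks document a minimum of 262144 for `vm.max_map_count`, which the 655360 set above comfortably exceeds. A minimal sketch of such a check; the function names are illustrative, not part of any tool:

```shell
#!/bin/sh
# Succeed when a current value meets a required minimum.
meets_minimum() {
    current=$1
    required=$2
    [ "$current" -ge "$required" ]
}

# Report whether vm.max_map_count satisfies the documented
# Elasticsearch minimum of 262144.
check_max_map_count() {
    current=$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)
    if meets_minimum "$current" 262144; then
        echo "vm.max_map_count=$current OK"
    else
        echo "vm.max_map_count=$current is too low"
        return 1
    fi
}

# Example: check_max_map_count
```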

Give the elk user passwordless sudo

[root@es ~]# useradd elk

[root@es ~]# visudo

elk ALL=(ALL) NOPASSWD: ALL

Reboot

[root@es ~]# reboot

Installing Elasticsearch

Creating the ELK runtime environment

# Switch to the elk user

[root@es ~]# su - elk

# Create the elkapp directory (ownership is set below)

[elk@es ~]$ sudo mkdir /usr/local/elkapp

# Create the ELK data directory

[elk@es ~]$ sudo mkdir /usr/local/elkdata

# Create the Elasticsearch home directory

[elk@es ~]$ sudo mkdir -p /usr/local/elkdata/es

# Create the Elasticsearch data directory

[elk@es ~]$ sudo mkdir -p /usr/local/elkdata/es/data

# Create the Elasticsearch logs directory

[elk@es ~]$ sudo mkdir -p /usr/local/elkdata/es/logs

# Set directory ownership

[elk@es ~]$ sudo chown -R elk:elk /usr/local/elkapp

[elk@es ~]$ sudo chown -R elk:elk /usr/local/elkdata

Unpacking Elasticsearch

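[elk@es ~]$ sudo tar -xf elasticsearch-7.8.0-linux-x86_64.tar.gz -C /usr/local/elkapp

[elk@es ~]$ cd /usr/local/elkapp

[elk@es elkapp]$ sudo ln -s /usr/local/elkapp/elasticsearch-7.8.0/ /usr/local/elkapp/elasticsearch

Make two more copies of the extracted directory (copy the real directory; cp -r on the symlink would only duplicate the link), then hand everything to the elk user

[elk@es elkapp]$ sudo cp -r /usr/local/elkapp/elasticsearch-7.8.0 /usr/local/elkapp/elasticsearch-node1

[elk@es elkapp]$ sudo cp -r /usr/local/elkapp/elasticsearch-7.8.0 /usr/local/elkapp/elasticsearch-node2

[elk@es elkapp]$ sudo chown -R elk:elk /usr/local/elkapp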

Configuring Elasticsearch

Configuring the master node

[elk@es ~]$ cd /usr/local/elkapp/elasticsearch/config/

[elk@es config]$ sudo mv elasticsearch.yml{,.bak}

[elk@es config]$ sudo vim elasticsearch.yml

cluster.name: my-es

node.name: es-master

node.master: true

node.data: true

bootstrap.memory_lock: true

path.data: /usr/local/elkdata/es/data

path.logs: /usr/local/elkdata/es/logs

network.host: 192.168.200.130

http.port: 9200

transport.tcp.port: 9300

discovery.seed_hosts: ["192.168.200.130:9300", "192.168.200.130:9301","192.168.200.130:9302"]

cluster.initial_master_nodes: ["192.168.200.130:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

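node.max_local_storage_nodes: 3 # Caps how many ES nodes on this host may share the same data path. Several instances run here, so it must be at least the number of instances (3)

discovery.zen.minimum_master_nodes: 3 # Pre-7.x quorum setting (how many master-eligible nodes must be visible). Elasticsearch 7.x accepts it but ignores it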

Configuring data node 1

[elk@es config]$ cd /usr/local/elkapp/elasticsearch-node1/config/

[elk@es config]$ sudo mv elasticsearch.yml{,.bak}

[elk@es config]$ sudo vim elasticsearch.yml

cluster.name: my-es

node.name: es-node1

node.data: true

bootstrap.memory_lock: true

path.data: /usr/local/elkdata/es/data

path.logs: /usr/local/elkdata/es/logs

network.host: 192.168.200.130

http.port: 9201 # changed port

transport.tcp.port: 9301 # changed port

discovery.seed_hosts: ["192.168.200.130:9300","192.168.200.130:9301","192.168.200.130:9302"] # ip:port

cluster.initial_master_nodes: ["192.168.200.130:9300"] # ip:port

node.max_local_storage_nodes: 3

discovery.zen.minimum_master_nodes: 3

Configuring data node 2

[elk@es config]$ cd /usr/local/elkapp/elasticsearch-node2/config/

[elk@es config]$ sudo mv elasticsearch.yml{,.bak}

[elk@es config]$ sudo vim elasticsearch.yml

cluster.name: my-es

node.name: es-node2

node.data: true

bootstrap.memory_lock: true

path.data: /usr/local/elkdata/es/data

path.logs: /usr/local/elkdata/es/logs

network.host: 192.168.200.130

http.port: 9202

transport.tcp.port: 9302

discovery.seed_hosts: ["192.168.200.130:9300","192.168.200.130:9301","192.168.200.130:9302"]

cluster.initial_master_nodes: ["192.168.200.130:9300"]

node.max_local_storage_nodes: 3

discovery.zen.minimum_master_nodes: 3
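
The two data-node files differ only in the node name and the two ports, so they can be generated rather than typed by hand. A rough sketch under that assumption; `render_es_node_config` is a hypothetical helper, and the ignored `discovery.zen.minimum_master_nodes` line is left out:

```shell
#!/bin/sh
# Print an elasticsearch.yml for one data instance on the shared host.
# Arguments: node name, HTTP port, transport port.
render_es_node_config() {
    node_name=$1
    http_port=$2
    transport_port=$3
    cat <<EOF
cluster.name: my-es
node.name: ${node_name}
node.data: true
bootstrap.memory_lock: true
path.data: /usr/local/elkdata/es/data
path.logs: /usr/local/elkdata/es/logs
network.host: 192.168.200.130
http.port: ${http_port}
transport.tcp.port: ${transport_port}
discovery.seed_hosts: ["192.168.200.130:9300","192.168.200.130:9301","192.168.200.130:9302"]
cluster.initial_master_nodes: ["192.168.200.130:9300"]
node.max_local_storage_nodes: 3
EOF
}

# Example: render_es_node_config es-node1 9201 9301
```

Redirecting the output into each instance's `config/elasticsearch.yml` keeps the three files from drifting apart through copy-and-paste typos.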

Starting ES on boot

Instance 1

[elk@es ~]$ sudo vim /etc/systemd/system/elasticsearch.service

[Unit]

Description=elasticsearch

[Service]

User=elk

Group=elk

LimitMEMLOCK=infinity

LimitNOFILE=100000

LimitNPROC=100000

ExecStart=/usr/local/elkapp/elasticsearch/bin/elasticsearch

[Install]

WantedBy=multi-user.target

Instance 2

[elk@es ~]$ sudo cp /etc/systemd/system/elasticsearch.service /etc/systemd/system/elasticsearch-node1.service

[elk@es ~]$ sudo vim /etc/systemd/system/elasticsearch-node1.service

[Unit]

Description=elasticsearch

[Service]

User=elk

Group=elk

LimitMEMLOCK=infinity

LimitNOFILE=100000

LimitNPROC=100000

ExecStart=/usr/local/elkapp/elasticsearch-node1/bin/elasticsearch

[Install]

WantedBy=multi-user.target

Instance 3

[elk@es ~]$ sudo cp /etc/systemd/system/elasticsearch.service /etc/systemd/system/elasticsearch-node2.service

[elk@es ~]$ sudo vim /etc/systemd/system/elasticsearch-node2.service

[Unit]

Description=elasticsearch

[Service]

User=elk

Group=elk

LimitMEMLOCK=infinity

LimitNOFILE=100000

LimitNPROC=100000

ExecStart=/usr/local/elkapp/elasticsearch-node2/bin/elasticsearch

[Install]

WantedBy=multi-user.target

Reload systemd, then start the instances and enable them at boot

[elk@es ~]$ sudo systemctl daemon-reload

[elk@es ~]$ sudo systemctl start elasticsearch.service

[elk@es ~]$ sudo systemctl enable elasticsearch.service

[elk@es ~]$ sudo systemctl start elasticsearch-node1.service

[elk@es ~]$ sudo systemctl enable elasticsearch-node1.service

[elk@es ~]$ sudo systemctl start elasticsearch-node2.service

[elk@es ~]$ sudo systemctl enable elasticsearch-node2.service

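Testing the cluster

Check the listening ports and processes (the ports take a while to come up)

Test from a browser

View the state of a single node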

http://192.168.200.130:9200

name "es-master"

cluster_name "my-es"

cluster_uuid "6hoy9bQyTgKxKEIKRaD26Q"

version

number "7.8.0"

build_flavor "default"

build_type "tar"

build_hash "757314695644ea9a1dc2fecd26d1a43856725e65"

build_date "2020-06-14T19:35:50.234439Z"

build_snapshot false

lucene_version "8.5.1"

minimum_wire_compatibility_version "6.8.0"

minimum_index_compatibility_version "6.0.0-beta1"

tagline "You Know, for Search"

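Check cluster health

http://192.168.200.130:9200/_cluster/health

"cluster_name":"my-es",

"status":"green", # cluster status light. green: all shards allocated; yellow: some replicas unassigned; red: some primaries unassigned

"timed_out":false,

"number_of_nodes":3,

"number_of_data_nodes":3, # number of data nodes

"active_primary_shards":0, # number of active primary shards

"active_shards":0, # number of active shards (primaries and replicas)

"relocating_shards":0, # shards currently being relocated, which happens when nodes are added or removed

"initializing_shards":0, # shards being initialized; seen briefly after index creation or startup

"unassigned_shards":0, # shards not allocated, typically replicas that exist in the metadata but have no node to live on

"delayed_unassigned_shards":0, # shards whose allocation is being delayed

"number_of_pending_tasks":0, # tasks waiting to be executed

"number_of_in_flight_fetch":0, # shard fetches currently in flight

"task_max_waiting_in_queue_millis":0, # longest time a task has waited in the queue (milliseconds)

"active_shards_percent_as_number":100.0 # percentage of shards that are active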

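The status field is the one worth checking from scripts. A minimal sketch that pulls it out of the health response with plain sed, assuming the JSON shape shown above; `health_status` is a made-up helper name:

```shell
#!/bin/sh
# Read a _cluster/health JSON document on stdin and print its "status" value.
# Plain text extraction; assumes the field appears once, as in the output above.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Example against the first instance from this walkthrough:
#   curl -s http://192.168.200.130:9200/_cluster/health | health_status
```

A JSON-aware tool would be more robust if one is installed; sed is used here only to avoid extra dependencies.
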
Installing the es-head plugin

Install nodejs

[elk@es ~]$ curl --silent --location https://rpm.nodesource.com/setup_12.x | sudo bash

[elk@es ~]$ sudo yum install -y nodejs

Verify the installation

[root@es ~]# node -v

v12.18.2

[root@es ~]# npm -v

6.14.5

Point npm at the Taobao (Alibaba) registry mirror

[root@es ~]# npm install --registry=https://registry.npm.taobao.org   # one-off use

Download and install elasticsearch-head

[root@es ~]# tar -xf elasticsearch-head-npm-install.tar.gz

[root@es ~]# cd elasticsearch-head

[root@es elasticsearch-head]# npm install --registry=https://registry.npm.taobao.org

[root@es elasticsearch-head]# npm run start &

[1] 2028

[root@es elasticsearch-head]#

> elasticsearch-head@0.0.0 start /root/elasticsearch-head

> grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

Verify in a browser (es-head listens on port 9100)

Installing logstash

Create the required directories

[elk@logstash ~]$ sudo mkdir /usr/local/elkapp && sudo mkdir -p /usr/local/elkdata/logstash/{data,logs} && sudo chown -R elk:elk /usr/local/elkapp && sudo chown -R elk:elk /usr/local/elkdata

Install Logstash

[root@logstash ~]# tar -xf logstash-7.8.0.tar.gz -C /usr/local/elkapp

[elk@logstash ~]$ cd /usr/local/elkapp/

[elk@logstash elkapp]$ ln -s logstash-7.8.0/ logstash

Configure Logstash

[elk@logstash ~]$ sudo vim /usr/local/elkapp/logstash-7.8.0/config/logstash.yml

# Add the following

path.data: /usr/local/elkdata/logstash/data

path.logs: /usr/local/elkdata/logstash/logs

Configuring input and output

[elk@logstash config]$ cat input-output.conf

input {

  syslog {

    type => "system-syslog"

    port => 514

    host => "192.168.200.131"

  }

}

filter {

}

output {

  elasticsearch {

    hosts => ["192.168.200.130:9200","192.168.200.130:9201","192.168.200.130:9202"]

    index => "logstash-%{type}-%{+YYYY.MM.dd}"

    document_type => "%{type}"

  }

  stdout {

  }

}

Configuring pipelines.yml

[elk@logstash ~]$ sudo cp /usr/local/elkapp/logstash/config/pipelines.yml{,.bak}

[elk@logstash ~]$ sudo vim /usr/local/elkapp/logstash/config/pipelines.yml

Contents:

- pipeline.id: id1

  pipeline.workers: 1

  path.config: "/usr/local/elkapp/logstash/config/input-output.conf"

Starting Logstash on boot

[elk@logstash ~]$ sudo vim /etc/systemd/system/logstash.service

[Unit]

Description=logstash

[Service]

User=elk

Group=elk

LimitMEMLOCK=infinity

LimitNOFILE=100000

LimitNPROC=100000

ExecStart=/usr/local/elkapp/logstash/bin/logstash

[Install]

WantedBy=multi-user.target

[elk@logstash ~]$ sudo systemctl daemon-reload

[elk@logstash ~]$ sudo systemctl start logstash

[elk@logstash ~]$ sudo systemctl enable logstash

Create a pipeline configuration that receives events from filebeat

[elk@logstash config]$ sudo vim rsyslog.conf

input {

  beats {

    port => 5044

  }

}

output {

  elasticsearch {

    hosts => ["192.168.200.130:9200","192.168.200.130:9201","192.168.200.130:9202"]

    index => "logstash-%{type}-%{+YYYY.MM.dd}"

  }

  stdout {

  }

}

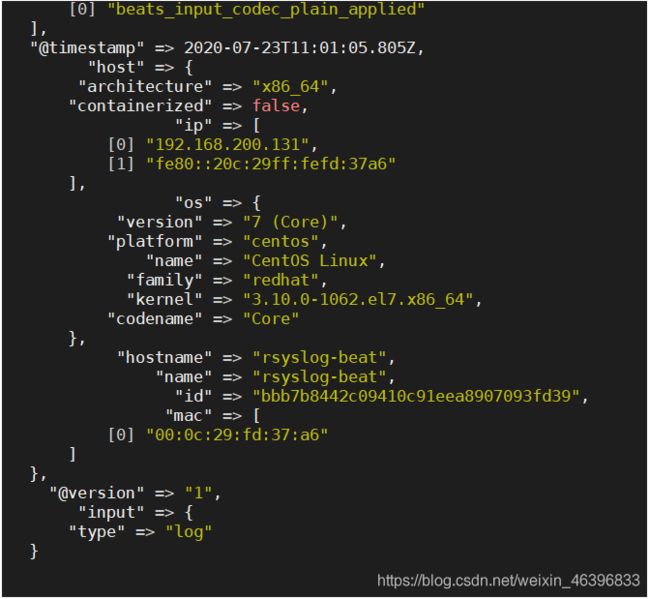

Testing

Start the filebeat and logstash services

[root@rsyslog-beat ~]# systemctl start filebeat

[root@logstash ~]# cd /usr/local/elkapp/logstash

[root@logstash logstash]# ./bin/logstash -f config/rsyslog.conf

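Open the es-head UI: the index has been created and contains data

Note: if you skip filebeat and have rsyslog send data straight to logstash, logstash must run as root. rsyslog forwards to port 514 by default, and binding a port below 1024 requires root, so an unprivileged logstash fails with an error. Alternatively, move the rsyslog traffic to a port above 1024.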
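
The rule behind that note can be written as a one-line check. A tiny sketch, assuming the Linux default where ports below 1024 are privileged; `is_privileged_port` is an illustrative name:

```shell
#!/bin/sh
# Succeed when binding the given port normally requires root
# (ports below 1024 are privileged on Linux by default).
is_privileged_port() {
    [ "$1" -lt 1024 ]
}

for port in 514 5044; do
    if is_privileged_port "$port"; then
        echo "$port: needs root (or pick a higher port)"
    else
        echo "$port: usable by an unprivileged user such as elk"
    fi
done
```

This is why the beats input on 5044 works under the elk service account while the syslog input on 514 does not.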

Installing and configuring kibana

Install kibana

[elk@es ~]$ sudo mkdir -p /usr/local/elkdata/kibana/{data,logs} && sudo chown -R elk:elk /usr/local/elkapp && sudo chown -R elk:elk /usr/local/elkdata

[root@es ~]# tar -xf kibana-7.8.0-linux-x86_64.tar.gz -C /usr/local/elkapp/

[root@es ~]# cd /usr/local/elkapp/

[root@es elkapp]# ln -s kibana-7.8.0-linux-x86_64 kibana

[root@es elkapp]# chown -R elk:elk /usr/local/elkapp   # the service runs as elk

Configuration

[root@es ~]# vim /usr/local/elkapp/kibana/config/kibana.yml

server.port: 5601

server.host: "192.168.200.130"

elasticsearch.hosts: ["http://192.168.200.130:9200"]

Set up the systemd service

[root@es ~]# vim /etc/systemd/system/kibana.service

[Unit]

Description=kibana

[Service]

User=elk

Group=elk

LimitMEMLOCK=infinity

LimitNOFILE=100000

LimitNPROC=100000

ExecStart=/usr/local/elkapp/kibana/bin/kibana

[Install]

WantedBy=multi-user.target

[root@es ~]# systemctl daemon-reload

[root@es ~]# systemctl start kibana

Open kibana in a browser and finish the setup there