Kubernetes 1.5.2 installed via yum: authentication and authorization mechanisms, secure mode (complete guide)

https://www.sohu.com/a/316191121_701739

https://zhuanlan.zhihu.com/p/31046822

https://kubernetes.io/docs/reference/command-line-tools-reference/kube-controller-manager/

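This walkthrough uses Kubernetes 1.5.

Authentication-related flags:

• anonymous-auth: whether anonymous access is enabled; true or false. The default is true, which enables anonymous access.

• authentication-token-webhook: use the TokenReview API for token authentication.

• authentication-token-webhook-cache-ttl: how long responses from the webhook token authenticator are cached.

• client-ca-file: enables X.509 certificate authentication. When set, a request is accepted only if it presents a client certificate signed by one of the certificate authorities in the file this flag points to.

Authorization-related flags:

• authorization-mode: the kubelet authorization mode, either AlwaysAllow or Webhook. With Webhook, the SubjectAccessReview API is used for authorization.

• authorization-webhook-cache-authorized-ttl: how long "authorized" responses from the webhook authorizer are cached.

• authorization-webhook-cache-unauthorized-ttl: how long "unauthorized" responses from the webhook authorizer are cached.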

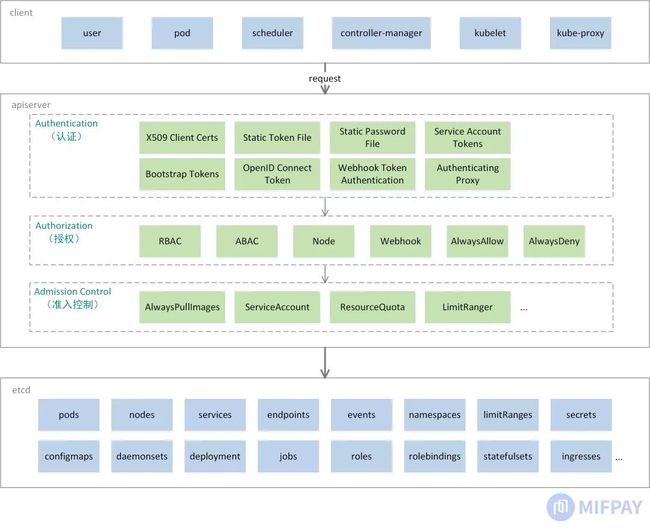

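Kubernetes has clear advantages as a backend platform; the Bluetooth gate-passing system and the holistic-recognition AI system from 咪付 (Mifu) both run on it. Keeping a Kubernetes platform stable depends heavily on security, and securing API access is an important part of that. This article explains how Kubernetes secures access to its API.

This article covers:

Kubernetes API security measures

How user identity is authenticated

How access to resources is authorized

The role of Kubernetes admission control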

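A Kubernetes platform consists of six components: apiserver, scheduler, controller-manager, kubelet, kube-proxy, and etcd. etcd is the Kubernetes datastore and generally must not be accessed directly in production. The platform exposes an HTTPS service interface through apiserver; the other components (scheduler, controller-manager, kubelet, kube-proxy) do not expose interactive interfaces. All operational management goes through the interfaces that apiserver provides, so Kubernetes API security as a whole comes down to controlling how users access that HTTPS interface.

Users reach Kubernetes API resources through the kubectl command-line client or by other means. Every API request passes through three checks: Authentication, Authorization, and Admission Control: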

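- Authentication verifies the user's identity. There are currently eight authentication methods; one or more can be enabled, and as soon as one of them succeeds the remaining ones are skipped. X509 Client Certs and Service Account Tokens are the two that are usually enabled.

- Authorization verifies that the user has permission to access the resource. There are currently six authorization modes; one or more can be enabled, and as soon as any enabled mode explicitly allows or denies the request according to its policy, that result is returned without consulting the others. RBAC and Node authorization are usually enabled.

- Admission Control mutates and validates API resource objects. It holds a list of plugins; every request passes through each admission plugin in the list for mutation and validation, and if any plugin fails validation the request is rejected.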

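When a user calls the Kubernetes API, the apiserver authentication and authorization modules extract the user's identity, the requested resource path, and the business object parameters from the HTTPS request. The identity is used to confirm that the user is legitimate, the resource path is used to decide whether the user may perform the operation, and the object parameters are mutated or validated by admission control against business requirements.

- When a request arrives, apiserver reads the user information from the request's certificate and headers: Name (user name), UID (unique user identifier), Groups (user groups), and Extra (additional user information). After authentication succeeds, the user information is passed downstream through the Context.

- The authorization module extracts the user information from the Context and the resource attributes from the request Method and URI. It matches the resource attributes against the authorization policy, or asks an external service, to decide whether access is granted.

- Admission control receives the Body submitted with the HTTPS request together with the user and resource information, and mutates or validates them according to what each admission plugin does.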
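The three stages described above can be sketched as a small pipeline. This is an illustrative model only, not apiserver code: the `UserInfo` class, the `handle` function, and the string results are hypothetical names chosen for the sketch. It shows authenticators being tried in order until one succeeds, with an optional anonymous fallback, followed by authorization and admission.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class UserInfo:
    """Identity attached to a request after authentication (hypothetical model)."""
    name: str
    uid: str = ""
    groups: List[str] = field(default_factory=list)


# An authenticator returns UserInfo on success, or None if it cannot identify the caller.
Authenticator = Callable[[dict], Optional[UserInfo]]


def authenticate(headers: dict, authenticators: List[Authenticator],
                 allow_anonymous: bool) -> Optional[UserInfo]:
    # Try each enabled method in order; the first success wins.
    for auth in authenticators:
        user = auth(headers)
        if user is not None:
            return user
    # No method recognised the caller: fall back to the anonymous identity if enabled.
    if allow_anonymous:
        return UserInfo("system:anonymous", groups=["system:unauthenticated"])
    return None


def handle(headers: dict, authenticators: List[Authenticator],
           authorize: Callable[[UserInfo], bool],
           admit: Callable[[UserInfo], bool],
           allow_anonymous: bool = False) -> str:
    user = authenticate(headers, authenticators, allow_anonymous)
    if user is None:
        return "unauthenticated"   # the server would answer 401 Unauthorized
    if not authorize(user):
        return "forbidden"
    if not admit(user):
        return "rejected"
    return "ok"


# A toy token authenticator that only knows one token.
def token_auth(headers: dict) -> Optional[UserInfo]:
    if headers.get("Authorization") == "Bearer t":
        return UserInfo("admin", "1")
    return None


print(handle({"Authorization": "Bearer t"}, [token_auth], lambda u: True, lambda u: True))
```

With `allow_anonymous=True`, a request without credentials still gets an identity, so whether it succeeds is then decided by the authorizer.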

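II. Authentication

By default anonymous-auth is true, so anonymous authentication is allowed. Requests to the kubelet API are then made anonymously, using the default anonymous user name "system:anonymous" and the default group "system:unauthenticated".

Anonymous requests can be disabled by starting the kubelet with "--anonymous-auth=false". An unauthenticated request then receives "401 Unauthorized".

X.509 certificate authentication can be used (X.509 is the most common format for signed certificates). Start the kubelet with "--client-ca-file" pointing at the CA file used to verify client certificates, and start the API server with "--kubelet-client-certificate" and "--kubelet-client-key". A user in a certificate can belong to several groups, as in this example:

openssl req -new -key jbeda.pem -out jbeda-csr.pem -subj "/CN=jbeda/O=app1/O=app2"

This creates a certificate signing request (CSR) for the user jbeda, who belongs to two groups, app1 and app2.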
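To make the CN/O mapping concrete, here is a minimal sketch that splits an OpenSSL-style subject string into a user name and group list. The helper name `parse_subject` is hypothetical, and the parsing is deliberately naive: it assumes no escaped "/" characters inside values.

```python
from typing import List, Optional, Tuple


def parse_subject(subject: str) -> Tuple[Optional[str], List[str]]:
    """Split a subject such as "/CN=jbeda/O=app1/O=app2" into (user, groups)."""
    user = None
    groups = []
    for part in subject.strip("/").split("/"):
        key, _, value = part.partition("=")
        if key == "CN":
            user = value          # Common Name becomes the user name
        elif key == "O":
            groups.append(value)  # each Organization entry becomes a group
    return user, groups


print(parse_subject("/CN=jbeda/O=app1/O=app2"))  # ('jbeda', ['app1', 'app2'])
```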

Token authentication can also be enabled. Start the API server with "--runtime-config=authentication.k8s.io/v1beta1=true" to enable the authentication.k8s.io/v1beta1 API group, and start the kubelet with the three flags "--authentication-token-webhook", "--kubeconfig", and "--require-kubeconfig".

kubeconfig: path to the kubelet configuration file, which tells the kubelet where the API server is (the default path is not given here).

require-kubeconfig: a boolean flag. When true, the kubelet uses the file given by kubeconfig to locate the API server; when false, it uses the separate kubelet flag "api-servers" instead.

================================================================================================

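Authentication is about who sent the request: the user must prove their identity in some way. A Kubernetes cluster has two kinds of users: normal users and service accounts. Normal users are not managed or stored by Kubernetes; service accounts are stored by Kubernetes in etcd and can be managed through the apiserver API.

As of version 1.14, eight authentication methods are supported, falling into four categories by mechanism: token, certificate, proxy, and password. Several methods can be enabled at once; each authentication module then tries in turn to verify the user, and the first success authenticates the request. If anonymous access is enabled, a request that no module rejects is treated as anonymous and is tagged with the user name system:anonymous and the group system:unauthenticated. From version 1.6 on, ABAC and RBAC authorization require explicit authorization of the system:anonymous user or the system:unauthenticated group.

When several authentication methods are enabled, they are checked in the following order: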

Static Password Files

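Static Password File authentication is enabled with the apiserver flag --basic-auth-file=SOMEFILE. Passwords never expire, and apiserver must be restarted for a password change to take effect.

The static password file is a CSV file with at least three columns: password, user name, user ID. From Kubernetes 1.6 on, an optional fourth column holds the user's groups; if there is more than one group, the column must be double-quoted. For example:

password,name,uid,"group1,group2,group3"

When an HTTPS client connects, apiserver extracts the user name and password from the HTTPS header, for example Authorization: Basic ENCODED, where ENCODED is the Base64 encoding of USER:PASSWORD.

The user name and password from the request are compared against each entry in the static password file. A match means authentication succeeds; otherwise it fails. On success, the matching user's information from the CSV is used for the later authorization steps.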
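The lookup described above can be sketched in a few lines. This is an illustration, not the apiserver implementation; `load_users` and `check_basic` are hypothetical helper names, and the sample row is the one used later in this article (123456aA,admin,1).

```python
import base64
import csv
import io


def load_users(csv_text):
    """Map user name -> (password, uid, groups) from static password file content."""
    users = {}
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 3:
            continue  # need at least password, name, uid
        password, name, uid = row[0], row[1], row[2]
        groups = row[3].split(",") if len(row) > 3 and row[3] else []
        users[name] = (password, uid, groups)
    return users


def check_basic(header_value, users):
    """Return (name, uid, groups) if the Basic credentials match, else None."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme != "Basic":
        return None
    # ENCODED is Base64 of USER:PASSWORD
    name, sep, password = base64.b64decode(encoded).decode().partition(":")
    if not sep:
        return None
    entry = users.get(name)
    if entry is None or entry[0] != password:
        return None
    return name, entry[1], entry[2]


users = load_users("123456aA,admin,1\n")
header = "Basic " + base64.b64encode(b"admin:123456aA").decode()
print(header)                      # Basic YWRtaW46MTIzNDU2YUE=
print(check_basic(header, users))  # ('admin', '1', [])
```

The printed header value is the same one that curl sends in the verbose output shown in this section.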

root@localhost:/etc/kubernetes # ls

api apiserver config controller-manager kubelet proxy recycler.yaml scheduler

root@localhost:/etc/kubernetes # pwd

/etc/kubernetes

root@localhost:/etc/kubernetes # ls api

staticpassword-auth.csv statictoken-auth.csv

root@localhost:/etc/kubernetes # cat api/staticpassword-auth.csv

123456aA,admin,1

root@localhost:/etc/kubernetes # cat apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0 --bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080 --secure-port=6443"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://10.10.3.127:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Add your own!

# username+password password,name,uid[123456aA,admin,1]

KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv"

#token-auth [token,admin,1]

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv"

Restart the service:

systemctl restart kube-apiserver.service

Open https://10.10.3.127:6443/ in a browser; it prompts for a user name and password (admin/123456aA). With curl:

curl --insecure -i -v -u admin:123456aA https://10.10.3.127:6443

--insecure is used because there is no CA certificate yet; the CA-based setup is covered further below.

root@localhost:/etc/kubernetes # curl --insecure -i -v -u admin:123456aA https://10.10.3.127:6443

* About to connect() to 10.10.3.127 port 6443 (#0)

* Trying 10.10.3.127...

* Connected to 10.10.3.127 (10.10.3.127) port 6443 (#0)

* Initializing NSS with certpath: sql:/etc/pki/nssdb

* skipping SSL peer certificate verification

* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

* Server certificate:

* subject: CN=10.10.3.127@1597287437

* start date: Aug 13 02:57:17 2020 GMT

* expire date: Aug 13 02:57:17 2021 GMT

* common name: 10.10.3.127@1597287437

* issuer: CN=10.10.3.127@1597287437

* Server auth using Basic with user 'admin'

> GET / HTTP/1.1

> Authorization: Basic YWRtaW46MTIzNDU2YUE=

> User-Agent: curl/7.29.0

> Host: 10.10.3.127:6443

> Accept: */*

>

< HTTP/1.1 200 OK

HTTP/1.1 200 OK

< Content-Type: application/json

Content-Type: application/json

< Date: Tue, 18 Aug 2020 07:55:39 GMT

Date: Tue, 18 Aug 2020 07:55:39 GMT

< Content-Length: 988

Content-Length: 988

<

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/apps",

"/apis/apps/v1beta1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v2alpha1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1alpha1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1alpha1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/ping",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/extensions/third-party-resources",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/logs",

"/metrics",

"/swaggerapi/",

"/ui/",

"/version"

]

* Connection #0 to host 10.10.3.127 left intact

}

Passing the header explicitly also works:

curl --insecure -i -v -H 'Authorization: Basic YWRtaW46MTIzNDU2YUE=' 'https://10.10.3.127:6443'

kubectl also talks to the API server, so any machine that has kubectl can use the configuration below. It was generated with kubectl config; see https://blog.csdn.net/Michaelwubo/article/details/108061018

[root@localhost kubernetes]# cat ~/.kube/config
apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: https://10.10.3.127:6443
  name: k8s
contexts:
- context:
    cluster: k8s
    namespace: kube-system
    user: admin-credentials
  name: ctx-admin
current-context: ctx-admin
kind: Config
preferences: {}
users:
- name: admin-credentials
  user:
    password: 123456aA
    username: admin

Static Token File

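Static Token File authentication is enabled with the apiserver flag --token-auth-file=SOMEFILE. Tokens are valid indefinitely, and apiserver must be restarted for changes to the token list to take effect.

SOMEFILE is a CSV file with at least three columns: token, user name, and user UID, followed by an optional groups column. Each line describes one user. For example:

token,name,uid,"group1,group2,group3"

When an HTTPS client connects, apiserver extracts the identity token from the HTTPS header. The token must be a character sequence, sent in the header like this:

Authorization: Bearer 31ada4fd-adec-460c-809a-9e56ceb75269

The token from the request is compared against each entry in the static token file. A match means authentication succeeds; otherwise it fails. On success, the matching user's information is used for the later authorization steps.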

root@localhost:/etc/kubernetes # ls

api apiserver config controller-manager kubelet proxy recycler.yaml scheduler

root@localhost:/etc/kubernetes # cat api/statictoken-auth.csv

5c6777cc03153121f70bdd8d83c731bf,admin,1

root@localhost:/etc/kubernetes # cat apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0 --bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080 --secure-port=6443"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://10.10.3.127:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Add your own!

# username+password password,name,uid[123456aA,admin,1]

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv"

#token-auth [token,admin,1]

KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv"

Restart the service:

systemctl restart kube-apiserver.service

Access test

--insecure (or -k) is used because there is no X.509 CA certificate yet.

root@localhost:/etc/kubernetes # curl --insecure -v -i -H "Accept:application/json" -H "Authorization: Bearer 5c6777cc03153121f70bdd8d83c731bf" 'https://10.10.3.127:6443'

* About to connect() to 10.10.3.127 port 6443 (#0)

* Trying 10.10.3.127...

* Connected to 10.10.3.127 (10.10.3.127) port 6443 (#0)

* Initializing NSS with certpath: sql:/etc/pki/nssdb

* skipping SSL peer certificate verification

* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

* Server certificate:

* subject: CN=10.10.3.127@1597287437

* start date: Aug 13 02:57:17 2020 GMT

* expire date: Aug 13 02:57:17 2021 GMT

* common name: 10.10.3.127@1597287437

* issuer: CN=10.10.3.127@1597287437

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: 10.10.3.127:6443

> Accept:application/json

> Authorization: Bearer abcd1234

>

< HTTP/1.1 200 OK

HTTP/1.1 200 OK

< Content-Type: application/json

Content-Type: application/json

< Date: Tue, 18 Aug 2020 08:03:16 GMT

Date: Tue, 18 Aug 2020 08:03:16 GMT

< Content-Length: 988

Content-Length: 988

<

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/apps",

"/apis/apps/v1beta1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v2alpha1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1alpha1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1alpha1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/ping",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/extensions/third-party-resources",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/logs",

"/metrics",

"/swaggerapi/",

"/ui/",

"/version"

]

* Connection #0 to host 10.10.3.127 left intact

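}

kubectl also talks to the API server, so any machine that has kubectl can use the configuration below.

This still skips TLS verification (the equivalent of --insecure) because there is no CA certificate yet.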

https://www.cnblogs.com/tianshifu/p/7841007.html

root@localhost:~/.kube # kubectl config set-cluster k8s --server=https://10.10.3.127:6443 --insecure-skip-tls-verify=true

Cluster "k8s" set.

root@localhost:~/.kube # kubectl config set-credentials admin --token=abcd1234

A token can be any custom string such as abcd1234, or it can be generated randomly:

root@localhost:~/.kube # head -c 16 /dev/urandom | od -An -t x | tr -d ' '

5c6777cc03153121f70bdd8d83c731bf
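As an alternative to the shell pipeline, the same kind of token (16 random bytes rendered as 32 hexadecimal characters) can be produced with Python's standard library. This is only a sketch; the function name `new_token` is hypothetical, and the admin,1 columns mirror the CSV row used in this article.

```python
import secrets


def new_token(num_bytes: int = 16) -> str:
    """Return num_bytes of cryptographically strong randomness as lowercase hex."""
    return secrets.token_hex(num_bytes)


token = new_token()
# A line in the token,name,uid layout expected by --token-auth-file
print(f"{token},admin,1")
```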

root@localhost:~/.kube # kubectl config set-credentials admin --token=5c6777cc03153121f70bdd8d83c731bf

User "admin" set.

root@localhost:~/.kube # kubectl config set-context admin@k8s --cluster=k8s --user=admin --namespace=wubo

Context "admin@k8s" set.

root@localhost:~/.kube # kubectl config use-context admin@k8s

Switched to context "admin@k8s".

kube-apiserver settings

Add the token file on the kube-apiserver side:

$ cat > /etc/kubernetes/api/statictoken-auth.csv<

Copy the config to any machine that has kubectl.

[root@localhost kubernetes]# cat ~/.kube/config
apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: https://10.10.3.127:6443
  name: k8s
contexts:
- context:
    cluster: k8s
    user: admin
  name: admin@k8s
current-context: admin@k8s
kind: Config
preferences: {}
users:
- name: admin
  user:
    token: 5c6777cc03153121f70bdd8d83c731bf

[root@localhost kubernetes]# kubectl get nodes

NAME STATUS AGE

10.10.3.119 Ready 5d

10.10.3.183 NotReady 5d

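CA certificate authentication:

Certificate authentication requires certificates first. They can be requested from a public CA or self-signed. Most Kubernetes deployments run on internal networks, so self-signed certificates are the more common choice.

The steps to generate the certificates are:

1. openssl must be installed on your Linux system (most distributions ship it). Use openssl to generate the root certificate (cacert). Some background first: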

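HTTPS is HTTP running over SSL/TLS.

How HTTPS, SSL, TLS, and OpenSSL relate to each other:

- SSL (Secure Socket Layer) is a security protocol that establishes a secure channel between client and server;

- TLS (Transport Layer Security) provides confidentiality and data integrity between two applications;

- SSL is the predecessor of TLS;

- OpenSSL is an open-source implementation of the TLS/SSL protocols, providing a development library and command-line tools;

- HTTPS is the encrypted version of HTTP; the underlying encryption protocol is TLS.

In short: SSL/TLS are protocols, and OpenSSL is an implementation of them.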

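Configuring an SSL request with SubjectAltName in OpenSSL

A single certificate can protect many domain names. SubjectAltName is an X509 Version 3 (RFC 2459) extension that lets an SSL certificate list several names it is valid for.

SubjectAltName can contain email addresses, IP addresses, DNS host names (including wildcard names), and more. This feature is called SubjectAlternativeName (SAN for short).

Generating the certificate request file

A plain SSL certificate signing request (CSR) needs very little from openssl.

Because we may want to add one or two SANs to the CSR, the openssl configuration file needs a few additions: tell openssl to create a CSR that includes X509 V3 extensions, and tell it to include the list of subject alternative names in the CSR.

Create an openssl configuration file (openssl.cnf) and enable subject alternative names:

Find the req section. It tells openssl how to handle certificate requests (CSRs).

The req section should contain a line starting with req_extensions, like this: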

[req]

distinguished_name = req_distinguished_name

req_extensions = v3_req

This setting tells openssl to include the v3_req section in the CSR.

Now configure v3_req:

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = www.superred.com

DNS.2 = superred.com

IP.1 = 10.10.3.127

Note: the v3_req section can be placed anywhere in the file; it is applied to every CSR that is generated.

If you later need a CSR with different SANs, edit this configuration file and change the DNS.x list. Also make sure the line req_extensions = v3_req is uncommented.
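Since the DNS.x and IP.x entries must be renumbered whenever the SAN list changes, a tiny generator can help. The following is a sketch only; the function name `alt_names_section` is hypothetical, and the names passed in are the ones used in this article.

```python
def alt_names_section(dns_names, ip_addresses):
    """Render an [alt_names] section for openssl.cnf from plain lists."""
    lines = ["[alt_names]"]
    # OpenSSL expects numbered keys: DNS.1, DNS.2, ... and IP.1, IP.2, ...
    lines += [f"DNS.{i} = {name}" for i, name in enumerate(dns_names, start=1)]
    lines += [f"IP.{i} = {ip}" for i, ip in enumerate(ip_addresses, start=1)]
    return "\n".join(lines)


print(alt_names_section(["www.superred.com", "superred.com"], ["10.10.3.127"]))
```

The output can be pasted over the existing [alt_names] section before generating a new CSR.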

The complete openssl.cnf is as follows:

root@localhost:/etc/pki/k8s # cat openssl.cnf | grep -v ^# | grep -v ^$

HOME = .

RANDFILE = $ENV::HOME/.rnd

oid_section = new_oids

[ new_oids ]

tsa_policy1 = 1.2.3.4.1

tsa_policy2 = 1.2.3.4.5.6

tsa_policy3 = 1.2.3.4.5.7

[ ca ]

default_ca = CA_default # The default ca section

[ CA_default ]

dir = /etc/pki/CA # Where everything is kept

certs = $dir/certs # Where the issued certs are kept

crl_dir = $dir/crl # Where the issued crl are kept

database = $dir/index.txt # database index file.

# several ctificates with same subject.

new_certs_dir = $dir/newcerts # default place for new certs.

certificate = $dir/cacert.pem # The CA certificate

serial = $dir/serial # The current serial number

crlnumber = $dir/crlnumber # the current crl number

# must be commented out to leave a V1 CRL

crl = $dir/crl.pem # The current CRL

private_key = $dir/private/cakey.pem# The private key

RANDFILE = $dir/private/.rand # private random number file

x509_extensions = usr_cert # The extentions to add to the cert

name_opt = ca_default # Subject Name options

cert_opt = ca_default # Certificate field options

default_days = 365 # how long to certify for

default_crl_days= 30 # how long before next CRL

default_md = sha256 # use SHA-256 by default

preserve = no # keep passed DN ordering

policy = policy_match

[ policy_match ]

countryName = match

stateOrProvinceName = match

organizationName = match

organizationalUnitName = optional

commonName = supplied

emailAddress = optional

[ policy_anything ]

countryName = optional

stateOrProvinceName = optional

localityName = optional

organizationName = optional

organizationalUnitName = optional

commonName = supplied

emailAddress = optional

[ req ]

default_bits = 2048

default_md = sha256

default_keyfile = privkey.pem

distinguished_name = req_distinguished_name

attributes = req_attributes

x509_extensions = v3_ca # The extentions to add to the self signed cert

string_mask = utf8only

req_extensions = v3_req

[ req_distinguished_name ]

countryName = Country Name (2 letter code)

countryName_default = XX

countryName_min = 2

countryName_max = 2

stateOrProvinceName = State or Province Name (full name)

localityName = Locality Name (eg, city)

localityName_default = Default City

0.organizationName = Organization Name (eg, company)

0.organizationName_default = Default Company Ltd

organizationalUnitName = Organizational Unit Name (eg, section)

commonName = Common Name (eg, your name or your server\'s hostname)

commonName_max = 64

emailAddress = Email Address

emailAddress_max = 64

[ req_attributes ]

challengePassword = A challenge password

challengePassword_min = 4

challengePassword_max = 20

unstructuredName = An optional company name

[ usr_cert ]

basicConstraints=CA:FALSE

nsComment = "OpenSSL Generated Certificate"

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid,issuer

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = www.superred.com

DNS.2 = superred.com

IP.1 = 10.10.3.127

[ v3_ca ]

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid:always,issuer

basicConstraints = CA:true

[ crl_ext ]

authorityKeyIdentifier=keyid:always

[ proxy_cert_ext ]

basicConstraints=CA:FALSE

nsComment = "OpenSSL Generated Certificate"

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid,issuer

proxyCertInfo=critical,language:id-ppl-anyLanguage,pathlen:3,policy:foo

[ tsa ]

default_tsa = tsa_config1 # the default TSA section

[ tsa_config1 ]

dir = ./demoCA # TSA root directory

serial = $dir/tsaserial # The current serial number (mandatory)

crypto_device = builtin # OpenSSL engine to use for signing

signer_cert = $dir/tsacert.pem # The TSA signing certificate

# (optional)

certs = $dir/cacert.pem # Certificate chain to include in reply

# (optional)

signer_key = $dir/private/tsakey.pem # The TSA private key (optional)

default_policy = tsa_policy1 # Policy if request did not specify it

# (optional)

other_policies = tsa_policy2, tsa_policy3 # acceptable policies (optional)

digests = sha1, sha256, sha384, sha512 # Acceptable message digests (mandatory)

accuracy = secs:1, millisecs:500, microsecs:100 # (optional)

clock_precision_digits = 0 # number of digits after dot. (optional)

ordering = yes # Is ordering defined for timestamps?

# (optional, default: no)

tsa_name = yes # Must the TSA name be included in the reply?

# (optional, default: no)

ess_cert_id_chain = no # Must the ESS cert id chain be included?

# (optional, default: no)

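Generating the private key

Meaning of the fields in a digital certificate's Subject

- A typical digital certificate subject contains the following fields:

| Field | Value |
|---|---|
| Common Name | CN field. For an SSL certificate, usually the site's domain name; for a code-signing certificate, the name of the applying organization; for a client certificate, the applicant's name. |
| Organization Name | O field. For an SSL certificate, usually the name of the organization that owns the site; for a code-signing certificate, the name of the applying organization; for a client organization certificate, the name of the applicant's organization. |

- Location of the applying organization

| Field | Value |
|---|---|
| Locality (city) | L field |
| State/Province | S field |
| Country | C field; must be a country letter code, e.g. CN for China |

- Other fields

| Field | Value |
|---|---|
| Email | E field |
| Additional name fields | G field |
| Description | Description field |
| Phone number | Phone field; format: + country code, area code, number, e.g. +86 732 88888888 |
| Address | STREET field |
| Postal code | PostalCode field |
| Other displayed content | OU field |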

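8. One-way and mutual authentication

What are one-way and mutual SSL/TLS authentication?

One-way authentication means only one side verifies the other side's certificate.

Usually the client verifies the server. The client then needs ca.crt, and the server needs server.crt and server.key.

Mutual authentication means both sides verify each other: the server verifies every client, and the client verifies the server.

server needs server.key, server.crt, ca.crt

client needs client.key, client.crt, ca.crt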
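The difference between the two modes can be sketched with Python's standard ssl module. This is an illustration rather than anything Kubernetes-specific; the helper names `server_context` and `client_context` are hypothetical, and the file arguments correspond to the server.crt, server.key, client.crt, client.key, and ca.crt files listed above.

```python
import ssl


def server_context(mutual, certfile=None, keyfile=None, cafile=None):
    """Build a server-side TLS context; with mutual=True clients must present a certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)   # server.crt / server.key
    if mutual:
        ctx.verify_mode = ssl.CERT_REQUIRED      # demand and verify a client certificate
        if cafile:
            ctx.load_verify_locations(cafile)    # ca.crt used to check client certificates
    return ctx


def client_context(cafile=None, certfile=None, keyfile=None):
    """Build a client-side TLS context; it always verifies the server."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if cafile:
        ctx.load_verify_locations(cafile)        # ca.crt used to check the server certificate
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)   # client.crt / client.key, only for mutual TLS
    return ctx


print(server_context(mutual=False).verify_mode)  # VerifyMode.CERT_NONE
print(server_context(mutual=True).verify_mode)   # VerifyMode.CERT_REQUIRED
```

In one-way mode only the client needs ca.crt; in mutual mode both sides load it, which matches the file lists above.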

https://blog.csdn.net/u013066244/article/details/78725842/

https://blog.csdn.net/Michaelwubo/article/details/80594911?utm_source=blogxgwz9

https://www.cnblogs.com/tiny1987/p/12018080.html

https://www.jianshu.com/p/60d82b457174

https://blog.csdn.net/Michaelwubo/article/details/108103572

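The steps are as follows.

1. Generate the root (CA) private key:

openssl genrsa -out ca.key 2048

2. Generate the root certificate ca.crt from that private key:

openssl req -x509 -nodes -key ca.key -subj "/O=superred/OU=linux/CN=root" -days 5000 -out ca.crt -config openssl.cnf

3. Inspect the root certificate:

openssl x509 -text -noout -in ca.crt

# For a signing request such as ca.csr, use:

openssl req -text -noout -in ca.csr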

========================================

API server certificate

1. Create the API server private key:

openssl genrsa -out server.key 2048

2. Create the certificate signing request server.csr from the API server private key:

openssl req -new -key server.key -subj "/CN=kube-apiserver" -out server.csr -config openssl.cnf

3. Sign server.csr with the root certificate ca.crt and the root key ca.key to obtain server.crt:

openssl x509 -req -in server.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out server.crt -days 5000 -extfile openssl.cnf -extensions v3_req

===============================================

Client certificate

1. Create the client private key:

openssl genrsa -out client.key 2048

2. Create the certificate signing request client.csr from the client private key:

openssl req -new -key client.key -subj "/CN=client" -out client.csr -config openssl.cnf

3. Sign client.csr with the root certificate ca.crt and the root key ca.key to obtain client.crt:

openssl x509 -req -in client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out client.crt -days 5000 -extfile openssl.cnf -extensions v3_req

================================================

Certificates for other components such as kubelet and kube-proxy are created the same way.

kube-apiserver settings

root@localhost:/etc/kubernetes # cat apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

#KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0 --bind-address=0.0.0.0 --advertise-address=10.10.3.127"

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0 --bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080 --secure-port=6443"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://10.10.3.127:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Add your own!

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false"

# username+password password,name,uid[123456aA,admin,1]

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv"

#token-auth [token,admin,1]

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv"

#ca

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --client-ca-file=/etc/pki/k8s/ca.crt --tls-private-key-file=/etc/pki/k8s/server.key --tls-cert-file=/etc/pki/k8s/server.crt"

#all

KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv --client-ca-file=/etc/pki/k8s/ca.crt --tls-private-key-file=/etc/pki/k8s/server.key --tls-cert-file=/etc/pki/k8s/server.crt"

Restart the service:

systemctl restart kube-apiserver

Access test. Note that curl no longer uses --insecure.

Issuer root certificate + key + certificate:

root@localhost:/etc/pki/k8s # curl --cacert ./ca.crt --key ./server.key --cert ./server.crt https://www.superred.com:6443

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/apps",

"/apis/apps/v1beta1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v2alpha1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1alpha1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1alpha1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/ping",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/extensions/third-party-resources",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/logs",

"/metrics",

"/swaggerapi/",

"/ui/",

"/version"

]

}

Issuer root certificate + token:

root@localhost:/etc/pki/k8s # curl --cacert ./ca.crt -H 'Authorization: Bearer 5c6777cc03153121f70bdd8d83c731bf' https://www.superred.com:6443

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/apps",

"/apis/apps/v1beta1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v2alpha1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1alpha1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1alpha1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/ping",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/extensions/third-party-resources",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/logs",

"/metrics",

"/swaggerapi/",

"/ui/",

"/version"

]

}

Issuer root certificate + user name + password:

root@localhost:/etc/pki/k8s # curl -v -i --cacert ./ca.crt -u admin:123456aA https://www.superred.com:6443

* About to connect() to www.superred.com port 6443 (#0)

* Trying 10.10.3.127...

* Connected to www.superred.com (10.10.3.127) port 6443 (#0)

* Initializing NSS with certpath: sql:/etc/pki/nssdb

* CAfile: ./ca.crt

CApath: none

* NSS: client certificate not found (nickname not specified)

* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

* Server certificate:

* subject: CN=kube-apiserver

* start date: Aug 19 06:11:02 2020 GMT

* expire date: Apr 28 06:11:02 2034 GMT

* common name: kube-apiserver

* issuer: CN=root,OU=linux,O=superred

* Server auth using Basic with user 'admin'

> GET / HTTP/1.1

> Authorization: Basic YWRtaW46MTIzNDU2YUE=

> User-Agent: curl/7.29.0

> Host: www.superred.com:6443

> Accept: */*

>

< HTTP/1.1 200 OK

HTTP/1.1 200 OK

< Content-Type: application/json

Content-Type: application/json

< Date: Wed, 19 Aug 2020 08:10:01 GMT

Date: Wed, 19 Aug 2020 08:10:01 GMT

< Content-Length: 988

Content-Length: 988

<

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/apps",

"/apis/apps/v1beta1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v2alpha1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1alpha1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1alpha1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/ping",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/extensions/third-party-resources",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/logs",

"/metrics",

"/swaggerapi/",

"/ui/",

"/version"

]

* Connection #0 to host www.superred.com left intact

}

Or:

root@localhost:/etc/pki/k8s # curl -v -i --cacert ./ca.crt -H 'Authorization:Basic YWRtaW46MTIzNDU2YUE=' https://www.superred.com:6443

* About to connect() to www.superred.com port 6443 (#0)

* Trying 10.10.3.127...

* Connected to www.superred.com (10.10.3.127) port 6443 (#0)

* Initializing NSS with certpath: sql:/etc/pki/nssdb

* CAfile: ./ca.crt

CApath: none

* NSS: client certificate not found (nickname not specified)

* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

* Server certificate:

* subject: CN=kube-apiserver

* start date: Aug 19 06:11:02 2020 GMT

* expire date: Apr 28 06:11:02 2034 GMT

* common name: kube-apiserver

* issuer: CN=root,OU=linux,O=superred

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: www.superred.com:6443

> Accept: */*

> Authorization:Basic YWRtaW46MTIzNDU2YUE=

>

< HTTP/1.1 200 OK

HTTP/1.1 200 OK

< Content-Type: application/json

Content-Type: application/json

< Date: Wed, 19 Aug 2020 08:09:22 GMT

Date: Wed, 19 Aug 2020 08:09:22 GMT

< Content-Length: 988

Content-Length: 988

<

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/apps",

"/apis/apps/v1beta1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v2alpha1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1alpha1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1alpha1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/ping",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/extensions/third-party-resources",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/logs",

"/metrics",

"/swaggerapi/",

"/ui/",

"/version"

]

* Connection #0 to host www.superred.com left intact

}

Findings so far: browsers such as Chrome, Firefox, and IE only worked with issuer root certificate + user name + password. They did not work with issuer root certificate + token, or with issuer root certificate + key + certificate.

Postman works with both issuer root certificate + token and issuer root certificate + key + certificate.

kubectl access

kubectl config set-cluster k8s --server=https://www.superred.com:6443 --certificate-authority=/etc/pki/k8s/ca.crt --embed-certs=true

kubectl config set-credentials admin --client-certificate=/etc/pki/k8s/server.crt --client-key=/etc/pki/k8s/server.key --embed-certs=true

kubectl config --namespace=kube-system set-context admin@k8s --cluster=k8s --user=admin

kubectl config use-context admin@k8s

Copy the resulting config into ~/.kube/ on any machine that has kubectl.

root@localhost:~/.kube # kubectl get nodes

NAME STATUS AGE

10.10.3.119 Ready 6d

10.10.3.183 NotReady 6d

--embed-certs=true writes the key and certificate data directly into ~/.kube/config.

访问总结如下

curl --cacert ./ca.crt --key ./client.key --cert ./client.crt 'https://10.10.3.127:6443'

curl --cacert ./ca.crt -H "Authorization: Bearer 5c6777cc03153121f70bdd8d83c731bf" 'https://10.10.3.127:6443'

curl --cacert ./ca.crt -H "Authorization: Basic YWRtaW46MTIzNDU2YUE=" 'https://10.10.3.127:6443'

or

curl --cacert ./ca.crt -i -v -u admin:123456aA 'https://10.10.3.127:6443'

The authentication methods above are for external users (user accounts): browsers, kubectl, curl, and other clients accessing the API server.
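The Basic header value in the curl summary is simply the base64 encoding of user:password. A minimal sketch of that derivation, assuming a POSIX shell with the base64 utility available; the function name basic_auth_header is made up for illustration:

```shell
# Build the value of an HTTP Basic "Authorization" header from a user and password.
# basic_auth_header is a hypothetical helper name, not part of curl or kubectl.
basic_auth_header() {
    # printf avoids the trailing newline that echo would add before encoding
    printf 'Basic %s' "$(printf '%s:%s' "$1" "$2" | base64)"
}

# Same credentials as the curl -u example in this article
basic_auth_header admin 123456aA
echo
```

This is why the explicit Authorization: Basic header and curl -u admin:123456aA are interchangeable.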

========================================================================

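The following authentication method is for service accounts, which are internal users: the internal service itself is the user, that is, processes in a pod accessing the API server.

Service Account Token

For detailed usage, see: https://blog.csdn.net/Michaelwubo/article/details/108117339

apiserver configuration:

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

Service account is an automatically enabled authenticator that uses signed bearer tokens to verify requests. The plugin takes two optional flags:

--service-account-key-file=/etc/pki/k8s/server.key: a file containing a PEM-encoded key for signing bearer tokens. If unspecified, the API server's TLS private key is used, i.e. --tls-private-key-file=/etc/pki/k8s/server.key.

--service-account-lookup: if enabled, tokens that are deleted from the API are revoked.

cp server.key server.pem: copying the key file is enough here; other formats require converting the key with openssl.

Service accounts are usually created automatically by the API server and associated with pods running in the cluster through the ServiceAccount admission controller. Bearer tokens are mounted into pods at a well-known location and allow in-cluster processes to talk to the API server. An account can be explicitly associated with a pod using the serviceAccountName field of the PodSpec.

Note: serviceAccountName is usually omitted, because the association is made automatically.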

apiVersion: apps/v1beta2

kind: Deployment

metadata:

  name: nginx-deployment

  namespace: default

spec:

  replicas: 3

  template:

    metadata:

    # ...

    spec:

      containers:

      - name: nginx

        image: nginx:1.7.9

      serviceAccountName: bob-the-bot

Service account bearer tokens are also perfectly valid outside the cluster and can be used to create identities for long-running jobs that need to talk to Kubernetes. To create a service account manually, use the kubectl create serviceaccount (NAME) command. This creates a service account in the current namespace along with an associated secret.

$ kubectl create serviceaccount jenkins

serviceaccount "jenkins" created

$ kubectl get serviceaccounts jenkins -o yaml

apiVersion: v1

kind: ServiceAccount

metadata:

  # ...

secrets:

- name: jenkins-token-1yvwg

The created secret holds the public CA of the API server and a signed JSON Web Token (JWT).

$ kubectl get secret jenkins-token-1yvwg -o yaml

apiVersion: v1

data:

  ca.crt: (APISERVER'S CA BASE64 ENCODED)

  namespace: ZGVmYXVsdA==

  token: (BEARER TOKEN BASE64 ENCODED)

kind: Secret

metadata:

  # ...

type: kubernetes.io/service-account-token

Note: all values are base64 encoded, because secrets are always base64 encoded.

The signed JWT can be used as a bearer token to authenticate as the given service account. See above for how to include the bearer token in a request. Normally these secrets are mounted into pods for in-cluster access to the API server, but they can also be used from outside the cluster.

Service accounts authenticate with the username system:serviceaccount:(NAMESPACE):(SERVICEACCOUNT) and are assigned to the groups system:serviceaccounts and system:serviceaccounts:(NAMESPACE).

Warning: because service account tokens are stored in secrets, any user with read access to those secrets can authenticate as the service account. Be cautious when granting permissions to service accounts and read access to secrets.
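To make the encoding and naming rules concrete, here is a small sketch, assuming a POSIX shell with base64 available. It decodes the namespace field from the secret shown in this section and composes the resulting service account username; both function names are hypothetical helpers, not kubectl features:

```shell
# Decode one base64-encoded field of a service account secret.
decode_secret_field() {
    printf '%s' "$1" | base64 --decode
}

# Compose the username a service account authenticates as.
service_account_username() {
    # $1 = namespace, $2 = service account name
    printf 'system:serviceaccount:%s:%s' "$1" "$2"
}

# The namespace value comes from the jenkins secret shown in this article
ns=$(decode_secret_field 'ZGVmYXVsdA==')
service_account_username "$ns" jenkins
echo
```

The token field of a real secret can be decoded the same way before it is placed in an Authorization: Bearer header.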

=============================================

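Everything above only covers authentication of clients such as kubectl, curl, and browsers against the apiserver. What follows sets up authentication for all components. I divide clients into internal and external (my own classification):

Internal: kube-scheduler, kube-controller-manager, kubelet, kube-proxy

External: kubectl, curl, browsers (RESTful API)

Three nodes:

| ip | config | port | /etc/hosts |
| --- | --- | --- | --- |
| master:10.10.3.127 | etcd.conf, flanneld, apiserver, config, scheduler, controller-manager | 8080 (http, internal); 6443 (https, external) | 10.10.3.127 www.superred.com |
| work:10.10.3.119 | flanneld, config, kubelet, proxy | kubelet:10250 | 10.10.3.127 www.superred.com |
| work:10.10.3.183 | flanneld, config, kubelet, proxy | kubelet:10250 | 10.10.3.127 www.superred.com |

1 Generate the CA on any machine; I generated it on 10.10.3.127.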


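1.1 Base directories

kubelet-proxy: holds the key and certificate for kubelet and kube-proxy. They share one pair here, but they can also use separate ones.

service-account: holds the service account key. The apiserver key or another component's key can be used instead.

admin: holds the key and certificate of an administrator on any machine with kubectl or another client.

apiserver: holds the apiserver key and certificate.

cs-client: holds the key and certificate for controller-manager and scheduler. They share one pair here, but they can also use separate ones.

mkdir -p /etc/pki/k8s/{kubelet-proxy,service-account,admin,apiserver,cs-client}

chmod 755 /etc/pki/k8s/*

These directories must have 755 permissions; otherwise the components fail at startup with a permission error.

1.2 The openssl.cnf configuration file

root@www:/etc/pki/k8s # cat good/openssl.cnf.back | grep -v ^# | grep -v ^$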

HOME = .

RANDFILE = $ENV::HOME/.rnd

oid_section = new_oids

[ new_oids ]

tsa_policy1 = 1.2.3.4.1

tsa_policy2 = 1.2.3.4.5.6

tsa_policy3 = 1.2.3.4.5.7

[ ca ]

default_ca = CA_default # The default ca section

[ CA_default ]

dir = /etc/pki/CA # Where everything is kept

certs = $dir/certs # Where the issued certs are kept

crl_dir = $dir/crl # Where the issued crl are kept

database = $dir/index.txt # database index file.

# several ctificates with same subject.

new_certs_dir = $dir/newcerts # default place for new certs.

certificate = $dir/cacert.pem # The CA certificate

serial = $dir/serial # The current serial number

crlnumber = $dir/crlnumber # the current crl number

# must be commented out to leave a V1 CRL

crl = $dir/crl.pem # The current CRL

private_key = $dir/private/cakey.pem# The private key

RANDFILE = $dir/private/.rand # private random number file

x509_extensions = usr_cert # The extentions to add to the cert

name_opt = ca_default # Subject Name options

cert_opt = ca_default # Certificate field options

default_days = 365 # how long to certify for

default_crl_days= 30 # how long before next CRL

default_md = sha256 # use SHA-256 by default

preserve = no # keep passed DN ordering

policy = policy_match

[ policy_match ]

countryName = match

stateOrProvinceName = match

organizationName = match

organizationalUnitName = optional

commonName = supplied

emailAddress = optional

[ policy_anything ]

countryName = optional

stateOrProvinceName = optional

localityName = optional

organizationName = optional

organizationalUnitName = optional

commonName = supplied

emailAddress = optional

[ req ]

default_bits = 2048

default_md = sha256

default_keyfile = privkey.pem

distinguished_name = req_distinguished_name

attributes = req_attributes

x509_extensions = v3_ca # The extentions to add to the self signed cert

string_mask = utf8only

req_extensions = v3_req

[ req_distinguished_name ]

countryName = Country Name (2 letter code)

countryName_default = XX

countryName_min = 2

countryName_max = 2

stateOrProvinceName = State or Province Name (full name)

localityName = Locality Name (eg, city)

localityName_default = Default City

0.organizationName = Organization Name (eg, company)

0.organizationName_default = Default Company Ltd

organizationalUnitName = Organizational Unit Name (eg, section)

commonName = Common Name (eg, your name or your server\'s hostname)

commonName_max = 64

emailAddress = Email Address

emailAddress_max = 64

[ req_attributes ]

challengePassword = A challenge password

challengePassword_min = 4

challengePassword_max = 20

unstructuredName = An optional company name

[ usr_cert ]

basicConstraints=CA:FALSE

nsComment = "OpenSSL Generated Certificate"

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid,issuer

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = www.superred.com

DNS.2 = superred.com

DNS.3 = kubernetes

DNS.4 = kubernetes.default

DNS.5 = kubernetes.default.svc

DNS.6 = kubernetes.default.svc.superred.com

IP.1 = 10.10.3.127 #k8s master

IP.2 = 10.254.254.254 #k8s cluster_dns

IP.3 = 10.254.0.0 #service-cluster

IP.4 = 10.0.0.0 #docker

[ v3_ca ]

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid:always,issuer

basicConstraints = CA:true

[ crl_ext ]

authorityKeyIdentifier=keyid:always

[ proxy_cert_ext ]

basicConstraints=CA:FALSE

nsComment = "OpenSSL Generated Certificate"

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid,issuer

proxyCertInfo=critical,language:id-ppl-anyLanguage,pathlen:3,policy:foo

[ tsa ]

default_tsa = tsa_config1 # the default TSA section

[ tsa_config1 ]

dir = ./demoCA # TSA root directory

serial = $dir/tsaserial # The current serial number (mandatory)

crypto_device = builtin # OpenSSL engine to use for signing

signer_cert = $dir/tsacert.pem # The TSA signing certificate

# (optional)

certs = $dir/cacert.pem # Certificate chain to include in reply

# (optional)

signer_key = $dir/private/tsakey.pem # The TSA private key (optional)

default_policy = tsa_policy1 # Policy if request did not specify it

# (optional)

other_policies = tsa_policy2, tsa_policy3 # acceptable policies (optional)

digests = sha1, sha256, sha384, sha512 # Acceptable message digests (mandatory)

accuracy = secs:1, millisecs:500, microsecs:100 # (optional)

clock_precision_digits = 0 # number of digits after dot. (optional)

ordering = yes # Is ordering defined for timestamps?

# (optional, default: no)

tsa_name = yes # Must the TSA name be included in the reply?

# (optional, default: no)

ess_cert_id_chain = no # Must the ESS cert id chain be included?

# (optional, default: no)

The key file here contains both the public and the private key; either can be extracted from it with the openssl tool.
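As an illustration of that extraction, the sketch below pulls the public key out of an RSA private key with openssl rsa -pubout. It assumes openssl is installed and uses a throwaway key in a temporary directory rather than the real ca.key; extract_public_key is a hypothetical helper name:

```shell
# Write the public half of an RSA private key to a separate PEM file.
extract_public_key() {
    # $1 = private key file, $2 = output file for the public key
    openssl rsa -in "$1" -pubout -out "$2" 2>/dev/null
}

# Demo on a throwaway key in a temporary directory (skipped if openssl is absent)
if command -v openssl >/dev/null 2>&1; then
    demo_dir=$(mktemp -d)
    openssl genrsa -out "$demo_dir/demo.key" 2048 2>/dev/null
    extract_public_key "$demo_dir/demo.key" "$demo_dir/demo.pub"
    # Show the PEM header of the extracted public key
    head -n 1 "$demo_dir/demo.pub"
fi
```

The same call would apply to the keys generated in the following steps, for example extract_public_key ca.key ca.pub.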

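1.3 Generate the root certificate

Generate the CA certificate with the openssl tool. Change the subject and other parameters to your own values; CN is usually a domain name, hostname, or IP address.

1) Root key

umask 022;openssl genrsa -out ca.key 2048

2) Root certificate

umask 022;openssl req -x509 -nodes -key ca.key -subj "/O=root/OU=linux/CN=superred" -days 5000 -out ca.crt -config openssl.cnf

Note: in Kubernetes, CN is the user name and O is the user's group; OU is the organizational unit the certificate/key belongs to.

1.4 Generate the API server certificate and key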

1) The apiserver key

umask 022;openssl genrsa -out apiserver/apiserver.key 2048

2) The apiserver certificate signing request (CSR)

umask 022;openssl req -new -key apiserver/apiserver.key -subj "/CN=apiserver" -out apiserver/apiserver.csr -config openssl.cnf

3) Sign the CSR with the root certificate and root key to obtain the apiserver certificate

umask 022;openssl x509 -req -in apiserver/apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out apiserver/apiserver.crt -days 5000 -extfile openssl.cnf -extensions v3_req

1.5 Generate the certificate and key shared by the Controller Manager and Scheduler

1)umask 022;openssl genrsa -out cs-client/cs-client.key 2048

2)umask 022;openssl req -new -key cs-client/cs-client.key -subj "/CN=cs-client" -out cs-client/cs-client.csr -config openssl.cnf

3)umask 022;openssl x509 -req -in cs-client/cs-client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out cs-client/cs-client.crt -days 5000 -extfile openssl.cnf -extensions v3_req

1.6 Generate the client certificate and key used by kubelet and kube-proxy

1).umask 022;openssl genrsa -out kubelet-proxy/kubelet-proxy.key 2048

2).umask 022;openssl req -new -key kubelet-proxy/kubelet-proxy.key -subj "/CN=kubelet-proxy" -out kubelet-proxy/kubelet-proxy.csr -config openssl.cnf

3).umask 022;openssl x509 -req -in kubelet-proxy/kubelet-proxy.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kubelet-proxy/kubelet-proxy.crt -days 5000 -extfile openssl.cnf -extensions v3_req

1.7 Generate the service account key

umask 022;openssl genrsa -out service-account/service-account.key 2048

1.8 The administrator's certificate and key (other users are handled the same way)

1).umask 022;openssl genrsa -out admin/admin.key 2048

2).umask 022;openssl req -new -key admin/admin.key -subj "/CN=admin" -out admin/admin.csr -config openssl.cnf

3).umask 022;openssl x509 -req -in admin/admin.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out admin/admin.crt -days 5000 -extfile openssl.cnf -extensions v3_req

1.9 kubeconfig files: configuring certificates and related parameters

Administrator (admin) configuration

Create the cluster entry k8s; with --embed-certs=true the CA certificate is written into the default ~/.kube/config file.

1)kubectl config set-cluster k8s --server=https://www.superred.com:6443 --certificate-authority=/etc/pki/k8s/ca.crt --embed-certs=true

Create the user for cluster k8s.

2)kubectl config set-credentials admin --client-certificate=/etc/pki/k8s/admin/admin.crt --client-key=/etc/pki/k8s/admin/admin.key --embed-certs=true

Create the context admin@k8s, which ties the cluster and the user together and states which user operates on which cluster.

3)kubectl config --namespace=kube-system set-context admin@k8s --cluster=k8s --user=admin

Switch to this context as the current default. After that, kubectl get pod no longer needs -n namespace; it defaults to the namespace bound to the context, i.e. kube-system.

4)kubectl config use-context admin@k8s

Verify:

kubectl config current-context

1.10 Configuration for controller-manager and scheduler

--certificate-authority: path to the CA root certificate

1)kubectl config set-cluster k8s --server=https://www.superred.com:6443 --certificate-authority=/etc/pki/k8s/ca.crt --embed-certs=true --kubeconfig=/etc/pki/k8s/cs-client/cs-client.kubeconfig

--client-key: the client's key

--client-certificate: the client's certificate

2)kubectl config set-credentials cs-client --client-certificate=/etc/pki/k8s/cs-client/cs-client.crt --client-key=/etc/pki/k8s/cs-client/cs-client.key --embed-certs=true --kubeconfig=/etc/pki/k8s/cs-client/cs-client.kubeconfig

3)kubectl config --namespace=kube-system set-context cs-client@k8s --cluster=k8s --user=cs-client --kubeconfig=/etc/pki/k8s/cs-client/cs-client.kubeconfig

4)kubectl config use-context cs-client@k8s --kubeconfig=/etc/pki/k8s/cs-client/cs-client.kubeconfig

Verify:

kubectl config current-context --kubeconfig=/etc/pki/k8s/cs-client/cs-client.kubeconfig

1.11 Configuration for kubelet and kube-proxy

1)kubectl config set-cluster k8s --server=https://www.superred.com:6443 --certificate-authority=/etc/pki/k8s/ca.crt --embed-certs=true --kubeconfig=/etc/pki/k8s/kubelet-proxy/kubelet-proxy.kubeconfig

2)kubectl config set-credentials kubelet-proxy --client-certificate=/etc/pki/k8s/kubelet-proxy/kubelet-proxy.crt --client-key=/etc/pki/k8s/kubelet-proxy/kubelet-proxy.key --embed-certs=true --kubeconfig=/etc/pki/k8s/kubelet-proxy/kubelet-proxy.kubeconfig

3)kubectl config --namespace=kube-system set-context kubelet-proxy@k8s --cluster=k8s --user=kubelet-proxy --kubeconfig=/etc/pki/k8s/kubelet-proxy/kubelet-proxy.kubeconfig

4)kubectl config use-context kubelet-proxy@k8s --kubeconfig=/etc/pki/k8s/kubelet-proxy/kubelet-proxy.kubeconfig

Verify:

kubectl config current-context --kubeconfig=/etc/pki/k8s/kubelet-proxy/kubelet-proxy.kubeconfig
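The four kubectl config steps are identical for every component apart from the component name. The sketch below only prints the commands for a given name so they can be reviewed before running; it assumes the /etc/pki/k8s/NAME/NAME.{crt,key,kubeconfig} layout and the cluster name k8s used in this article, and print_kubeconfig_commands is a hypothetical helper:

```shell
# Print (do not run) the four kubectl config commands for one component.
# Paths, server URL, and the cluster name k8s follow the layout used in this article.
print_kubeconfig_commands() {
    name=$1                          # e.g. cs-client or kubelet-proxy
    dir=/etc/pki/k8s/$name
    kc=$dir/$name.kubeconfig
    echo "kubectl config set-cluster k8s --server=https://www.superred.com:6443 --certificate-authority=/etc/pki/k8s/ca.crt --embed-certs=true --kubeconfig=$kc"
    echo "kubectl config set-credentials $name --client-certificate=$dir/$name.crt --client-key=$dir/$name.key --embed-certs=true --kubeconfig=$kc"
    echo "kubectl config --namespace=kube-system set-context $name@k8s --cluster=k8s --user=$name --kubeconfig=$kc"
    echo "kubectl config use-context $name@k8s --kubeconfig=$kc"
}

print_kubeconfig_commands kubelet-proxy
```

Piping the output to sh would execute the commands; printing first keeps the helper safe to try on a machine without kubectl.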

2. Component configuration

On all nodes:

1)iptables -P FORWARD ACCEPT

2)systemctl disable firewalld; systemctl stop firewalld;

3)setenforce 0; to make this permanent, disable SELinux in /etc/selinux/config:

root@www:~/work/k8s/pods/kuber/python-web # cat /etc/selinux/config

SELINUX=disabled

SELINUXTYPE=targeted

2.1 etcd: master node only

root@www:~/work/k8s/pods/kuber/python-web # cat /etc/etcd/etcd.conf | grep -v ^# | grep -v ^$

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"

ETCD_NAME="master"

ETCD_ADVERTISE_CLIENT_URLS="http://10.10.3.127:2379,http://10.10.3.127:4001"

etcdctl mk /atomic.io/network/config '{ "Network": "10.0.0.0/16" }'

2.2 flanneld: all nodes

root@www:~/work/k8s/pods/kuber/python-web # cat /etc/sysconfig/flanneld | grep -v ^# | grep -v ^$

FLANNEL_ETCD_ENDPOINTS="http://10.10.3.127:2379"

FLANNEL_ETCD_PREFIX="/atomic.io/network"

2.3 docker: all nodes

root@www:~/work/k8s/pods/kuber/python-web # cat /etc/sysconfig/docker | grep -v ^# | grep -v ^$

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --insecure-registry 10.10.3.104:8123 --insecure-registry 10.10.3.104 --insecure-registry harbor.superred.com'

if [ -z "${DOCKER_CERT_PATH}" ]; then

DOCKER_CERT_PATH=/etc/docker

fi

2.4 apiserver: master node only

root@www:/etc/kubernetes # cat apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

#KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0 --bind-address=0.0.0.0 --advertise-address=10.10.3.127"

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0 --bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080 --secure-port=6443"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://10.10.3.127:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Add your own!

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false"

# username+password password,name,uid[123456aA,admin,1]

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv"

#token-auth [token,admin,1]

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv"

#ca

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --client-ca-file=/etc/pki/k8s/ca.crt --tls-private-key-file=/etc/pki/k8s/server.key --tls-cert-file=/etc/pki/k8s/server.crt"

#all

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv --client-ca-file=/etc/pki/k8s/ca.crt --tls-private-key-file=/etc/pki/k8s/server.key --tls-cert-file=/etc/pki/k8s/server.crt --service-account-key-file=/etc/pki/k8s/server.key --service-account-lookup=true"

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv --client-ca-file=/etc/pki/k8s/ca.crt --tls-private-key-file=/etc/pki/k8s/server.key --tls-cert-file=/etc/pki/k8s/server.crt --service-account-key-file=/etc/pki/k8s/server.key --service-account-lookup=true --runtime-config=authorization.k8s.io/v1beta1=true --authorization-mode=RBAC"

#KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv --client-ca-file=/etc/pki/k8s/ca.crt --tls-private-key-file=/etc/pki/k8s/server.key --tls-cert-file=/etc/pki/k8s/server.crt --service-account-key-file=/etc/pki/k8s/server.key --service-account-lookup=true --storage-backend=etcd3 --etcd-cafile=/etc/pki/k8s/ca.crt --etcd-certfile=//etc/pki/k8s/server.crt --etcd-keyfile=/etc/pki/k8s/server.key"

KUBE_API_ARGS="--service-node-port-range=1-65535 --anonymous-auth=false --basic-auth-file=/etc/kubernetes/api/staticpassword-auth.csv --token-auth-file=/etc/kubernetes/api/statictoken-auth.csv --client-ca-file=/etc/pki/k8s/ca.crt --tls-private-key-file=/etc/pki/k8s/apiserver/apiserver.key --tls-cert-file=/etc/pki/k8s/apiserver/apiserver.crt --service-account-key-file=/etc/pki/k8s/service-account/service-account.key --service-account-lookup=true"

2.5 controller-manager: master node only

root@www:/etc/kubernetes # cat controller-manager

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

#KUBE_CONTROLLER_MANAGER_ARGS="--pv-recycler-pod-template-filepath-nfs=/etc/kubernetes/recycler.yaml --pv-recycler-pod-template-filepath-hostpath=/etc/kubernetes/recycler.yaml --service-account-private-key-file=/etc/pki/k8s/server.key"

#KUBE_CONTROLLER_MANAGER_ARGS="--pv-recycler-pod-template-filepath-nfs=/etc/kubernetes/recycler.yaml --pv-recycler-pod-template-filepath-hostpath=/etc/kubernetes/recycler.yaml --service-account-private-key-file=/etc/pki/k8s/server.key --cluster-signing-cert-file=/etc/pki/k8s/server.crt --cluster-signing-key-file=/etc/pki/k8s/server.key --root-ca-file=/etc/pki/k8s/ca.crt --kubeconfig=/etc/pki/k8s/config"

KUBE_CONTROLLER_MANAGER_ARGS="--pv-recycler-pod-template-filepath-nfs=/etc/kubernetes/recycler.yaml --pv-recycler-pod-template-filepath-hostpath=/etc/kubernetes/recycler.yaml --service-account-private-key-file=/etc/pki/k8s/service-account/service-account.key --cluster-signing-cert-file=/etc/pki/k8s/cs-client/cs-client.crt --cluster-signing-key-file=/etc/pki/k8s/cs-client/cs-client.key --root-ca-file=/etc/pki/k8s/ca.crt --kubeconfig=/etc/pki/k8s/cs-client/cs-client.kubeconfig"

2.6 scheduler: master node only

root@www:/etc/kubernetes # cat scheduler

###

# kubernetes scheduler config

# default config should be adequate

# Add your own!

KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/pki/k8s/cs-client/cs-client.kubeconfig"

#KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/pki/k8s/config"

2.7 config: all nodes. Common settings shared by apiserver, controller-manager, kubelet, proxy, and scheduler

root@www:/etc/kubernetes # cat config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

#KUBE_MASTER="--master=http://10.10.3.127:8080"

KUBE_MASTER="--master=https://www.superred.com:6443"

2.8 recycler.yaml belongs to the PV/PVC (persistent volume) operations; for details see https://blog.csdn.net/Michaelwubo/article/details/107998395

root@www:/etc/kubernetes # cat recycler.yaml

apiVersion: v1

kind: Pod

metadata:

  name: pv-recycler-

  namespace: default

spec:

  restartPolicy: Never

  volumes:

  - name: vol

    hostPath:

      path: /any/path/it/will/be/replaced

  containers:

  - name: pv-recycler

    image: "harbor.superred.com/kubernetes/busybox:latest"

    imagePullPolicy: IfNotPresent

    command: ["/bin/sh", "-c", "test -e /scrub && rm -rf /scrub/..?* /scrub/.[!.]* /scrub/* && test -z \"$(ls -A /scrub)\" || exit 1"]

    volumeMounts:

    - name: vol

      mountPath: /scrub

2.9 Node 10.10.3.183

[root@localhost kubernetes]# cat kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on

#KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=10.10.3.183"

# location of the api-server

#KUBELET_API_SERVER="--api-servers=http://10.10.3.127:8080"

KUBELET_API_SERVER="--api-servers=https://www.superred.com:6443"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=harbor.superred.com/kubernetes/rhel7/pod-infrastructure:latest"

# Add your own!

#KUBELET_ARGS="--cluster_dns=10.254.254.254 --cluster_domain=superred.com --kubeconfig=/etc/pki/k8s/config"

KUBELET_ARGS="--cluster_dns=10.254.254.254 --cluster_domain=superred.com --kubeconfig=/etc/pki/k8s/kubelet-proxy.kubeconfig"

#KUBELET_ARGS="--cluster_dns=10.254.254.254 --cluster_domain=superred.com --client-ca-file=/etc/pki/k8s/ca.crt --tls-cert-file=/etc/pki/k8s/server.crt --tls-private-key-file=server.key --kubeconfig=/etc/pki/k8s/config"

#KUBELET_ARGS="--cluster_dns=10.254.254.254 --cluster_domain=superred.com"

[root@localhost kubernetes]# cat proxy

###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS="--kubeconfig=/etc/pki/k8s/kubelet-proxy.kubeconfig"

#KUBE_PROXY_ARGS="--kubeconfig=/etc/pki/k8s/config"

2.10 Node 10.10.3.119

[root@localhost kubernetes]# cat kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on

# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=10.10.3.119"

# location of the api-server

#KUBELET_API_SERVER="--api-servers=http://10.10.3.127:8080"

KUBELET_API_SERVER="--api-servers=https://www.superred.com:6443"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=harbor.superred.com/kubernetes/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS="--cluster_dns=10.254.254.254 --cluster_domain=superred.com --kubeconfig=/etc/pki/k8s/kubelet-proxy.kubeconfig"

#KUBELET_ARGS="--cluster_dns=10.254.254.254 --cluster_domain=superred.com --kubeconfig=/etc/pki/k8s/config"

[root@localhost kubernetes]# cat proxy

###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS="--kubeconfig=/etc/pki/k8s/kubelet-proxy.kubeconfig"

#KUBE_PROXY_ARGS="--kubeconfig=/etc/pki/k8s/config"

3 Start the services

3.1 master

root@www:/etc/pki/k8s # systemctl restart etcd

root@www:/etc/pki/k8s # systemctl restart flanneld.service

root@www:/etc/pki/k8s # systemctl restart docker

root@www:/etc/pki/k8s # systemctl restart kube-apiserver.service

root@www:/etc/pki/k8s # systemctl restart kube-controller-manager.service

root@www:/etc/pki/k8s # systemctl restart kube-scheduler.service

3.2 node

[root@localhost kubernetes]# systemctl restart kubelet

[root@localhost kubernetes]# systemctl restart flanneld

[root@localhost kubernetes]# systemctl restart kubelet

[root@localhost kubernetes]# systemctl restart kube-proxy

3.3 Copy the administrator's config (by default ~/.kube/config) to any server that has the kubectl client. It does not have to be one of the three servers above.

Then run kubectl get nodes.

kubectl describe nodes 10.10.3.119 shows the node's status:

Ready True Fri, 21 Aug 2020 02:36:25 -0400 Fri, 21 Aug 2020 01:30:48 -0400 KubeletReady kubelet is posting ready status

root@www:~/work/k8s/pods/kuber/python-web # kubectl get nodes

NAME STATUS AGE

10.10.3.119 Ready 3h

10.10.3.183 Ready 3h
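When a cluster has more than a few nodes, it can help to filter this listing for nodes that are not Ready. A minimal sketch, assuming the three-column NAME STATUS AGE layout shown in this article and that awk is available; not_ready_nodes is a hypothetical helper that reads kubectl get nodes output on stdin:

```shell
# Read `kubectl get nodes` output on stdin and print nodes whose STATUS is not exactly Ready.
not_ready_nodes() {
    # NR > 1 skips the header line; $2 is the STATUS column
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input copied from the earlier kubectl get nodes output in this article
printf '%s\n' \
    'NAME STATUS AGE' \
    '10.10.3.119 Ready 6d' \
    '10.10.3.183 NotReady 6d' | not_ready_nodes
```

Against a live cluster the usage would be kubectl get nodes | not_ready_nodes. Because the comparison is exact, a combined status such as Ready,SchedulingDisabled is also reported.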

3.4 Example: mysql

1) Create the namespace

kubectl create ns wubo

2) Create the serviceaccount (sa)

root@www:/etc/pki/k8s # kubectl create sa admin -n wubo

3) Create the docker-registry secret for the private registry

kubectl create secret docker-registry registrysecret --docker-server=http://harbor.superred.com --docker-username=admin --docker-password=Harbor12345 [email protected] -n wubo

4) kubectl create -f mysql-con-svc.yaml

root@www:~/work/k8s/pods/kuber/python-web # cat mysql-con-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: server-mysql

namespace: wubo

labels:

name: server-mysql

spec:

#type: ClusterIP

#type: LoadBalancer

type: NodePort

#clusterIP: 10.254.111.110

#externalIPs:

# - 10.10.3.119

ports:

- port: 3306

nodePort: 33306

targetPort: 3306

selector:

name: server-mysql-pod

#spec:

# #type: ClusterIP

# #type: LoadBalancer

# #type: NodePort

# #clusterIP: 10.254.111.110

# externalIPs:

# - 10.10.3.119

# ports:

# - port: 3306

# #nodePort: 33306

# #targetPort: 3306

# selector:

# name: server-mysql-pod

---

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

namespace: wubo

name: server-mysql-deploy

labels:

name: server-mysql-deploy

spec:

replicas: 1

selector:

matchLabels:

name: server-mysql-pod

template:

metadata:

name: server-mysql-pod

labels:

name: server-mysql-pod

spec:

containers:

- name: server-mysql

image: harbor.superred.com/superredtools/mysql:5.7

resources:

requests:

cpu: 100m

memory: 100Mi

ports:

- containerPort: 3306

env:

- name: 'MYSQL_ROOT_PASSWORD'

value: '123456aA'

volumeMounts:

- name: mysql-storage

mountPath: /var/lib/mysql

imagePullPolicy: IfNotPresent # one of IfNotPresent, Always, Never

#nodeName: k8s-node-2

restartPolicy: Always

volumes:

- name: mysql-storage

hostPath:

path: /mysql/data

imagePullSecrets:

- name: registrysecret

serviceAccountName: admin

5) Result

root@www:/etc/pki/k8s # watch kubectl get pod,svc,serviceaccount,secrets -o wide -n wubo

Every 2.0s: kubectl get pod,svc,serviceaccount,secrets -o wide -n wubo Fri Aug 21 02:39:20 2020

NAME READY STATUS RESTARTS AGE IP NODE

po/server-mysql-deploy-1677406881-9w09k 1/1 Running 0 1h 10.0.29.2 10.10.3.183

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/server-mysql 10.254.14.40 3306:33306/TCP 1h name=server-mysql-pod

NAME SECRETS AGE

sa/admin 1 3h

sa/default 1 3h

NAME TYPE DATA AGE

secrets/admin-token-mv8w7 kubernetes.io/service-account-token 3 3h

secrets/default-token-1jl17 kubernetes.io/service-account-token 3 3h

secrets/registrysecret kubernetes.io/dockercfg 1 1h

3.5 案例grafana+heapster+influxdb的

https://blog.csdn.net/liukuan73/article/details/78704395

![]()

![]()

Heapster首先从apiserver获取集群中所有Node的信息,然后通过这些Node上的kubelet获取有用数据,而kubelet本身的数据则是从cAdvisor得到。所有获取到的数据都被推到Heapster配置的后端存储中,并还支持数据的可视化。现在后端存储 + 可视化的方法,如InfluxDB + grafana。

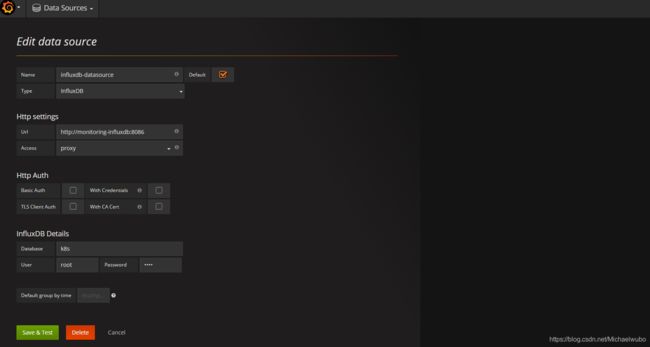

说明:本实验采用influxdb作为heapster后端

1.2 influxdb、grafana

influxdb和grafana的介绍请见这篇文章

2.部署

2.1 获取最新版(v1.5.2)heapster+influxdb+grafana安装yaml文件

到 heapster release 页面下载最新版本的 heapster:

wget https://github.com/kubernetes/heapster/archive/v1.5.2.zip

unzip v1.5.2.zip

cd heapster-1.5.2/deploy/kube-config/influxdb2.2 修改配置文件

我修改好的文件已上传到github。

2.2.1 heapster配置

修改heapster.yml配置文件:

root@www:~/work/k8s/pods/kuber/jiance/heapster-1.5.2/deploy/kube-config/influxdb/back # cat heapster.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: heapster

namespace: kube-system

---

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: heapster

namespace: kube-system

spec:

replicas: 1

template:

metadata:

labels:

task: monitoring

k8s-app: heapster

spec:

serviceAccountName: heapster

containers:

- name: heapster

image: harbor.superred.com/kubernetes/heapster-amd64:v1.5.2

imagePullPolicy: IfNotPresent

command:

- /heapster

#- --source=kubernetes:https://kubernetes.default

- --source=kubernetes:http://10.10.3.127:8080?inClusterConfig=false

#- --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086

- --sink=influxdb:http://monitoring-influxdb.kube-system.svc.superred.com:8086

#- --sink=influxdb:http://10.10.3.119:48086

---

apiVersion: v1

kind: Service

metadata:

labels:

task: monitoring

# For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)

# If you are NOT using this as an addon, you should comment out this line.

kubernetes.io/cluster-service: 'true'

kubernetes.io/name: Heapster

name: heapster

namespace: kube-system

spec:

ports:

- port: 80

targetPort: 8082

selector:

k8s-app: heapster

Once deployed, open kubernetes-dashboard to check the result: it should show CPU, memory, and load utilization graphs for each node and pod.

2.2.2 influxdb configuration

Modify influxdb.yaml:

root@www:~/work/k8s/pods/kuber/jiance/heapster-1.5.2/deploy/kube-config/influxdb/back # cat influxdb.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: monitoring-influxdb

namespace: kube-system

spec:

replicas: 1

template:

metadata:

labels:

task: monitoring

k8s-app: influxdb

spec:

containers:

- name: influxdb

image: harbor.superred.com/kubernetes/heapster-influxdb-amd64:v1.3.3

env:

- name: INFLUXDB_BIND_ADDRESS

value: 127.0.0.1:8088

volumeMounts:

- mountPath: /data

name: influxdb-storage

volumes:

- name: influxdb-storage

emptyDir: {}

---

apiVersion: v1

kind: Service

metadata:

labels:

task: monitoring

# For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)

# If you are NOT using this as an addon, you should comment out this line.

kubernetes.io/cluster-service: 'true'

kubernetes.io/name: monitoring-influxdb

name: monitoring-influxdb

namespace: kube-system

spec:

type: NodePort

ports:

- name: http

port: 8083

nodePort: 48083

targetPort: 8083

- name: api

port: 8086

nodePort: 48086

targetPort: 8086

selector:

k8s-app: influxdb

Note: the following is not present by default:

env:

- name: INFLUXDB_BIND_ADDRESS

value: 127.0.0.1:8088

Without it, startup failed for me with a bind error on port 8088.

2.2.3 grafana configuration

Modify the grafana.yaml configuration file:

root@www:~/work/k8s/pods/kuber/jiance/heapster-1.5.2/deploy/kube-config/influxdb/back # cat grafana.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: harbor.superred.com/kubernetes/heapster-grafana-amd64:v4.4.3
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  # type: NodePort
  type: NodePort
  ports:
  - port: 80
    nodePort: 43000
    targetPort: 3000
  selector:
    k8s-app: grafana

Note

After installing Grafana with the default template configuration, the namespace selector on the page lists only default and kube-system. This does not mean that metrics from other namespaces are not being collected; they are simply not enabled for display in Grafana. See Cannot see other namespaces except, kube-system and default #1279.

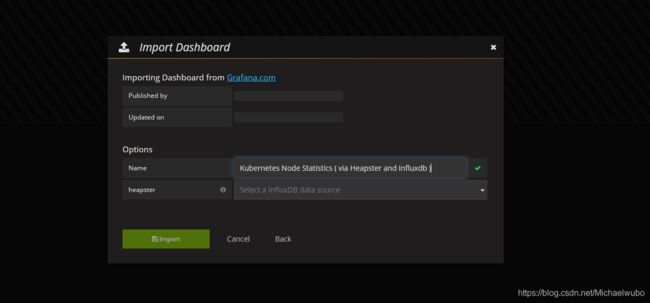

Modify the Grafana template

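Under Templating, set the Data source of the namespace variable to influxdb-datasource and set Refresh to on Dashboard Load. Save the settings and refresh the browser; the other namespaces then appear in the selector.

Configure the InfluxDB data source

Click "Add data source" to configure the data source: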

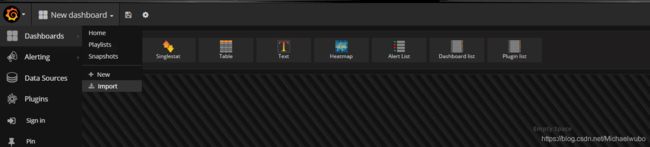
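Before filling in the data source form, it can help to confirm that InfluxDB answers on its API port. The sketch below only builds an InfluxDB 1.x HTTP API query URL; the Service name and port 8086 come from influxdb.yaml above, while the database name "k8s" and the helper name are assumptions (adjust the database to whatever your Heapster sink writes to).

```python
from urllib.parse import urlencode


def influxdb_query_url(base_url, database, query):
    """Build an InfluxDB 1.x HTTP API /query URL for a read-only query."""
    params = urlencode({"db": database, "q": query})
    return f"{base_url.rstrip('/')}/query?{params}"


# In-cluster address: Service name and API port from influxdb.yaml above.
# The database name "k8s" is an assumption, not confirmed by this document.
url = influxdb_query_url("http://monitoring-influxdb:8086", "k8s", "SHOW MEASUREMENTS")
print(url)
```

The printed URL can be fetched from a pod inside the cluster, for example with curl or urllib.request.urlopen. From outside the cluster, replace the base URL with a node address and the nodePort 48086 defined in the Service.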

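Configure dashboards

1. Import the "Kubernetes Node Statistics" dashboard

Import the template with ID 3646:

2. Import the "Kubernetes Pod Statistics" dashboard

Import the template with ID 3649:

Result

Summary

The k8s-native monitoring solution mainly monitors pods and nodes; it falls short when it comes to other Kubernetes components (API Server, Scheduler, Controller Manager, and so on). Prometheus (an open-source combination of monitoring, alerting, and a time-series database) is more comprehensive, and I will cover it hands-on when time allows. Monitoring is a very large topic: the purpose of monitoring is alerting, and the purpose of alerting is to guide the system toward self-healing. Only when all three stages (monitoring => alerting => self-healing) are in place, so that application performance and failures are managed automatically, does a system count as a true application performance management (APM) system. This series will keep working toward that goal, so please stay tuned. If you have any good ideas, feel free to share them in the comments.

3.6 Example: kubernetes-dashboard.yaml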

root@www:~/work/k8s/pods/kuber/kuber_ui # cat kubernetes-dashboard.yaml

# Copyright 2015 Google Inc. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Configuration to deploy release version of the Dashboard UI.
#
# Example usage: kubectl create -f
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  labels:
    app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kubernetes-dashboard
  template:
    metadata:
      labels:
        app: kubernetes-dashboard
      # Comment the following annotation if Dashboard must not be deployed on master
      annotations:
        scheduler.alpha.kubernetes.io/tolerations: |
          [
            {
              "key": "dedicated",
              "operator": "Equal",
              "value": "master",
              "effect": "NoSchedule"
            }
          ]
    spec:
      #imagePullSecrets:
      #- name: dashboard-key
      containers:
      - name: kubernetes-dashboard
        image: harbor.superred.com/kubernetes/kubernetes-dashboard-amd64:v1.5.1
        #image: jettech.com:5000/allan1991/kubernetes-dashboard-amd64:v1.5.1
        imagePullPolicy: Always
        ports:
        - containerPort: 9090
          protocol: TCP
        args:
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
          - --apiserver-host=http://10.10.3.127:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      #nodeName: k8s-test-100
      imagePullSecrets:
      - name: registrysecret
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  #type: NodePort
  ports:
  - port: 80
    #nodePort: 8090
    targetPort: 9090
  selector:
    app: kubernetes-dashboard

Dashboard URL: http://10.10.3.127:8080/ui
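The scheduler.alpha.kubernetes.io/tolerations annotation in the Deployment above carries a JSON array inside a YAML string, so a YAML parser treats it as opaque text and will not catch JSON syntax errors in it. As a rough sketch, the value can be checked separately before the manifest is applied; the helper name and the checks it performs are assumptions for illustration, not the validation Kubernetes itself runs.

```python
import json

# JSON payload copied from the tolerations annotation above.
TOLERATIONS_ANNOTATION = """
[
  {
    "key": "dedicated",
    "operator": "Equal",
    "value": "master",
    "effect": "NoSchedule"
  }
]
"""

# The two toleration operators Kubernetes defines.
VALID_OPERATORS = {"Equal", "Exists"}


def parse_tolerations(raw):
    """Parse the annotation value and return the list of toleration objects."""
    tolerations = json.loads(raw)  # raises ValueError on malformed JSON
    if not isinstance(tolerations, list):
        raise ValueError("tolerations annotation must be a JSON array")
    for toleration in tolerations:
        if not isinstance(toleration, dict):
            raise ValueError("each toleration must be a JSON object")
        # An omitted operator is treated as "Equal".
        operator = toleration.get("operator", "Equal")
        if operator not in VALID_OPERATORS:
            raise ValueError(f"unsupported operator: {operator!r}")
    return tolerations


for toleration in parse_tolerations(TOLERATIONS_ANNOTATION):
    print(toleration.get("key"), toleration.get("operator"), toleration.get("effect"))
```

The same helper accepts shorter entries that carry only a key and an Exists operator.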

3.7 Example: kube-dns

root@www:~/work/k8s/pods/kuber/kuber-dns/k8s-dns/1.3-after/1.5(master⚡) # cat skydns-rc.yaml

# Copyright 2016 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# TODO - At some point, we need to rename all skydns-*.yaml.* files to kubedns-*.yaml.*

# Should keep target in cluster/addons/dns-horizontal-autoscaler/dns-horizontal-autoscaler.yaml

# in sync with this file.

# Warning: This is a file generated from the base underscore template file: skydns-rc.yaml.base

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: kube-dns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

spec:

replicas: 1

# replicas: not specified here:

# 1. In order to make Addon Manager do not reconcile this replicas parameter.

# 2. Default is 1.

# 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

strategy:

rollingUpdate:

maxSurge: 10%

maxUnavailable: 0

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'

spec:

containers:

- name: kubedns

#image: gcr.io/google_containers/kubedns-amd64:1.9

image: harbor.superred.com/kubernetes/kubedns-amd64:1.9

#image: hub.c.163.com/allan1991/kubedns-amd64:1.9

resources:

# TODO: Set memory limits when we've profiled the container for large

# clusters, then set request = limit to keep this container in