k8s Security 05 -- Configuring Network Policies (NetworkPolicy)


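- 1 Introduction
- 2 Examples
  - 2.1 Example 1
    - 1.1 Create the namespaces and pods
    - 1.2 Check the pod and svc information
    - 1.3 Test network connectivity
    - 1.4 Access the service from outside the cluster via NodePort
    - 1.5 Create a network policy: deny all ingress to prod-a
    - 1.6 Test the network policy
    - 1.7 Create a network policy: allow all ingress to pods labeled app: front
    - 1.8 Test the network policy
  - 2.2 Example 2
    - 2.1 Create the namespaces and pods
    - 2.2 Create the network policy
    - 2.3 Test the network policy
- 3 Caveats
  - 3.1 Differences between the common selectors
  - 3.2 Denying and allowing all ingress to a namespace
- 4 References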

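1 Introduction

The previous article, "k8s security 04 – kube-apiserver security configuration", covered the common security settings of kube-apiserver. This article continues from there and introduces Kubernetes network policies, illustrated with two examples. More network policy examples and caveats will be added to this article over time.

2 Examples

2.1 Example 1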

1.1 Create the namespaces and pods

```
$ kubectl create ns dev-ns
$ kubectl create ns prod-a
$ kubectl create ns prod-b
$ vim netpods.yaml
```

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: devapp
  namespace: dev-ns
  labels:
    app: devapp
    user: bob
spec:
  containers:
  - name: ubuntu
    image: ubuntu:20.04
    command: ["/bin/sleep", "3650d"]
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
---
apiVersion: v1
kind: Pod
metadata:
  name: frontend
  namespace: prod-a
  labels:
    app: front
    user: tim
spec:
  containers:
  - name: frontend
    image: nginx:1.19.6
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
---
apiVersion: v1
kind: Pod
metadata:
  name: backend
  namespace: prod-b
  labels:
    app: back
    user: tim
spec:
  containers:
  - name: backend
    image: nginx:1.19.6
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: devapp
    user: bob
  name: devapp
  namespace: dev-ns
spec:
  ports:
  - port: 80
    name: web
    protocol: TCP
    targetPort: 80
  - port: 22
    name: ssh
    protocol: TCP
    targetPort: 22
  selector:
    app: devapp
    user: bob
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: front
    user: tim
  name: frontend
  namespace: prod-a
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: front
    user: tim
  sessionAffinity: None
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: back
    user: tim
  name: backend
  namespace: prod-b
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: back
    user: tim
  sessionAffinity: None
  type: ClusterIP
```

```
$ kubectl apply -f netpods.yaml
```

1.2 Check the pod and svc information

```
$ kubectl get pod,svc -A |grep -v kube-system|grep -v lens-metrics
NAMESPACE   NAME           READY   STATUS    RESTARTS   AGE
dev-ns      pod/devapp     1/1     Running   0          12m
prod-a      pod/frontend   1/1     Running   0          10m
prod-b      pod/backend    1/1     Running   0          10m

NAMESPACE   NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
default     service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP         91d
dev-ns      service/devapp       ClusterIP   10.98.181.135   <none>        80/TCP,22/TCP   12m
prod-a      service/frontend     NodePort    10.99.102.196   <none>        80:30119/TCP    12m
prod-b      service/backend      ClusterIP   10.105.19.195   <none>        80/TCP          12m
```

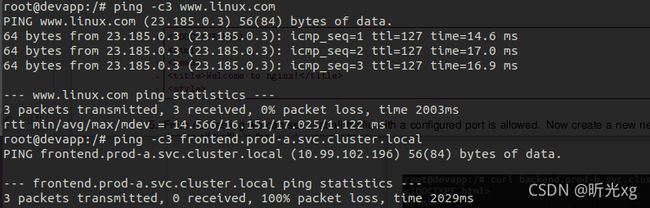

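1.3 Test network connectivity

```
$ kubectl -n dev-ns exec -it devapp -- bash
# apt-get update; apt-get install iputils-ping netcat ssh curl iproute2 -y
# curl frontend.prod-a.svc.cluster.local
# curl backend.prod-b.svc.cluster.local
# ping -c3 www.linux.com
# ping -c3 frontend.prod-a.svc.cluster.local
# ping -c3 backend.prod-b.svc.cluster.local
```

Results:

- frontend is reachable.
- backend is reachable.
- www.linux.com can be pinged.
- frontend.prod-a.svc.cluster.local and backend.prod-b.svc.cluster.local cannot be pinged. This is expected. A Service ClusterIP is a virtual IP that kube-proxy forwards only for the Service's declared ports, so it typically does not answer ICMP, at least in kube-proxy's iptables mode.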

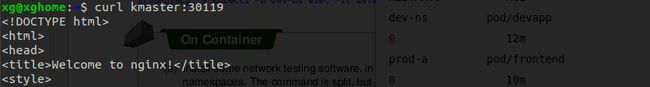

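1.4 Access the service from outside the cluster via NodePort

Here kmaster is a node of the author's cluster, and 30119 is the NodePort assigned to the frontend service in the 1.2 output.

```
$ curl kmaster:30119
```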

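1.5 Create a network policy: deny all ingress to prod-a

```
$ vim denyall.yaml
```

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: prod-deny-all
  namespace: prod-a
spec:
  podSelector: {}   # empty selector: the policy applies to every pod in prod-a
  policyTypes:
  - Ingress         # no ingress rules are listed, so all ingress is denied
```

```
$ kubectl apply -f denyall.yaml
networkpolicy.networking.k8s.io/prod-deny-all created
```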
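The policy above lists only Ingress under policyTypes. That is why outbound traffic from prod-a keeps working in the 1.6 test. For contrast, here is a sketch of a namespace-wide default deny that covers both directions. It is a hypothetical variant, not applied in this article, and the name `prod-deny-all-both` is made up.

```yaml
# Hypothetical variant (not applied in this article): default deny in both
# directions for prod-a. Every pod in the namespace is selected, and because
# both policy types are listed without any rules, all ingress AND all egress
# is denied, including DNS lookups to the cluster DNS service.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: prod-deny-all-both   # hypothetical name
  namespace: prod-a
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
```

With such a policy in place, the curl and ping tests run from inside frontend in 1.6 would also be expected to fail. They would only work again once egress rules (at minimum for DNS) are added.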

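1.6 Test the network policy

Access from dev-ns:

```
$ kubectl -n dev-ns exec -it devapp -- bash
# curl frontend.prod-a.svc.cluster.local
# curl backend.prod-b.svc.cluster.local
```

The service in prod-a can no longer be reached, while the service in prod-b is still reachable.

```
$ curl kmaster:30119   # access from outside the cluster fails as well
```

Access from prod-a:

```
$ kubectl -n prod-a exec -it frontend -- bash
# curl backend.prod-b.svc.cluster.local
# ping -c3 www.linux.com
# curl frontend.prod-a.svc.cluster.local
```

From prod-a, other namespaces and external domains are still reachable, because prod-deny-all restricts only ingress. Accessing prod-a's own frontend service fails, because that request is ingress to frontend, which the policy blocks.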

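1.7 Create a network policy: allow all ingress to pods labeled app: front

```
$ vim allowinternet.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: internet-access
  namespace: prod-a
spec:
  podSelector:
    matchLabels:
      app: front    # applies only to pods labeled app: front
  policyTypes:
  - Ingress
  ingress:
  - {}              # a single empty rule matches every source: all ingress is allowed
```

```
$ kubectl apply -f allowinternet.yaml
networkpolicy.networking.k8s.io/internet-access created
```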

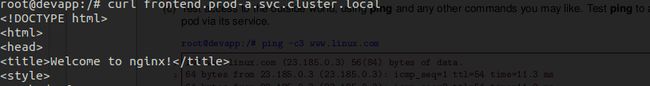
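The policy above opens frontend to every source. Suppose the intent were instead to admit only pods labeled app: front. In that case the rule needs a `from.podSelector`, as described in case 2 of 3.1. A minimal sketch of that alternative, under the hypothetical name `allow-from-front`:

```yaml
# Hypothetical variant: only pods labeled app: front in prod-a (the policy's
# own namespace, since no namespaceSelector is given) may reach pods labeled
# app: front. All other ingress to those pods stays denied.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-front   # hypothetical name
  namespace: prod-a
spec:
  podSelector:
    matchLabels:
      app: front
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: front
```

Unlike internet-access, this variant would not reopen NodePort access from outside the cluster.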

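1.8 Test the network policy

```
$ kubectl -n prod-a exec -it frontend -- bash
# curl frontend.prod-a.svc.cluster.local

$ curl kmaster:30119

$ kubectl -n prod-b exec -it backend -- bash
# curl frontend.prod-a.svc.cluster.local
```

The frontend service is now reachable from prod-a, from prod-b, and from outside the cluster. NetworkPolicies are additive. prod-deny-all still selects frontend, but internet-access also selects it and allows all ingress. A pod accepts any traffic that at least one policy selecting it allows.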

2.2 Example 2

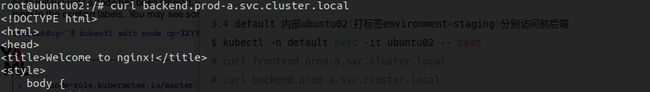

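Task: in the prod-a namespace, create a NetworkPolicy named pod-access that applies to the Pod named frontend. It must allow access only from two sources: Pods in the testing namespace (identified here by the namespace label name: testing), or Pods labeled environment: staging in any namespace.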

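2.1 Create the namespaces and pods

Create the namespaces:

```
$ vim ns.yaml
```

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: dev-ns
  labels:
    name: testing   # the label matched by the policy's namespaceSelector
spec: {}
status: {}
---
apiVersion: v1
kind: Namespace
metadata:
  name: prod-a
spec: {}
status: {}
```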

```
$ kubectl apply -f ns.yaml
namespace/dev-ns created
namespace/prod-a created

$ kubectl get ns -A --show-labels|grep -E 'dev-ns|prod-a|default'
default   Active   92d   kubernetes.io/metadata.name=default
dev-ns    Active   21h   kubernetes.io/metadata.name=dev-ns,name=testing
prod-a    Active   21h   kubernetes.io/metadata.name=prod-a
```

Only dev-ns has the label name: testing.
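The output also shows the `kubernetes.io/metadata.name` label. Kubernetes sets this label automatically on every namespace (as of version 1.21), and all three namespaces here carry it. A namespaceSelector can use it to pick dev-ns by name, without a custom `name: testing` label. The following is a sketch of such an ingress fragment. It is an alternative only and is not what this article applies.

```yaml
# Hypothetical ingress fragment (would sit under spec.ingress of a
# NetworkPolicy): admits all pods in the dev-ns namespace, matched by the
# kubernetes.io/metadata.name label that Kubernetes sets automatically.
ingress:
- from:
  - namespaceSelector:
      matchLabels:
        kubernetes.io/metadata.name: dev-ns
```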

Create the base pods. As in 1.3, curl is not part of the ubuntu image and has to be installed inside these pods before the tests in 2.3 (`apt-get update; apt-get install curl -y`).

```
$ vim base.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu01          # no labels
  namespace: default
spec:
  containers:
  - name: ubuntu01
    image: ubuntu:20.04
    command: ["sleep", "infinity"]
    imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu02
  namespace: default
  labels:
    environment: staging  # matches the second ingress rule of pod-access
spec:
  containers:
  - name: ubuntu02
    image: ubuntu:20.04
    command: ["sleep", "infinity"]
    imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu03
  namespace: dev-ns       # namespace labeled name: testing
  labels:
    app: dev
spec:
  containers:
  - name: ubuntu03
    image: ubuntu:20.04
    command: ["sleep", "infinity"]
    imagePullPolicy: IfNotPresent
```

```
$ kubectl apply -f base.yaml
```

Create the nginx pods and services:

```
$ vim netpods.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend
  namespace: prod-a
  labels:
    app: front
    environment: staging
spec:
  containers:
  - name: frontend
    image: nginx:1.19.6
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: front
  name: frontend
  namespace: prod-a
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: front
  sessionAffinity: None
  type: NodePort
---
apiVersion: v1
kind: Pod
metadata:
  name: backend
  namespace: prod-a
  labels:
    app: back
spec:
  containers:
  - name: backend
    image: nginx:1.19.6
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: back
  name: backend
  namespace: prod-a
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: back
  sessionAffinity: None
  type: NodePort
```

```
$ kubectl apply -f netpods.yaml
```

Check the pod and svc information:

```
$ kubectl get pod -A --show-labels|grep -E 'ubuntu|frontend|backend'
default   ubuntu01   1/1   Running   0   31m   <none>
default   ubuntu02   1/1   Running   0   31m   environment=staging
dev-ns    ubuntu03   1/1   Running   0   15m   app=dev
prod-a    backend    1/1   Running   0   43m   app=back
prod-a    frontend   1/1   Running   0   21h   app=front,environment=staging

$ kubectl get svc -A --show-labels|grep -E 'frontend|backend'
prod-a    backend    NodePort   10.110.111.176   <none>   80:32521/TCP   43m   app=back
prod-a    frontend   NodePort   10.100.182.62    <none>   80:31671/TCP   21h   app=front
```

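2.2 Create the network policy

A NetworkPolicy selects pods by label, not by name. The policy therefore targets frontend through its environment: staging label. backend in prod-a does not carry that label, so the policy does not affect it.

```
$ vim np.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: pod-access
  namespace: prod-a
spec:
  podSelector:
    matchLabels:
      environment: staging   # selects frontend only
  policyTypes:
  - Ingress
  ingress:
  # rule 1: any pod in a namespace labeled name: testing (dev-ns)
  - from:
    - namespaceSelector:
        matchLabels:
          name: testing
  # rule 2: pods labeled environment: staging in any namespace
  # (namespaceSelector and podSelector in the same element are ANDed)
  - from:
    - namespaceSelector:
        matchLabels: {}
      podSelector:
        matchLabels:
          environment: staging
```

```
$ kubectl apply -f np.yaml
networkpolicy.networking.k8s.io/pod-access created
```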
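Separate ingress rules are ORed, and so are the peers in a single `from` list. The two rules above (which set no `ports`) can therefore also be written as one rule with two peers. Here is a sketch of that equivalent form. It uses the hypothetical name `pod-access-compact` so that it would not replace pod-access if applied.

```yaml
# Hypothetical, equivalent form of pod-access: one ingress rule whose
# "from" list holds two peers; traffic matching either peer is allowed.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: pod-access-compact   # hypothetical name
  namespace: prod-a
spec:
  podSelector:
    matchLabels:
      environment: staging
  policyTypes:
  - Ingress
  ingress:
  - from:
    # peer 1: every pod in a namespace labeled name: testing
    - namespaceSelector:
        matchLabels:
          name: testing
    # peer 2: pods labeled environment: staging in any namespace
    # (both selectors sit in ONE list element, so they are ANDed)
    - namespaceSelector: {}
      podSelector:
        matchLabels:
          environment: staging
```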

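2.3 Test the network policy

2.3.1 Access from outside the cluster: frontend fails, backend works

NodePort traffic from outside the cluster matches neither ingress rule of pod-access, so frontend rejects it. backend is not selected by any NetworkPolicy, so its ingress remains unrestricted.

```
$ curl kmaster:31671   # frontend
$ curl kmaster:32521   # backend
```

2.3.2 From dev-ns, access frontend and backend

```
$ kubectl -n dev-ns exec -it ubuntu03 -- bash
# curl frontend.prod-a.svc.cluster.local
# curl backend.prod-a.svc.cluster.local
```

2.3.3 From ubuntu01 in default (no labels), access frontend and backend

```
$ kubectl -n default exec -it ubuntu01 -- bash
# curl frontend.prod-a.svc.cluster.local
# curl backend.prod-a.svc.cluster.local
```

2.3.4 From ubuntu02 in default (labeled environment=staging), access frontend and backend

```
$ kubectl -n default exec -it ubuntu02 -- bash
# curl frontend.prod-a.svc.cluster.local
# curl backend.prod-a.svc.cluster.local
```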

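3 Caveats

3.1 Differences between the common selectors

Case 1: the podSelector directly under spec determines which pods (in the policy's own namespace) the network policy applies to.

```yaml
spec:
  podSelector:   # which pods this policy applies to
```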


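Case 2: a podSelector under spec.ingress.from means that pods with matching labels may access the pods from case 1. Without a namespaceSelector next to it, only pods in the policy's own namespace are considered.

```yaml
spec:
  ingress:
  - from:
    - podSelector:
```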

Case 3: a namespaceSelector under spec.ingress.from means that pods in namespaces with matching labels may access the pods from case 1.

```yaml
spec:
  ingress:
  - from:
    - namespaceSelector:
```

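Case 4: when one element of spec.ingress.from contains both a namespaceSelector and a podSelector, a pod may access the pods from case 1 only if two conditions hold: its namespace matches the namespaceSelector, and its own labels match the podSelector. The two selectors are ANDed.

```yaml
spec:
  ingress:
  - from:
    - namespaceSelector:
      podSelector:
```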
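In case 4, the indentation matters. A single extra `-` turns the AND into an OR. The sketch below contrasts the two forms. Only the ingress fragment is shown, and the labels are borrowed from example 2 for illustration.

```yaml
# Form A (AND, as in case 4): ONE peer holding both selectors.
# Allows only pods labeled environment: staging that run in a namespace
# labeled name: testing.
ingress:
- from:
  - namespaceSelector:
      matchLabels:
        name: testing
    podSelector:
      matchLabels:
        environment: staging
---
# Form B (OR): TWO peers; note the extra "-" before podSelector.
# Allows every pod in a namespace labeled name: testing, plus pods labeled
# environment: staging in the policy's own namespace.
ingress:
- from:
  - namespaceSelector:
      matchLabels:
        name: testing
  - podSelector:
      matchLabels:
        environment: staging
```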

3.2 Denying and allowing all ingress to a namespace

```
$ vim deny.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-all-ingress
  namespace: prod-a
spec:
  podSelector: {}
  ingress:          # no rules: nothing is allowed in
  policyTypes:
  - Ingress
```

```
$ vim allow.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-ingress
  namespace: prod-a
spec:
  podSelector: {}
  ingress:
  - {}
  policyTypes:
  - Ingress
```

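The only difference between the two is that allow.yaml has an extra `- {}` under spec.ingress. An empty rule matches every source, so all ingress is allowed. With no rules at all (deny.yaml), no ingress is allowed.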
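Between these two extremes, an empty podSelector under `from` admits traffic only from pods in the policy's own namespace. Here is a minimal sketch, not part of the original walkthrough, under the hypothetical name `allow-same-namespace`:

```yaml
# Hypothetical policy: every pod in prod-a accepts ingress from any pod in
# prod-a, but from nothing outside the namespace (a podSelector without a
# namespaceSelector is scoped to the policy's own namespace).
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace   # hypothetical name
  namespace: prod-a
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector: {}
```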

4 References

Kubernetes documentation: Concepts -> Services, Load Balancing, and Networking -> Network Policies