机器学习算法理论与实践——线性判别分析(LDA)

文章目录

- 一、基本思想

- 二、数学推导

-

- 1.二分类

- 2.多分类

- 三、sklearn包中的LinearDiscriminantAnalysis

-

- 1.参数解释

- 2.属性解释

- 3.方法解释

- 四、实例

-

- 1.数据来源

- 2.数据概况

- 3.代码实现

一、基本思想

线性判别分析(Linear Discriminant Analysis,简称 LDA)的思想:给定训练样例集,设法将样例投射到一条直线或一个超平面上,使得同类样例的投影点尽可能接近、异类样例的投影点尽可能远离;在对新样本进行分类时,将其投影到同样的这条直线或超平面上,再根据投影点的位置来确定新样本的类别。

二、数学推导

1.二分类

给定数据集 D = { ( x i , y i ) } i = 1 m , y i ∈ { 0 , 1 } D=\{(x_i,y_i)\}_ {i=1}^m, y_i\in\{0,1\} D={ (xi,yi)}i=1m,yi∈{ 0,1}。令 X i X_i Xi表示样例集合, μ i \mu_i μi表示均值向量( μ 1 \mu_1 μ1表示 x ∈ X 1 x\in X_1 x∈X1的 x x x的均值), ∑ i \sum_i ∑i表示协方差矩阵( ∑ 0 = ∑ x ∈ X 0 ( x − μ 0 ) ( x − μ 0 ) T \sum_0=\sum_{x\in X_0}{(x-\mu_0)(x-\mu_0)^T} ∑0=∑x∈X0(x−μ0)(x−μ0)T),此处 i ∈ { 0 , 1 } i\in\{0,1\} i∈{ 0,1}。则两类样本的中心在直线上的投影为 w T μ i w^T\mu_i wTμi;两类样本所有点投射到直线上的协方差为 w T ∑ i w w^T\sum_iw wT∑iw。

投射的协方差推导过程:

由 ∑ i = ∑ x ∈ X i ( x i − μ i ) ( x i − μ i ) T \sum_i=\sum_{x\in X_i}(x_i-\mu_i)(x_i-\mu_i)^T ∑i=∑x∈Xi(xi−μi)(xi−μi)T可得投射后的协方差 ∑ i ′ = ∑ x ∈ X i ( w T x i − w T μ i ) ( w T x i − w T μ i ) T \sum_i'=\sum_{x\in X_i}(w^Tx_i-w^T\mu_i)(w^Tx_i-w^T\mu_i)^T ∑i′=∑x∈Xi(wTxi−wTμi)(wTxi−wTμi)T,又

( w T x i − w T μ i ) ( w T x i − w T μ i ) T = w T ( x i − μ i ) [ w T ( x i − μ i ) ] T = w T ( x i − μ i ) ( x i − μ i ) T w \begin{array}{l} (w^Tx_i-w^T\mu_i)(w^Tx_i-w^T\mu_i)^T \\ \\ =w^T(x_i-\mu_i)[w^T(x_i-\mu_i)]^T \\ \\ =w^T(x_i-\mu_i)(x_i-\mu_i)^Tw \end{array} (wTxi−wTμi)(wTxi−wTμi)T=wT(xi−μi)[wT(xi−μi)]T=wT(xi−μi)(xi−μi)Tw

则可得结果 w T ∑ i w w^T\sum_iw wT∑iw。

目标是使 w T ∑ 0 w + w T ∑ 1 w w^T\sum_0w+w^T\sum_1w wT∑0w+wT∑1w尽可能小,使 ∥ w T μ 0 − w T μ 1 ∥ 2 2 \parallel w^T\mu_0-w^T\mu_1\parallel_2^2 ∥wTμ0−wTμ1∥22尽可能大,同时考虑两者,可得到欲最大化的目标

J = ∥ w T μ 0 − w T μ 1 ∥ 2 2 w T ∑ 0 w + w T ∑ 1 w = w T ( μ 0 − μ 1 ) ( μ 0 − μ 1 ) T w w T ( ∑ 0 + ∑ 1 ) w = w T S b w w T S w w \begin{array}{c} J=\dfrac{\parallel w^T\mu_0-w^T\mu_1\parallel_2^2}{w^T\sum_0w+w^T\sum_1w} \\ \\ =\dfrac{w^T(\mu_0-\mu_1)(\mu_0-\mu_1)^Tw}{w^T(\sum_0+\sum_1)w} \\ \\ =\dfrac{w^TS_bw}{w^TS_ww} \end{array} J=wT∑0w+wT∑1w∥wTμ0−wTμ1∥22=wT(∑0+∑1)wwT(μ0−μ1)(μ0−μ1)Tw=wTSwwwTSbw

这就是LDA欲最大化的目标,即 S b S_b Sb与 S w S_w Sw的"广义瑞利商"(generalized Rayleigh quotient)。其中"类内散度矩阵"(within-class scatter matrix)为 S w = ∑ 0 + ∑ 1 = ∑ x ∈ X 0 ( x − μ 0 ) ( x − μ 0 ) T + ∑ x ∈ X 1 ( x − μ 1 ) ( x − μ 1 ) T S_w=\sum_0+\sum_1=\sum_{x\in X_0}(x-\mu_0)(x-\mu_0)^T+\sum_{x\in X_1}(x-\mu_1)(x-\mu_1)^T Sw=∑0+∑1=∑x∈X0(x−μ0)(x−μ0)T+∑x∈X1(x−μ1)(x−μ1)T;“类间散度矩阵”(between-class scatter matrix)为 S b = ( μ 0 − μ 1 ) ( μ 0 − μ 1 ) T S_b=(\mu_0-\mu_1)(\mu_0-\mu_1)^T Sb=(μ0−μ1)(μ0−μ1)T。

S b S_b Sb和 S w S_w Sw是通过给定数据集计算出来,是确定的,所以 J J J的分子分母都是关于 w w w的二次项,若 w w w是一个解,则对任意常数 α \alpha α, α w \alpha w αw也是解。不失一般性,令 w T S w w = 1 w^TS_ww=1 wTSww=1,则最大化目标等价于

min w − w T S b w s . t . w T S w w = 1 \begin{array}{c} \min_w -w^TS_bw \\ s.t.\quad w^TS_ww=1 \end{array} minw−wTSbws.t.wTSww=1

根据拉格朗日乘子法,有

L ( w ) = − w T S b w + λ ( w T S w w − 1 ) L(w)=-w^TS_bw+\lambda(w^TS_ww-1) L(w)=−wTSbw+λ(wTSww−1)

令 ∂ L ∂ w = − ( S b + S b T ) w + λ ( S w + S w T ) w = − 2 S b w + 2 λ S w w = 0 \dfrac{\partial L}{\partial w}=-(S_b+S_b^T)w+\lambda(S_w+S_w^T)w=-2S_bw+2\lambda S_ww=0 ∂w∂L=−(Sb+SbT)w+λ(Sw+SwT)w=−2Sbw+2λSww=0有 S b w = λ S w w S_bw=\lambda S_ww Sbw=λSww。(从 S b S_b Sb和 S w S_w Sw的定义可知 S b = S b T S_b=S_b^T Sb=SbT)

由于 S b w S_bw Sbw的方向恒为 μ 0 − μ 1 \mu_0-\mu_1 μ0−μ1,不妨令 S b w = λ ( μ 0 − μ 1 ) S_bw=\lambda(\mu_0-\mu_1) Sbw=λ(μ0−μ1),可解得

w = S w − 1 ( μ 0 − μ 1 ) w=S_w^{-1}(\mu_0-\mu_1) w=Sw−1(μ0−μ1)

实践中通常是对 S w S_w Sw进行奇异值分解,即 S w = U ∑ V T , ∑ S_w=U\sum V^T,\sum Sw=U∑VT,∑是一个实对角矩阵,对角线上的元素是 S w S_w Sw的奇异值,则 S w − 1 = V ∑ − 1 U T S_w^{-1}=V\sum^{-1}U^T Sw−1=V∑−1UT。

2.多分类

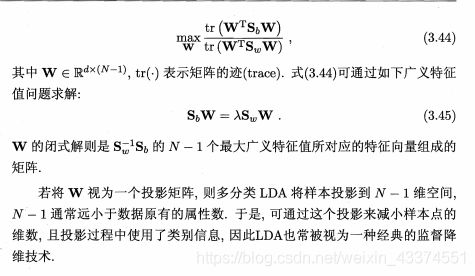

假定存在N个类,且第i类的样本数为 m i m_i mi。定义"全局散度矩阵"为 S t = S b + S w = ∑ i = 1 m ( x i − μ ) ( x i − μ ) T S_t=S_b+S_w=\sum_{i=1}^{m}{(x_i-\mu)(x_i-\mu)^T} St=Sb+Sw=∑i=1m(xi−μ)(xi−μ)T。"类内散度矩阵"为 S w = ∑ i = 1 N ∑ x ∈ X i ( x − μ i ) ( x − μ i ) T S_w=\sum_{i=1}^{N}\sum_{x\in X_i}{(x-\mu_i)(x-\mu_i)^T} Sw=∑i=1N∑x∈Xi(x−μi)(x−μi)T。"类间散度矩阵"为 S b = ∑ i = 1 N m i ( μ i − μ ) ( μ i − μ ) T S_b=\sum_{i=1}^{N}{m_i(\mu_i-\mu)(\mu_i-\mu)^T} Sb=∑i=1Nmi(μi−μ)(μi−μ)T,类似的

三、sklearn包中的LinearDiscriminantAnalysis

sklearn中关于实现LDA的类是LinearDiscriminantAnalysis,官方文档: https://scikit-learn.org/stable/modules/generated/sklearn.discriminant_analysis.LinearDiscriminantAnalysis.html#sklearn.discriminant_analysis.LinearDiscriminantAnalysis

下面是该类各参数、属性和方法的介绍,基本翻译自官方文档:

sklearn.discriminant_analysis.LinearDiscriminantAnalysis(solver=‘svd’, shrinkage=None, priors=None, n_components=None, store_covariance=False, tol=0.0001)

1.参数解释

- solver:字符串,可选参数。指定求解超平面矩阵的方法。可选方法有

(1) ‘svd’:奇异值分解(默认)。不需要计算协方差矩阵,因此对于具有大规模特征的数据,推荐使用该方法,该方法既可用于分类也可用于降维。

(2) ‘lsqr’:最小二乘,结合shrinkage参数。该方法仅可用于分类。

(3) ‘eigen’:特征值分解,结合shrinkage参数。特征值不多的时候推荐使用该方法,该方法既可用于分类也可用于降维。 - shrinkage:字符串或者浮点数,可选参数。正则化参数,增强LDA分类的泛化能力(仅做降维时可不考虑此参数)。

(1) None:不正则化(默认)。

(2) ‘auto’:算法自动决定是否正则化。

(3) [0,1]之间的浮点数:指定值。

shrinkage参数仅在solver参数选择’lsqr’和’eigen’时有用。 - priors:数组,可选参数。数组中的元素依次指定了每个类别的先验概率,默认为None,即每个类相等。降维时一般不需要关注这个参数。

- n_components:整数,可选参数。指定降维时降到的维度,该整数必须小于等于min(分类类别数-1,特征数),默认为None,即min(分类类别数-1,特征数)。

- store_covariance:布尔值,可选参数。额外计算每个类别的协方差矩阵,仅在solver参数选择’svd’时有效,默认为False不计算。

- tol:浮点数,可选参数。指定’svd’中秩估计的阈值。默认值为0.0001。

2.属性解释

- coef_:数组,shape (特征数,) or (分类数, 特征数)。权重向量。

- intercept_:数组,shape (特征数,) 。截距项。

- covariance_:数组,shape (特征数, 特征数) 。适用于所有类别的协方差矩阵。

- explained_variance_ratio_:数组,shape (n_components,) 。每一个维度解释的方差占比(原文Percentage of variance explained by each of the selected components)。

- means_:数组,shape (分类数, 特征数) 。类均值。

- priors_:数组,shape (分类数,) 。类先验概率(加起来等于1)。

- scalings_:数组,shape (rank秩, 分类数-1) 。每个高斯分布的方差σ(原文Scaling of the features in the space spanned by the class centroids)。

- xbar_:数组,shape (特征数,) 。整体均值。

- classes_:数组,shape (分类数,) 。去重的分类标签Unique class labels,即分哪几类。

3.方法解释

- decision_function(self, X):

预测置信度分数。置信度分数是样本到(分类)超平面的带符号的距离。

参数: X: shape(样本数量, 特征数)

返回值:数组,二分类——shape(样本数,),多分类——shape(样本数, 分类数) - fit(self, X, y):

训练模型 - fit_transform(self, X, y=None, **fit_params):

训练模型同时将X转换为新维度的标准化数据。

参数:X,y

返回值:数组,shape(样本数量, 新特征数) - transform(self, X):

X转换为标准化数据。

参数:X

返回值:数组,shape(样本数量, 维数) - get_params(self, deep=True):

以字典返回LDA模型的参数值,比如solver、priors等。参数deep默认为True,还会返回包含的子模型的参数值。 - predict(self, X):

根据模型的训练,返回预测值。

参数:X: shape(样本数量, 特征数)

返回值:数组,shape [样本数] - score(self, X, y, sample_weight=None):

根据给定的测试数据X和分类标签y返回预测正确的平均准确率。可以用于性能度量,返回模型的准确率,参数为x_test,y_test。

参数:X,y

返回值:浮点数 - predict_log_proba(self, X):

返回X中每一个样本预测为各个分类的对数概率

参数: X

返回值:数组,shape(样本数, 分类数) - predict_proba(self, X):

返回X中每一个样本预测为各个分类的概率

参数: X

返回值:数组,shape(样本数, 分类数)

四、实例

1.数据来源

本例数据来源于机器学习竞赛平台Kaggle,一个关于手机价格分类的数据。数据地址:https://www.kaggle.com/iabhishekofficial/mobile-price-classification

2.数据概况

数据一共2000个样例,20个字段。分类字段为price_range,一共分为了4类。数据集无缺失值,无错误值。字段及解释如下

由于从一般常识得知,机身厚度和机身重量对价格往往是没有直接影响的,故在建模时将这两个字段剔除。

3.代码实现

import pandas as pd

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn import model_selection

# 读取数据

data = pd.read_csv(r'C:\Users\Administrator\Desktop\train.csv')

# 剔除两个无关字段

data.drop(['m_dep', 'mobile_wt'], axis=1, inplace=True)

X = data[data.columns[:-1]]

Y = data[data.columns[-1]]

# 拆分为训练集和测试集

x_train, x_test, y_train, y_test = model_selection.train_test_split(X, Y, test_size=0.25, random_state=1234)

# LDA

lda = LinearDiscriminantAnalysis()

# 训练模型

lda.fit(x_train, y_train)

# 模型评估

print('模型准确率:\n', lda.score(x_test, y_test))

参考文献:

[1]周志华.《机器学习》.清华大学出版社,2016-1

[2]https://scikit-learn.org/stable/modules/generated/sklearn.discriminant_analysis.LinearDiscriminantAnalysis.html#sklearn.discriminant_analysis.LinearDiscriminantAnalysis