Day 8. HBase Study Notes 1

1. Review

HDFS architecture, the 11 steps of MapReduce, understanding InputFormat, the shuffle process, shuffle in practice

- [Interview question] How many times does Hadoop sort during the shuffle?

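Three times: when the map side spills to disk, when the spill files are merged, and when the reduce side merges its inputs.

- By use case, NoSQL databases fall into four categories: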

- Key-value: Redis, SSDB

Be familiar with Redis 2.x/3.x/4.x/5.x

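SSDB is disk-based and built on Google's LevelDB. Compared with Redis it can hold far more data; writes (set) are slower while reads (get) are about as fast, and it can save the overhead of routing requests across a cluster.

- Document: MongoDB, CouchDB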

- Column-oriented: HBase, Cassandra, Google Bigtable

- Graph: Neo4j

Note: the categories are not interchangeable. Each scenario calls for its own NoSQL choice (purpose-built databases).

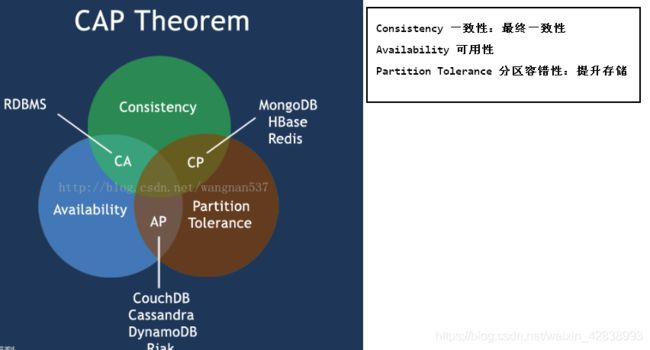

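- The CAP theorem in NoSQL: a distributed system cannot guarantee consistency (C), availability (A) and partition tolerance (P) all at the same time.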

2. Introduction

- What is HBase?

HBase is a distributed, column-oriented database modeled on Google's Bigtable.

Just as Bigtable builds on the distributed storage provided by the Google File System, HBase provides Bigtable-like capabilities on top of Hadoop.

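(HBase is a NoSQL database built on HDFS. It manages the data stored in HDFS efficiently, supports random reads and writes over massive datasets, and manages data at the row level.)

- How does HBase relate to HDFS?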

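First, a database lets you work with data at a fine granularity and perform CRUD on it; what we really mean by "database" here is a database service.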

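HBase is such a database service. HDFS is good at storage but offers no random row-level reads and writes: files can be appended to, not modified in place. HBase acts like a proxy in front of HDFS that lets users manipulate the data at a much finer granularity.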

P.S. MapReduce can only read existing data; it cannot update it in place.

- Features of HBase

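- Big: an HBase table is typically on the scale of hundreds of millions of rows × millions of columns, and each column can keep thousands of versions.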

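- Sparse: beyond the column families declared when the table is created, HBase has no fixed schema. A row can contain any number of columns, and columns a row does not have take no space (better disk utilization).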

- Untyped: HBase has no data types; every value is stored as a byte array.

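- Column-oriented: the biggest difference from conventional databases lies in how records are managed at the storage level. Most databases store data row by row, which leads to low I/O utilization; HBase stores data by column (grouped into column families), which greatly improves I/O utilization.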

- Row storage vs. column storage

- Row storage (the weaknesses of an RDBMS)

① No sparse storage: null values still take up space, so disk utilization is low.

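② A query on only some of the columns (a projection query) still loads whole rows, because the data is stored row by row; this makes I/O utilization low.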

Massive-scale storage has to care about both disk utilization and I/O utilization.

- Column storage

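Column family: columns with similar access patterns are grouped into one family. Such columns are "collinear": they tend to be read and written together. Under the hood, HBase stores and indexes each column family's data independently.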

Collinearity is also called co-linearity. In statistics, collinearity usually refers to multicollinearity.

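Multicollinearity means that the explanatory variables of a linear regression model are exactly or highly correlated with each other, which distorts the model's estimates or makes them hard to estimate accurately.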

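Typically, limitations of economic data lead to poorly designed models in which the explanatory variables of the design matrix are widely correlated. Perfect collinearity is rare; what usually occurs is collinearity to some degree, i.e. approximate collinearity.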
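To make the row-versus-column comparison concrete, here is a toy model in plain Java, not HBase code. It assumes a hypothetical four-column schema split into families cf1 and cf2, treats a row store as reserving one slot per declared column and loading whole rows, and treats a column-family store as keeping only the cells that exist, grouped by family.

```java
import java.util.List;
import java.util.Map;

/** Toy model (not HBase code): row layout vs. column-family layout. */
public class LayoutDemo {
    // Hypothetical schema: every column a row-oriented table reserves space for.
    static final List<String> SCHEMA = List.of("cf1:name", "cf1:age", "cf2:photo", "cf2:bio");

    // Sparse sample rows: a column absent from a map is "null" for that row.
    static List<Map<String, String>> sampleRows() {
        return List.of(
                Map.of("cf1:name", "zhangsan", "cf1:age", "18"),
                Map.of("cf1:name", "lisi", "cf2:bio", "hello"),
                Map.of("cf1:name", "wangwu"));
    }

    // Row store: one slot per schema column per row, nulls included.
    // A projection on cf1 still loads whole rows, so it touches every slot.
    static int slotsRowStore(List<Map<String, String>> rows) {
        return rows.size() * SCHEMA.size();
    }

    // Sparse store: only cells that actually exist take space.
    static int cellsStoredSparse(List<Map<String, String>> rows) {
        int n = 0;
        for (Map<String, String> row : rows) n += row.size();
        return n;
    }

    // Family store: a query on one family reads only that family's cells.
    static int cellsReadForFamily(List<Map<String, String>> rows, String family) {
        int n = 0;
        for (Map<String, String> row : rows)
            for (String column : row.keySet())
                if (column.startsWith(family + ":")) n++;
        return n;
    }

    public static void main(String[] args) {
        List<Map<String, String>> rows = sampleRows();
        System.out.println("row store slots:         " + slotsRowStore(rows));
        System.out.println("sparse cells stored:     " + cellsStoredSparse(rows));
        System.out.println("cells read for cf1 only: " + cellsReadForFamily(rows, "cf1"));
    }
}
```

With these three rows the row layout reserves 12 slots, the sparse layout stores 5 cells, and a query on cf1 reads 4 of them. Real storage engines add headers, compression and caching, so the numbers only show the direction of the effect.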

3. Setting Up HBase

- Make sure Hadoop is running properly, i.e. HDFS is healthy

- Install ZooKeeper (it coordinates the HBase services)

[root@CentOS ~]# tar -zxf zookeeper-3.4.6.tar.gz -C /usr/

[root@CentOS ~]# vi /usr/zookeeper-3.4.6/conf/zoo.cfg

tickTime=2000

dataDir=/root/zkdata

clientPort=2181

[root@CentOS ~]# mkdir /root/zkdata # create the ZooKeeper data directory

[root@CentOS ~]# cd /usr/zookeeper-3.4.6/

[root@CentOS zookeeper-3.4.6]# ./bin/zkServer.sh start zoo.cfg

JMX enabled by default

Using config: /usr/zookeeper-3.4.6/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@CentOS zookeeper-3.4.6]# start-dfs.sh

[root@CentOS zookeeper-3.4.6]# jps

2182 DataNode

1255 QuorumPeerMain

2346 SecondaryNameNode

2458 Jps

2093 NameNode

- Install HBase

[root@centos ~]# tar -zxf hbase-1.2.4-bin.tar.gz -C /usr/

[root@centos ~]# vi /usr/hbase-1.2.4/conf/hbase-site.xml # put the following properties inside the <configuration> element

<property>

<name>hbase.rootdir</name>

<value>hdfs://CentOS:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>CentOS</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

[root@centos ~]# vi /usr/hbase-1.2.4/conf/regionservers

CentOS # replace localhost with CentOS

Set the environment variables

[root@centos ~]# vi .bashrc

HBASE_MANAGES_ZK=false

HBASE_HOME=/usr/hbase-1.2.4

HADOOP_HOME=/usr/hadoop-2.6.0

JAVA_HOME=/usr/java/latest

CLASSPATH=.

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin

export JAVA_HOME

export CLASSPATH

export PATH

export HADOOP_HOME

export HBASE_HOME

export HBASE_MANAGES_ZK

[root@CentOS ~]# source .bashrc

Start HBase

[root@CentOS ~]# start-hbase.sh

starting master, logging to /usr/hbase-1.2.4/logs/hbase-root-master-CentOS.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

CentOS: starting regionserver, logging to /usr/hbase-1.2.4/logs/hbase-root-regionserver-CentOS.out

[root@CentOS ~]# jps

2834 Jps

2755 HRegionServer // serves the actual reads and writes of table data

2182 DataNode

1255 QuorumPeerMain

2633 HMaster // similar to the NameNode: manages table metadata and the RegionServers

2346 SecondaryNameNode

2093 NameNode

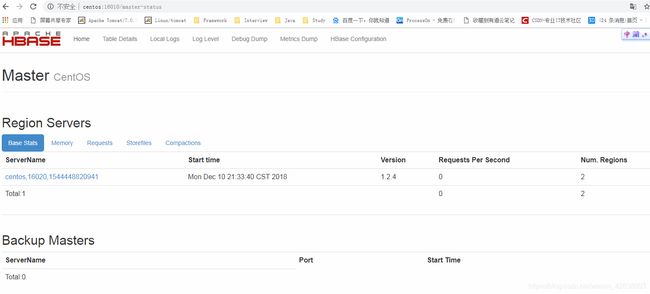

The HBase web UI is then available at http://centos:16010
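Eyeballing the jps listing works, but the check can also be scripted. A minimal sketch, assuming the single-node setup above where all six daemons run on one machine; `JpsCheck` and `missing` are hypothetical names, and the input is jps-style text (`<pid> <main class>` per line) passed in as a string rather than obtained by running jps.

```java
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical helper: which expected daemons are missing from jps-style output? */
public class JpsCheck {
    // Daemons the single-node Hadoop + ZooKeeper + HBase setup should be running
    static final List<String> EXPECTED = List.of(
            "NameNode", "DataNode", "SecondaryNameNode", "QuorumPeerMain", "HMaster", "HRegionServer");

    static Set<String> missing(String jpsOutput) {
        Set<String> running = new HashSet<>();
        for (String line : jpsOutput.split("\\R")) {
            // each jps line is "<pid> <main class>"; anything after the class name is ignored
            String[] parts = line.trim().split("\\s+");
            if (parts.length >= 2) running.add(parts[1]);
        }
        Set<String> result = new LinkedHashSet<>(EXPECTED); // keep the expected order
        result.removeAll(running);
        return result;
    }

    public static void main(String[] args) {
        String sample = String.join("\n", "2755 HRegionServer", "2182 DataNode", "1255 QuorumPeerMain",
                "2633 HMaster", "2346 SecondaryNameNode", "2093 NameNode", "2834 Jps");
        System.out.println("missing: " + missing(sample)); // missing: []
    }
}
```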

4. HBase Shell Commands

- Connect to HBase

[root@centos ~]# hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help' for list of supported commands.

Type "exit" to leave the HBase Shell

Version 1.2.4, rUnknown, Wed Feb 15 18:58:00 CST 2017

hbase(main):001:0>

- Show cluster status

hbase(main):001:0> status

1 active master, 0 backup masters, 1 servers, 0 dead, 2.0000 average load

- Show the HBase version

hbase(main):006:0> version

1.2.4, rUnknown, Wed Feb 15 18:58:00 CST 2017

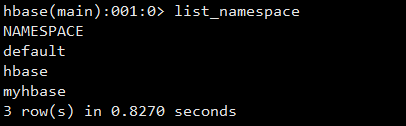

Namespace operations (a namespace is HBase's equivalent of a database)

- List namespaces

hbase(main):003:0> list_namespace

NAMESPACE

default # used when no namespace is specified

hbase # system namespace

2 row(s) in 0.2710 seconds

- Create a namespace

hbase(main):006:0> create_namespace 'play',{'author'=>'zs'} # "=>" assigns a value to a key

0 row(s) in 0.3260 seconds

- List the tables in a namespace

hbase(main):004:0> list_namespace_tables 'hbase'

TABLE

meta

namespace

- Describe a namespace

hbase(main):008:0> describe_namespace 'play'

DESCRIPTION

{NAME => 'play', author => 'zs'}

1 row(s) in 0.0550 seconds

- Alter a namespace

hbase(main):010:0> alter_namespace 'play',{METHOD => 'set','author'=> 'wangwu'}

0 row(s) in 0.2520 seconds

hbase(main):011:0> describe_namespace 'play'

DESCRIPTION

{NAME => 'play', author => 'wangwu'}

1 row(s) in 0.0030 seconds

hbase(main):012:0> alter_namespace 'play',{METHOD => 'unset',NAME => 'author'}

0 row(s) in 0.0550 seconds

hbase(main):013:0> describe_namespace 'play'

DESCRIPTION

{NAME => 'play'}

1 row(s) in 0.0080 seconds

- Drop a namespace

hbase(main):020:0> drop_namespace 'play'

0 row(s) in 0.0730 seconds

HBase does not allow dropping a namespace that still contains tables.

Table operations (DDL)

- Create a table

hbase(main):023:0> create 't_user','cf1','cf2' # there are two ways to create a table; this is the short form (default namespace)

0 row(s) in 1.2880 seconds

=> Hbase::Table - t_user

hbase(main):024:0> create 'play:t_user',{NAME=>'cf1',VERSIONS=>3},{NAME=>'cf2',TTL=>3600} # full form

# VERSIONS: how many versions of each cell are kept (the history of changes); TTL: time to live in seconds, after which cells expire

0 row(s) in 1.2610 seconds

=> Hbase::Table - play:t_user
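A toy in-memory model (not HBase's implementation) of what VERSIONS and TTL mean for one column: a read sees at most VERSIONS of the newest cells, and any cell older than TTL seconds counts as expired. HBase itself drops surplus and expired cells lazily, during flushes and compactions; this sketch only filters at read time. The class name and timestamps are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy model (not HBase's implementation) of one column under VERSIONS and TTL. */
public class VersionedColumn {
    private final int maxVersions;
    private final long ttlMillis;
    // timestamp (ms) -> value, iterated newest first
    private final TreeMap<Long, String> versions = new TreeMap<>(Comparator.reverseOrder());

    public VersionedColumn(int maxVersions, long ttlSeconds) {
        this.maxVersions = maxVersions;
        this.ttlMillis = ttlSeconds * 1000L; // TTL is configured in seconds, timestamps are in ms
    }

    public void put(long timestamp, String value) {
        versions.put(timestamp, value);
    }

    /** What a read at time `now` sees: unexpired cells only, newest first, at most maxVersions. */
    public List<String> visible(long now) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<Long, String> e : versions.entrySet()) {
            if (now - e.getKey() > ttlMillis) continue; // older than TTL: expired
            if (out.size() == maxVersions) break;       // versions beyond VERSIONS are hidden
            out.add(e.getValue());
        }
        return out;
    }

    public static void main(String[] args) {
        VersionedColumn c = new VersionedColumn(3, 3600); // VERSIONS=>3, TTL=>3600
        for (int i = 0; i < 5; i++) c.put(1_000_000L + i * 1000L, "v" + i);
        System.out.println(c.visible(1_005_000L));              // [v4, v3, v2]
        System.out.println(c.visible(1_000_000L + 3_602_500L)); // [v4, v3]
    }
}
```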

- Describe a table

hbase(main):026:0> describe 'play:t_user'

Table play:t_user is ENABLED

play:t_user

COLUMN FAMILIES DESCRIPTION # BLOOMFILTER: the Bloom filter type HBase keeps for the family's data ('ROW' = on row keys), used to skip store files that cannot contain the requested row

{NAME => 'cf1', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '3', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

{NAME => 'cf2', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => '3600 SECONDS (1 HOUR)', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

2 row(s) in 0.0240 seconds
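BLOOMFILTER => 'ROW' means HBase keeps a Bloom filter over row keys for each store file of the family ('ROWCOL' covers row plus column), so a get can skip files that definitely do not contain the row. The idea fits in a few lines; the sketch below is a generic Bloom filter with double hashing, not HBase's implementation, and its size and hash count are arbitrary.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.BitSet;

/** Minimal Bloom filter sketch (not HBase's implementation). */
public class TinyBloom {
    private final BitSet bits;
    private final int size;      // number of bits
    private final int hashCount; // number of probe positions per key

    public TinyBloom(int size, int hashCount) {
        this.size = size;
        this.hashCount = hashCount;
        this.bits = new BitSet(size);
    }

    // 32-bit FNV-1a, used as the second base hash
    private static int fnv1a(byte[] key) {
        int h = 0x811C9DC5;
        for (byte b : key) {
            h ^= (b & 0xff);
            h *= 0x01000193;
        }
        return h;
    }

    // Double hashing: probe i is (h1 + i * h2) mod size
    private int probe(byte[] key, int i) {
        int h1 = Arrays.hashCode(key);
        int h2 = fnv1a(key);
        return Math.floorMod(h1 + i * h2, size);
    }

    public void add(String rowKey) {
        byte[] key = rowKey.getBytes(StandardCharsets.UTF_8);
        for (int i = 0; i < hashCount; i++) bits.set(probe(key, i));
    }

    /** false = definitely absent (the file can be skipped); true = maybe present. */
    public boolean mightContain(String rowKey) {
        byte[] key = rowKey.getBytes(StandardCharsets.UTF_8);
        for (int i = 0; i < hashCount; i++) {
            if (!bits.get(probe(key, i))) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        TinyBloom filter = new TinyBloom(1024, 3);
        filter.add("1");
        filter.add("2");
        System.out.println(filter.mightContain("1")); // true: added keys are never missed
        System.out.println(filter.mightContain("3")); // false means definitely absent; true would be a false positive
    }
}
```

A `false` answer is always correct; a `true` answer can be a false positive, which only costs an unnecessary file read.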

- Check whether a table exists

hbase(main):030:0> exists 't_user'

Table t_user does exist

0 row(s) in 0.0250 seconds

- enable/is_enabled/enable_all (disable, disable_all and is_disabled work the same way)

hbase(main):036:0> enable 't_user'

0 row(s) in 0.0220 seconds

hbase(main):037:0> is_enabled 't_user'

true

0 row(s) in 0.0090 seconds

hbase(main):035:0> enable_all 't_.*'

t_user

Enable the above 1 tables (y/n)?

y

1 tables successfully enabled

- Drop a table (disable it first, then drop it)

hbase(main):038:0> disable 't_user'

0 row(s) in 2.2930 seconds

hbase(main):039:0> drop 't_user'

0 row(s) in 1.2670 seconds

- List all user tables (tables in the system namespace hbase are not listed)

hbase(main):042:0> list 'play:.*'

TABLE

play:t_user

1 row(s) in 0.0050 seconds

=> ["play:t_user"]

hbase(main):043:0> list

TABLE

play:t_user

1 row(s) in 0.0050 seconds

- Get a reference to a table

hbase(main):002:0> t=get_table 'play:t_user'

0 row(s) in 0.0440 seconds

- Alter table settings

hbase(main):008:0> alter 'play:t_user',{NAME => 'cf2', TTL => 60}

Updating all regions with the new schema...

1/1 regions updated.

Done.

0 row(s) in 2.7000 seconds

Table DML operations

- put (insert/update)

hbase(main):010:0> put 'play:t_user',1,'cf1:name','zhangsan'

0 row(s) in 0.2060 seconds

hbase(main):011:0> t = get_table 'play:t_user'

0 row(s) in 0.0010 seconds

hbase(main):012:0> t.put 1,'cf1:age','18'

0 row(s) in 0.0500 seconds

- get (read a row or a single column)

hbase(main):017:0> get 'play:t_user',1

COLUMN CELL

cf1:age timestamp=1536996547967, value=21

cf1:name timestamp=1536996337398, value=zhangsan

2 row(s) in 0.0680 seconds

hbase(main):019:0> get 'play:t_user',1,{COLUMN =>'cf1', VERSIONS=>10}

COLUMN CELL

cf1:age timestamp=1536996547967, value=21

cf1:age timestamp=1536996542980, value=20

cf1:age timestamp=1536996375890, value=18

cf1:name timestamp=1536996337398, value=zhangsan

4 row(s) in 0.0440 seconds

hbase(main):020:0> get 'play:t_user',1,{COLUMN =>'cf1:age', VERSIONS=>10}

COLUMN CELL

cf1:age timestamp=1536996547967, value=21

cf1:age timestamp=1536996542980, value=20

cf1:age timestamp=1536996375890, value=18

3 row(s) in 0.0760 seconds

hbase(main):021:0> get 'play:t_user',1,{COLUMN =>'cf1:age', TIMESTAMP => 1536996542980}

COLUMN CELL

cf1:age timestamp=1536996542980, value=20

1 row(s) in 0.0260 seconds

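hbase(main):025:0> get 'play:t_user',1,{TIMERANGE => [1536996375890,1536996547967]} # [start, end): the end timestamp is exclusive, and by default only the newest version in the range is returned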

COLUMN CELL

cf1:age timestamp=1536996542980, value=20

1 row(s) in 0.0480 seconds

hbase(main):026:0> get 'play:t_user',1,{TIMERANGE => [1536996375890,1536996547967],VERSIONS=>10}

COLUMN CELL

cf1:age timestamp=1536996542980, value=20

cf1:age timestamp=1536996375890, value=18

2 row(s) in 0.0160 seconds
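The two TIMERANGE queries above can be reproduced with a small model of the three `cf1:age` versions: keep timestamps with start <= ts < end, newest first, up to VERSIONS of them. This is a sketch of the semantics visible in the output, not HBase code.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Toy model of get with TIMERANGE => [start, end) and VERSIONS => n on one column. */
public class TimeRangeGet {
    // the cf1:age versions from the shell session, newest first
    static NavigableMap<Long, String> ageVersions() {
        NavigableMap<Long, String> m = new TreeMap<>(Comparator.reverseOrder());
        m.put(1536996547967L, "21");
        m.put(1536996542980L, "20");
        m.put(1536996375890L, "18");
        return m;
    }

    /** Newest-first values with start <= ts < end, at most maxVersions of them. */
    static List<String> get(NavigableMap<Long, String> versions, long start, long end, int maxVersions) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<Long, String> e : versions.entrySet()) {
            long ts = e.getKey();
            if (ts >= start && ts < end && out.size() < maxVersions) out.add(e.getValue());
        }
        return out;
    }

    public static void main(String[] args) {
        NavigableMap<Long, String> age = ageVersions();
        System.out.println(get(age, 1536996375890L, 1536996547967L, 1));  // [20]
        System.out.println(get(age, 1536996375890L, 1536996547967L, 10)); // [20, 18]
    }
}
```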

- scan (unlike get, which needs at least a row key, scan only needs the table name)

hbase(main):004:0> scan 'play:t_user'

ROW COLUMN+CELL

1 column=cf1:age, timestamp=1536996547967, value=21

1 column=cf1:height, timestamp=1536997284682, value=170

1 column=cf1:name, timestamp=1536996337398, value=zhangsan

1 column=cf1:salary, timestamp=1536997158586, value=15000

1 column=cf1:weight, timestamp=1536997311001, value=\x00\x00\x00\x00\x00\x00\x00\x05

2 column=cf1:age, timestamp=1536997566506, value=18

2 column=cf1:name, timestamp=1536997556491, value=lisi

2 row(s) in 0.0470 seconds

hbase(main):009:0> scan 'play:t_user', {STARTROW => '1',LIMIT=>1}

ROW COLUMN+CELL

1 column=cf1:age, timestamp=1536996547967, value=21

1 column=cf1:height, timestamp=1536997284682, value=170

1 column=cf1:name, timestamp=1536996337398, value=zhangsan

1 column=cf1:salary, timestamp=1536997158586, value=15000

1 column=cf1:weight, timestamp=1536997311001, value=\x00\x00\x00\x00\x00\x00\x00\x05

1 row(s) in 0.0280 seconds

hbase(main):011:0> scan 'play:t_user', {COLUMNS=>'cf1:age',TIMESTAMP=>1536996542980}

ROW COLUMN+CELL

1 column=cf1:age, timestamp=1536996542980, value=20

1 row(s) in 0.0330 seconds

- delete/deleteall

hbase(main):013:0> scan 'play:t_user', {COLUMNS=>'cf1:age',VERSIONS=>3}

ROW COLUMN+CELL

1 column=cf1:age, timestamp=1536996547967, value=21

1 column=cf1:age, timestamp=1536996542980, value=20

1 column=cf1:age, timestamp=1536996375890, value=18

2 column=cf1:age, timestamp=1536997566506, value=18

2 row(s) in 0.0150 seconds

hbase(main):014:0> delete 'play:t_user',1,'cf1:age',1536996542980 # deletes the version with this timestamp and all older versions

0 row(s) in 0.0920 seconds

hbase(main):015:0> scan 'play:t_user', {COLUMNS=>'cf1:age',VERSIONS=>3}

ROW COLUMN+CELL

1 column=cf1:age, timestamp=1536996547967, value=21

2 column=cf1:age, timestamp=1536997566506, value=18

2 row(s) in 0.0140 seconds

hbase(main):016:0> delete 'play:t_user',1,'cf1:age'

0 row(s) in 0.0170 seconds

hbase(main):017:0> scan 'play:t_user', {COLUMNS=>'cf1:age',VERSIONS=>3}

ROW COLUMN+CELL

2 column=cf1:age, timestamp=1536997566506, value=18

1 row(s) in 0.0170 seconds

hbase(main):019:0> deleteall 'play:t_user',1 # deletes the whole row: all columns, all versions

0 row(s) in 0.0200 seconds

hbase(main):020:0> get 'play:t_user',1

COLUMN CELL

0 row(s) in 0.0200 seconds

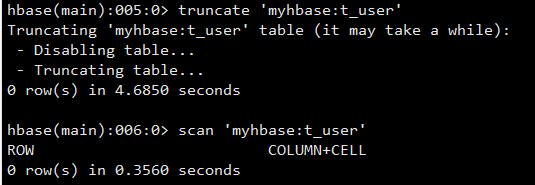
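Under the hood a delete does not erase data immediately: HBase writes a delete marker (tombstone) that masks the matching versions on reads, and the masked cells are physically removed during a major compaction. A toy model of the `delete 'play:t_user',1,'cf1:age',1536996542980` step above, using the same timestamps; the class is hypothetical and is not HBase's implementation.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy model of a column delete marker (tombstone); not HBase's implementation. */
public class TombstoneColumn {
    private final TreeMap<Long, String> versions = new TreeMap<>(Comparator.reverseOrder());
    private long deletedUpTo = Long.MIN_VALUE; // no marker yet

    public void put(long ts, String value) {
        versions.put(ts, value);
    }

    // like `delete 'table', row, 'cf:col', ts`: mask every version with timestamp <= ts
    public void delete(long ts) {
        deletedUpTo = Math.max(deletedUpTo, ts);
    }

    // read path: masked versions are skipped but still stored
    public List<String> read() {
        List<String> out = new ArrayList<>();
        for (Map.Entry<Long, String> e : versions.entrySet()) {
            if (e.getKey() > deletedUpTo) out.add(e.getValue());
        }
        return out;
    }

    public int storedVersions() {
        return versions.size();
    }

    // major compaction: physically drop masked versions, then drop the marker itself
    public void compact() {
        versions.keySet().removeIf(ts -> ts <= deletedUpTo);
        deletedUpTo = Long.MIN_VALUE;
    }

    public static void main(String[] args) {
        TombstoneColumn age = new TombstoneColumn();
        age.put(1536996547967L, "21");
        age.put(1536996542980L, "20");
        age.put(1536996375890L, "18");
        age.delete(1536996542980L);
        System.out.println(age.read() + " stored=" + age.storedVersions()); // [21] stored=3
        age.compact();
        System.out.println(age.read() + " stored=" + age.storedVersions()); // [21] stored=1
    }
}
```

One consequence of the marker model: a later put whose timestamp is at or before the marker stays hidden too, until a major compaction removes the marker.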

- truncate (the fastest way to delete all data in a table while keeping its schema)

hbase(main):022:0> truncate 'play:t_user'

Truncating 'play:t_user' table (it may take a while):

- Disabling table...

- Truncating table...

0 row(s) in 4.0040 seconds

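Summary: HBase only supports simple lookups by row key (or row key plus column) and simple counting; it does not support complex queries or statistical aggregation. What it provides is random reads and writes over massive datasets.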

5. HBase Java API (DDL)

- Add the Maven dependencies

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.2.4</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-common</artifactId>

<version>1.2.4</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-protocol</artifactId>

<version>1.2.4</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>1.2.4</version>

</dependency>

- Create the Connection and Admin objects

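import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*; //Cell, CellScanner, CellUtil, HBaseConfiguration, HTableDescriptor, HColumnDescriptor, NamespaceDescriptor, TableName

import org.apache.hadoop.hbase.client.*; //Admin, Connection, ConnectionFactory, Table, BufferedMutator, Put, Get, Scan, Result, ResultScanner

import org.apache.hadoop.hbase.filter.*; //Filter, FilterList, PrefixFilter, RowFilter, CompareFilter, SubstringComparator

import org.apache.hadoop.hbase.regionserver.BloomType;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.After;

import org.junit.Before;

import org.junit.Test;

import java.io.IOException;

import java.util.Random;

/**

* Created by Turing on 2018/12/10 19:02

* The @Test methods in the rest of this section go inside this class.

*/

public class TestHBaseDemo {

private Connection conn;//used for DML through Table / BufferedMutator

private Admin admin;//used for DDL

@Before

public void before() throws IOException {

//create the Connection

Configuration conf= HBaseConfiguration.create();

//the client only needs the ZooKeeper quorum, because HMaster and the HRegionServers register themselves in ZooKeeper

conf.set("hbase.zookeeper.quorum","CentOS");

conn= ConnectionFactory.createConnection(conf);

admin=conn.getAdmin();

}

@After

public void after() throws IOException {

admin.close();

conn.close();//close the connection last

}

}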

- Create a namespace

/**

* Create a namespace

*/

@Test

public void testCreateNamespace() throws IOException {

NamespaceDescriptor nd = NamespaceDescriptor.create("myhbase").addConfiguration("author", "play").build();

admin.createNamespace(nd);

}

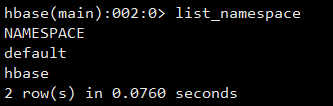

- Drop a namespace

/**

* Drop a namespace (its tables must be dropped first)

*/

@Test

public void testDropNamespace() throws IOException {

admin.deleteNamespace("myhbase");

}

- List the tables in a namespace

@Test

public void testListTables() throws IOException {

TableName[] tableNames = admin.listTableNames("default:t_.*");

for (TableName tableName : tableNames) {

System.out.println(tableName);

}

}

@Test

public void testNamespaceTables() throws IOException {

TableName[] tbs = admin.listTableNamesByNamespace("default");

for (TableName tb : tbs) {

System.out.println(tb.getNameAsString());

}

}

- Create a table

/**

* Create a table

*/

@Test

public void testCreateTable() throws IOException {

//shell equivalent: create 'myhbase:t_user',{NAME=>'cf1',VERSIONS=>3},{NAME=>'cf2',TTL=>120}

TableName tname=TableName.valueOf("myhbase:t_user");

//create the table descriptor

HTableDescriptor table=new HTableDescriptor(tname);

//configure the column families

HColumnDescriptor cf1 = new HColumnDescriptor("cf1");

cf1.setMaxVersions(3);

HColumnDescriptor cf2 = new HColumnDescriptor("cf2");

cf2.setTimeToLive(120);

cf2.setInMemory(true);

cf2.setBloomFilterType(BloomType.ROWCOL);//Bloom filter on row + column; the default is BloomType.ROW (row key only)

//add the column families to the table

table.addFamily(cf1);

table.addFamily(cf2);

admin.createTable(table);

}

6. HBase Java API (DML)

- Insert (put)

/**

* put: insert

* via conn.getTable

*/

@Test

public void testPut01() throws IOException {

Table table = conn.getTable(TableName.valueOf("myhbase:t_user"));

String[] company={"www.baidu.com","www.alibaba.com","www.qq.com"};

for(int i=0;i<10;i++){

String com=company[new Random().nextInt(3)];

String rowKey=com+":";

rowKey+="00"+i;

Put put=new Put(rowKey.getBytes());

put.addColumn("cf1".getBytes(),"name".getBytes(),("user"+i).getBytes());

put.addColumn("cf1".getBytes(),"age".getBytes(), Bytes.toBytes(i));

put.addColumn("cf1".getBytes(),"salary".getBytes(),Bytes.toBytes(6000.0+1000*i));

put.addColumn("cf1".getBytes(),"company".getBytes(),com.getBytes());

table.put(put);

}

table.close();

}
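HBase keeps rows sorted by row key, comparing the key bytes as unsigned values from left to right (as `Bytes.compareTo` does). The `"00"+i` keys above have three digits only while i < 10; at i = 10 the key becomes `...:0010`, which sorts before `...:009`. A sketch of the comparison and of a fixed-width key; `rowKey` is a hypothetical helper, not part of the original code.

```java
import java.nio.charset.StandardCharsets;

/** Sketch: rows are ordered by comparing row-key bytes as unsigned values, lexicographically. */
public class RowKeyOrder {
    // unsigned lexicographic byte comparison, the same idea as HBase's Bytes.compareTo
    static int compare(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int diff = (a[i] & 0xff) - (b[i] & 0xff);
            if (diff != 0) return diff;
        }
        return a.length - b.length; // a shorter prefix sorts first
    }

    static int compare(String a, String b) {
        return compare(a.getBytes(StandardCharsets.UTF_8), b.getBytes(StandardCharsets.UTF_8));
    }

    // hypothetical helper: zero-pad the sequence number to a fixed width of 3 digits
    static String rowKey(String company, int seq) {
        return String.format("%s:%03d", company, seq);
    }

    public static void main(String[] args) {
        System.out.println(compare("www.qq.com:0010", "www.qq.com:009") < 0); // true: "00"+10 sorts before "00"+9
        System.out.println(rowKey("www.qq.com", 10));                            // www.qq.com:010
        System.out.println(compare(rowKey("www.qq.com", 10), rowKey("www.qq.com", 9)) > 0); // true
    }
}
```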

- Batch insert

/**

* put: insert

* via conn.getBufferedMutator

* batch processing

*/

@Test

public void testPut02() throws IOException {

BufferedMutator mutator = conn.getBufferedMutator(TableName.valueOf("myhbase:t_user"));

String[] company={"www.baidu.com","www.alibaba.com","www.qq.com"};

for(int i=0;i<10;i++){

String com=company[new Random().nextInt(3)];

String rowKey=com+":";

rowKey+="00"+i;

Put put=new Put(rowKey.getBytes());

put.addColumn("cf1".getBytes(),"name".getBytes(),("user"+i).getBytes());

put.addColumn("cf1".getBytes(),"age".getBytes(), Bytes.toBytes(i));

put.addColumn("cf1".getBytes(),"salary".getBytes(),Bytes.toBytes(6000.0+1000*i));

put.addColumn("cf1".getBytes(),"company".getBytes(),com.getBytes());

mutator.mutate(put);//buffered on the client, not sent yet

if((i+1)%5==0){//send the buffered puts every 5 rows; close() flushes whatever is left

mutator.flush();

}

}

mutator.close();

}
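`BufferedMutator` collects mutations on the client and sends them in batches: when its write buffer (sized in bytes by `hbase.client.write.buffer`) fills up, and on `flush()` or `close()`. A toy version of that idea, counting mutations instead of bytes and using a callback in place of the RPC; the class and its parameters are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Toy client-side write buffer (not HBase's BufferedMutator). */
public class ToyWriteBuffer<T> implements AutoCloseable {
    private final int maxBuffered;          // stands in for the byte-sized write buffer
    private final Consumer<List<T>> sender; // stands in for the batch RPC to the RegionServers
    private final List<T> buffer = new ArrayList<>();

    public ToyWriteBuffer(int maxBuffered, Consumer<List<T>> sender) {
        this.maxBuffered = maxBuffered;
        this.sender = sender;
    }

    public void mutate(T mutation) {
        buffer.add(mutation);
        if (buffer.size() >= maxBuffered) flush(); // buffer full: send automatically
    }

    public void flush() {
        if (buffer.isEmpty()) return;
        sender.accept(new ArrayList<>(buffer)); // send a copy, then start a new batch
        buffer.clear();
    }

    @Override
    public void close() {
        flush(); // whatever is still buffered is sent on close
    }

    public static void main(String[] args) {
        List<Integer> batchSizes = new ArrayList<>();
        try (ToyWriteBuffer<String> writer = new ToyWriteBuffer<>(4, batch -> batchSizes.add(batch.size()))) {
            for (int i = 0; i < 10; i++) writer.mutate("row" + i);
        }
        System.out.println(batchSizes); // [4, 4, 2]
    }
}
```

Fewer, larger batches mean fewer round trips to the RegionServers; the trade-off is that buffered puts are lost if the client dies before they are flushed.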

- Update

/**

* Update: a put to an existing row and column writes a new version of that cell

*/

@Test

public void testUpdate() throws IOException {

Table table = conn.getTable(TableName.valueOf("myhbase:t_user"));

Put update=new Put("www.baidu.com:009".getBytes());

update.addColumn("cf1".getBytes(),"name".getBytes(),"zhangsan".getBytes());

table.put(update);

table.close();

}

- Get one row

/**

* Get one row

* Limitation: returns only the latest version of each column

*/

@Test

public void testGet01() throws IOException {

Table table = conn.getTable(TableName.valueOf("myhbase:t_user"));

Get get=new Get("www.baidu.com:009".getBytes());

//a Result holds one row, made up of n cells

Result result = table.get(get);

String row=Bytes.toString(result.getRow());

String name=Bytes.toString(result.getValue("cf1".getBytes(),"name".getBytes()));

Integer age=Bytes.toInt(result.getValue("cf1".getBytes(),"age".getBytes()));

Double salary=Bytes.toDouble(result.getValue("cf1".getBytes(),"salary".getBytes()));

String company=Bytes.toString(result.getValue("cf1".getBytes(),"company".getBytes()));

System.out.println(row+"\t"+name+"\t"+age+"\t"+salary+"\t"+company);

table.close();

//output: www.baidu.com:009 zhangsan 9 15000.0 www.baidu.com

}

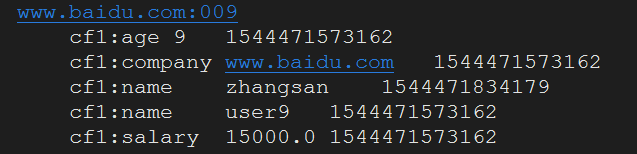
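HBase stores only bytes, so the reader must decode each column with the same type the writer used: `age` was written with `Bytes.toBytes(int)` and `salary` with `Bytes.toBytes(double)`. Those methods use fixed-width big-endian encodings, which plain `java.nio.ByteBuffer` reproduces, so this sketch needs no HBase jars. The 8-byte value `\x00\x00\x00\x00\x00\x00\x00\x05` in the earlier `cf1:weight` scan output matches a long 5 in this encoding.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Plain-JDK sketch of the big-endian encodings used by HBase's Bytes.toBytes(int/long/double). */
public class ByteEncoding {
    static byte[] encodeInt(int v)       { return ByteBuffer.allocate(4).putInt(v).array(); }
    static byte[] encodeLong(long v)     { return ByteBuffer.allocate(8).putLong(v).array(); }
    static byte[] encodeDouble(double v) { return ByteBuffer.allocate(8).putDouble(v).array(); }

    static int decodeInt(byte[] b)       { return ByteBuffer.wrap(b).getInt(); }
    static double decodeDouble(byte[] b) { return ByteBuffer.wrap(b).getDouble(); }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(encodeInt(9)));       // [0, 0, 0, 9]
        System.out.println(Arrays.toString(encodeLong(5L)));     // [0, 0, 0, 0, 0, 0, 0, 5]
        System.out.println(decodeDouble(encodeDouble(15000.0))); // 15000.0
        // decoding with the wrong type gives garbage rather than an error
        System.out.println(new String(encodeInt(9), StandardCharsets.UTF_8).equals("9")); // false
    }
}
```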

- Get one row, including past versions

/**

* Get one row

* Can also return older versions (up to the number passed to setMaxVersions)

*/

@Test

public void testGet02() throws IOException {

Table table = conn.getTable(TableName.valueOf("myhbase:t_user"));

Get get=new Get("www.baidu.com:009".getBytes());

get.setMaxVersions(3);

Result result = table.get(get);

String row=Bytes.toString(result.getRow());//get the row key

CellScanner cellScanner = result.cellScanner();

System.out.println(row);

while(cellScanner.advance()){

Cell cell = cellScanner.current();//the current cell

String column=Bytes.toString(CellUtil.cloneFamily(cell))+":"+Bytes.toString(CellUtil.cloneQualifier(cell));

byte[] bytes = CellUtil.cloneValue(cell);

Object value=null;

if(column.contains("name")||column.contains("company")){

value=Bytes.toString(bytes);

}else if(column.contains("age")){

value=Bytes.toInt(bytes);

}else if(column.contains("salary")){

value=Bytes.toDouble(bytes);

}

System.out.println("\t"+column+"\t"+value+"\t"+cell.getTimestamp());

}

table.close();

}

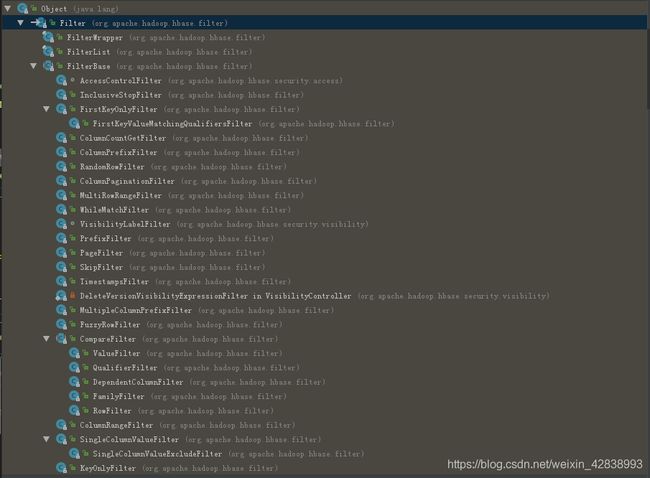
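testGet02 walks the cells of a Result in HBase's cell order: by row, then family, then qualifier in ascending byte order, and for the same column the newest timestamp first. A plain-Java comparator with the same ordering; the cell class and timestamps are hypothetical, and `String` comparison matches byte order only for ASCII names like these.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Toy model of HBase's cell ordering (not HBase code). */
public class CellOrder {
    static final class Cell {
        final String row, family, qualifier;
        final long ts;

        Cell(String row, String family, String qualifier, long ts) {
            this.row = row;
            this.family = family;
            this.qualifier = qualifier;
            this.ts = ts;
        }

        @Override
        public String toString() {
            return family + ":" + qualifier + "@" + ts;
        }
    }

    // row, family, qualifier ascending; then timestamp descending (newest version first)
    static final Comparator<Cell> ORDER = Comparator
            .comparing((Cell c) -> c.row)
            .thenComparing(c -> c.family)
            .thenComparing(c -> c.qualifier)
            .thenComparing(Comparator.comparingLong((Cell c) -> c.ts).reversed());

    public static void main(String[] args) {
        String row = "www.baidu.com:009";
        // hypothetical timestamps: name was written at 100 and updated at 200
        List<Cell> cells = new ArrayList<>(List.of(
                new Cell(row, "cf1", "salary", 100),
                new Cell(row, "cf1", "name", 100),
                new Cell(row, "cf1", "age", 100),
                new Cell(row, "cf1", "name", 200),
                new Cell(row, "cf1", "company", 100)));
        cells.sort(ORDER);
        System.out.println(cells);
        // [cf1:age@100, cf1:company@100, cf1:name@200, cf1:name@100, cf1:salary@100]
    }
}
```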

- Scan multiple rows with filters

@Test

public void testScan() throws IOException {

Table table = conn.getTable(TableName.valueOf("myhbase:t_user"));

Scan scan=new Scan();

Filter filter1=new PrefixFilter("www.baidu.com".getBytes());

Filter filter2=new RowFilter(CompareFilter.CompareOp.EQUAL,new SubstringComparator("007"));

FilterList filter=new FilterList(FilterList.Operator.MUST_PASS_ALL,filter1,filter2);

scan.setFilter(filter);

ResultScanner resultScanner = table.getScanner(scan);

for (Result result : resultScanner) {

String row=Bytes.toString(result.getRow());

String name=Bytes.toString(result.getValue("cf1".getBytes(),"name".getBytes()));

Integer age=Bytes.toInt(result.getValue("cf1".getBytes(),"age".getBytes()));

Double salary=Bytes.toDouble(result.getValue("cf1".getBytes(),"salary".getBytes()));

String company=Bytes.toString(result.getValue("cf1".getBytes(),"company".getBytes()));

System.out.println(row+" \t "+name+"\t"+age+"\t"+salary+"\t"+company);

}

table.close();

//output: www.baidu.com:007 user7 7 13000.0 www.baidu.com

}
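The FilterList above keeps a row only if both filters accept it (`MUST_PASS_ALL` is a logical AND; `MUST_PASS_ONE` would be OR). The same logic with `java.util.function.Predicate`, as a plain-Java analogue rather than HBase code; the row keys are hypothetical examples in the format written by testPut01. HBase evaluates filters on the RegionServers, so they reduce what is returned to the client, not necessarily what is read there.

```java
import java.util.List;
import java.util.function.Predicate;
import java.util.stream.Collectors;

/** Plain-Java analogue of the FilterList above (not HBase code). */
public class FilterListDemo {
    static final Predicate<String> PREFIX = key -> key.startsWith("www.baidu.com"); // ~ PrefixFilter
    static final Predicate<String> CONTAINS_007 = key -> key.contains("007");       // ~ RowFilter + SubstringComparator

    // FilterList.Operator.MUST_PASS_ALL: every filter must accept the row
    static List<String> mustPassAll(List<String> keys) {
        return keys.stream().filter(PREFIX.and(CONTAINS_007)).collect(Collectors.toList());
    }

    // FilterList.Operator.MUST_PASS_ONE: at least one filter must accept the row
    static List<String> mustPassOne(List<String> keys) {
        return keys.stream().filter(PREFIX.or(CONTAINS_007)).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // hypothetical row keys in the "company:00i" format
        List<String> keys = List.of("www.alibaba.com:007", "www.baidu.com:003", "www.baidu.com:007", "www.qq.com:001");
        System.out.println(mustPassAll(keys)); // [www.baidu.com:007]
        System.out.println(mustPassOne(keys)); // [www.alibaba.com:007, www.baidu.com:003, www.baidu.com:007]
    }
}
```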