2021-4-16

1. Initialize the servers

2. Configure the etcd cluster

3. Install and configure keepalived

4. Install Docker

5. Install and configure k8s

1. Initialize the servers (run on all nodes; the commands can be pasted into a script)

#!/bin/bash

#CentOS 7 system initialization

yum install -y rsync lrzsz* vim telnet ntpdate wget net-tools nfs-utils.x86_64

chmod +x /etc/rc.d/rc.local

#Disable SELinux, the firewall, and swap

setenforce 0

sed -i 's/SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

systemctl disable firewalld.service

systemctl stop firewalld.service

swapoff -a;sed -i 's/.*swap.*/#&/' /etc/fstab

#Raise the process and file-descriptor limits

sed -i 's/4096/65535/' /etc/security/limits.d/20-nproc.conf

echo '* soft nofile 65535' >> /etc/security/limits.conf

echo '* hard nofile 65535' >> /etc/security/limits.conf

echo "ulimit revamped: `ulimit -n`"

#Pass bridged IPv4 traffic to the iptables chains so that iptables can inspect bridged traffic

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

#Add the host names to /etc/hosts

cat >> /etc/hosts << EOF

192.168.2.241 2dot241

192.168.2.242 2dot242

192.168.2.243 2dot243

192.168.2.239 2dot239

192.168.2.240 2dot240

EOF
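Because the script appends to /etc/hosts with `>>`, running it a second time duplicates every entry. A minimal sketch of an idempotent variant follows. The helper name `add_host` and the `HOSTS_FILE` variable are made up for illustration, and the default target is a scratch file so the sketch can be tried without touching /etc/hosts.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not mapped yet.
# HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts to use it for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
  local ip="$1" name="$2"
  # Look for NAME as a whole whitespace-delimited field on a non-comment line.
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$HOSTS_FILE"; then
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
  fi
}

add_host 192.168.2.241 2dot241
add_host 192.168.2.242 2dot242
add_host 192.168.2.243 2dot243
add_host 192.168.2.239 2dot239
add_host 192.168.2.240 2dot240
add_host 192.168.2.241 2dot241   # repeated call, nothing is appended
```

The same check-then-append pattern would also suit the two lines added to /etc/security/limits.conf.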

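2. Configure an etcd cluster with TLS authentication; etcd acts as the database for the whole k8s cluster

--1. Download the CFSSL tools. CFSSL consists of a command-line tool and an HTTP API server for signing, verifying, and bundling TLS certificates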

curl -L -o /bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

curl -L -o /bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

curl -L -o /bin/cfssl-certinfo https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x /bin/cfssl*

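--2. Create the CA configuration files (certificate expiry set to 10 years)

#Configure the certificate signing policy, which defines what kinds of certificates the CA may issue

cat > ca-config.json <

--Create the CA certificate signing request (CSR) file

cat > ca-csr.json <

--Create the etcd certificate request file. Important: use the IP addresses of your own etcd cluster

cat > etcd-csr.json <

--3. Generate the certificates and private keys and distribute them to the other etcd servers (passwordless SSH was set up beforehand and is not covered here)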
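The cfssl commands in this step read ca-config.json, ca-csr.json, and etcd-csr.json, whose contents are not reproduced in these notes. As a rough sketch of what they might contain: the profile name `kubernetes`, the 10-year expiry (87600h), and the three etcd IPs come from the surrounding steps, while the key algorithm, the `CN` values, and the `names` fields are placeholder assumptions to adapt. The files are written to a scratch directory (`OUT_DIR`, also an assumption) rather than the working directory.

```shell
#!/bin/bash
# Sketch only: write three cfssl input files into a scratch directory.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Signing policy: one profile named "kubernetes" (the name passed to -profile), valid for 10 years.
cat > "$OUT_DIR/ca-config.json" << 'EOF'
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "87600h"
      }
    }
  }
}
EOF

# CSR for the CA itself. The names block holds placeholder values.
cat > "$OUT_DIR/ca-csr.json" << 'EOF'
{
  "CN": "kubernetes",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "ST": "Beijing", "L": "Beijing", "O": "k8s", "OU": "System" } ]
}
EOF

# CSR for the etcd certificate. "hosts" must list every etcd member IP.
cat > "$OUT_DIR/etcd-csr.json" << 'EOF'
{
  "CN": "etcd",
  "hosts": ["127.0.0.1", "192.168.2.241", "192.168.2.242", "192.168.2.243"],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "ST": "Beijing", "L": "Beijing", "O": "k8s", "OU": "System" } ]
}
EOF

echo "wrote cfssl input files to $OUT_DIR"
```

Listing 127.0.0.1 in `hosts` only matters if etcd is also reached over the loopback address.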

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

#This produces two .pem files and one .csr file: ca-key.pem, ca.pem, ca.csr

cfssl gencert -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

[root@2dot241 test]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem etcd.csr etcd-csr.json etcd-key.pem etcd.pem

[root@2dot241 test]# mkdir -p /etc/etcd/ssl

[root@2dot241 test]# cp etcd.pem etcd-key.pem ca.pem /etc/etcd/ssl/

[root@2dot241 test]# scp -r /etc/etcd 192.168.2.242:/etc/

[root@2dot241 test]# scp -r /etc/etcd 192.168.2.243:/etc/

--4. Download etcd on all three servers, or copy the two etcd binaries (etcd and etcdctl) to /usr/local/bin/ on the other two servers

[root@2dot241 test]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz

[root@2dot241 test]# tar -xvf etcd-v3.3.10-linux-amd64.tar.gz

[root@2dot241 test]# cp -a etcd-v3.3.10-linux-amd64/etcd* /usr/local/bin/

--5. Create the etcd systemd unit file and copy it to the other two servers. Remember to adjust it on each server (four IP addresses and one name)
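The body of the unit file is not reproduced in these notes. The sketch below shows one plausible shape, using flags that etcd v3.3 accepts. The helper name `render_etcd_unit`, the member names etcd1 to etcd3, and the cluster token are assumptions; the certificate paths, data directory, and IPs come from the surrounding steps. The four per-server IP occurrences and the one name are the arguments.

```shell
#!/bin/bash
# Hypothetical helper: print an etcd systemd unit for one member.
# Usage: render_etcd_unit <member-name> <local-ip>
render_etcd_unit() {
  local name="$1" ip="$2"
  # "\\" inside an unquoted heredoc emits a literal backslash (systemd line continuation).
  cat << EOF
[Unit]
Description=etcd
After=network.target

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd \\
  --name=${name} \\
  --data-dir=/var/lib/etcd \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --client-cert-auth \\
  --peer-client-cert-auth \\
  --initial-advertise-peer-urls=https://${ip}:2380 \\
  --listen-peer-urls=https://${ip}:2380 \\
  --listen-client-urls=https://${ip}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls=https://${ip}:2379 \\
  --initial-cluster-token=etcd-cluster-0 \\
  --initial-cluster=etcd1=https://192.168.2.241:2380,etcd2=https://192.168.2.242:2380,etcd3=https://192.168.2.243:2380 \\
  --initial-cluster-state=new
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Example: preview the unit for the second server.
render_etcd_unit etcd2 192.168.2.242
```

On a real server the output would be redirected to /etc/systemd/system/etcd.service, with the name and IP matching that server's entry in `--initial-cluster`.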

[root@2dot241 test]# cat > /etc/systemd/system/etcd.service <

--6. Start etcd on all three servers and verify the cluster health. The first member to start waits until the other two are up

[root@2dot241 ~]# mkdir -p /var/lib/etcd

[root@2dot241 ~]# systemctl daemon-reload

[root@2dot241 ~]# systemctl enable etcd

[root@2dot241 ~]# systemctl start etcd

[root@2dot241 ~]# etcdctl --endpoints=https://192.168.2.241:2379 \
  --ca-file=/etc/etcd/ssl/ca.pem \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  cluster-health

#Output: member 1500ba7df8eae435 is healthy: got healthy result from https://192.168.2.241:2379

#Output: member e2dd7103115d4825 is healthy: got healthy result from https://192.168.2.243:2379

#Output: member ef5a0092d902bbfe is healthy: got healthy result from https://192.168.2.242:2379

3. Install keepalived on all three servers

yum -y install keepalived

#Server 241

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.2.5:6443" # vip

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens160 # local NIC name

virtual_router_id 61

priority 120 # must be unique; a higher value means higher priority, so the other two nodes use lower values

advert_int 1

mcast_src_ip 192.168.2.241 # local IP

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

#192.168.2.241 # local IP, commented out

192.168.2.242

192.168.2.243

}

virtual_ipaddress {

192.168.2.5/24 # VIP

}

track_script {

CheckK8sMaster

}

}

EOF

#Server 242

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.2.5:6443" # vip

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens160 # local NIC name

virtual_router_id 61

priority 110 # must be unique; a higher value means higher priority

advert_int 1

mcast_src_ip 192.168.2.242 # local IP

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

192.168.2.241

#192.168.2.242 # local IP, commented out

192.168.2.243

}

virtual_ipaddress {

192.168.2.5/24 # VIP

}

track_script {

CheckK8sMaster

}

}

EOF

#Server 243

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.2.5:6443" # vip

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens160 # local NIC name

virtual_router_id 61

priority 100 # must be unique; a higher value means higher priority (this node has the lowest of the three)

advert_int 1

mcast_src_ip 192.168.2.243 # local IP

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

192.168.2.241

192.168.2.242

#192.168.2.243 # local IP, commented out

}

virtual_ipaddress {

192.168.2.5/24 # VIP

}

track_script {

CheckK8sMaster

}

}

EOF
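The three configurations above differ only in the priority, the local IP, and which peer is left out of unicast_peer. One way to avoid maintaining three copies is a small generator. This is a sketch: the helper name `render_keepalived` and the `NODES` and `VIP` variables are made up for illustration, and all other settings are copied from the configs above.

```shell
#!/bin/bash
# Hypothetical helper: print keepalived.conf for one node.
# Usage: render_keepalived <local-ip> <priority>
NODES="192.168.2.241 192.168.2.242 192.168.2.243"
VIP="192.168.2.5"

render_keepalived() {
  local local_ip="$1" priority="$2" peers="" ip
  # Every node except the local one becomes a unicast peer.
  for ip in $NODES; do
    [ "$ip" = "$local_ip" ] || peers="${peers}        ${ip}"$'\n'
  done
  cat << EOF
global_defs {
    router_id LVS_k8s
}
vrrp_script CheckK8sMaster {
    script "curl -k https://${VIP}:6443"
    interval 3
    timeout 9
    fall 2
    rise 2
}
vrrp_instance VI_1 {
    state MASTER
    interface ens160
    virtual_router_id 61
    priority ${priority}
    advert_int 1
    mcast_src_ip ${local_ip}
    nopreempt
    authentication {
        auth_type PASS
        auth_pass sqP05dQgMSlzrxHj
    }
    unicast_peer {
${peers}    }
    virtual_ipaddress {
        ${VIP}/24
    }
    track_script {
        CheckK8sMaster
    }
}
EOF
}

# Example: preview the configuration for server 242.
render_keepalived 192.168.2.242 110
```

On each server the output would be redirected to /etc/keepalived/keepalived.conf, with priorities 120, 110, and 100 as above.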

#Start keepalived on each server

systemctl enable keepalived && systemctl start keepalived

#On the server with the highest priority, check that the virtual IP is present

[root@2dot241 test]# ip addr

ens160: mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:b1:e4:82 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.241/24 brd 192.168.2.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.2.5/24 scope global secondary ens160

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:feb1:e482/64 scope link

valid_lft forever preferred_lft forever
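Reading the `ip addr` output by eye works for a one-off check. For a script, the same check can be reduced to an exit code. A minimal sketch, assuming a helper named `has_vip` (hypothetical) that reads `ip addr` output on stdin:

```shell
#!/bin/bash
# Hypothetical helper: succeed if the given IPv4 address is listed in `ip addr` output on stdin.
has_vip() {
  local vip="$1"
  # Fixed-string match on "inet <vip>/" so that 192.168.2.5 does not match 192.168.2.50.
  grep -Fq "inet ${vip}/"
}

# On a live node (interface name and VIP taken from the configs above):
#   ip addr show dev ens160 | has_vip 192.168.2.5 && echo "this node holds the VIP"
```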

4. Install Docker (run on all nodes)

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce-18.09.0-3.el7 docker-ce-cli-18.09.0-3.el7 #to list the available versions: yum list docker-ce --showduplicates | sort -r

systemctl restart docker && systemctl enable docker

5. Install kubeadm and kubelet (run on all nodes)

#Configure the k8s yum repository

cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

#List the installable versions (1.15.6 is used here)

yum list kubeadm --showduplicates | sort -r

yum list kubelet --showduplicates | sort -r

#Install 1.15.6-0

yum install -y kubelet-1.15.6-0 kubeadm-1.15.6-0

systemctl enable kubelet

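--1. Initialize the first master server

#Create the kubeadm-config.yaml and kube-flannel.yml configuration files, then adjust kubeadm-config.yaml:

★ certSANs: set the IPs and the matching master host names

★ etcd endpoints: set the IPs of your etcd nodes

★ controlPlaneEndpoint: set to the VIP

★ serviceSubnet: the IP range that k8s Services will use

★ podSubnet: the IP range that k8s Pods will use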

cat > kubeadm-config.yaml << EOF
apiServer:
  certSANs:
  - 192.168.2.5
  - 192.168.2.241
  - 192.168.2.242
  - 192.168.2.243
  - 127.0.0.1
  - "2dot241"
  - "2dot242"
  - "2dot243"
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.2.5:6443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:
    endpoints:
    - https://192.168.2.241:2379
    - https://192.168.2.242:2379
    - https://192.168.2.243:2379
    caFile: /etc/etcd/ssl/ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.15.6
networking:
  dnsDomain: cluster.local
  podSubnet: 172.20.0.0/16
  serviceSubnet: 172.21.0.0/16
scheduler: {}
EOF

#Download and edit kube-flannel.yml

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml -O kube-flannel.yml

#If the download fails, the file is also available at: https://pan.baidu.com/s/1U8GR2B1InUxVP2RiBMCxFw (extraction code: 1234)

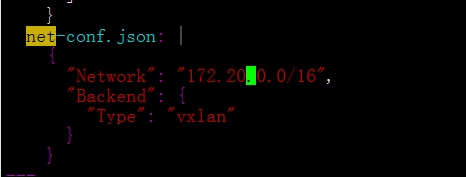

修改其中net-conf这个参数,网段和kubeadm-config.yaml中podSubnet: 要一致,其他就不用动了,如下图
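The net-conf edit can also be scripted instead of done by hand. A minimal sketch, assuming GNU sed and that the manifest stores the range as a `"Network": "..."` entry inside net-conf.json (the upstream manifest typically ships with 10.244.0.0/16). The helper name `set_flannel_network` is made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: set the "Network" value in a flannel manifest to the given pod subnet.
# Usage: set_flannel_network <manifest> <cidr>
set_flannel_network() {
  local manifest="$1" subnet="$2"
  # '#' is used as the sed delimiter because the subnet contains '/'.
  sed -i -E "s#(\"Network\":[[:space:]]*)\"[^\"]*\"#\1\"${subnet}\"#" "$manifest"
}

# Example, matching podSubnet in kubeadm-config.yaml:
#   set_flannel_network kube-flannel.yml 172.20.0.0/16
#   grep '"Network"' kube-flannel.yml
```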

#Initialize the first master

[root@2dot241 ~]# kubeadm init --config kubeadm-config.yaml

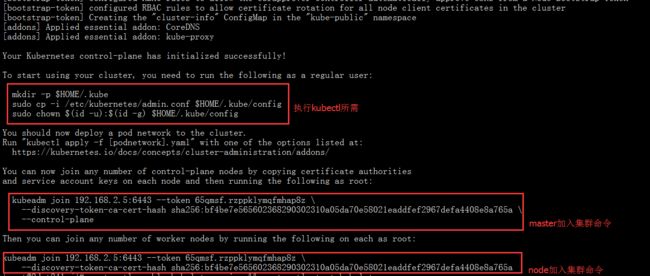

#Follow the prompts: 1. set up the kubeconfig (needed by kubectl); 2. apply kube-flannel.yml; 3. join the other nodes to the cluster

[root@2dot241 ~]# mkdir -p $HOME/.kube

[root@2dot241 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@2dot241 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@2dot241 ~]# kubectl create -f kube-flannel.yml #create the flannel network pods

#Copy the keys and certificates needed for joining to the other master servers

scp -r /etc/kubernetes/pki/ 192.168.2.242:/etc/kubernetes/

scp -r /etc/kubernetes/pki/ 192.168.2.243:/etc/kubernetes/

--2. Run the join command on the other two masters

[root@2dot242 ~]# kubeadm join 192.168.2.5:6443 --token 65qmsf.rzppklymqfmhap8z --discovery-token-ca-cert-hash sha256:bf4be7e565602368290302310a05da70e58021eaddfef2967defa4408e8a765a --control-plane

[root@2dot243 ~]# kubeadm join 192.168.2.5:6443 --token 65qmsf.rzppklymqfmhap8z --discovery-token-ca-cert-hash sha256:bf4be7e565602368290302310a05da70e58021eaddfef2967defa4408e8a765a --control-plane

#Follow the prompts to set up the kubeconfig so that kubectl also works on these two servers

#Check the result from any master in the cluster

[root@2dot242 ~]# kubectl get node

NAME      STATUS   ROLES    AGE     VERSION
2dot241   Ready    master   23h     v1.15.6
2dot242   Ready    master   8m46s   v1.15.6
2dot243   Ready    master   6m46s   v1.15.6

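--3. Join the k8s worker nodes: on each worker node, run the command shown in the third red box of the screenshot above

#This walkthrough was not done in one sitting, so the token in the join command had expired (tokens are valid for 24 hours by default) and the join failed on the worker node with "failed to get config map: Unauthorized". Generate a new join command on a master

Run on a master: kubeadm token create --print-join-command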

[root@2dot241 ~]# kubeadm token create --print-join-command

kubeadm join 192.168.2.5:6443 --token jitt2t.7d2jaobt3j49rrxu --discovery-token-ca-cert-hash sha256:bf4be7e565602368290302310a05da70e58021eaddfef2967defa4408e8a765a

#Paste it on the two worker nodes

[root@2dot239 ~]# kubeadm join 192.168.2.5:6443 --token jitt2t.7d2jaobt3j49rrxu --discovery-token-ca-cert-hash sha256:bf4be7e565602368290302310a05da70e58021eaddfef2967defa4408e8a765a

[root@2dot240 ~]# kubeadm join 192.168.2.5:6443 --token jitt2t.7d2jaobt3j49rrxu --discovery-token-ca-cert-hash sha256:bf4be7e565602368290302310a05da70e58021eaddfef2967defa4408e8a765a
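The value after `sha256:` in the join command is a hash of the cluster CA's public key, which is why it stays the same when only the token is regenerated. If it ever has to be recomputed by hand, the openssl pipeline from the kubeadm documentation can be wrapped as below. This is a sketch: the helper name `ca_cert_hash` is made up, and the pipeline assumes an RSA CA key, which is the kubeadm default.

```shell
#!/bin/bash
# Hypothetical helper: print the sha256 hash of a CA certificate's public key,
# in the hex form kubeadm expects after "sha256:".
# Usage: ca_cert_hash [path-to-ca.crt]
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On a master:
#   echo "sha256:$(ca_cert_hash)"
```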

#Run kubectl get node on a master; a newly joined node shows Ready after about a minute

[root@2dot241 ~]# kubectl get node

NAME      STATUS   ROLES    AGE     VERSION
2dot239   Ready    <none>   3m3s    v1.15.6
2dot240   Ready    <none>   2m41s   v1.15.6
2dot241   Ready    master   40h     v1.15.6
2dot242   Ready    master   16h     v1.15.6
2dot243   Ready    master   16h     v1.15.6
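Since a node needs a minute or so to become Ready, a script that continues with further steps may want to wait rather than check once. A minimal sketch, assuming a helper named `not_ready_nodes` (hypothetical) that reads `kubectl get node` output on stdin:

```shell
#!/bin/bash
# Hypothetical helper: print the names of nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get node` output on stdin and skips the header row.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# On a master, wait until every node reports Ready:
#   until [ -z "$(kubectl get node | not_ready_nodes)" ]; do sleep 5; done
```

A node marked `Ready,SchedulingDisabled` is also reported by this check, which may or may not be what is wanted.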

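Scaling out etcd and adding more masters may be covered in a later update, time permitting.

Renewing expired k8s master certificates: https://www.jianshu.com/p/c4e1396b67cc

Thanks to the authors of these two articles: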

https://blog.csdn.net/qq_31547771/article/details/100699573

https://shenshengkun.github.io/posts/omn700fj.html