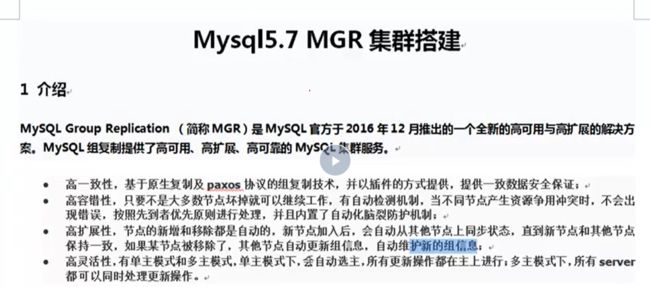

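Introduction

MGR (MySQL Group Replication) is an important direction in the future development of the MySQL database.

Engine

2.3 Mapping hostnames to IP addresses

1) Configure /etc/hosts

Use the same configuration on all three machines.

2) Log in to the database and grant replication privileges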

grant replication slave on *.* to repl@'192.168.24.%' identified by '123456';

3) Edit the /etc/my.cnf configuration file

Group Replication

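server_id = 100 # server ID

gtid_mode = ON # enable global transaction identifiers (GTIDs)

enforce_gtid_consistency = ON # enforce GTID consistency

master_info_repository = TABLE # store the master.info metadata in a system table

relay_log_info_repository = TABLE # store the relay.info metadata in a system table

binlog_checksum = NONE # disable binary log event checksums

log_slave_updates = ON # write replicated updates to the binary log (cascading replication)

log_bin = binlog # enable binary logging

binlog_format= ROW # log in row format

transaction_write_set_extraction = XXHASH64 # hash each transaction's write set with XXHASH64

loose-group_replication_group_name = 'ce9be252-2b71-11e6-b8f4-00212844f856' # name of the group to join

loose-group_replication_start_on_boot = off # do not start group replication automatically

loose-group_replication_local_address = 'xuegod1:33061' # accept incoming connections from group members on local port 33061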

loose-group_replication_group_seeds = 'xuegod1:33061, xuegod2:33062, xuegod3:33063' # seed list of group members

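loose-group_replication_bootstrap_group = off # do not bootstrap the group at startup

Comment out the first line of the block (presumably the bare "Group Replication" heading), otherwise the server reports an error.

4) Restart the MySQL server

5) Log in to MySQL

Run CHANGE MASTER to set the credentials for the recovery channel: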

change master to master_user='repl',master_password='123456' for channel 'group_replication_recovery';

6) Install the plugin

install PLUGIN group_replication SONAME 'group_replication.so';

7) Run SHOW PLUGINS again to confirm that group_replication is listed

show plugins;

8) Start the first node: after logging in to MySQL, bootstrap the group manually

set global group_replication_bootstrap_group=ON;

9) Start the bootstrap

4. Deploying an MGR cluster in single-primary and multi-primary mode

4.1 Preparing the environment

Three servers:

172.16.60.211 MGR-node1 server_id=1

172.16.60.212 MGR-node2 server_id=2

172.16.60.213 MGR-node3 server_id=3

[root@MGR-node1 ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

To keep the experiment simple, disable the firewall on all nodes.

[root@MGR-node1 ~]# systemctl stop firewalld

[root@MGR-node1 ~]# firewall-cmd --state

not running

[root@MGR-node1 ~]# cat /etc/sysconfig/selinux |grep "SELINUX=disabled"

SELINUX=disabled

[root@MGR-node1 ~]# setenforce 0

setenforce: SELinux is disabled

[root@MGR-node1 ~]# getenforce

Disabled

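Pay special attention to one key point: every MySQL node must have a distinct hostname, and every member must be reachable by its hostname.

Bind the hostnames in /etc/hosts on every node; otherwise adding nodes to the group later fails and the joining node stays stuck in the RECOVERING state.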

[root@MGR-node1 ~]# cat /etc/hosts

........

172.16.60.211 MGR-node1

172.16.60.212 MGR-node2

172.16.60.213 MGR-node3
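The hostname requirement above can be checked before starting MGR. The following is a minimal Python sketch, assuming the three name/IP pairs from this section; `parse_hosts` and `missing_members` are hypothetical helper names, not part of any MySQL tool.

```python
# Expected hostname -> IP bindings, taken from the server list in this section.
EXPECTED = {
    "MGR-node1": "172.16.60.211",
    "MGR-node2": "172.16.60.212",
    "MGR-node3": "172.16.60.213",
}


def parse_hosts(text):
    """Parse hosts-file text into a {hostname: ip} mapping."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping[name] = ip
    return mapping


def missing_members(hosts_text, expected=EXPECTED):
    """Return the hostnames that are absent or bound to a different IP."""
    mapping = parse_hosts(hosts_text)
    return [name for name, ip in expected.items() if mapping.get(name) != ip]


if __name__ == "__main__":
    with open("/etc/hosts") as handle:
        problems = missing_members(handle.read())
    print("missing or wrong bindings:", problems or "none")
```

Running the script on each node and getting an empty list is a quick sanity check before any node tries to join the group.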

4.2 Installing MySQL 5.7 on the three nodes

Install MySQL 5.7 with yum on all three MySQL nodes; see https://www.cnblogs.com/kevingrace/p/8340690.html

Install the MySQL yum repository

[root@MGR-node1 ~]# yum localinstall https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

Install MySQL 5.7

[root@MGR-node1 ~]# yum install -y mysql-community-server

Start the MySQL server and enable it at boot

[root@MGR-node1 ~]# systemctl start mysqld.service

[root@MGR-node1 ~]# systemctl enable mysqld.service

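Set the login password

Starting with 5.7, MySQL no longer allows logging in with an empty password after a fresh install. For security, the server generates a random temporary password for the administrator's first login.

The password is recorded in /var/log/mysqld.log and can be found with the command below: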

[root@MGR-node1 ~]# cat /var/log/mysqld.log|grep 'A temporary password'

2019-01-11T05:53:17.824073Z 1 [Note] A temporary password is generated for root@localhost: TaN.k:*Qw2xs

Log in to MySQL with the temporary password TaN.k:*Qw2xs found above and reset the password to 123456

[root@MGR-node1 ~]# mysql -p # enter the temporary password: TaN.k:*Qw2xs

.............

mysql> set global validate_password_policy=0;

Query OK, 0 rows affected (0.00 sec)

mysql> set global validate_password_length=1;

Query OK, 0 rows affected (0.00 sec)

mysql> set password=password("123456");

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

Check the MySQL version

[root@MGR-node1 ~]# mysql -p123456

........

mysql> select version();

+-----------+

| version() |

+-----------+

| 5.7.24 |

+-----------+

1 row in set (0.00 sec)

=====================================================================

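Note

After a default MySQL 5.7 install as above, statements may fail with:

ERROR 1819 (HY000): Your password does not satisfy the current policy requirements

This error comes from the password policy variable validate_password_policy, which takes one of three values: 0 (LOW), 1 (MEDIUM), or 2 (STRONG).

Fix: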

set global validate_password_policy=0;

set global validate_password_length=1;

4.3 Installing and configuring MGR

1) Configure the group replication settings on all nodes

MGR-node1 node

[root@MGR-node1 ~]# cp /etc/my.cnf /etc/my.cnf.bak

[root@MGR-node1 ~]# >/etc/my.cnf

[root@MGR-node1 ~]# vim /etc/my.cnf

[mysqld]

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

symbolic-links = 0

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

# Replication framework

server_id=1

gtid_mode=ON

enforce_gtid_consistency=ON

binlog_checksum=NONE

log_bin=binlog

log_slave_updates=ON

binlog_format=ROW

master_info_repository=TABLE

relay_log_info_repository=TABLE

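# Group replication settings

# The server must collect the write set of every transaction and hash it with the XXHASH64 algorithm

transaction_write_set_extraction=XXHASH64

# Name of the group to join or create; must be a valid UUID

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

# Do not start group replication automatically when the server starts

loose-group_replication_start_on_boot=off

# Use this IP address and port 24901 to accept incoming connections from other group members

loose-group_replication_local_address="172.16.60.211:24901"

# Seed members: the ip:port addresses a joining server should contact; a new server can only join with the agreement of the existing members

loose-group_replication_group_seeds="172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

# Do not bootstrap the group at startup; this avoids bootstrapping a second group with the same name on every restart

loose-group_replication_bootstrap_group=off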

report_host=172.16.60.211

report_port=3306

With the configuration above in place, copy /etc/my.cnf from MGR-node1 to the other two nodes

[root@MGR-node1 ~]# rsync -e "ssh -p22" -avpgolr /etc/my.cnf root@172.16.60.212:/etc/

[root@MGR-node1 ~]# rsync -e "ssh -p22" -avpgolr /etc/my.cnf root@172.16.60.213:/etc/

The three MGR nodes differ only in three parameters: server_id, loose-group_replication_local_address, and report_host. Everything else stays identical.

So once the copy is done, edit those three parameters in /etc/my.cnf on MGR-node2 and MGR-node3.
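To make the per-node differences concrete, here is a small Python sketch that prints the three lines each node needs, plus the seed list that is shared by all of them. The `NODES` list and the function names are illustrative assumptions built from the addresses in section 4.1; the script only prints text and does not touch /etc/my.cnf.

```python
# (hostname, ip, server_id) for each node, as listed in section 4.1.
NODES = [
    ("MGR-node1", "172.16.60.211", 1),
    ("MGR-node2", "172.16.60.212", 2),
    ("MGR-node3", "172.16.60.213", 3),
]
GR_PORT = 24901  # group communication port used in the my.cnf above


def node_overrides(ip, server_id, gr_port=GR_PORT):
    """Return the my.cnf lines that must differ on this node."""
    return [
        f"server_id={server_id}",
        f'loose-group_replication_local_address="{ip}:{gr_port}"',
        f"report_host={ip}",
    ]


def group_seeds(nodes, gr_port=GR_PORT):
    """Return the group_seeds line, which is identical on every node."""
    seeds = ",".join(f"{ip}:{gr_port}" for _, ip, _ in nodes)
    return f'loose-group_replication_group_seeds="{seeds}"'


if __name__ == "__main__":
    for name, ip, server_id in NODES:
        print(f"# {name}")
        print("\n".join(node_overrides(ip, server_id)))
    print("# shared by all nodes")
    print(group_seeds(NODES))
```

Generating the lines from one node list keeps server_id, the local address, and report_host from drifting apart when the file is edited by hand on each machine.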

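2) After configuration, restart the database on each node in turn, install the MGR plugin, and create the replication account (run on every MGR node)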

[root@MGR-node1 ~]# systemctl restart mysqld

[root@MGR-node1 ~]# mysql -p123456

.............

mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so';

Query OK, 0 rows affected (0.13 sec)

mysql> SET SQL_LOG_BIN=0;

Query OK, 0 rows affected (0.00 sec)

mysql> CREATE USER repl@'%' IDENTIFIED BY 'repl';

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO repl@'%';

Query OK, 0 rows affected (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.00 sec)

mysql> CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='repl' FOR CHANNEL 'group_replication_recovery';

Query OK, 0 rows affected, 2 warnings (0.21 sec)

4.4 Starting MGR in single-primary mode

1) Start MGR; run on the primary node (172.16.60.211)

[root@MGR-node1 ~]# mysql -p123456

...............

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (2.31 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

Query OK, 0 rows affected (0.00 sec)

View the MGR group members

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 8769f936-3e51-11e9-acaa-005056ac6820 | 172.16.60.211 | 3306 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

1 row in set (0.01 sec)

2) Join the other nodes to the MGR cluster; run on the secondaries (172.16.60.212, 172.16.60.213)

[root@MGR-node2 ~]# mysql -p123456

................

mysql> START GROUP_REPLICATION;

ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

Check the log:

[root@MGR-node2 ~]# tail -2000 /var/log/mysqld.log

.....................

.....................

2019-03-04T09:11:30.683714Z 0 [ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: 87135ebb-3e51-11e9-8931-005056880888:1-2 > Group transactions: 851d03bb-3e51-11e9-8f8d-00505688047c:1-2,

8769f936-3e51-11e9-acaa-005056ac6820:1-2,

aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:1-4'

2019-03-04T09:11:30.683817Z 0 [Warning] Plugin group_replication reported: 'The member contains transactions not present in the group. It is only allowed to join due to group_replication_allow_local_disjoint_gtids_join option'

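Fix (this option lets a member join even though it holds transactions the group does not know about, so it is only appropriate on a test setup like this one):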

mysql> set global group_replication_allow_local_disjoint_gtids_join=ON;

Query OK, 0 rows affected, 1 warning (0.00 sec)

Then join the MGR cluster again

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected, 1 warning (5.14 sec)
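The error above means the joining server holds GTIDs that the group has never seen. A rough Python sketch of that comparison follows, assuming the GTID set strings are copied from the error log. The function names are hypothetical, and intervals are expanded into Python sets, which is only reasonable for small GTID sets like these.

```python
def parse_gtid_set(text):
    """Parse 'uuid:1-2:5,uuid2:7' into {uuid: set of transaction numbers}."""
    result = {}
    for entry in text.replace("\n", "").split(","):
        entry = entry.strip()
        if not entry:
            continue
        uuid, *intervals = entry.split(":")
        numbers = result.setdefault(uuid.lower(), set())
        for interval in intervals:
            start, _, end = interval.partition("-")
            numbers.update(range(int(start), int(end or start) + 1))
    return result


def extra_transactions(local, group):
    """Return transactions present locally but missing from the group."""
    group_set = parse_gtid_set(group)
    extra = {}
    for uuid, numbers in parse_gtid_set(local).items():
        missing = numbers - group_set.get(uuid, set())
        if missing:
            extra[uuid] = sorted(missing)
    return extra


if __name__ == "__main__":
    # Values copied from the error log shown above.
    local = "87135ebb-3e51-11e9-8931-005056880888:1-2"
    group = (
        "851d03bb-3e51-11e9-8f8d-00505688047c:1-2,"
        "8769f936-3e51-11e9-acaa-005056ac6820:1-2,"
        "aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:1-4"
    )
    print(extra_transactions(local, group))
```

A non-empty result is exactly the situation the plugin refuses by default: the local server executed transactions that were never replicated through the group.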

3) View the MGR group members again (can be run on any of the three MGR nodes)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 851d03bb-3e51-11e9-8f8d-00505688047c | 172.16.60.212 | 3306 | RECOVERING |

| group_replication_applier | 87135ebb-3e51-11e9-8931-005056880888 | 172.16.60.213 | 3306 | RECOVERING |

| group_replication_applier | 8769f936-3e51-11e9-acaa-005056ac6820 | 172.16.60.211 | 3306 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

The newly joined nodes MGR-node2 and MGR-node3 are stuck in the RECOVERING state.

Check the log

[root@MGR-node3 ~]# tail -2000 /var/log/mysqld.log

.....................

.....................

2019-03-04T09:15:35.146740Z 734 [ERROR] Slave I/O for channel 'group_replication_recovery': Got fatal error 1236 from master when

reading data from binary log: 'The slave is connecting using CHANGE MASTER TO MASTER_AUTO_POSITION = 1, but the master has

purged binary logs containing GTIDs that the slave requires.', Error_code: 1236

Fix:

Log in to the primary 172.16.60.211 and look up the purged GTIDs:

[root@MGR-node1 ~]# mysql -p123456

....................

mysql> show global variables like 'gtid_purged';

+---------------+------------------------------------------+

| Variable_name | Value |

+---------------+------------------------------------------+

| gtid_purged | 8769f936-3e51-11e9-acaa-005056ac6820:1-2 |

+---------------+------------------------------------------+

1 row in set (0.00 sec)

Then run the following on the two secondaries, 172.16.60.212 and 172.16.60.213, to skip these GTIDs:

mysql> STOP GROUP_REPLICATION;

Query OK, 0 rows affected (10.14 sec)

mysql> reset master;

Query OK, 0 rows affected (0.06 sec)

mysql> set global gtid_purged = '8769f936-3e51-11e9-acaa-005056ac6820:1-2';

Query OK, 0 rows affected (0.24 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected, 1 warning (3.49 sec)

View the MGR group members again (can be run on any of the three MGR nodes):

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 851d03bb-3e51-11e9-8f8d-00505688047c | 172.16.60.212 | 3306 | ONLINE |

| group_replication_applier | 87135ebb-3e51-11e9-8931-005056880888 | 172.16.60.213 | 3306 | ONLINE |

| group_replication_applier | 8769f936-3e51-11e9-acaa-005056ac6820 | 172.16.60.211 | 3306 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

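The output above shows that all three MGR nodes are ONLINE. The primary is 172.16.60.211 (in MySQL 5.7 the member table has no role column; the primary can be confirmed with SHOW STATUS LIKE 'group_replication_primary_member'). Only the primary accepts writes and the other two MGR nodes are read-only, so the single-primary MGR setup is complete.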
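Checking the member table by eye gets tedious when nodes are restarted often. As a sketch only, the following Python parses the text that the mysql client prints for replication_group_members and lists the hosts that are not ONLINE. `parse_members` and `not_online` are hypothetical names, and the sample text is abbreviated from the outputs in this section.

```python
SAMPLE = """
+---------------------------+---------------+--------------+
| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |
+---------------------------+---------------+--------------+
| group_replication_applier | 851d03bb-3e51-11e9-8f8d-00505688047c | 172.16.60.212 | 3306 | RECOVERING |
| group_replication_applier | 8769f936-3e51-11e9-acaa-005056ac6820 | 172.16.60.211 | 3306 | ONLINE |
+---------------------------+---------------+--------------+
"""


def parse_members(table_text):
    """Parse mysql client table output into a list of row dicts."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in table_text.splitlines()
        if line.strip().startswith("|")  # skip the +---+ border lines
    ]
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def not_online(members):
    """Return the hosts whose MEMBER_STATE is anything other than ONLINE."""
    return [m["MEMBER_HOST"] for m in members if m["MEMBER_STATE"] != "ONLINE"]


if __name__ == "__main__":
    print(not_online(parse_members(SAMPLE)))
```

An empty list corresponds to the healthy state described above, with every member ONLINE.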

==============================================================================

Verify data synchronization and read/write behavior in single-primary mode:

First create a test database on the primary 172.16.60.211

[root@MGR-node1 ~]# mysql -p123456

..............

mysql> CREATE DATABASE kevin CHARACTER SET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.06 sec)

mysql> use kevin;

Database changed

mysql> create table if not exists haha (id int(10) PRIMARY KEY AUTO_INCREMENT,name varchar(50) NOT NULL);

Query OK, 0 rows affected (0.24 sec)

mysql> insert into kevin.haha values(1,"wangshibo"),(2,"guohuihui"),(3,"yangyang"),(4,"shikui");

Query OK, 4 rows affected (0.13 sec)

Records: 4 Duplicates: 0 Warnings: 0

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

Then check the data on the two secondaries, 172.16.60.212 and 172.16.60.213: the primary's data has already been replicated to both

[root@MGR-node2 ~]# mysql -p123456

..................

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

Now try to modify data on the secondaries. The update fails, because in single-primary mode the secondaries are read-only and reject writes.

[root@MGR-node3 ~]# mysql -p123456

.................

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

mysql> delete from kevin.haha where id>3;

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

mysql> insert into kevin.haha values(11,"beijing"),(12,"shanghai"),(13,"anhui");

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

Writes are only possible on the primary

[root@MGR-node1 ~]# mysql -p123456

..............

mysql> insert into kevin.haha values(11,"beijing"),(12,"shanghai"),(13,"anhui");

Query OK, 3 rows affected (0.15 sec)

Records: 3 Duplicates: 0 Warnings: 0

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

| 11 | beijing |

| 12 | shanghai |

| 13 | anhui |

+----+-----------+

7 rows in set (0.00 sec)

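4.5 Switching to multi-primary mode

Switching the MGR mode requires restarting group replication: stop it on all nodes, set group_replication_single_primary_mode=OFF and the related parameters, then start group replication again.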

1) Stop group replication (run on all MGR nodes):

mysql> stop group_replication;

Query OK, 0 rows affected (9.08 sec)

mysql> set global group_replication_single_primary_mode=OFF;

Query OK, 0 rows affected (0.00 sec)

mysql> set global group_replication_enforce_update_everywhere_checks=ON;

Query OK, 0 rows affected (0.00 sec)

2) Pick any MGR node and run the following (MGR-node1 is used here):

[root@MGR-node1 ~]# mysql -p123456

...............

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (2.20 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

Query OK, 0 rows affected (0.00 sec)

3) Then run on the other MGR nodes (here MGR-node2 and MGR-node3):

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected, 1 warning (5.89 sec)

4) View the MGR group members (can be run on any MGR node)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 851d03bb-3e51-11e9-8f8d-00505688047c | 172.16.60.212 | 3306 | ONLINE |

| group_replication_applier | 87135ebb-3e51-11e9-8931-005056880888 | 172.16.60.213 | 3306 | ONLINE |

| group_replication_applier | 8769f936-3e51-11e9-acaa-005056ac6820 | 172.16.60.211 | 3306 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

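All MGR nodes are ONLINE and, in multi-primary mode, every member acts as a primary. The multi-primary MGR setup is complete.

=========================================

Verify data synchronization in multi-primary mode:

Modify data on MGR-node1: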

[root@MGR-node1 ~]# mysql -p123456

.................

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

| 11 | beijing |

| 12 | shanghai |

| 13 | anhui |

+----+-----------+

7 rows in set (0.00 sec)

mysql> delete from kevin.haha where id>10;

Query OK, 3 rows affected (0.08 sec)

Modify data on MGR-node2

[root@MGR-node2 ~]# mysql -p123456

...............

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

mysql> insert into kevin.haha values(11,"beijing"),(12,"shanghai"),(13,"anhui");

Query OK, 3 rows affected (0.08 sec)

Records: 3 Duplicates: 0 Warnings: 0

Modify data on MGR-node3

[root@MGR-node3 ~]# mysql -p123456

.............

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

| 11 | beijing |

| 12 | shanghai |

| 13 | anhui |

+----+-----------+

7 rows in set (0.00 sec)

mysql> delete from kevin.haha where id>11;

Query OK, 2 rows affected (0.14 sec)

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

| 11 | beijing |

+----+-----------+

5 rows in set (0.00 sec)

As shown above, in multi-primary mode every node can both read and write.

4.6 Switching back to single-primary mode

1) Stop group replication (run on all MGR nodes):

mysql> stop group_replication;

Query OK, 0 rows affected (9.29 sec)

mysql> set global group_replication_enforce_update_everywhere_checks=OFF;

Query OK, 0 rows affected (0.00 sec)

mysql> set global group_replication_single_primary_mode=ON;

Query OK, 0 rows affected (0.00 sec)

2) Choose one node as the primary and run the following on it (MGR-node1 is chosen here)

[root@MGR-node1 ~]# mysql -p123456

................

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (2.12 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

Query OK, 0 rows affected (0.00 sec)

3) Run on the remaining nodes, that is, the secondaries (here MGR-node2 and MGR-node3):

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected, 1 warning (6.16 sec)

4) View the MGR group members (can be run on any MGR node)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 851d03bb-3e51-11e9-8f8d-00505688047c | 172.16.60.212 | 3306 | ONLINE |

| group_replication_applier | 87135ebb-3e51-11e9-8931-005056880888 | 172.16.60.213 | 3306 | ONLINE |

| group_replication_applier | 8769f936-3e51-11e9-acaa-005056ac6820 | 172.16.60.211 | 3306 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

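The group is now back in single-primary mode: 172.16.60.211 is the primary and accepts writes, while the other two nodes, 172.16.60.212 and 172.16.60.213, are secondaries and are read-only.

4.7 Failover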

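1) Single-primary mode

If the primary fails, the election procedure picks one of the secondaries as the new primary. Simulate a failure as follows:

Stop the mysqld service on the primary MGR-node1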

[root@MGR-node1 ~]# systemctl stop mysqld

Then view the MGR group members on another node, for example MGR-node2

[root@MGR-node2 ~]# mysql -p123456

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 851d03bb-3e51-11e9-8f8d-00505688047c | 172.16.60.212 | 3306 | ONLINE |

| group_replication_applier | 87135ebb-3e51-11e9-8931-005056880888 | 172.16.60.213 | 3306 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

2 rows in set (0.00 sec)

Try modifying data on MGR-node2

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

| 11 | beijing |

| 12 | shanghai |

| 13 | anhui |

+----+-----------+

7 rows in set (0.00 sec)

mysql> delete from kevin.haha where id>10;

Query OK, 3 rows affected (0.06 sec)

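As shown above, once the former primary MGR-node1 went down, MGR-node2 accepts writes, which means MGR-node2 has been elected as the new primary.

MGR-node3 is still a secondary: it can read but not write.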
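Why MGR-node2 rather than MGR-node3? As far as I understand the MySQL 5.7 rules (5.7.20 and later), the election prefers the member with the highest group_replication_member_weight and breaks ties by the lowest server_uuid in lexical order. The Python sketch below works under that assumption, with both weights left at the default of 50; `elect_primary` is a hypothetical name, not a MySQL function.

```python
def elect_primary(members):
    """Pick the new primary from (server_uuid, weight) pairs.

    Assumed rule: highest weight wins, ties go to the lowest UUID.
    """
    return min(members, key=lambda m: (-m[1], m[0]))[0]


if __name__ == "__main__":
    # The two surviving members from the output above, default weight 50.
    survivors = [
        ("851d03bb-3e51-11e9-8f8d-00505688047c", 50),  # 172.16.60.212
        ("87135ebb-3e51-11e9-8931-005056880888", 50),  # 172.16.60.213
    ]
    print(elect_primary(survivors))
```

Under this rule the member on 172.16.60.212 sorts first, which matches the behavior observed above.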

[root@MGR-node3 ~]# mysql -p123456

..............

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

mysql> insert into kevin.haha values(11,"beijing"),(12,"shanghai"),(13,"anhui");

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

Now bring MGR-node1 back. After it is restored, group replication has to be started manually on that node

[root@MGR-node1 ~]# systemctl start mysqld

[root@MGR-node1 ~]# mysql -p123456

...............

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (3.15 sec)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 851d03bb-3e51-11e9-8f8d-00505688047c | 172.16.60.212 | 3306 | ONLINE |

| group_replication_applier | 87135ebb-3e51-11e9-8931-005056880888 | 172.16.60.213 | 3306 | ONLINE |

| group_replication_applier | 8769f936-3e51-11e9-acaa-005056ac6820 | 172.16.60.211 | 3306 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

mysql> insert into kevin.haha values(11,"beijing"),(12,"shanghai"),(13,"anhui");

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

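After recovery, MGR-node1 has become a secondary: it can read but not write.

If a secondary fails, then after it is restored you only need to start group replication manually on it ("START GROUP_REPLICATION;"),

and it rejoins the MGR cluster and automatically catches up with the data on the other nodes.

=============================================================

2) Multi-primary mode

If a node fails, the remaining nodes keep replicating.

When the failed node is restored, you only need to start group replication manually on it ("START GROUP_REPLICATION;"),

and it rejoins the MGR cluster and automatically catches up with the data on the other nodes.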
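How many nodes can fail before the group stops accepting writes? Group Replication needs a majority of members to agree, so a group of n members tolerates (n - 1) / 2 unexpected failures, rounded down. The short Python sketch below does that arithmetic; the function names are illustrative. Note that a clean shutdown, such as the systemctl stop mysqld used above, makes the member leave the group voluntarily, so the group shrinks instead of counting it as a failure.

```python
def majority(group_size):
    """Smallest number of members that forms a majority."""
    return group_size // 2 + 1


def tolerated_failures(group_size):
    """Unexpected member failures the group can survive while keeping a majority."""
    return (group_size - 1) // 2


if __name__ == "__main__":
    for size in range(1, 8):
        print(size, majority(size), tolerated_failures(size))
```

For the three-node cluster in this walkthrough the result is one tolerated failure, and adding a fourth node does not raise that number.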