基于智能和视觉的火灾检测系统:调查 (论文翻译)


基于智能和视觉的火灾检测系统:调查

Intelligent and Vision-based Fire Detection Systems: a Survey

Fengju Bu & Mohammad Samadi Gharajeh

- 山东农业工程大学

- Shandong Agriculture and Engineering University

- Young Researchers and Elite Club, Tabriz Branch, Islamic Azad University

Abstract

火灾是世界上主要的灾难之一。火灾探测系统应在最短的时间内探测到各种环境(例如建筑物,森林和农村地区)中的火灾,以减少经济损失和人为灾难。实际上,火灾传感器是对传统点传感器(例如,烟雾和热探测器)的补充,后者可为人们提供火灾发生的预警。结合图像处理技术的摄像机比点传感器能够更快地检测到火灾。而且,与常规探测器相比,它们更容易提供火的大小,增长和方向。最初,本文简要介绍了火灾探测系统设计中应考虑的各种环境(包括建筑物,森林和矿山)的主要特征。随后,它描述了研究人员在过去十年中提出的一些基于智能和视觉的火灾探测系统。本文将这些系统分为两类:森林火灾智能检测系统和所有环境的智能火灾检测系统。他们使用各种智能技术(例如卷积神经网络,颜色模型和模糊逻辑)来在各种环境中高精度检测火灾发生。在不同评估方案下,将火灾检测系统的性能在检测率,精度,真阳性率,假阳性率等方面进行比较。

Fire is one of the main disasters in the world. A fire detection system should detect fires in various environments (e.g., buildings, forests, and rural areas) in the shortest possible time in order to reduce financial and human losses. Fire sensors are, in fact, complementary to conventional point sensors (e.g., smoke and heat detectors), which give people early warnings of fire occurrences. Cameras combined with image processing techniques detect fire occurrences more quickly than point sensors. Moreover, they provide the size, growth, and direction of fires more easily than conventional detectors. This paper first gives an overview of the main features of various environments, including buildings, forests, and mines, that should be considered in the design of fire detection systems. It then describes some of the intelligent and vision-based fire detection systems that researchers have presented in the last decade. These systems are categorized into two groups: intelligent detection systems for forest fires and intelligent fire detection systems for all environments. They use various intelligent techniques (e.g., convolutional neural networks, color models, and fuzzy logic) to detect fire occurrences with high accuracy in various environments. The performance of the fire detection systems is compared in terms of detection rate, precision, true positive rate, false positive rate, etc. under different evaluation scenarios.

1. Introduction

火灾会给人类安全,健康和财产造成更多问题。过去几年中,火灾造成的经济和人文损失已大大增加。它们是由一些不可预料的条件引起的,例如燃料管理政策的变更,气候变化以及农村地区的高速发展[1]。火灾探测系统是监视系统中的重要组成部分。它监视各种环境(例如建筑物)作为预警框架的主要单元,以最好地报告起火情况。现有的大多数火灾探测系统都使用有关颗粒采样,温度采样和烟雾分析的点传感器。它们缓慢地测量着火概率,通常在数分钟之内,并且在户外环境中几乎没有适用性。当有足够数量的烟雾颗粒流入设备或温度显着升高时,烟雾探测器和热探测器就会触发以避免误报。此外,时间是减少火灾损失的主要因素之一。因此,应减少探测系统的响应时间,以增加扑灭火灾的机会,从而减少财务和人文破坏[2]。

Fire causes serious problems for human safety, health, and property. Financial and human losses from fires have increased considerably over the past years. Fires are caused by unpredictable conditions such as changing fuel management policies, climate change, and rapid development in rural areas [1]. A fire detection system is an important component of surveillance systems. As the main unit of an early warning framework, it monitors various environments (e.g., buildings) to report the start of a fire as early as possible. Most existing fire detection systems use point sensors based on particle sampling, temperature sampling, and smoke analysis. They measure fire probabilities slowly, usually in minutes, and have little applicability in outdoor environments. To avoid false alarms, smoke and heat detectors trigger only after a sufficient amount of smoke particles has flowed into the device or the temperature has risen considerably. Besides, time is one of the main factors in minimizing fire damage. Thus, the response time of detection systems should be decreased to increase the chances of extinguishing fires and, thereby, reduce the financial and human damage [2].

在过去的几十年中,研究人员试图克服点检测器响应时间慢的问题。 在替代的、基于火灾传感器计算的自动火灾探测系统中可以观察到这方面的努力。 他们总结说,检测烟雾和火焰的最新有效技术之一是从视觉传感器获得图像和/或视频帧[3]。 基于视觉传感器的技术可以缩短响应时间,提高检测过程的概率,并显着提供大面积的覆盖范围(例如,开放环境)。 视觉传感器可以提供有关火和烟的方向,生长以及大小的更多信息[4]。 此外,我们可以以较低的实施成本升级安装在各种环境(例如工厂和公共场所)中的现有监视系统。 通过使用视频处理和机器视觉技术,可以执行此过程以提供火灾预警,并检测火焰和烟雾。

Over the last decades, researchers have attempted to overcome the slow response time of point detectors. This effort can be seen in alternative, fire-sensor-based computing for automatic fire detection systems. Researchers have concluded that one of the latest efficient techniques for detecting smoke and flame is to acquire images and/or video frames from vision sensors [3]. Vision-sensor-based techniques can considerably decrease response time, increase detection probability, and provide coverage of large areas (e.g., open environments). Vision sensors can give more information about the direction, growth, and size of fire and smoke [4]. In addition, existing surveillance systems installed in various environments (e.g., factories and public sites) can be upgraded at low implementation cost. Such upgrades can provide early warnings of fires and also detect flame and smoke by using video processing and machine vision techniques.

由视觉传感器实现的火灾探测系统通常由三个单元组成:运动探测,候选对象分割以及火焰或烟雾斑点探测。在这些系统中,相机通常被认为是固定的,但是基于图像减法和背景建模技术的一些变化[5],可以检测到初始运动。运动检测过程之后可以使用颜色信息[6,7],像素可变性度量[8],纹理提示[9]或光流场分布[10]对候选对象进行分割。可以通过图像分离方法[11,12]或通过以数学术语对火焰或烟雾建模来识别火焰和烟雾斑点[13-15]。在进行运动检测之后,可以通过使用形态图像处理方法作为预处理步骤来识别火灾的地理区域。消防系统检测火灾和烟雾的能力取决于摄像机视野的视点和特定场景的深度。此外,烟雾和火焰具有不同的动态行为和物理特性。因此,与仅定位烟雾区域或火焰区域的系统相比,同时定位烟雾和火焰区域的消防系统可以更早地检测到火灾[16]。

Fire detection systems implemented with vision sensors are generally composed of three units: motion detection, candidate object segmentation, and flame or smoke blob detection. In these systems, cameras are usually assumed to be fixed, and initial motion is detected using variations of image subtraction and background modeling techniques [5]. Motion detection can be followed by candidate object segmentation using color information [6, 7], pixel variability measures [8], texture cues [9], or optical flow field distribution [10]. Flame and smoke blobs can be identified by image separation approaches [11, 12] or by modeling the flame or smoke mathematically [13-15]. After motion detection, the geographical regions of fire can be identified by using morphological image processing methods as a preprocessing step. The ability of a fire detection system to detect fire and smoke depends on the camera's viewpoint and the depth of the specific scene. Moreover, smoke and flame have different dynamic behaviors and physical properties. Therefore, a system that locates both smoke and flame regions can detect a fire considerably earlier than a system that locates only the smoke region or only the flame region [16].
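The three-unit pipeline described above can be illustrated with a minimal Python/NumPy sketch. It assumes a fixed camera and uses plain frame-versus-background differencing for motion detection. Candidate segmentation uses a toy fire-color rule (bright red with R > G > B), and blob detection uses 4-connected labelling. The thresholds, the color rule, and all function names are illustrative assumptions and are not taken from any of the cited systems.

```python
import numpy as np
from collections import deque


def motion_mask(frame, background, thresh=30.0):
    """Unit 1: motion detection by background subtraction on grey levels."""
    grey_frame = frame.astype(float).mean(axis=2)
    grey_bg = background.astype(float).mean(axis=2)
    return np.abs(grey_frame - grey_bg) > thresh


def color_mask(frame, min_red=180):
    """Unit 2: candidate segmentation with a toy fire-color rule (bright, R > G > B)."""
    r, g, b = (frame[..., c].astype(float) for c in range(3))
    return (r > min_red) & (r > g) & (g > b)


def find_blobs(mask, min_size=4):
    """Unit 3: 4-connected component labelling; returns blobs of >= min_size pixels."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    blobs = []
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or seen[y, x]:
                continue
            seen[y, x] = True
            queue, pixels = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_size:
                blobs.append(pixels)
    return blobs


def detect_fire_blobs(frame, background):
    """Chain the three units: moving AND fire-colored pixels, grouped into blobs."""
    return find_blobs(motion_mask(frame, background) & color_mask(frame))


# Usage: a static fire-colored object is ignored; a newly appearing one is detected.
background = np.full((20, 20, 3), 90, dtype=np.uint8)
background[0:3, 15:18] = (230, 120, 40)   # static, fire-colored, not moving
frame = background.copy()
frame[5:9, 5:9] = (230, 120, 40)          # newly appearing fire-colored region
blobs = detect_fire_blobs(frame, background)
print(len(blobs), len(blobs[0]))          # 1 16
```

Requiring both motion and color, as the surveyed systems do, is what keeps the static fire-colored object out of the result.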

研究人员已经提出了各种基于智能和视觉的火灾探测系统,尤其是在过去的十年中。他们使用一些方法和系统,例如相机(例如光学相机[17]),智能技术(例如神经网络[18-20],粒子群优化[21]和模糊逻辑[22]),无线网络(例如,无线传感器网络和卫星系统[17]),机器人系统(例如,无人机系统[23、24])和图像处理技术(例如,RGB颜色[25])。本文介绍了一些最新的基于视觉的智能消防系统的主要特征,优点和/或缺点。这些消防系统的设计和实现是为了检测不同环境(例如森林[26])中的火灾。似乎使用智能和基于视觉的技术已对火灾探测系统的性能产生了显着影响。使用智能技术(例如卷积神经网络),根据火灾的各种特征(例如火焰,烟雾和颜色)来组织最多的火灾检测系统。也就是说,这些系统之间有许多共同的特征。因此,本文根据环境类型对它们进行分类,包括森林火灾检测系统和所有室内和室外环境的火灾检测系统。

Researchers have presented various intelligent and vision-based fire detection systems, especially in the last decade. These systems use methods and components such as cameras (e.g., optical cameras [17]), intelligent techniques (e.g., neural networks [18-20], particle swarm optimization [21], and fuzzy logic [22]), wireless networks (e.g., wireless sensor networks and satellite systems [17]), robotic systems (e.g., unmanned aerial systems [23, 24]), and image processing techniques (e.g., RGB color [25]). This paper describes the main features, advantages, and/or drawbacks of some of the latest intelligent and vision-based fire systems. These systems are designed and implemented to detect fire occurrences in different environments (e.g., forests [26]). The use of intelligent and vision-based techniques appears to have had a noticeable effect on the performance of fire detection systems. Most fire detection systems are built on various features of fire (e.g., flame, smoke, and color) using intelligent techniques (e.g., convolutional neural networks). That is, these systems share many common features. Therefore, this paper categorizes them by environment type: detection systems for forest fires and fire detection systems for all indoor and outdoor environments.

其余论文的结构如下。 第2节介绍了火灾探测系统应考虑的环境类型。 第3节介绍了一些基于智能和视觉的火灾探测系统,它们在不同的环境中使用各种方法(例如卷积神经网络)。 第4节根据不同的参数(例如检测率,真实阳性率和错误警报率)评估最新检测系统的仿真和/或实验结果。 第五部分讨论了智能火灾探测系统的基准数据集。 最后,第6节总结了论文。

The rest of the paper is organized as follows. Section 2 presents the types of environments that should be considered by fire detection systems. Section 3 describes some of the intelligent and vision-based fire detection systems that use various methods (e.g., convolutional neural networks) in different environments. Section 4 evaluates the simulation and/or experimental results of the latest detection systems according to different parameters such as detection rate, true positive rate, and false alarm rate. Section 5 discusses benchmark datasets for intelligent fire detection systems. Finally, Section 6 concludes the paper.

2. 环境类型

2. Types of environments

火灾可能会破坏各种类型的环境。 应当针对此类环境设计和实施消防系统,以防止严重的生命损失,并且还应保留宝贵的自然和个人财产。 本节介绍了三个重要环境的一些物理特征,包括建筑物,森林和矿山。

Various types of environments may be destroyed by fires. Fire systems should be designed and implemented for such environments to prevent terrible loss of life and to preserve valuable natural and personal property. This section presents some physical features of three important environments: buildings, forests, and mines.

荒地火灾可能会破坏建筑物组件和系统的暴露。根据暴露条件,随着野火向社区和城市地区蔓延,建筑物可能会着火。因此,通过了解缓解策略和结构的着火潜力,可以完全防止或大大减少着火。社区设计,规划实践,主动/被动抑制机制和基于工程的方法是一些缓解策略,可以减少火灾的财务和财产损失。 Hakes等。 [27]代表了典型的建筑构件和系统,这些构件和系统可以被认为是城市地区潜在的起火途径。他们讨论了一种具有主要组件和系统的结构,包括屋顶,山谷,天窗,檐槽,屋檐,山墙,通风口,壁板,甲板,围栏和覆盖物。当结构暴露于辐射,火斑和火焰接触时,结构上的某些组件将直接开始燃烧或先闷燃,然后过渡到明火。由于摇晃的木瓦和带状瓦的屋顶容易燃烧,有许多缝隙和大面积的易燃材料,因此通常会增加火灾危险[28]。檐沟中使用的碎屑可能会被火印点燃,尤其是在檐沟处。因此,檐槽是引爆房屋的潜在途径之一。在城市家庭中,屋檐和通风口被认为是可能的着火源。此外,通风孔会导致通向燃烧的品牌并渗入建筑物内部[29]。由于暴露于辐射热或直接接触火焰,通常认为壁板材料在起火时会着火。火斑的堆积会点燃附近的植被或其他燃料(例如,木桩和覆盖物),而在结构基础周围没有适当的间隙。它可能会导致辐射热暴露,并使外墙的壁板材料直接接触火焰。甲板,围栏和覆盖物是结构的其余材料,可能会引起最潜在的着火源[30-32]。

Wildland fires can expose building components and systems to ignition. Depending on the exposure conditions, structures may ignite as a wildland fire spreads toward communities and urban areas. Thus, ignition can be fully prevented or considerably reduced by understanding the mitigation strategies and the ignition potential of structures. Community design, planning practices, active/passive suppression mechanisms, and engineering-based methods are some of the mitigation strategies that can reduce the financial and property losses of fire occurrences. Hakes et al. [27] have described typical building components and systems that can be considered potential pathways to ignition in urban areas. They discussed a structure whose main components and systems include the roof, valley, dormer, gutter, eave, gable, vent, siding, deck, fence, and mulch. When a structure is exposed to radiation, firebrands, and flame contact, some of its components may begin to flame directly, or first smolder and then transition to flaming ignition. Since wood shake and shingle roofs ignite easily, having many crevices and a large surface area of flammable material, they often increase fire risk [28]. Debris accumulated in gutters may be ignited by firebrands, especially at the roof-gutter intersection. Thus, gutters are one of the potential pathways to the ignition of a home. Eaves and vents are identified as possible sources of ignition in homes in urban areas. Besides, vents can open a path through which burning brands penetrate the interior of a structure [29]. Siding materials are often considered to ignite during fires because of radiant heat exposure or direct flame contact. Without proper clearance around the foundation of a structure, accumulated firebrands can ignite nearby vegetation or other fuels (e.g., wood piles and mulch).
This can cause radiant heat exposure and direct flame contact on the siding materials of exterior walls. Decks, fences, and mulch are the remaining elements of a structure that may be among the most likely sources of ignition [30-32].

森林在大多数方面保护着地球的生态平衡。当森林大火蔓延到大片区域时,将相应地通知大火。因此,有时无法控制和停止它。森林大火造成了巨大损失,对环境和大气造成了不可挽回的破坏,对生态也造成了不可挽回的破坏。森林火灾不仅造成人员伤亡,宝贵的自然环境和个人财产(包括数百所房屋和数千公顷森林)的悲剧性丧失,而且对生态健康和环境保护构成重大威胁。研究表明,森林大火在大气和生态环境中增加了30%的二氧化碳(CO2)和大量的烟雾[33]。此外,森林大火会造成可怕的后果和长期灾难性影响,例如全球变暖,对当地天气模式的不利影响以及动植物稀有物种的灭绝[17]。 Sahin [34]提出了森林火灾探测系统的示例基础设施,但没有任何基于视觉的智能技术。该系统包括各种元素,尤其是通信通道,中央分类器和移动生物传感器。通信信道由基于卫星的系统和几个接入点提供,以覆盖森林环境的所有位置。中央分类器用于通过访问点对移动生物传感器的读数进行分类,并且还可以通过使用决策支持系统来实现。移动生物传感器是该系统最重要的部分,可传输温度或辐射水平的任何变化,从而将动物的地理位置发送到访问点。

Forests protect the earth's ecological balance in many respects. A forest fire is often noticed only after it has spread over a large area, so it is sometimes impossible to control and stop. Forest fires cause great losses and irrevocable damage to the environment, the atmosphere, and the ecology. Forest fire not only causes the tragic loss of lives, valuable natural environments, and personal property (including hundreds of houses and thousands of hectares of forest), but also poses a great danger to ecological health and environmental protection. Research shows that forest fires increase carbon dioxide (CO2) by 30% and add huge amounts of smoke to the atmosphere and ecology [33]. Furthermore, forest fires cause terrible consequences and long-term disastrous effects such as global warming, negative effects on local weather patterns, and the extinction of rare species of fauna and flora [17]. Sahin [34] has presented a sample infrastructure for a forest fire detection system that does not use any intelligent or vision-based technique. This system includes various elements, especially communication channels, a central classifier, and mobile biological sensors. The communication channels are provided by a satellite-based system and several access points to cover all locations of the forest environment. The central classifier categorizes the readings of the mobile biological sensors received via the access points and is implemented using a decision support system. The mobile biological sensors are the most important parts of this system: they transmit any change in temperature or radiation level, along with the geographical location of the animals, to the access points.

由于地下煤矿含有煤尘,甲烷和其他有毒气体[35],因此它们被认为是非常危险和危险的环境[36]。煤尘或甲烷爆炸的结果是导致采矿业约33.8%死亡的主要原因。最近的报道表明,盐范围地区煤矿的瓦斯积累已引起约38%的地下煤矿事故[37]。地下煤矿中存在各种有毒气体,包括甲烷气,一氧化碳(CO),CO2和硫化氢(H2S),这些气体会严重危害人体[38]。因此,针对地下矿山环境的有效监控系统可以在很大程度上确保矿工和矿山财产的安全。 Jo和Khan [39]提出了一种针对Hassan Kishore煤矿的预警安全系统,该系统使用物联网(IoT)而不是使用任何低成本,智能的,基于视觉的系统。该系统使用安装在各个位置的固定节点和移动节点来检测现象数据。此外,路由器节点专用于每个部分,以收集数据,然后通过网关节点将读数数据发送到基站。

Since underground coal mines contain coal dust, methane, and other toxic gases [35], they are considered very hazardous environments [36]. Coal dust or methane gas explosions are the main cause of approximately 33.8% of deaths in the mining sector. Recent reports indicate that gas accumulation in the coal mines of the Salt Range region has caused approximately 38% of underground mine accidents [37]. Underground coal mines contain various toxic gases, including methane, carbon monoxide (CO), CO2, and hydrogen sulfide (H2S), that can seriously harm the human body [38]. Consequently, an efficient monitoring system for underground mine environments can largely guarantee the safety of miners and mine property. Jo and Khan [39] have presented an early-warning safety system for the Hassan Kishore coal mine that uses the Internet of Things (IoT) rather than a low-cost, intelligent, vision-based system. This system uses stationary and mobile nodes installed in various locations to sense phenomenon data. Moreover, a router node is dedicated to every section to gather the readings and then transmit them toward a base station via a gateway node.

3. 使用智能和基于视觉的方法的火灾探测系统

3. Fire detection systems using intelligent and vision-based methods

可以组织火灾探测系统以在各种环境中使用。 由于每种环境都有一些特殊的规范,因此某些火灾探测可用于森林火灾,某些火灾探测可用于室外环境,而其他一些则可在所有环境中使用。 因此,本节将智能和基于视觉的火灾探测系统分为两组:森林火灾和所有环境。

Fire detection systems can be designed for use in various environments. Since every environment has its own specifications, some fire detection systems are suited to forest fires, some to outdoor environments, and others to all environments. Accordingly, this section divides the intelligent and vision-based fire detection systems into two groups: forest fires and all environments.

3.1. 森林火灾智能检测系统

3.1. Intelligent detection systems for forest fires

在图像分类和基于视觉的系统中,卷积神经网络(CNN)被认为是一种高性能技术[40]。它可用于提高火灾通知系统的检测准确性,最大程度地减少火灾,并减少社会和生态后果。张等。 [41]提出了一种基于深度学习的森林火灾检测系统。他们已经通过使用与深层CNN关联的完整图像和细粒补丁火分类器来训练该系统。该系统使用两种情况:(i)通过从头开始火灾补丁学习二进制分类器,以及(ii)学习全图像火灾分类器,然后应用细粒度补丁分类器。作者从在线资源中收集了火灾探测数据集[42],这些数据已在最新的火灾探测文献中得到应用。该数据集包括从森林环境获得的25个视频。它包含21个正(激发)序列和4个负(非激发)序列。这些文献中的大多数都报告了图像级别的评估,而另一些文献还报告了补丁级别的检测准确性。作者使用以下方程式来检测火灾的发生:

Convolutional neural networks (CNNs) are considered a high-performance technique for image classification and vision-based systems [40]. They can be used to improve the detection accuracy of fire notification systems, minimize fire disasters, and reduce their social and ecological consequences. Zhang et al. [41] have presented a deep-learning-based detection system for forest fires. They trained this system using a full-image fire classifier and a fine-grained patch fire classifier, both based on a deep CNN. The system uses two scenarios: (i) training a binary patch classifier on fire patches from scratch and (ii) learning a full-image fire classifier and then applying the fine-grained patch classifier. The authors collected a fire detection dataset from online resources [42] that have been used in recent fire detection literature. This dataset includes 25 videos obtained from forest environments: 21 positive (fire) sequences and 4 negative (non-fire) sequences. Most of the related studies report image-level evaluation, while some also report patch-level detection accuracy. The authors used the following equations to evaluate fire detection performance:

$$\text{Accuracy}=\frac{\mathrm{NTP}+\mathrm{NTN}}{\mathrm{POS}+\mathrm{NEG}};\quad \text{Detection rate}=\frac{\mathrm{NTP}}{\mathrm{POS}};\quad \text{False alarm rate}=\frac{\mathrm{NFN}}{\mathrm{NEG}} \tag{1}$$

其中NTP是真正数,NTN是真负数,NFN是假负数,POS是正数,NEG是负数。 支持向量机(SVM)是一种机器学习算法,用于评估数据的分类和回归过程。 作者还使用了SVM算法来评估该检测系统的性能。

where NTP is the number of true positives, NTN is the number of true negatives, NFN is the number of false negatives, POS is the number of positives, and NEG is the number of negatives. A support vector machine (SVM) is a machine learning algorithm for classification and regression. The authors also used the SVM algorithm to evaluate the performance of this detection system.
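The metrics in Eq. (1) reduce to simple arithmetic on the counts. The following minimal sketch follows the definitions exactly as written above, including the NFN count in the false alarm rate. The function and argument names are hypothetical.

```python
def eq1_metrics(ntp, ntn, nfn, pos, neg):
    """Accuracy, detection rate and false alarm rate exactly as defined in Eq. (1)."""
    accuracy = (ntp + ntn) / (pos + neg)
    detection_rate = ntp / pos
    false_alarm_rate = nfn / neg   # Eq. (1) uses the NFN count here
    return accuracy, detection_rate, false_alarm_rate


acc, dr, far = eq1_metrics(ntp=90, ntn=45, nfn=5, pos=100, neg=50)
print(acc, dr, far)   # 0.9 0.9 0.1
```

For example, 90 true positives out of 100 positives and 45 true negatives out of 50 negatives give an accuracy of 135/150 = 0.9.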

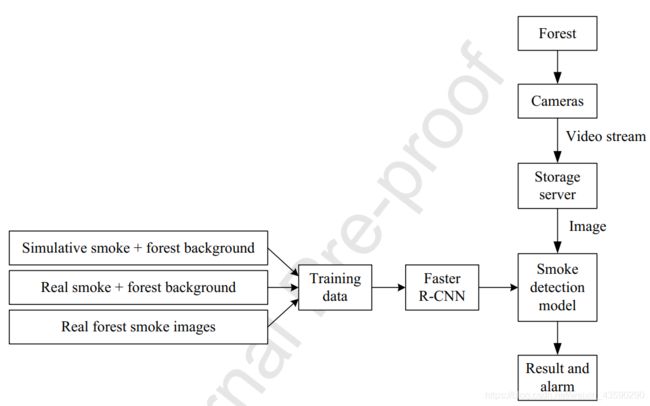

张等。 [20]提出了一种荒地森林火灾烟雾探测系统,该系统通过使用基于区域的更快CNN(R-CNN)和合成烟雾图像来工作。作者应用了两种方法来合成大量的森林烟雾图像,以防止传统视频烟雾检测技术中复杂特征图像的处理。第一种方法是从绿色背景中提取真实烟雾,然后将其插入森林背景中。在第二个步骤中,渲染软件会生成合成烟雾,像以前一样插入森林背景中。在该系统中,整个网络的输入是原始图像,无需使用任何预处理或块分割。因此,处理速度比前两代要快。图1显示了该系统的主要元素和工作流程。通过使用更快的R-CNN检测真实场景的烟雾,真实的森林烟雾图像可以提高训练数据的丰富度。该系统着重于用于训练过程的真实烟雾与合成烟雾之间的差异。作者已经在模拟烟雾+森林背景数据集(即SF数据集)和真实烟雾+森林背景数据集(即RF数据集)上训练了烟雾检测模型。

Zhang et al. [20] have presented a wildland forest fire smoke detection system based on Faster region-based CNN (Faster R-CNN) and synthetic smoke images. The authors applied two approaches to synthesize a large number of forest smoke images, avoiding the complex image feature processing of traditional video smoke detection techniques. In the first approach, real smoke is extracted from a green background and inserted into a forest background. In the second, synthetic smoke generated by rendering software is likewise inserted into a forest background. In this system, the input of the whole network is the original image, without any preprocessing or block segmentation. Thus, its processing speed is faster than that of the two prior generations (R-CNN and Fast R-CNN). Fig. 1 shows the main elements and workflow of this system. When Faster R-CNN is used to detect smoke in real scenes, real forest smoke images can improve the richness of the training data. The system focuses on the difference between the real and synthetic smoke used in the training process. The authors trained smoke detection models on both the simulated smoke + forest background dataset (SF dataset) and the real smoke + forest background dataset (RF dataset).

图1. 使用更快的R-CNN [20]进行野外森林火灾烟雾探测的整体视图。

Fig. 1. Overall view of wildland forest fire smoke detection using Faster R-CNN [20].
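The first synthesis approach of [20] extracts real smoke from a green background and inserts it into a forest background. It can be sketched as chroma keying followed by alpha blending. This is an assumption about how such compositing might be done, not the procedure of [20]: the green-dominance threshold, the fixed opacity, and the function names are hypothetical. The returned bounding box shows how an inserted smoke region could serve as a detection label for training.

```python
import numpy as np


def green_key_alpha(smoke_rgb, dominance=40.0):
    """Hypothetical chroma key: alpha 0 where green exceeds red and blue by `dominance`."""
    f = smoke_rgb.astype(float)
    backdrop = (f[..., 1] - np.maximum(f[..., 0], f[..., 2])) > dominance
    return np.where(backdrop, 0.0, 1.0)


def insert_smoke(forest_rgb, smoke_rgb, top, left, opacity=0.6):
    """Alpha-blend keyed smoke into the forest image; also return a tight bounding box."""
    out = forest_rgb.astype(float)
    h, w = smoke_rgb.shape[:2]
    alpha = green_key_alpha(smoke_rgb) * opacity          # (h, w) blending weights
    a = alpha[..., None]
    region = out[top:top + h, left:left + w]
    out[top:top + h, left:left + w] = a * smoke_rgb.astype(float) + (1.0 - a) * region
    ys, xs = np.nonzero(alpha > 0)
    box = None
    if ys.size:
        # (x_min, y_min, x_max, y_max) in forest-image coordinates
        box = (left + int(xs.min()), top + int(ys.min()),
               left + int(xs.max()) + 1, top + int(ys.max()) + 1)
    return np.clip(out.round(), 0, 255).astype(np.uint8), box


forest = np.full((20, 20, 3), (30, 80, 30), dtype=np.uint8)
smoke = np.full((6, 6, 3), (0, 255, 0), dtype=np.uint8)    # green-screen backdrop
smoke[2:4, 1:5] = (200, 200, 200)                         # grey "smoke" pixels
composite, box = insert_smoke(forest, smoke, top=5, left=5, opacity=0.5)
print(box, composite[7, 6].tolist())   # (6, 7, 10, 9) [115, 140, 115]
```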

无人机(UAV)是一种能够通过气动升力飞行并在没有机上乘员的情况下进行引导的机器。半自主或自主飞行可能是可恢复的或可消耗的。历史上,这种车辆的重要应用之一是在监视和检查领域[44]。因此,我们可以利用各种类型的空中交通工具来收集各个区域(例如森林)的基于图像的信息。克鲁兹等。 [45]提出了一种森林火灾探测方法,该方法可使用一种新的颜色指数应用于无人航空系统。他们着重于检测感兴趣区域,在这些区域中,关于颜色指数的计算,是火焰和烟雾。该系统包括转换单元,用于将图像从红色,绿色和蓝色(RGB)三波段空间转换为灰度波段。本机使用图像分量之间的一些算术运算来增强所需的颜色。然后,阈值处理将结果二值化以将感兴趣区域与其余区域分开。颜色指数通常被收集为植被指数,用于基于机器视觉的农业自动化过程,特别是用于农作物和杂草的检测。作者已尝试在有效的检测过程和最短执行时间之间建立联系。因此,他们使用了从图像中收集的额外数据(例如,火的大小,延伸速度和坐标)来对森林大火进行快速反应。通常,这项工作使用几个单位,包括归一化,颜色成分之间的关系,火灾探测指数和森林火灾探测指数。在归一化单元中,如下对所有组件进行归一化,以在不同的光照条件下获得更好的鲁棒性

An unmanned aerial vehicle (UAV) is a machine capable of flying by aerodynamic lift and being guided without an onboard crew. It may be recoverable or expendable, and can fly semi-autonomously or autonomously. Historically, one of the important applications of this vehicle has been in the fields of surveillance and inspection [44]. Thus, various types of aerial vehicles can be used to collect image-based information over various areas (e.g., forests). Cruz et al. [45] have presented a forest fire detection approach for unmanned aerial systems using a new color index. They focused on detecting regions of interest that correspond to flames and smoke by calculating color indices. This system includes a transformation unit that converts images from the red, green, and blue (RGB) three-band space to a single grey-scale band. This unit uses arithmetic operations between image components to enhance the desired colors. Afterwards, a thresholding process binarizes the result to separate the regions of interest from the rest. Color indices are frequently used as vegetation indices in machine vision based agricultural automation, especially for the detection of crops and weeds. The authors attempted to balance an effective detection process against minimum execution time. Thus, they used extra data gathered from the images (e.g., the size of the fire, its extension velocity, and its coordinates) to enable a rapid reaction to forest fires. In general, this work uses several units: normalization, relation between color components, fire detection index, and forest fire detection index. In the normalization unit, all components are normalized as follows to obtain better robustness against different lighting conditions:

$$r=\frac{R}{S},\quad g=\frac{G}{S},\quad b=\frac{B}{S};\qquad \text{where } S=R+G+B \tag{2}$$

仔细评估感兴趣区域的组成部分之间的关系,以改善所需的色调并为算术运算找到合适的方程式。 代表燃烧过程的颜色或关注点由较高的红色通道值而不是蓝色和绿色组成。 火灾探测指标根据归一化颜色计算如下

The relation between the components of the regions of interest is evaluated carefully in order to improve the desired color tones and to find appropriate equations for the arithmetic operations. The colors of interest, which represent a combustion process, have high red-channel values relative to the blue and green channels. The fire detection index is calculated from the normalized colors as follows:

$$\text{Fire detection index}=2r-g-b \tag{3}$$

当图像中有火焰时,可以使用以上公式。 最后,森林火灾检测指标用于赋予火灾和烟雾中存在的颜色更大的灵活性和重要性。 通过使用如下所示的归一化颜色和调节加权因子(ρ)进行计算

Eq. (3) can be used when there is a flame in the image. Finally, the forest fire detection index gives more flexibility and importance to the colors present in fire and smoke. It is calculated from the normalized colors and a regulative weighting factor (ρ) as follows:

$$\text{Forest fire detection index}=r(2\rho+1)-g(\rho+2)+b(1-\rho) \tag{4}$$

ρ 的不同值确定此索引的各种结果,以识别大量的红色,黄色,棕色和其他一些颜色色调。 此后,将对获得的图像进行二值化,并且还将通过检测过程标记分割的区域。

Different values of ρ produce different results for this index, making it possible to identify high amounts of red, yellow, brown, and some other color tonalities. Afterwards, the obtained images are binarized and the segmented regions are labeled by the detection process.
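Eqs. (2)-(4) can be evaluated per pixel with NumPy. This sketch assumes an RGB image array and an arbitrary binarization threshold. The zero-sum guard for black pixels and the function names are illustrative choices, not details from [45].

```python
import numpy as np


def normalized_rgb(img):
    """Eq. (2): r = R/S, g = G/S, b = B/S with S = R + G + B."""
    f = img.astype(float)
    s = f.sum(axis=2)
    s[s == 0] = 1.0          # assumption: black pixels map to r = g = b = 0
    return f[..., 0] / s, f[..., 1] / s, f[..., 2] / s


def fire_detection_index(img):
    """Eq. (3)."""
    r, g, b = normalized_rgb(img)
    return 2 * r - g - b


def forest_fire_detection_index(img, rho):
    """Eq. (4) with the regulative weighting factor rho."""
    r, g, b = normalized_rgb(img)
    return r * (2 * rho + 1) - g * (rho + 2) + b * (1 - rho)


def binarize(index, thresh):
    """Thresholding step; the threshold value is an assumption."""
    return index > thresh


img = np.array([[[200, 100, 0], [0, 200, 0]],        # orange, green
                [[100, 100, 100], [0, 0, 0]]],       # grey, black
               dtype=np.uint8)
print(binarize(fire_detection_index(img), 0.3))
```

A neutral grey pixel (r = g = b = 1/3) yields 0 for both indices, for any ρ, because the coefficients of Eq. (3) and of Eq. (4) each sum to zero. Only pixels whose chromaticity departs from grey toward red can therefore pass a positive threshold.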

Yuan等。 [23]提出了一种森林火灾探测方法,该方法利用无人飞行器同时使用颜色和运动特征。 第一步,通过使用颜色决定规则和火的色度特征,提取火色像素作为火候选区域。 然后,通过Horn和Schunck光流算法计算候选区域的运动矢量。 光流结果还可以预测运动特征,以将火与其他火类似物区分开。 通过对运动矢量执行阈值处理和形态运算来确定二进制图像。 最后,使用斑点计数器方法在每个二进制图像中识别火灾的地理位置。

Yuan et al. [23] have presented a forest fire detection method that uses both color and motion features with the aid of unmanned aerial vehicles. In the first step, fire-colored pixels are extracted as fire candidate regions by using a color decision rule and the chromatic features of fire. Afterwards, the motion vectors of the candidate regions are calculated by the Horn and Schunck optical flow algorithm. The optical flow results also provide motion features for distinguishing fires from other fire-like objects. Binary images are obtained by applying thresholding and morphological operations to the motion vectors. Finally, the geographical locations of the fires are identified in each binary image using a blob counter approach.
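The optical flow step can be illustrated with a compact Horn and Schunck iteration in NumPy, followed by thresholding the motion-vector magnitudes into a binary image. The derivative estimates (`np.gradient` on the mean of the two frames), the smoothness weight `alpha`, the iteration count, and the threshold are simplifying assumptions. The morphological operations and blob counting of [23] are omitted.

```python
import numpy as np


def _local_average(f):
    """Horn-Schunck neighbourhood average: 1/6 for edge neighbours, 1/12 for diagonals."""
    p = np.pad(f, 1, mode="edge")
    edges = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
    diagonals = p[:-2, :-2] + p[:-2, 2:] + p[2:, :-2] + p[2:, 2:]
    return edges / 6.0 + diagonals / 12.0


def horn_schunck(frame1, frame2, alpha=0.5, n_iter=100):
    """Dense optical flow (u, v) between two grey-level frames."""
    f1 = frame1.astype(float)
    f2 = frame2.astype(float)
    iy, ix = np.gradient((f1 + f2) / 2.0)   # simplified spatial derivatives
    it = f2 - f1                            # temporal derivative
    u = np.zeros_like(f1)
    v = np.zeros_like(f1)
    for _ in range(n_iter):
        u_avg, v_avg = _local_average(u), _local_average(v)
        common = (ix * u_avg + iy * v_avg + it) / (alpha ** 2 + ix ** 2 + iy ** 2)
        u = u_avg - ix * common
        v = v_avg - iy * common
    return u, v


def motion_binary(u, v, thresh):
    """Threshold the motion-vector magnitude into a binary image."""
    return np.hypot(u, v) > thresh


f1 = np.zeros((30, 30))
f1[10:20, 10:20] = 1.0          # bright square
f2 = np.roll(f1, 1, axis=1)     # the same square shifted one pixel to the right
u, v = horn_schunck(f1, f2)
mask = motion_binary(u, v, thresh=0.05)
print(u.mean() > 0, mask.any())
```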

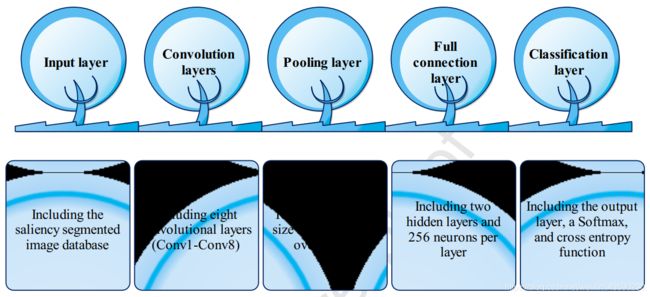

赵等。 [24]提出了一种使用无人机捕获的图像帧的基于显着性检测和深度学习的野火识别系统。他们提供了一种用于快速定位和分割航空图像中核心火区的检测算法。该系统可以大大防止由于直接调整大小而导致的功能丢失。此外,它还用于使用15层自学习深度CNN架构的标准火灾图像数据集的形成和数据扩充。该过程导致产生自学习的火特征校正器和分类器系统。作者考虑了一些不可忽视的挑战,尤其是火区的定位,固定训练图像的大小以及有限数量的鸟瞰野火图像。野火定位和分割算法由显着性检测和逻辑回归分类器通过两个步骤组成:感兴趣区域建议和使用机器学习的区域选择。第一步,通过显着性检测方法提取关注区域。因此,计算感兴趣区域的颜色和纹理特征以指定可能的着火区域。在第二步中,系统使用两个逻辑回归分类器来识别感兴趣区域内的特征向量。如果此向量属于火焰或烟雾,则这些区域将被系统分割。图2说明了此系统中使用的深度CNN模型的总体元素。它由五层组成,包括输入层,卷积层,池化层,完整连接层和分类层。这种自学习结构可以提取从低到高的野火特征。输入层将输入数据输入模型,该模型是显着性分割的图像数据库,具有三个随机RGB通道的颜色。卷积层包括八个卷积层,以从低到高提取火特征。池化层捕获可变形的零件,减小卷积输出的尺寸,并避免过度拟合。完整连接层包含两个隐藏层,每层256个神经元,以及一个权重初始化。它减少了神经元之间的依赖性,因此减少了网络的过度拟合。分类层包括全连接神经网络的输出层,Softmax和交叉熵函数。总结了用于识别特定图像的先前抽象的高级功能。对网络进行训练,直到获得的训练误差等于可以忽略的值为止。

Zhao et al. [24] have presented a saliency detection and deep-learning-based wildfire identification system that uses image frames captured by a UAV. They offered a detection algorithm for the fast localization and segmentation of core fire areas in aerial images. This algorithm can considerably reduce the feature loss caused by direct resizing. Moreover, it is applied to the formation and data augmentation of a standard fire image dataset for a 15-layer self-learning deep CNN architecture. This process produces a self-learning fire feature extractor and classifier system. The authors considered some non-negligible challenges, especially localization of the fire area, fixed training image size, and the limited number of aerial-view wildfire images. The wildfire localization and segmentation algorithm combines saliency detection and logistic regression classifiers in two steps: region of interest proposal and region selection using machine learning. In the first step, regions of interest are extracted by the saliency detection method, and their color and texture features are calculated to identify possible fire regions. In the second step, the system uses two logistic regression classifiers to classify the feature vector of each region of interest. If this vector belongs to flame or smoke, the region is segmented by the system. Fig. 2 illustrates the overall elements of the deep CNN model used in this system. It consists of five kinds of layers: an input layer, convolutional layers, a pooling layer, a fully connected layer, and a classification layer. This self-learning structure can extract wildfire features from low to high level. The input layer feeds the input data, a saliency-segmented image database with three randomized RGB color channels, into the model. The convolutional part includes eight convolutional layers that extract fire features from low to high level.
The pooling layer captures deformable parts, reduces the dimension of the convolutional output, and helps avoid overfitting. The fully connected layer contains two hidden layers with 256 neurons each, together with a weight initialization. It reduces the dependency between neurons and, consequently, the network's overfitting. The classification layer includes the output layer of the fully connected network, a Softmax function, and a cross-entropy function. It combines the previously abstracted high-level features to recognize a given image. The network is trained until the training error becomes negligible.

图2. 显着性检测和基于深度学习的野火识别的总体元素[24]。

Fig. 2. Overall elements of a saliency detection and deep learning-based wildfire identification [24].
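The region-selection step of [24] relies on logistic regression over the color and texture features of each region of interest. The following minimal sketch assumes a hypothetical feature vector: mean normalized r, g, and b, plus the grey-level standard deviation as a crude texture cue. It trains with plain batch gradient descent. It fits a single binary classifier, whereas [24] uses two (flame and smoke), and the saliency-based proposal step is not shown. The patch generator in the usage lines produces synthetic data only.

```python
import numpy as np


def region_features(patch):
    """Hypothetical features: mean normalized r, g, b and grey-level std (texture cue)."""
    f = patch.astype(float)
    s = f.sum(axis=2, keepdims=True)
    s[s == 0] = 1.0
    chroma = (f / s).reshape(-1, 3).mean(axis=0)
    texture = f.mean(axis=2).std() / 255.0
    return np.append(chroma, texture)


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Batch gradient descent on the mean cross-entropy loss."""
    Xb = np.hstack([X, np.ones((len(X), 1))])     # append a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = _sigmoid(Xb @ w)
        w -= lr * Xb.T @ (p - y) / len(y)
    return w


def predict_proba(w, X):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return _sigmoid(Xb @ w)


rng = np.random.default_rng(0)


def random_patch(lo, hi):
    """Synthetic 8x8 patch with per-channel (R, G, B) value ranges [lo, hi)."""
    return np.stack([rng.integers(lo[c], hi[c], size=(8, 8)) for c in range(3)], axis=-1)


fire = [random_patch((200, 80, 0), (256, 160, 60)) for _ in range(20)]
other = [random_patch((20, 100, 20), (80, 180, 80)) for _ in range(20)]
X = np.array([region_features(p) for p in fire + other])
y = np.array([1.0] * 20 + [0.0] * 20)
w = train_logistic(X, y)
accuracy = ((predict_proba(w, X) > 0.5) == (y == 1)).mean()
print(accuracy)
```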

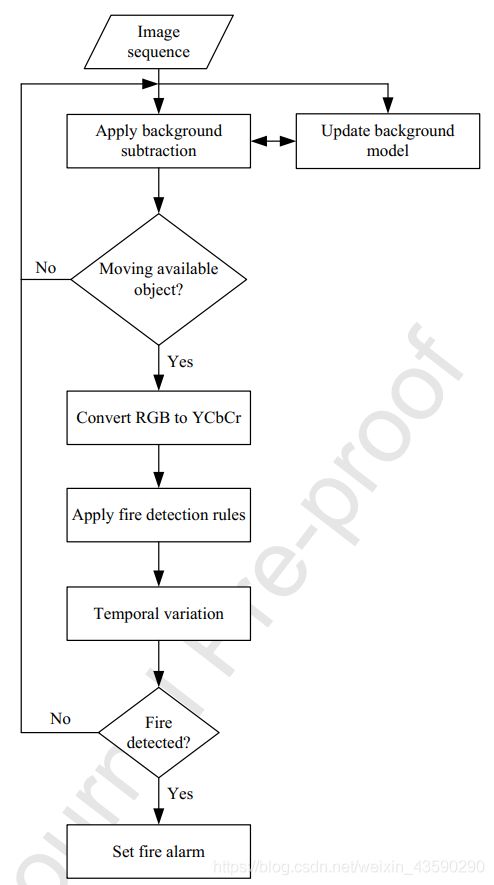

Mahmoud和Ren [26]提出了一种森林火灾检测系统,该系统应用了基于规则的图像处理技术和时间变化。 该系统使用背景减法跟踪包含区域检测的运动。 它将分段的运动区域从RGB转换为YCbCr颜色空间,并且还利用五种火灾检测规则来分离候选火灾像素。 值得注意的是,系统使用时间变化来在火焰对象和火焰颜色对象之间进行区分。 图3描绘了该系统的主要步骤和工作流程。 基于从输入设备收集的输入视频,在系统中使用背景减法和背景模型。 然后,将输入图像序列从RGB转换为YCbCr颜色空间,并相应地使用火灾检测规则和时间变化。 如果满足所有检测条件,则将启动火灾警报。

Mahmoud and Ren [26] have presented a forest fire detection system that applies rule-based image processing and temporal variation. The system uses background subtraction to detect moving regions. It converts the segmented moving regions from the RGB to the YCbCr color space and applies five fire detection rules to separate candidate fire pixels. Notably, the system uses temporal variation to distinguish fire from fire-colored objects. Fig. 3 depicts the main steps and workflow of this system. Background subtraction and a background model are applied to the input video gathered from the input device. Then, the input image sequence is converted from RGB to the YCbCr color space, and the fire detection rules and the temporal variation check are applied. If all the detection conditions are satisfied, a fire alarm is activated.

图3.通过基于规则的图像处理和时间变化[26]进行森林火灾检测的流程图。

Fig. 3. Flowchart of a forest fire detection by rule-based image processing and temporal variation [26].
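The rule-based stage of [26] can be sketched as follows. Background subtraction marks moving pixels, the frame is converted to YCbCr with the full-range ITU-R BT.601 (JPEG) coefficients, and five pixel rules select fire candidates. The five rules below are Y > Cb, Cr > Cb, and comparisons against the frame means of Y, Cb, and Cr. They are a commonly used illustrative set and an assumption; the exact rules of [26] are not reproduced here. The flicker ratio is likewise a hypothetical stand-in for the temporal-variation check.

```python
import numpy as np


def rgb_to_ycbcr(img):
    """Full-range ITU-R BT.601 (JPEG) RGB -> YCbCr conversion."""
    f = img.astype(float)
    r, g, b = f[..., 0], f[..., 1], f[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def moving_mask(frame, background, thresh=25.0):
    """Background subtraction: pixels whose largest channel change exceeds thresh."""
    diff = np.abs(frame.astype(float) - background.astype(float)).max(axis=2)
    return diff > thresh


def ycbcr_rules(frame):
    """Five illustrative YCbCr fire-pixel rules (assumed, not quoted from [26])."""
    y, cb, cr = rgb_to_ycbcr(frame)
    return ((y > cb) & (cr > cb)
            & (y > y.mean()) & (cb < cb.mean()) & (cr > cr.mean()))


def candidate_fire_pixels(frame, background):
    return moving_mask(frame, background) & ycbcr_rules(frame)


def flicker_ratio(candidate_masks):
    """Hypothetical temporal-variation cue: fraction of frame-to-frame state flips."""
    stack = np.asarray(candidate_masks, dtype=bool)
    return (stack[1:] != stack[:-1]).mean(axis=0)


background = np.full((20, 20, 3), 100, dtype=np.uint8)
frame = background.copy()
frame[5:9, 5:9] = (255, 140, 30)      # orange region absent from the background
mask = candidate_fire_pixels(frame, background)
print(mask.sum())                     # 16
```

A flickering flame tends to switch pixels in and out of the candidate mask from frame to frame. A static fire-colored object does not, which is the intuition behind a temporal-variation check.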

提出了粒子群优化[58,59],以提供可以在几行计算机代码中开发的非常简单的概念和范例。它仅需要原始数学运算符,并且就内存需求和处理速度而言,在计算上都是廉价的。 Khatami等。 [21]提出了一种使用基于粒子群优化和K-medoids聚类的图像处理技术的火焰检测系统。该系统应用转换矩阵来基于颜色特征描述基于新火焰的颜色空间。它是从特征提取和色彩空间转换方面简单介绍的,其中每个像素的色彩元素都可以直接提取以进行应用,而无需任何额外的分析。作者开发了基于火焰,转换矩阵的线性乘法以及样本图像的颜色属性的颜色空间。矩阵乘法生成差异色彩空间,以突出显示激发部分并使非激发部分变暗。通过粒子群优化和图像像素采样来计算色差转换矩阵的权重。此外,检测过程使用针对不同火灾图像实现的转换矩阵来实现快速简便的火灾检测系统。

Particle swarm optimization [58, 59] was proposed as a very simple concept and paradigm that can be implemented in a few lines of computer code. It needs only primitive mathematical operators and is computationally inexpensive in terms of both memory requirements and processing speed. Khatami et al. [21] have presented a flame detection system using image processing techniques based on particle swarm optimization and K-medoids clustering. This system applies a conversion matrix to define a new flame-based color space on the basis of color features. The approach is simple in terms of feature extraction and color space conversion, because the color elements of every pixel are used directly without any extra analysis. The authors developed the color space from fire flames, the linear multiplication of a conversion matrix, and the color properties of a sample image. The matrix multiplication generates a differentiating color space that highlights the fire part and dims the non-fire part. The weights of the color-differentiating conversion matrix are calculated by particle swarm optimization from sample pixels of an image. Furthermore, the detection process uses the conversion matrix obtained for different fire images to implement a fast and easy fire detection system.
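The following minimal global-best PSO in NumPy shows how PSO can fit color-conversion weights from sample pixels. It learns a single 3-element projection (one row of a conversion matrix) that maximizes a Fisher-style separation between fire and non-fire pixels. The fitness function, the PSO coefficients, and the reduction to one row are assumptions for illustration. The K-medoids clustering step of [21] is not shown, and the sample pixels are synthetic.

```python
import numpy as np


def separation(w, fire, non_fire):
    """Fisher-style fitness: squared gap of projected means over summed variances."""
    pf, pn = fire @ w, non_fire @ w
    return (pf.mean() - pn.mean()) ** 2 / (pf.var() + pn.var() + 1e-9)


def pso_maximize(fitness, dim, n_particles=20, iters=60,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best particle swarm optimization (maximization)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, (n_particles, dim))      # positions
    v = np.zeros_like(x)                                 # velocities
    pbest = x.copy()
    pbest_fit = np.array([fitness(p) for p in x])
    gbest = pbest[pbest_fit.argmax()].copy()
    for _ in range(iters):
        r1, r2 = rng.random((2, n_particles, dim))
        v = inertia * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = x + v
        fit = np.array([fitness(p) for p in x])
        improved = fit > pbest_fit
        pbest[improved] = x[improved]
        pbest_fit[improved] = fit[improved]
        gbest = pbest[pbest_fit.argmax()].copy()
    return gbest, pbest_fit.max()


def fit_color_weights(fire, non_fire, **pso_kwargs):
    """Learn one row of a color-conversion matrix; orient it so fire scores higher."""
    w, score = pso_maximize(lambda p: separation(p, fire, non_fire), dim=3, **pso_kwargs)
    if (fire @ w).mean() < (non_fire @ w).mean():
        w = -w
    return w, score


rng = np.random.default_rng(1)
fire = rng.uniform((200, 60, 0), (255, 150, 50), size=(200, 3))       # synthetic
non_fire = rng.uniform((40, 80, 40), (120, 160, 120), size=(200, 3))  # synthetic
w, score = fit_color_weights(fire, non_fire)
print(w, score)
```

Projecting each pixel onto `w` gives a one-channel "fire-differentiating" image in which fire pixels are brighter. This mirrors the highlight-and-dim effect described for the conversion matrix.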

3.2. Intelligent fire detection systems for all of the environments

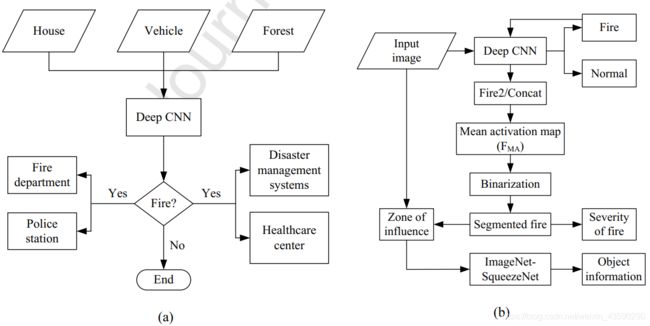

Muhammad et al. [19] have presented a fire detection system that uses a deep CNN and video information. They aim to avoid the time-consuming engineering of the conventional hand-crafted features used in fire detection systems. They have designed an early fire detection system for closed-circuit television (CCTV) surveillance networks in both indoor and outdoor environments based on deep learning techniques. The system can increase fire detection accuracy, reduce the number of false alarms, and minimize fire damage. It is built on the AlexNet architecture [43] with a transfer learning strategy. The authors propose an intelligent feature map selection algorithm, sensitive to fire regions, that selects appropriate feature maps from the convolutional layers of the trained CNN. These feature maps enable a more accurate segmentation that determines essential properties of the fire, such as its growth rate, its severity, and/or its burning degree. In addition, they apply a model pre-trained on the 1000 object classes of the ImageNet dataset to determine whether the fire is in a house, a car, a forest, or any other place. This helps firefighters prioritize the regions with the strongest fire. Fig. 4 shows an overview of the system and its fire localization process. Fig. 4(a) illustrates the overall fire detection process. Cameras are installed in various environments, such as houses, vehicles, and forests, to gather environmental data. A deep CNN controller then analyzes the gathered images to determine whether a fire has occurred. If a fire occurs, the fire department, police station, disaster management systems, and healthcare center are notified accordingly. Fig. 4(b) depicts the fire localization process of the deep CNN technique. From the input image, the deep CNN determines whether a fire is present or the environmental condition is normal. The system also segments the fire using a mean activation map and binarization to determine features such as the severity of the fire and object information.
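
The feature-map selection and mean-activation segmentation described above can be sketched with NumPy. This is a minimal sketch on synthetic activations. In [19] the maps come from the convolutional layers of a trained CNN. The scoring rule used here (mean activation inside minus outside an annotated fire region) and the default mean-value binarization threshold are assumptions, not the authors' exact algorithm.

```python
import numpy as np


def select_feature_maps(feature_maps, fire_mask, k=2):
    # feature_maps: (C, H, W) activations from one conv layer; fire_mask: (H, W) bool.
    # Score each channel by how much more it responds inside the fire region than outside.
    inside = feature_maps[:, fire_mask].mean(axis=1)
    outside = feature_maps[:, ~fire_mask].mean(axis=1)
    return np.argsort(inside - outside)[::-1][:k]


def segment_fire(feature_maps, selected, thresh=None):
    # Average the selected maps into a mean activation map, then binarize it
    # (default threshold: the mean of the map).
    mean_map = feature_maps[selected].mean(axis=0)
    if thresh is None:
        thresh = mean_map.mean()
    return mean_map > thresh


# Usage with synthetic activations (hypothetical values).
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :2] = True
fm = np.zeros((5, 4, 4))
fm[0] = 1.0            # uniform response
fm[1][~mask] = 2.0     # responds to background
fm[2][mask] = 5.0      # strongly fire-sensitive
fm[4][mask] = 3.0      # fire-sensitive
chosen = select_feature_maps(fm, mask, k=2)
seg = segment_fire(fm, chosen)
```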

Fig. 4. General characteristics of a fire detection system using the deep CNN [19]. (a) overview of the system; (b) fire localization process.

Analysis of image and video frames is another technique in fire detection systems that has already been studied by many researchers. Several video-based flame and fire detection algorithms in the literature use visual information such as the color, motion, and geometry of fire regions. These algorithms use techniques such as color clues for flame detection, pixel colors and their temporal variations, and temporal object boundary pixels [4, 7, 46, 47]. Celik et al. [48] have presented a real-time fire detector that uses both color information and a registered background scene. Statistical measurements of sample images determine the color information of fires. Three Gaussian distributions are used to model simple adaptive information of the scene; together they model the pixel values of the color information across all color channels. The authors build the fire detection system by combining foreground objects with the color statistics and by analyzing the output over consecutive frames. The system can detect fires at the earliest stage, except under explosive conditions in which smoke is detected before the fire starts.
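
The color-statistics step can be sketched as one Gaussian per RGB channel fitted to labeled fire pixels. This is one plausible reading of the "three Gaussian distributions" above, presented as an assumption rather than the exact model of [48]. The combination with a foreground mask follows the text. The `k = 2` standard-deviation acceptance band and the sample pixels are hypothetical.

```python
import numpy as np


def fit_color_model(fire_pixels):
    # One Gaussian per RGB channel, fitted to labeled fire pixels of shape (N, 3).
    fire_pixels = np.asarray(fire_pixels, dtype=float)
    return fire_pixels.mean(axis=0), fire_pixels.std(axis=0)


def fire_color_mask(image, mean, std, k=2.0):
    # A pixel is fire-colored if every channel lies within k standard deviations.
    return np.all(np.abs(np.asarray(image, dtype=float) - mean) <= k * std, axis=-1)


def detect(image, foreground, mean, std, k=2.0):
    # Keep only fire-colored pixels that also belong to the moving foreground.
    return fire_color_mask(image, mean, std, k) & foreground


samples = [[220, 120, 40], [240, 140, 60], [230, 130, 50]]
mean, std = fit_color_model(samples)
image = np.array([[[230, 130, 50], [100, 100, 200]]])
foreground = np.array([[True, True]])
result = detect(image, foreground, mean, std)
```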

Celik and Demirel [13] have proposed a rule-based generic color model that performs detection through flame pixel classification. The model uses YCbCr color space to separate luminance from chrominance, which works more effectively than other color spaces (e.g., RGB). This helps build a generic chrominance model for flame pixel classification. Rules originally developed in RGB and normalized rgb are translated to YCbCr color space. In addition, new rules are defined in YCbCr color space to reduce the harmful effects of illumination changes and to improve the efficiency of the detection process. If the illumination of an image changes, fire pixel classification rules in RGB color space do not work reliably, and the intensity and chrominance values of a pixel cannot be separated. The authors therefore model the color of fire through chrominance, which is more effective than modeling intensity. Since the conversion between RGB and YCbCr color spaces is linear, YCbCr color space is used to build an appropriate model of the fire pixels. The following equation gives the conversion from RGB to YCbCr color space:

$$
\begin{bmatrix}Y\\Cb\\Cr\end{bmatrix}=\begin{bmatrix}16\\128\\128\end{bmatrix}+\begin{bmatrix}0.2568&0.5041&0.0979\\-0.1482&-0.2910&0.4392\\0.4392&-0.3678&-0.0714\end{bmatrix}\begin{bmatrix}R\\G\\B\end{bmatrix}\tag{5}
$$
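
Eq. (5) and a set of chrominance rules can be sketched in NumPy. The sketch assumes 8-bit RGB inputs in [0, 255], which the offsets of 16 and 128 suggest. Four rules are used as an illustration of the kind of YCbCr rules described above: Y > Cb; Cr > Cb; Y, Cb, and Cr compared against the whole-image means; and |Cb - Cr| >= tau with tau = 40. They are assumptions here, not the complete rule set of [13].

```python
import numpy as np

# Offsets and matrix from Eq. (5); inputs are assumed to be 8-bit RGB in [0, 255].
OFFSET = np.array([16.0, 128.0, 128.0])
M = np.array([
    [0.2568, 0.5041, 0.0979],
    [-0.1482, -0.2910, 0.4392],
    [0.4392, -0.3678, -0.0714],
])


def rgb_to_ycbcr(rgb):
    # Apply Eq. (5) to an (..., 3) array of RGB pixels (row vectors, hence M.T).
    return OFFSET + np.asarray(rgb, dtype=float) @ M.T


def fire_pixel_mask(rgb, tau=40.0):
    ycc = rgb_to_ycbcr(rgb)
    y, cb, cr = ycc[..., 0], ycc[..., 1], ycc[..., 2]
    r1 = y > cb                                                    # flames are bright
    r2 = cr > cb                                                   # red dominates blue
    r3 = (y > y.mean()) & (cb < cb.mean()) & (cr > cr.mean())      # vs. image means
    r4 = np.abs(cb - cr) >= tau                                    # strong chrominance gap
    return r1 & r2 & r3 & r4


# Usage: a dark-gray 4 x 4 image with one orange pixel.
img = np.full((4, 4, 3), 50.0)
img[0, 0] = [255, 100, 0]
mask = fire_pixel_mask(img)
```

Gray pixels map to Cb = Cr = 128, so they fail the chrominance rules regardless of brightness. This illustrates why separating luminance from chrominance makes the rules less sensitive to illumination.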

Borges and Izquierdo [50] have presented a system that detects fires in videos based on color information. They consider visual features of fire such as area size, color, surface coarseness, skewness, and boundary roughness, so that the detection system applies to most fire occurrences. For example, skewness is a very useful feature because the red channel of fire zones frequently saturates. The system can be used both for surveillance and for automatic video classification, for example to retrieve fire catastrophes from databases of newscast content. It evaluates frame-to-frame changes in specific low-level features to identify potential fire zones. In fact, the system considers changes in motion-based features and a model of fire-occurrence probability to determine the locations of candidate fire zones.
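
Two of the low-level features mentioned above can be computed per candidate region: area and red-channel skewness. Their frame-to-frame change can then be tracked. This is a hedged sketch; only this subset of the features of [50] is shown, and the dictionary layout and helper names are hypothetical.

```python
import numpy as np


def skewness(values):
    # Third standardized moment; a red channel that piles up near saturation
    # (255) has a long left tail and therefore negative skewness.
    v = np.asarray(values, dtype=float)
    std = v.std()
    if std == 0:
        return 0.0
    return float(((v - v.mean()) ** 3).mean() / std ** 3)


def region_features(rgb, mask):
    # Low-level descriptors of one candidate region (a subset of those in [50]).
    red = np.asarray(rgb, dtype=float)[..., 0][mask]
    return {"area": int(mask.sum()), "red_mean": float(red.mean()), "red_skew": skewness(red)}


def feature_change(prev, curr):
    # Frame-to-frame change of each feature.
    return {name: abs(curr[name] - prev[name]) for name in curr}


# Usage: a mostly saturated red region that grows between two frames.
rgb = np.zeros((2, 2, 3))
rgb[..., 0] = [[255, 255], [255, 100]]
mask_t0 = np.array([[True, True], [False, False]])
mask_t1 = np.ones((2, 2), dtype=bool)
f0 = region_features(rgb, mask_t0)
f1 = region_features(rgb, mask_t1)
delta = feature_change(f0, f1)
```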

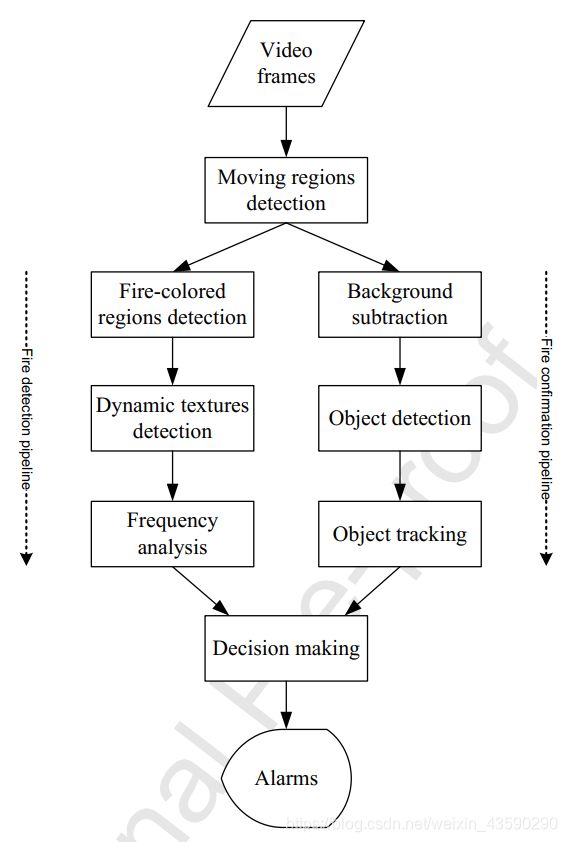

Gomes et al. [51] have presented a vision-based approach for fire detection using fixed smart surveillance cameras. They use background subtraction and context-based learning to improve the accuracy and robustness of the fire detection process. A color-based model of fire appearance and a wavelet-based model of the fire frequency signature are then developed to quickly discriminate between fire-colored moving objects and fire regions. The category and behavior of moving objects are used in the decision-making process to reduce false alarms in the presence of fire-colored moving objects. In addition, the camera-world mapping is estimated with a GPS-based calibration system in order to estimate the expected size of objects and to generate geo-referenced alarms. Fig. 5 shows the flowchart of this approach, organized as a processing pipeline. The workflow starts by detecting moving regions in the input images. A dynamic threshold controls motion detection based on the magnitude of each pixel's intensity variation across three consecutive frames. Finally, a decision is made from the results of frequency analysis and object tracking, and any alarm required by the system is raised.
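
The three-frame motion detection with a dynamic threshold can be sketched as follows. The rule used here marks a pixel as moving when the current frame differs from both previous frames by more than a per-pixel threshold. It then adapts the threshold of non-moving pixels toward a multiple of their background difference. This update form and its constants (`alpha`, `k`) are one common formulation, assumed here rather than taken from [51].

```python
import numpy as np


def three_frame_motion(prev2, prev1, curr, thresh):
    # Moving if the current frame differs from BOTH previous frames by more
    # than the (scalar or per-pixel) threshold.
    return (np.abs(curr - prev1) > thresh) & (np.abs(curr - prev2) > thresh)


def update_threshold(thresh, frame, background, moving, alpha=0.9, k=5.0):
    # Dynamic threshold: for non-moving pixels, blend toward k * |frame - background|;
    # moving pixels keep their old threshold. Form and constants are assumptions.
    new = alpha * thresh + (1.0 - alpha) * k * np.abs(frame - background)
    return np.where(moving, thresh, new)


f0 = np.zeros((3, 3))
f1 = np.zeros((3, 3))
f2 = np.zeros((3, 3))
f2[1, 1] = 50.0
t = np.full((3, 3), 20.0)
moving = three_frame_motion(f0, f1, f2, t)
t_next = update_threshold(t, f2, f1, moving)
```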

Fig. 5. Processing pipeline of a vision-based approach for fire detection [51].

Kim et al. [52] have proposed a fire detection algorithm that applies a color model to video sequences in a wireless sensor network. The algorithm uses background subtraction for foreground detection and a Gaussian mixture model for background modeling. Since fire has various features, the authors use color information to extract useful data from the gathered video frames. They present a color detection algorithm in RGB color space based on the growth of object areas over consecutive frames. All fire-colored objects that differ from the background and whose bounding boxes change from frame to frame are constructed from the temporal variation of each pixel. The video sequences are acquired by a static camera, which provides an appropriate surveillance vision system based on CCTV cameras.
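
The color-plus-growth check can be sketched in a few lines. The RGB rule (strong red with R >= G > B) is a widely used heuristic, and its threshold of 180 is an assumption, not the exact rule of [52]. The foreground masks would come from a Gaussian-mixture background model, which is not implemented here and is passed in as precomputed booleans.

```python
import numpy as np


def rgb_fire_mask(rgb, r_thresh=180):
    # A widely used RGB fire heuristic: strong red and R >= G > B.
    # The threshold value is an assumption, not taken from [52].
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (r > r_thresh) & (r >= g) & (g > b)


def candidate_areas(frames, fg_masks, r_thresh=180):
    # Area of fire-colored foreground pixels per frame; fg_masks would come
    # from a Gaussian-mixture background model (not implemented here).
    return [int((rgb_fire_mask(f, r_thresh) & m).sum()) for f, m in zip(frames, fg_masks)]


def is_growing(areas, min_growth=0):
    # True if the candidate area grows between every pair of consecutive frames.
    return all(b - a > min_growth for a, b in zip(areas, areas[1:]))


frames = [np.array([[[220, 150, 60], [0, 0, 0]]]),
          np.array([[[220, 150, 60], [230, 160, 70]]])]
masks = [np.ones((1, 2), dtype=bool)] * 2
areas = candidate_areas(frames, masks)
```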

Dimitropoulos et al. [53] have presented a flame detection method that combines features extracted from dynamic texture analysis and spatio-temporal flame modeling. The method combines the identification of specific fire features (e.g., color and motion) with the powerful properties of linear dynamical systems. This lets it model the temporal evolution of pixel intensities and improve the robustness of the detection algorithm. Fire regions within a frame are identified using color analysis and background subtraction with a non-parametric model. The spatio-temporal consistency energy of each candidate fire region is estimated from prior knowledge about possible fires in neighboring blocks of the current and previous frames. Finally, the authors use a two-class SVM classifier to classify the candidate fire regions.
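
The linear-dynamical-system idea can be illustrated with the well-known closed-form (SVD-based) dynamic texture fit of Doretto et al. It models a patch sequence Y as y_t = C x_t and x_{t+1} = A x_t. Whether [53] uses exactly this estimator is an assumption. The spatio-temporal consistency energy and the SVM stage are not shown, and the synthetic rotating sequence below is only a test signal.

```python
import numpy as np


def fit_dynamic_texture(Y, n):
    # Y: (d, T) matrix whose columns are vectorized patches over time.
    # Returns A (n x n state transition), C (d x n observation), X (n x T states).
    Yc = Y - Y.mean(axis=1, keepdims=True)
    U, s, Vt = np.linalg.svd(Yc, full_matrices=False)
    C = U[:, :n]
    X = np.diag(s[:n]) @ Vt[:n]
    # Least-squares transition: X[:, 1:] ~= A @ X[:, :-1].
    A = X[:, 1:] @ np.linalg.pinv(X[:, :-1])
    return A, C, X


# Synthetic test signal: a 2-D rotating state observed through a random 10 x 2 matrix.
theta = 2 * np.pi / 20
R = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
X0 = np.stack([np.linalg.matrix_power(R, t) @ np.array([1.0, 0.0]) for t in range(20)], axis=1)
C0 = np.random.default_rng(0).normal(size=(10, 2))
Y = C0 @ X0
A, C, X = fit_dynamic_texture(Y, n=2)
```

In a descriptor, the learned A and C characterize how a patch's intensities evolve. A classifier can then compare these parameters between flame and non-flame patches.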

Foggia et al. [54] have presented a fire detection system that combines experts based on essential information about the color, shape, and movement of flames. The system detects fires by analyzing videos acquired by surveillance cameras. Its main contributions fall into two parts. First, complementary information on color, shape variation, and motion is combined in a multi-expert system, which noticeably increases the overall efficiency of the system with relatively little design effort. Second, a descriptor based on a bag-of-words strategy is proposed to represent the movement behavior of objects. The authors have tested the sensitivity and specificity of the system on a large database of fire videos captured in real environments and collected from the Web.
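
The combination of experts can be sketched as a weighted majority vote over the color (CE), shape variation (SV), and movement (ME) experts, whose abbreviations appear in the evaluation of [54]. The voting scheme and the weight values below are assumptions for illustration only.

```python
def weighted_vote(decisions, weights):
    # decisions: expert name -> True (fire) / False (no fire)
    # weights:   expert name -> reliability weight (hypothetical values)
    fire = sum(weights[e] for e, d in decisions.items() if d)
    no_fire = sum(weights[e] for e, d in decisions.items() if not d)
    return fire > no_fire


WEIGHTS = {"CE": 0.9, "SV": 0.6, "ME": 0.7}
```

For example, if CE and ME vote "fire" and SV votes "no fire", the weighted score is 1.6 against 0.6 and the frame is labeled as fire. A single confident color expert is outvoted when the other two disagree.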

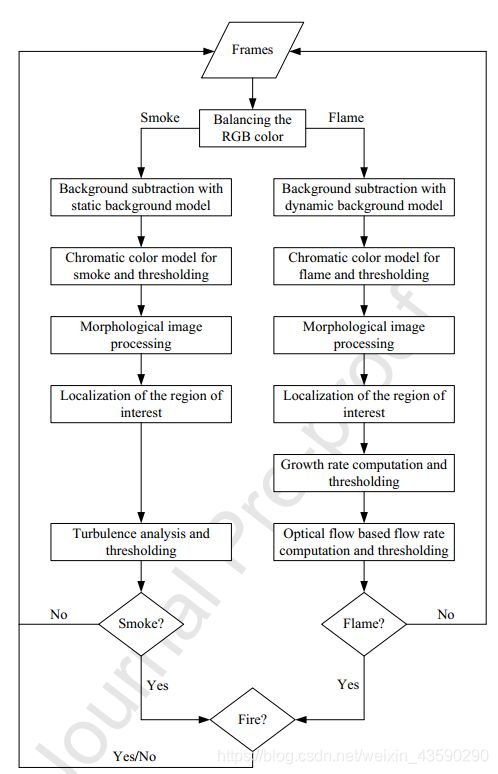

Qureshi et al. [2] have presented a real-time early fire detection system that detects both flame and smoke regions in video images. They apply a parallel image processing technique to detect these regions with high speed, low response time, and a low false alarm rate in open or closed, indoor and outdoor environments. The system uses simple image and video processing techniques to compute motion and color cues and to segment flame and smoke candidates from the background in real time. It balances the color space and separates the flame and smoke detection streams. Candidate regions are detected from both flow and color information, and morphological image processing is applied to them. The candidate regions are then filtered through a turbulent flow-rate analysis and a smoke detection system. The parameters are adjusted manually during a calibration phase; no offline training is used. Color balancing is essential because object segmentation relies on a color model and the system must operate under different illumination conditions. It consists of two phases: illumination estimation and chromatic normalization using a scaling factor. The authors use the "gray-world" algorithm, one of the simplest illuminant estimation techniques. They compute the average image intensity in each of the R, G, and B planes for every frame; the resulting vector of three averages is the "gray-value" of the image. The R, G, and B planes are then scaled independently by multiplication factors that normalize the gray-value to the average intensity of the frame. If a scaled value exceeds the maximum possible intensity, it is set to that maximum. As shown in Fig. 6, after RGB color balancing, frames are fed to independent smoke and flame detection pipelines.
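
The gray-world balancing steps described above translate almost directly into NumPy. This is a minimal sketch assuming 8-bit frames (maximum intensity 255) of shape (H, W, 3); the function name is hypothetical.

```python
import numpy as np


def gray_world_balance(frame, max_value=255.0):
    frame = np.asarray(frame, dtype=float)
    # Illumination estimate: mean intensity of the R, G, and B planes ("gray-value").
    gray = frame.reshape(-1, 3).mean(axis=0)
    # Scale each plane so its mean equals the frame's average intensity.
    scale = gray.mean() / np.maximum(gray, 1e-9)
    # Values above the maximum possible intensity are set to that maximum.
    return np.minimum(frame * scale, max_value)


# Usage: a uniformly tinted frame becomes neutral gray.
uniform = np.tile([100.0, 50.0, 150.0], (2, 2, 1))
balanced = gray_world_balance(uniform)
```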

Fig. 6. Workflow of an early fire detection system using a combined video processing strategy [2].

Li et al. [55] have presented an autonomous flame detection system for videos based on a Dirichlet process Gaussian mixture color model. The system uses a flame detection framework built on the dynamics, color, and flickering features of flames. The Dirichlet process estimates the number of Gaussian components from the training data to model the distribution of flame colors. In addition, motion saliency and filtered temporal series are extracted as features that describe the dynamics and flickering of flames, using a one-dimensional wavelet transform and a probabilistic saliency analysis method. The color model assigns a probability value to every pixel to identify the major part of the flames from color information. The optical flow magnitude of every pixel is then used to obtain a saliency map through a probabilistic approach. The saliency map and the output of the color model are combined to identify candidate flame pixels using two independent, experimentally chosen thresholds.
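
The final combination step can be sketched as follows, assuming the mixture parameters have already been fitted. One way to fit a mixture with a Dirichlet process prior is scikit-learn's `BayesianGaussianMixture` with `weight_concentration_prior_type="dirichlet_process"`, but that is not necessarily what [55] used. The mapping from flow magnitude to saliency and both threshold values are hypothetical.

```python
import numpy as np


def gmm_density(pixels, weights, means, variances):
    # Diagonal-covariance Gaussian mixture density for (N, 3) pixels.
    # weights: (K,), means and variances: (K, 3); assumed already fitted.
    x = np.asarray(pixels, dtype=float)[:, None, :]                   # (N, 1, 3)
    norm = np.prod(2.0 * np.pi * variances, axis=1) ** -0.5            # (K,)
    expo = np.exp(-0.5 * ((x - means) ** 2 / variances).sum(axis=2))  # (N, K)
    return (weights * norm * expo).sum(axis=1)                         # (N,)


def flow_saliency(flow_mag, sigma=1.0):
    # Hypothetical mapping of optical-flow magnitude to a [0, 1) saliency score.
    return 1.0 - np.exp(-np.asarray(flow_mag, dtype=float) ** 2 / (2.0 * sigma ** 2))


def flame_candidates(color_score, saliency, t_color, t_sal):
    # Two independent thresholds, one per cue, combined with a logical AND.
    return (color_score > t_color) & (saliency > t_sal)


weights = np.array([1.0])
means = np.array([[220.0, 120.0, 40.0]])
variances = np.array([[100.0, 100.0, 100.0]])
dens = gmm_density([[220, 120, 40], [50, 50, 200]], weights, means, variances)
```

Note that `gmm_density` returns a density, not a probability, so the color threshold must be chosen on the same scale.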

Fuzzy logic [56, 57] can be used to enhance the robustness and performance of fire detection systems. It generalizes classical logic and set theory and uses an inference process based on human experience. Fuzzy decision making can handle complex and ill-defined conditions through fuzzy rules and an inference engine. Ko et al. [22] have used stereoscopic images and probabilistic fuzzy logic for fire detection and 3D surface reconstruction. The system uses a stereo camera to estimate the distance between the camera and the fire regions and to reconstruct the 3D surface of the fire front. Color models and a background difference model are used to identify candidate fire regions. Since fire regions change constantly across successive frames, Gaussian membership functions are generated to describe the variation in the shape, size, and movement of fires. These functions are then used in a fuzzy decision system for real-time fire verification. After fire regions are segmented from the left and right images, a matching algorithm extracts feature points for distance estimation and 3D surface reconstruction. This work estimates not only the exact location of the fire but also its volume and the distance between the fire region and the camera, for use by a water cannon and an automatic fire suppression system. If a fire is detected, a warning system transmits a predefined command to a water cannon at a remote site to suppress the fire. In addition, the authors use a pre-calibrated stereo camera instead of a normal one to detect candidate fire regions. This makes it possible to track the fire spread in 3D and to determine an accurate distance from the fire to the camera.
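
Two ingredients of this approach can be sketched: Gaussian membership functions combined into a fire belief, and the standard rectified-stereo depth relation Z = f * B / d (focal length f in pixels, baseline B in meters, disparity d in pixels). The product t-norm, the feature names, and the (mean, sigma) parameters are hypothetical assumptions, not the fuzzy rules of [22].

```python
import math


def gaussian_membership(x, mean, sigma):
    # Degree (0..1] to which x matches a fire-typical value.
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)


def fire_belief(features, params):
    # Combine memberships with the product t-norm (an assumption; [22] may differ).
    belief = 1.0
    for name, value in features.items():
        mean, sigma = params[name]
        belief *= gaussian_membership(value, mean, sigma)
    return belief


def stereo_depth(focal_px, baseline_m, disparity_px):
    # Depth of a matched feature point in a rectified stereo pair: Z = f * B / d.
    return focal_px * baseline_m / disparity_px


# Hypothetical (mean, sigma) of frame-to-frame variation for each feature.
PARAMS = {"shape_var": (0.4, 0.15), "size_var": (0.3, 0.1), "motion_var": (0.5, 0.2)}
```

For example, with f = 800 px, B = 0.12 m, and a disparity of 16 px, the estimated distance to the fire point is 6 m.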

4. Discussions and evaluation results

This section evaluates the functionality and efficiency of the intelligent and vision-based fire detection systems described in the previous section. The evaluation is based on the simulation and experimental results reported in these works, using the most important parameters, such as accuracy, precision, detection rate, false alarm rate, and processing speed.

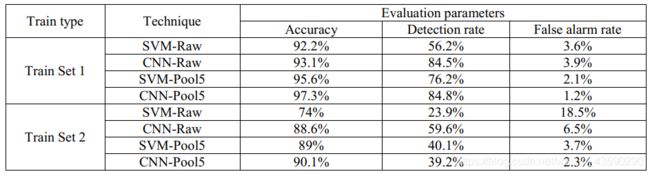

Table 1 presents evaluation results of different patch classifiers for forest fire detection using deep convolutional neural networks [41]. The results are reported for three parameters (accuracy, detection rate, and false alarm rate) under two training sets and four configurations: SVM-Raw, CNN-Raw, SVM-Pool5, and CNN-Pool5. They show that CNN-Pool5 with Train Set 1 is more accurate than the other configurations, and that it also increases the detection rate and reduces the false alarm rate. Therefore, CNN-Pool5 with Train Set 1 performs well under various evaluation scenarios.
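
The rates used throughout this section can be computed from confusion-matrix counts. This sketch takes "detection rate" to be the true positive rate (recall) and "false alarm rate" to be the false positive rate. The surveyed papers may define these slightly differently, so the mapping is an assumption. The counts in the usage line are hypothetical, not taken from Table 1.

```python
def detection_metrics(tp, fp, tn, fn):
    # Rates from confusion-matrix counts; "detection rate" = TPR (recall) and
    # "false alarm rate" = FPR are assumed definitions.
    def ratio(num, den):
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "detection_rate": ratio(tp, tp + fn),      # = recall = TPR
        "false_alarm_rate": ratio(fp, fp + tn),    # = FPR
        "precision": ratio(tp, tp + fp),
    }


m = detection_metrics(tp=90, fp=5, tn=95, fn=10)
```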

Table 1. Evaluation results of the different patch classifiers using SVM-Raw, CNN-Raw, SVM-Pool5, and CNN-Pool5 [41]

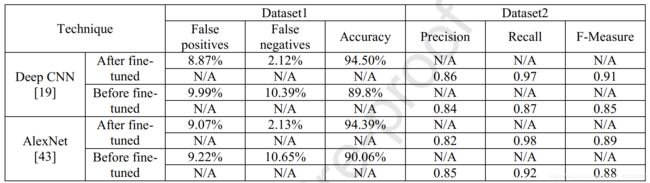

Table 2 presents evaluation results of the fire detection system using a deep CNN [19] compared with the AlexNet architecture [43]. Dataset1 is evaluated in terms of false positives, false negatives, and accuracy, while Dataset2 is evaluated in terms of precision, recall, and F-measure. The results show that fine-tuning improves both detection systems under different scenarios. The deep CNN achieves favorable results in most cases, especially when fine-tuned. In summary, compared with AlexNet on Dataset1, the deep CNN reduces false positives and false negatives and improves accuracy in most cases. On Dataset2, the deep CNN improves precision and F-measure in most results, although its recall decreases.

Table 2. Comparison results of the fire detection system using a deep CNN [19]

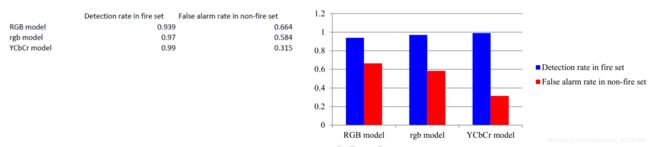

Fig. 7 compares the performance of the fire detection system that uses video sequences and a generic color model [13] with other algorithms, namely the RGB model [7] and the rgb model [49]. All methods are compared in terms of detection rate on the fire set and false alarm rate on the non-fire set. The YCbCr model outperforms the RGB and rgb models on both evaluation parameters. The reason is that YCbCr color space efficiently separates luminance from chrominance information. This increases the detection rate on the fire set and reduces the false alarm rate on the non-fire set. As mentioned before, chrominance carries color information without the effect of luminance. Therefore, the chrominance plane, with its chrominance-based rules and color model, is very effective for identifying flame behavior.

Fig. 7. Performance evaluation of the fire detection system using a video-based and generic color model [13].

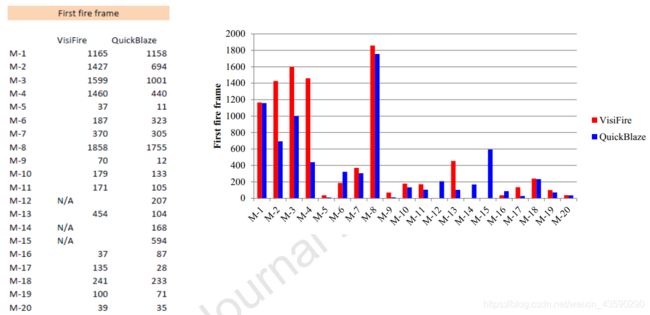

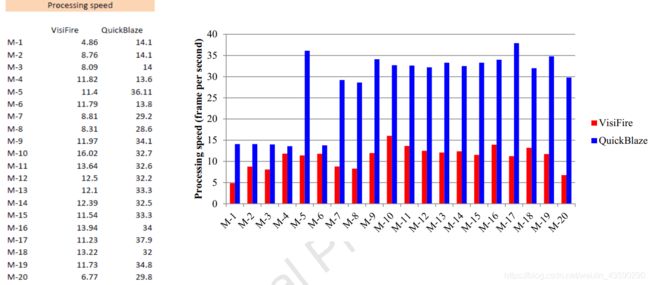

Fig. 8 shows experimental results of the fire detection system based on a combined video processing strategy, QuickBlaze [2], compared with another fire detection platform, VisiFire [42]. The comparison uses videos containing fire and/or smoke and considers the first fire frame and processing speed. Fig. 8(a) shows that QuickBlaze flags fire at an earlier frame than VisiFire, i.e., its response time is shorter. Fig. 8(b) illustrates the processing speed of both systems on various video samples. Although the frame rate varies across videos with the number of candidate fire and smoke regions, QuickBlaze runs faster than VisiFire on all samples. The experimental results show a very noticeable difference between the two systems, especially in processing speed.
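
The two quantities in Fig. 8 can be measured roughly as follows. This is a minimal sketch: the first fire frame is the index of the first frame a detector flags, and processing speed is frames processed per second of wall-clock time. How [2] instrumented its measurements is not stated here, so these definitions and the helper names are assumptions.

```python
import time


def first_fire_frame(detections):
    # Index of the first frame flagged as fire, or None if none is flagged.
    return next((i for i, fired in enumerate(detections) if fired), None)


def measure_fps(process, frames):
    # Wall-clock processing speed in frames per second.
    frames = list(frames)
    start = time.perf_counter()
    for frame in frames:
        process(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")
```

A lower first-fire-frame index on the same video means an earlier alarm, which is how a shorter response time shows up in Fig. 8(a).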

Fig. 8. Experimental results of the early fire detection system using a combined video processing strategy [2]. (a) first fire frame; (b) processing speed.

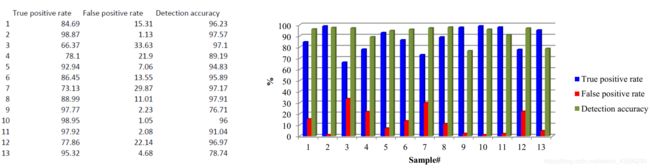

Fig. 9 presents experimental results of the fire flame detection system that uses K-medoids clustering and particle swarm optimization [21] on 13 samples, in terms of true positive rate, false positive rate, and detection accuracy. The system achieves a high true positive rate on all samples except the 3rd and 7th, which suggests that it can be robust and efficient in real applications. It also makes few detection errors, obtaining low false positive rates on most samples. Because true positive rates are high and false positive rates are low, the system achieves high detection accuracy on most video samples.

Fig. 9. Evaluation results of the fire flame detection system using K-medoids clustering and swarm intelligence [21].

Table 3 presents evaluation results of saliency detection and deep learning-based wildfire identification [24] using image frames captured by a UAV. It compares results obtained with the original image dataset and with a saliency-based augmented dataset. For all model architectures, validation accuracy with the saliency-based augmented dataset is higher than with the original image dataset. The reason is that the coefficients of the deep architectures are optimized with adequate training images, whereas models trained on the original image database suffer from lower-quality training data. Moreover, each architecture is trained on many more samples in the augmented database, so its coefficients and weights are better optimized after training.

Table 3. Effects of various model architectures on efficiency of the saliency detection and deep learning-based wildfire identification [24]

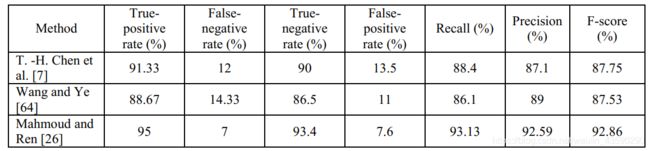

Table 4 presents evaluation results of the forest fire detection system based on rule-based image processing and temporal variation [26], compared with other image-based fire detection methods [7, 64]. Compared with the other systems, this system has higher true-positive rate, true-negative rate, recall, precision, and F-score, and lower false-negative and false-positive rates. This indicates that the system is accurate and can be applied automatically in forest fire detection scenarios. The F-score is calculated as follows:

$$
F = \frac{2 \cdot Pre \cdot Rec}{Pre + Rec} \tag{6}
$$

where Pre indicates precision and Rec indicates recall.
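
As an illustration with hypothetical values Pre = 0.90 and Rec = 0.80 (not taken from Table 4), the F-score formula gives:

$$
F = \frac{2 \cdot 0.90 \cdot 0.80}{0.90 + 0.80} = \frac{1.44}{1.70} \approx 0.847
$$

Because F is the harmonic mean of precision and recall, it lies closer to the smaller of the two. A high F-score therefore requires both high precision and high recall.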

Table 4. Comparison results of the forest fire detection by rule-based image processing and temporal variation [26]

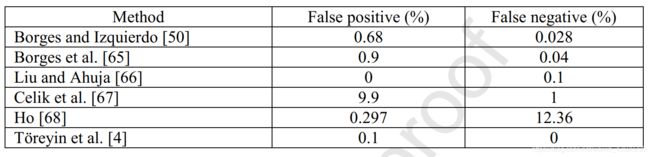

Table 5 presents error rates of the color-based fire detection system for videos [50], compared with some other existing related systems. Liu and Ahuja [66] and Töreyin et al. [4] have the lowest false positive rates among the systems. However, these systems assume a stationary camera or use frequency transforms and motion tracking, so they need much more computational processing time and are not suitable for video retrieval. Celik et al. [67] achieve a good false negative rate, but their false positive rate is the highest of all the methods. Ho [68] has a low false positive rate at the expense of the highest false negative rate.

Table 5. Comparison results of the color-based detection system for fire occurrences in videos [50]

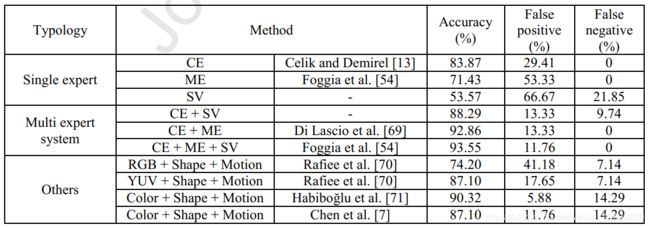

Table 6 compares the fire detection system based on a combination of experts on color, shape, and flame movement [54] with state-of-the-art methods in terms of accuracy, false positives, and false negatives. The evaluation covers various topologies, including single-expert and multi-expert systems, among others. It also considers several techniques, including Expert Color Evaluation (CE), Expert Movement Evaluation (ME), Expert Shape Variation (SV), RGB, YUV, shape, motion, and color. The results indicate that this system achieves the highest accuracy and an acceptable false positive rate compared with the other methods. Moreover, like the methods of Celik and Demirel [13] and Di Lascio et al. [69], it produces no false negatives.

Table 6. Comparison results of the fire detection system by a combination of experts, color, shape, and flame movements, with state-of-the-art methods [54]

In summary, the simulation and experimental results show how fire detection systems that use intelligent and vision-based techniques perform. For detection systems based on CNNs and deep CNNs, accuracy and detection rates exceed roughly 90% and false alarm rates stay below roughly 10% in most results. For systems based on color models, detection rates exceed roughly 95%, false alarm rates stay below roughly 30%, and processing speed is considerably improved. For detection systems that use particle swarm optimization, true positive rates exceed roughly 65%, false positive rates stay below roughly 30%, and detection accuracy exceeds roughly 75%. Therefore, fire detection systems that use CNNs and deep CNNs achieve the highest performance in most evaluation results under different scenarios.

5. Benchmark datasets

Various benchmark datasets for evaluating the performance of fire detection systems are available from different online resources. This section discusses the benchmark datasets used by the intelligent and vision-based fire detection systems reviewed in Section 3.

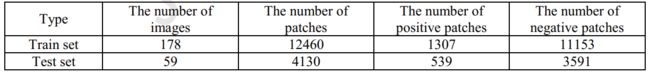

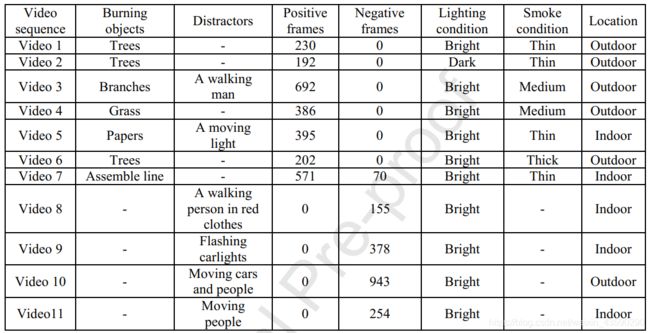

Zhang et al. [41] mainly acquired video data from an online resource [42] to build their deep-learning based detection system for forest fires. As described before, this dataset contains 25 videos captured in forest environments: 21 positive (fire) sequences and 4 negative (non-fire) sequences. Images are extracted from the videos at a sample rate of 5 (i.e., one sample image per five frames) and then resized to the canonical size of 240 × 320 pixels. Fire patches are annotated with 32 × 32 bounding boxes to evaluate fire patch localization. Table 7 gives statistics of the training images, test images, and the number of annotated patches. The authors used a small dataset because the time available for manual annotation was limited. They learned a fire patch classifier (i.e., a binary classification model) from the annotated positive and negative samples. The system (i) learns the binary patch classifier from scratch on the annotated patches, (ii) learns a full-image fire classifier, and (iii) applies the fine-grained patch classifier only if the image is classified as containing fire. A linear SVM [60] is used as the linear classifier, and the CNN-based Caffe framework [61] is used for the non-linear classifier. Because the annotated dataset is small, the authors adopted the CIFAR-10 network [62].
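The frame sampling, 32 × 32 patch tiling, and two-stage (full image first, then patches) decision described above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes frames are already decoded and resized to 240 × 320, assumes non-overlapping patches with leftover pixels dropped, and uses hypothetical `image_clf` / `patch_clf` callables in place of the trained SVM and CNN classifiers.

```python
import numpy as np

FRAME_STEP = 5  # one sample image per every five frames
PATCH = 32      # side length of the square annotation patches

def sample_frames(frames, step=FRAME_STEP):
    """Keep frames 0, step, 2*step, ... from a sequence of decoded frames."""
    return frames[::step]

def extract_patches(image, patch=PATCH):
    """Tile an H x W (x C) image into non-overlapping patch x patch blocks.

    Assumption: leftover rows/columns that cannot fill a whole patch are
    dropped, so a 240 x 320 image yields 7 x 10 = 70 patches.
    """
    h, w = image.shape[:2]
    patches, coords = [], []
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            patches.append(image[y:y + patch, x:x + patch])
            coords.append((y, x))  # top-left corner of the patch
    return patches, coords

def cascade_detect(image, image_clf, patch_clf):
    """Two-stage decision: run the full-image classifier first and the
    fine-grained patch classifier only on images flagged as fire.

    image_clf and patch_clf are hypothetical callables returning bool.
    """
    if not image_clf(image):
        return []  # no fire in the whole image: skip patch classification
    patches, coords = extract_patches(image)
    return [c for p, c in zip(patches, coords) if patch_clf(p)]

if __name__ == "__main__":
    frame = np.zeros((240, 320, 3), dtype=np.uint8)
    frame[32:64, 64:96] = 255  # bright square standing in for a flame region
    print(cascade_detect(frame, lambda im: im.max() > 200,
                         lambda p: p.mean() > 200))  # [(32, 64)]
```

The cascade keeps the per-patch classifier off images that the cheaper full-image classifier already rejects, which is the point of step (iii).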

Table 7. Statistics of the dataset used in the deep-learning based detection system for forest fires [41]

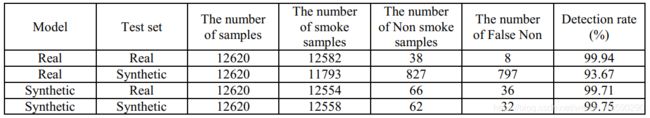

In the wildland forest fire smoke detection system presented by Zhang et al. [20], smoke is extracted from 2800 diverse smoke frames. The extracted smoke is freely deformed in shape and inserted into 12620 forest background images at randomly selected positions. Each synthetic forest smoke image contains one smoke plume, and the location of the smoke is determined automatically during insertion. Forest smoke detection models are trained on the RF dataset (Real Model) and on the SF dataset (Synthetic Model), and Table 8 gives the results of cross-testing between these datasets. Both models achieve very high detection rates on their own training datasets. Although the samples in the SF dataset are not visually realistic, the detection performance of the Synthetic Model is better than that of the Real Model; the Real-Synthetic classification contains 797 False Non.
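A key property of this synthesis is that the bounding box of each inserted plume is known automatically, so no manual labeling is needed. The Python sketch below illustrates that idea under stated assumptions: the smoke is given as an RGB cut-out plus an alpha mask in [0, 1], the "deformation" is a hypothetical random nearest-neighbour rescale (the deformation actually used in [20] is not specified here), and the plume is alpha-blended at a random position.

```python
import numpy as np

def scale_nearest(arr, fy, fx):
    """Nearest-neighbour rescale of a 2-D or 3-D array (stand-in deformation)."""
    h, w = arr.shape[:2]
    nh, nw = max(1, int(round(h * fy))), max(1, int(round(w * fx)))
    rows = np.arange(nh) * h // nh
    cols = np.arange(nw) * w // nw
    return arr[rows][:, cols]

def insert_smoke(background, smoke_rgb, smoke_alpha, rng):
    """Deform a smoke plume, alpha-blend it at a random position, and return
    the synthetic image together with its automatically known bounding box."""
    fy, fx = rng.uniform(0.7, 1.3, size=2)  # hypothetical deformation range
    rgb = scale_nearest(smoke_rgb, fy, fx).astype(np.float64)
    alpha = scale_nearest(smoke_alpha, fy, fx).astype(np.float64)[..., None]
    H, W = background.shape[:2]
    h, w = min(alpha.shape[0], H), min(alpha.shape[1], W)  # clip oversized plumes
    rgb, alpha = rgb[:h, :w], alpha[:h, :w]
    y = rng.integers(0, H - h + 1)  # random top-left corner inside the image
    x = rng.integers(0, W - w + 1)
    out = background.astype(np.float64).copy()
    region = out[y:y + h, x:x + w]
    out[y:y + h, x:x + w] = alpha * rgb + (1.0 - alpha) * region
    bbox = (int(x), int(y), int(x + w), int(y + h))  # (x1, y1, x2, y2)
    return out.astype(background.dtype), bbox
```

Usage: `insert_smoke(bg, smoke, alpha, np.random.default_rng(0))` returns one synthetic training image and its ground-truth box; repeating this over many backgrounds gives a labeled synthetic set in the spirit of the SF dataset.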

Table 8. Cross-test results between the RF dataset and the SF dataset in wildland forest fire smoke detection using Faster R-CNN [20]

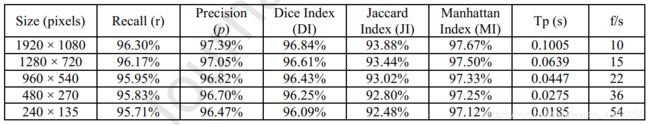

In the forest fire detection approach for unmanned aerial systems [45], the authors used a total of 50 test images from the European Fire Database [45] and the Forestry Images Organization Database [63] to evaluate the system. All images are in JPEG format, were captured from various angles and aerial perspectives, and have different sizes up to a maximum resolution of 1920 × 1080 pixels. To evaluate processing time and performance, the images are first resized to 1920 × 1080 pixels and then to 1280 × 720, 960 × 540, 480 × 270, and 240 × 135 pixels using the Matlab function “imresize”. Table 9 gives the average results over all test images for the mentioned metrics at the original and reduced sizes; the last columns give the average processing time for each size and the corresponding frames per second. The DI lies in the range [0, 1], where the value ‘ ’ indicates the most accurate detection. This index is converted to a percentage so that it can be compared easily with the other metrics.
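The multi-resolution timing protocol described above can be sketched in Python. This is an illustration, not the authors' Matlab code: the listed sizes correspond to scale factors 1, 2/3, 1/2, 1/4, and 1/8 of 1920 × 1080; `detector` is a hypothetical callable; and nearest-neighbour resizing stands in for Matlab's `imresize`, whose default method is bicubic.

```python
import time
import numpy as np

BASE = (1080, 1920)               # (height, width) of the largest test size
SCALES = [1.0, 2 / 3, 1 / 2, 1 / 4, 1 / 8]

def target_sizes(base=BASE, scales=SCALES):
    """(height, width) pairs for each evaluated resolution."""
    h, w = base
    return [(round(h * s), round(w * s)) for s in scales]

def resize_nearest(img, size):
    """Nearest-neighbour resize (simplified stand-in for imresize)."""
    h, w = img.shape[:2]
    nh, nw = size
    rows = np.arange(nh) * h // nh
    cols = np.arange(nw) * w // nw
    return img[rows][:, cols]

def benchmark(images, detector, size):
    """Average per-image detection time in seconds and the equivalent FPS.
    Only the detector call is timed, not the resizing."""
    times = []
    for img in images:
        small = resize_nearest(img, size)
        t0 = time.perf_counter()
        detector(small)
        times.append(time.perf_counter() - t0)
    avg = sum(times) / len(times)
    return avg, (1.0 / avg if avg > 0 else float("inf"))

def di_to_percent(di):
    """Express an index in [0, 1] as a percentage."""
    if not 0.0 <= di <= 1.0:
        raise ValueError("DI must lie in [0, 1]")
    return 100.0 * di
```

Running `benchmark` once per entry of `target_sizes()` gives one row per resolution, which is how a processing-time and FPS column per size can be produced.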

Table 9. Average metrics over 50 resized images in the forest fire detection approach for unmanned aerial systems [45]

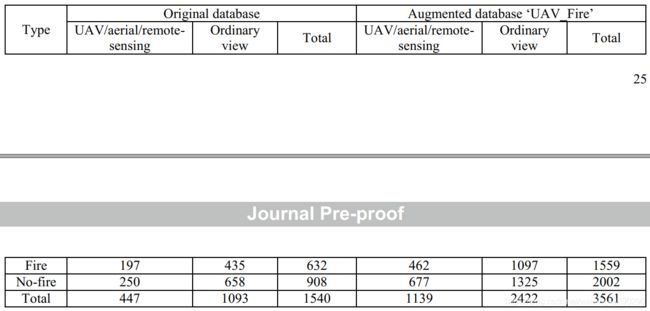

Zhao et al. [24] tested their saliency detection and deep learning-based wildfire identification on 1105 images from the original image dataset that contain both flame and smoke features. The images were selected such that they are small or the flame and smoke features cover most of the image area. After data augmentation, the newly created image dataset contains over 3500 images. Table 10 gives details of the original and augmented image databases.
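Data augmentation of this kind multiplies each source image into several variants. The Python sketch below shows the mechanism with an assumed set of geometric transforms (horizontal flip, vertical flip, 90-degree rotation); the exact transforms and counts used in [24] are not given in this section, so the numbers it produces are illustrative only.

```python
import numpy as np

def augment(image):
    """Return the original image plus three geometric variants.
    The transform set is a hypothetical choice for illustration."""
    return [
        image,
        np.fliplr(image),  # mirror left-right
        np.flipud(image),  # mirror top-bottom
        np.rot90(image),   # rotate 90 degrees counter-clockwise
    ]

def augment_dataset(images):
    """Expand a list of images with every variant produced by augment()."""
    out = []
    for img in images:
        out.extend(augment(img))
    return out
```

Geometric transforms are a conservative choice here because they leave pixel colors untouched, which matters when flame and smoke color is itself a detection cue; note that `np.rot90` swaps height and width for non-square images.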

Table 10. Details of the original and augmented image databases used in the saliency detection and deep learning-based wildfire identification [24]

Muhammad et al. [19] used 31 video files to evaluate their fire detection system based on deep CNN. Video resolutions are 320 × 240, 352 × 288, 400 × 256, 480 × 272, 720 × 480, 720 × 576, or 800 × 600 pixels; frame rates are 7, 9, 10, 15, 25, or 29 frames per second; and each video's modality is labeled as normal or fire.

In the vision-based approach for fire detection [51], the authors used 217 images to evaluate their approach in several categories, including urban, rural, indoor, and night scenes. They also used 12 video files to calculate the success rate for fire regions, with the number of analyzed frames per video ranging from 265 to 3904.

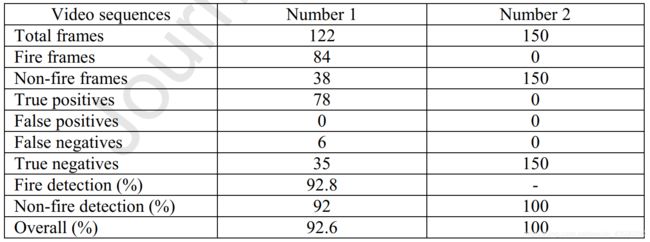

Kim et al. [52] used a video dataset to evaluate the efficiency of their color model-based fire detection algorithm on a wireless sensor network in terms of true positives, false positives, false negatives, true negatives, fire detection rate, non-fire detection rate, and overall performance. Table 11 gives more details about the datasets and the analysis results. On the first dataset, the fire detection rate is higher than the non-fire detection rate. Moreover, the algorithm's overall performance is better on the second dataset than on the first.
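The rates listed above are derived from the four confusion counts. A minimal Python sketch, assuming the common definitions (fire detection rate = TP / (TP + FN), non-fire detection rate = TN / (TN + FP), overall performance = (TP + TN) / all samples); [52] may define them slightly differently.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    tp: int  # fire samples detected as fire
    fp: int  # non-fire samples detected as fire
    fn: int  # fire samples that were missed
    tn: int  # non-fire samples correctly rejected

def rates(c: Confusion):
    """Return (fire detection rate, non-fire detection rate, overall)
    under the assumed definitions; empty denominators give 0.0."""
    fire_rate = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    nonfire_rate = c.tn / (c.tn + c.fp) if (c.tn + c.fp) else 0.0
    total = c.tp + c.fp + c.fn + c.tn
    overall = (c.tp + c.tn) / total if total else 0.0
    return fire_rate, nonfire_rate, overall
```

For illustrative (made-up) counts `Confusion(tp=90, fp=20, fn=10, tn=80)`, this gives a fire detection rate of 0.9, a non-fire detection rate of 0.8, and overall performance of 0.85, the same pattern as "fire rate higher than non-fire rate" reported for the first dataset.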

Table 11. Datasets and analysis results of the color model-based fire detection algorithm on a wireless sensor network [52]

Qureshi et al. [2] collected a total of 30 videos to test their early fire detection system, which uses a combined video processing strategy. In the dataset, 20 videos contain flame and/or smoke, and 10 videos are distractors containing no flame or smoke. The videos cover various categories such as an outdoor plain box, a plant pot, and a warehouse fire. Video durations range from 55 to 5027 frames, and frame rates are drawn from the set {10, 15, 24, 25, 30} frames per second.

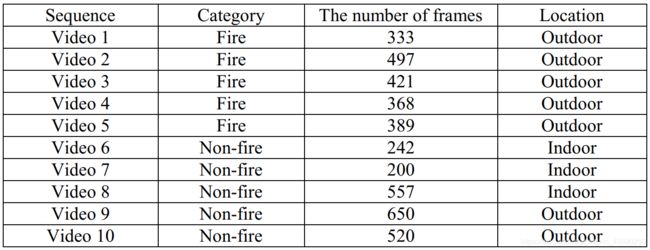

Li et al. [55] used various test videos to analyze their autonomous flame detection system; Table 12 gives details and specifications of some of these videos. The burning objects include various materials such as trees, branches, and paper. The test videos contain a total of 4468 frames, all different from the training frames. Lighting conditions are bright or dark, smoke conditions are thin, medium, or thick, and the videos were captured indoors or outdoors.

Table 12. Details of the test videos in the autonomous flame detection system using a Dirichlet process Gaussian mixture color model [55]

Table 13 lists the fire and non-fire test videos that Ko et al. [22] used to evaluate their fire detection and 3D surface reconstruction system based on stereoscopic pictures and probabilistic fuzzy logic. The authors captured 13 types of stereoscopic videos with different distances between the camera and the fire. The frame rate of the videos varies from 15 to 30 Hz, and the input images are 320 × 240 pixels. Videos 1-5 were captured from a distance of 5 m, and videos 6-10 from a distance of 10 m.
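Capturing the same fires at 5 m and 10 m matters because stereo depth is recovered from disparity. For a rectified stereo pair under the standard pinhole model, depth is Z = f · B / d (focal length f in pixels, baseline B in meters, disparity d in pixels). The Python sketch below uses hypothetical camera parameters, not those of Ko et al. [22], to show that doubling the distance halves the disparity, which coarsens depth resolution for the 10 m videos.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def disparity_at_depth(depth_m, focal_px, baseline_m):
    """Inverse relation d = f * B / Z: expected disparity for a given depth."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m

if __name__ == "__main__":
    f, b = 800.0, 0.1  # hypothetical focal length (px) and baseline (m)
    for z in (5.0, 10.0):
        print(z, disparity_at_depth(z, f, b))
```

With these assumed values, a fire at 5 m produces about 16 px of disparity and one at 10 m about 8 px, so a one-pixel matching error corresponds to a larger depth error at the longer distance.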

Table 13. Specifications of the fire and non-fire test videos for the fire detection system and 3D surface reconstruction using stereoscopic pictures and probabilistic fuzzy logic [22]

6. Conclusions

A fire detection system is a surveillance and cyber-physical system that fire centers and firefighters can use to detect and locate fire occurrences in various environments. This paper presented an overview and the main features of intelligent and vision-based fire detection systems. It first described the types of environments that detection systems should consider, discussing the fundamental properties of buildings, forests, and mines to give a clear view of the problems and challenges in these environments. It then discussed two categories of intelligent and vision-based fire detection systems in more detail: intelligent detection systems for forest fires and intelligent fire detection systems for all environments. The paper discussed the effects of various intelligent techniques, such as convolutional neural networks (CNN), color models, fuzzy logic, and particle swarm optimization, on fire detection in various environments. These techniques can considerably enhance the performance of fire detection systems in most real scenarios. The general characteristics and workflows of CNN-based detection systems that use Faster Region-based CNN and deep CNN were discussed in detail. Researchers have already presented a considerable number of color model-based detection systems; therefore, the main elements and processing pipelines of fire detection systems that combine video processing and rule-based image processing were explained in more detail. Furthermore, the paper discussed the effects of fuzzy decision making and particle swarm optimization on the detection process of fire detection frameworks.

The paper analyzed the performance of most of the reviewed fire detection systems under different evaluation scenarios. Simulation and experimental evaluations assessed the efficiency of the intelligent and vision-based detection systems using important parameters such as detection rate, accuracy, precision, recall, true positive rate, false positive rate, and processing speed. They demonstrated that these systems improve the detection process considerably compared with systems that do not use any intelligent and vision-based mechanisms. Among them, systems applying CNN and deep CNN techniques obtained better results than the other fire detection systems. Finally, benchmark datasets of intelligent fire detection systems were discussed in terms of parameters such as the number of samples, location, and material type.